Theory of Computing

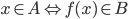

Matthew Barnes, Mathias Ritter

Books 4

Automata theory: Regular languages 5

Deterministic Finite Automata 5

Alphabets and strings 5

Languages 6

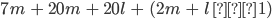

Deterministic finite automata (DFAs) 6

Product construction 9

Regular languages 10

Nondeterminism, subset construction and ϵ-moves 15

Nondeterminism 15

Subset construction 18

ϵ-moves 21

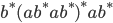

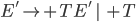

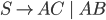

Regular expressions and Kleene’s theorem 23

Defining regular expressions 23

Atomic patterns 23

Compound patterns 24

Examples 24

Kleene’s theorem 24

Reg exp to εNFA 25

εNFA to reg exp 26

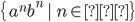

Limitations of regular languages 29

Pumping (🎃) Lemma for regular languages -

contrapositive form 30

Automata theory: Context free languages 34

Pushdown Automata (PDA) 34

Transition relation 34

Configuration 35

Acceptance 35

By final state 36

By empty stack 36

Example PDA accepting palindromes 36

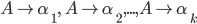

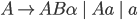

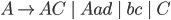

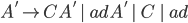

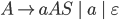

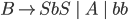

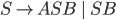

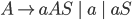

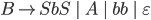

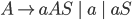

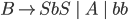

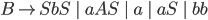

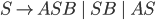

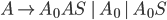

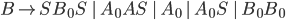

Context free grammars 38

Chomsky Normal Form 41

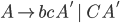

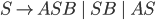

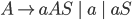

Greibach Normal Form 42

Removing epsilon and unit productions 42

Removing left-recursive productions 42

Converting between CFG, CNF and GNF 44

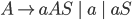

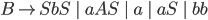

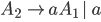

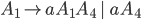

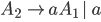

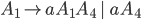

Converting a CFG to GNF 44

Converting a CFG to GNF 46

PDA and CFG conversions 49

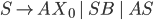

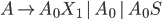

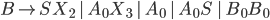

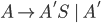

CFG to PDA 49

PDA to CFG 51

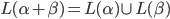

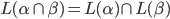

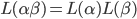

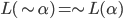

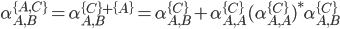

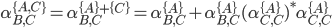

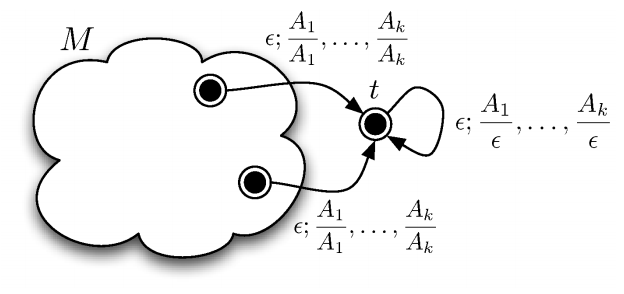

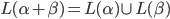

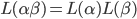

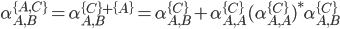

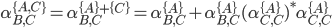

Proving closure 58

Union 58

Concatenation 58

Kleene star 59

Intersection with regular languages 59

Limits of context-free languages 60

Pumping (🎃) Lemma for context-free languages -

contrapositive form 63

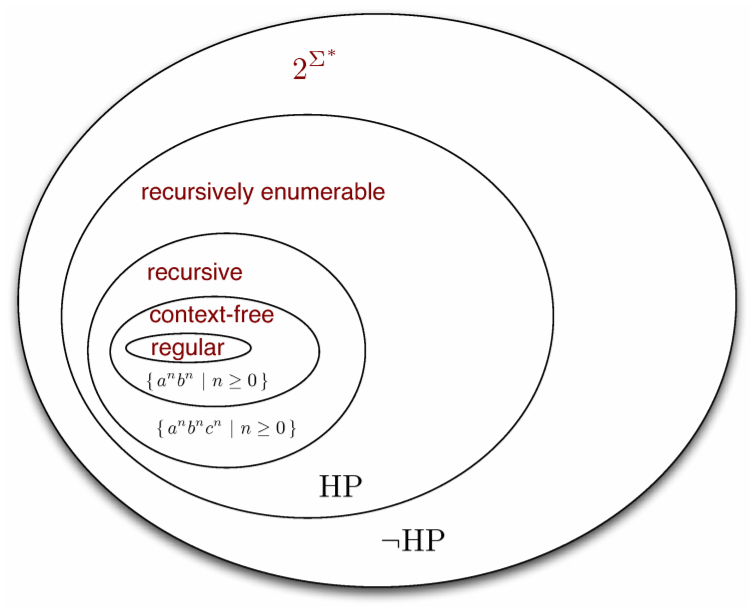

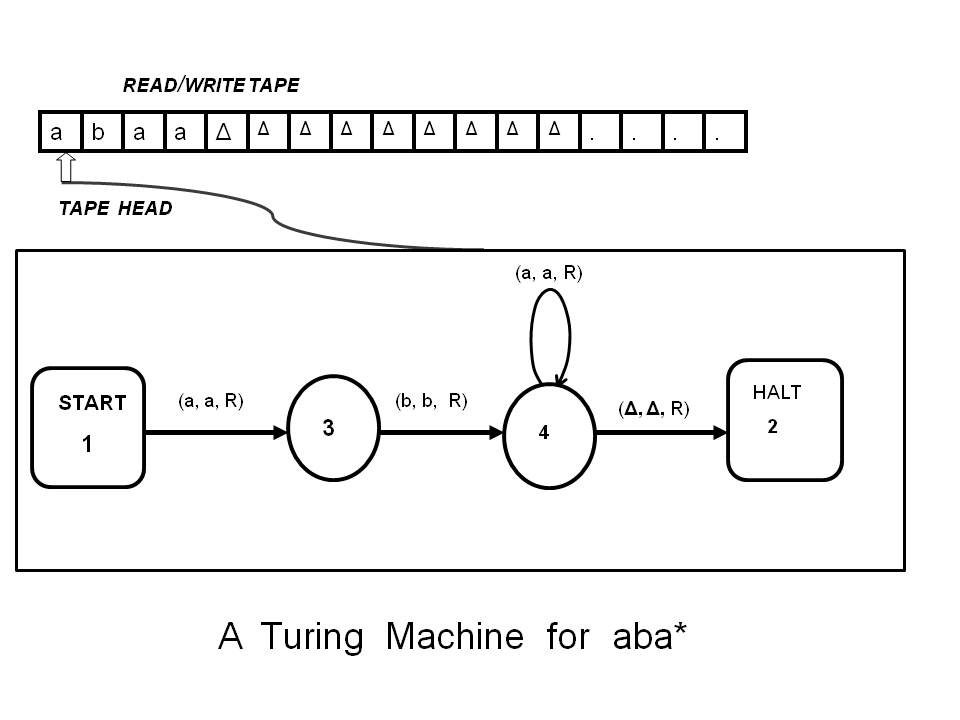

Computability theory 67

Turing machines 67

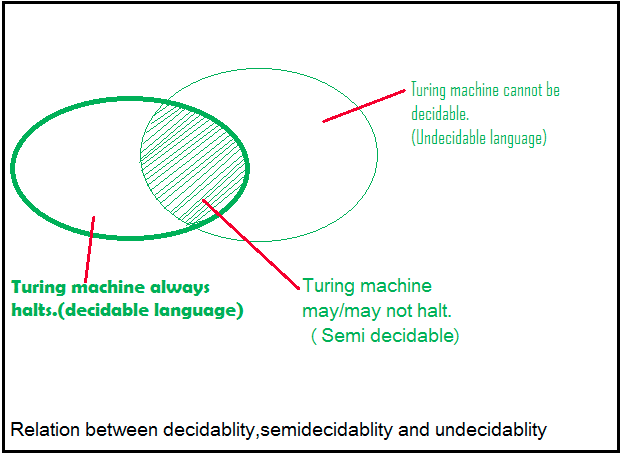

Decidability 68

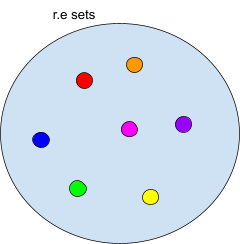

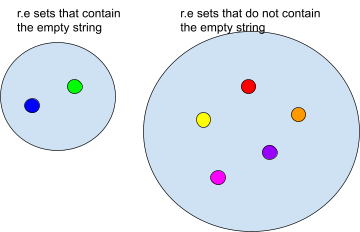

Recursive and R.E languages 68

Proof: Recursive Sets Closed under Complement 68

(Semi) Decidable properties 70

Universal Turing machines 71

Multiple tapes 71

Simulating Turing machines 71

Encoding Turing machines 71

Constructing a UTM 72

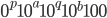

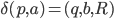

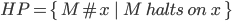

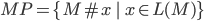

Halting problem 72

Proof by contradiction 72

Proof by diagonalisation 73

Decidable / Undecidable problems 74

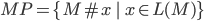

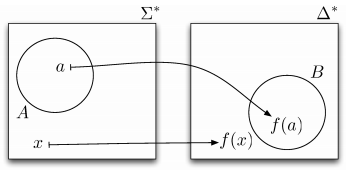

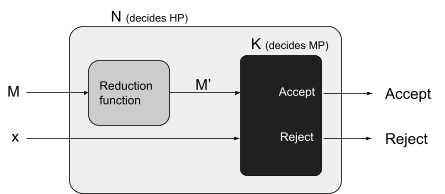

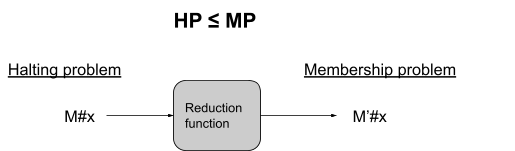

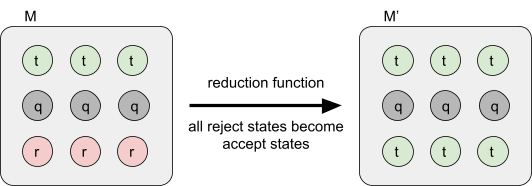

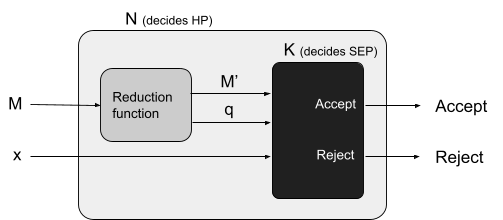

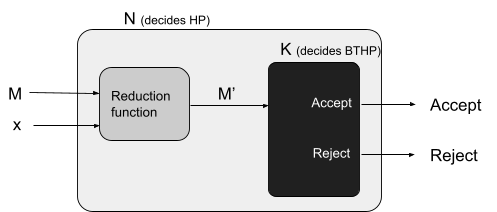

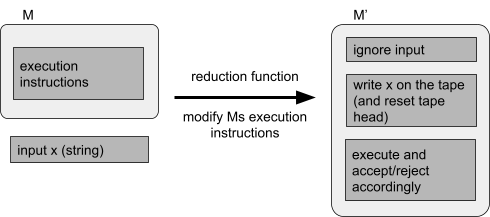

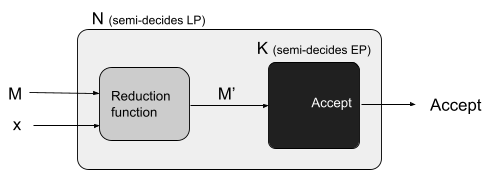

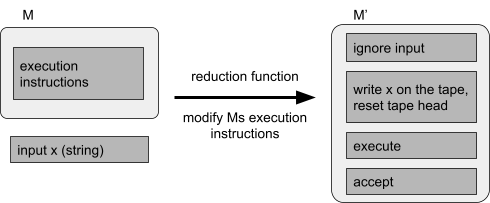

Reductions 77

Properties of reductions 82

Rice’s theorem 83

Complexity theory 85

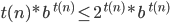

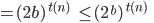

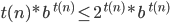

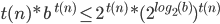

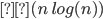

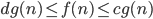

Big-O and Big-ϴ 86

Big-ϴ 86

Big-O 86

The class P 87

Feasible and infeasible problems 87

Decision problems 87

Definition 88

The PATH problem 89

The HAMPATH problem 90

Regular and context-free languages 90

The class NP 91

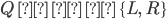

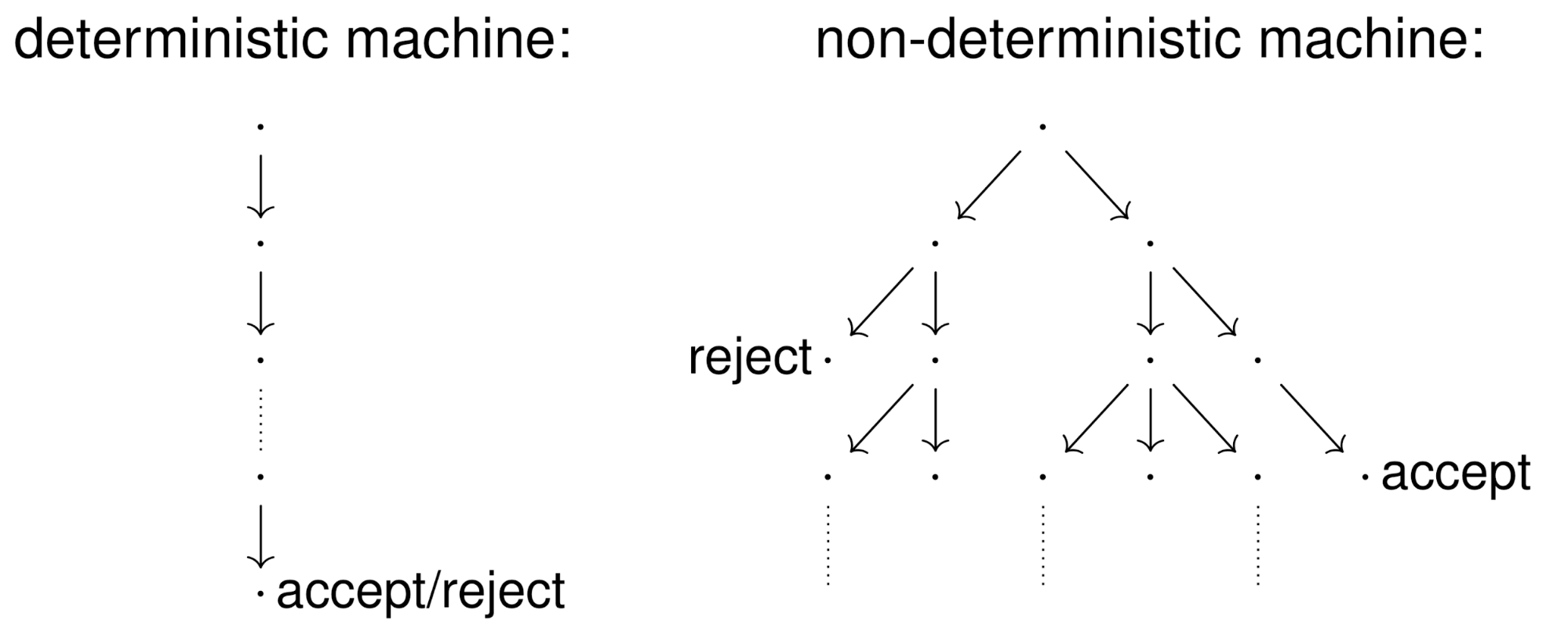

Non-deterministic Turing machine 91

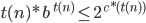

Time complexity of Turing machines 92

Definition 95

The SAT problem 95

The HAMPATH problem 96

The TSP(D) problem 97

The 3COL problem 97

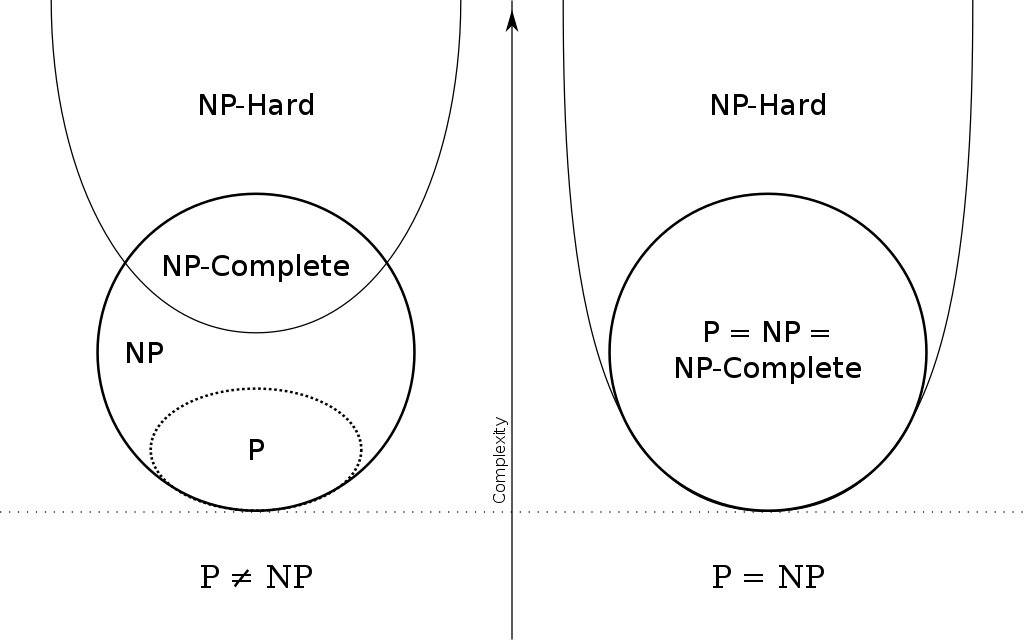

NP = P? 97

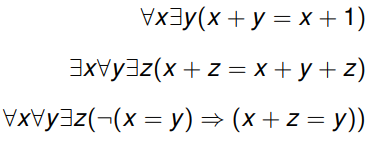

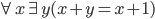

Presburger arithmetic 98

NP-Completeness 99

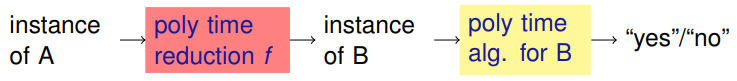

Polynomial time reduction 99

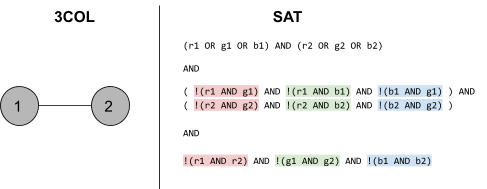

3COL to SAT 100

NP-hard 101

The Cook-Levin theorem 101

What is NP-completeness 101

Space complexity 101

Space complexity 101

PSPACE 103

NPSPACE 103

Savitch’s Theorem 104

EXPTIME 104

Relationships between complexity classes 104

PSPACE-hard 104

PSPACE-completeness 105

TL;DR 105

Automata theory: Regular languages 105

Automata theory: Context free languages 106

Computability theory 107

Complexity theory 108

Books

-

There are two main books for this module. I’ve added

PDF links to both of them.

Automata theory: Regular languages

-

Automata relates to machines with finite memory.

-

Unlike maths, where we can have infinite of something, we

cannot have infinite of something in an automata because

it’ll run out of memory.

-

Automata also relates to pattern matching, modelling,

verification of hardware etc.

Deterministic Finite Automata

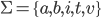

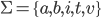

Alphabets and strings

-

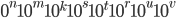

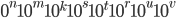

An alphabet is a finite set of elements. It is denoted with capital sigma,

Σ.

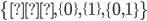

- Examples:

-

Σ = {0, 1}

-

Σ = {a, b, c}

-

Σ ≠ {1, 2, 3, 4, ...}

-

A string ‘s’ over Σ is a finite sequence of elements of Σ.

-

Two strings ‘s’ and ‘t’ are equal

when they have the same elements of the same order.

- Examples:

-

Σ = {0, 1}, s = “0100110”

-

Σ = {a, b}, s = “abbaba”

-

The set of all strings is denoted with sigma star, Σ*.

-

It will always be infinite, unless the alphabet is

empty.

-

In every alphabet, including an empty alphabet, the set

contains the empty string ‘ϵ’.

-

More names for Σ* are “free monoid on Σ”, or

“Kleene star of Σ”.

- Examples:

-

Σ = {}, Σ* = { ϵ }

-

Σ = {a}, Σ* = { ϵ, a, aa, aaa, aaaa ...

}

-

Σ = {0, 1}, Σ* = { ϵ, 0, 1, 10, 11, 100,

001, 000 ...}

-

We can clean up our notation by not including Σ, but

just replacing it with its definition:

-

{}* = { ϵ }

-

{a}* = { ϵ, a, aa, aaa, aaaa ... }

-

{0, 1}* = { ϵ, 0, 1, 10, 11, 100, 001, 000 ...}

-

The length # of a string is its number of elements.

- Examples:

- #(abba) = 4

-

#(00101) = 5

-

#(joestar) = 7

-

The empty string is usually denoted with a lunate epsilon character,

ϵ.

- Laws:

-

You can concatenate two strings, ‘s’ and ‘t’,

like this: ‘st’.

- Example:

-

s = “ab”, t = “ba”, st = “abba”

-

s = 011, t = 101, st = 001101

-

s = “za”, t = “warudo”, st = “zawarudo”

-

s = ϵ, t = ϵ, st = ϵ

-

ϵs = sϵ = s

Languages

-

A language L over an alphabet Σ is some subset of strings in

Σ*.

- Examples:

-

Σ = {a, b, c}

-

L1 = {ϵ, a, bb, cba}

-

L2 = {bbb, aaa}

-

L3 = {cccccacccccc}

-

L4 = Ø = {}

-

Two languages are equal when they contain the same strings

(in terms of sets, they are equal).

-

If the language should also contain the empty string

ϵ it must be explicitly added to the language as an

element of the set.

Deterministic finite automata (DFAs)

-

A finite automata is a mathematical model of a system with a finite

number of states and a finite amount of memory space.

-

A state is a description of a system at some point in time.

-

A transition allows the DFA to go from one state to the

other.

-

Think of DFAs like flow-charts; you go from one state to

the next until you reach the goal state.

-

There are three kinds of finite automata:

-

Deterministic

-

Nondeterministic

-

Nondeterministic with ϵ-moves

-

Right now, we’ll focus on deterministic finite automata.

-

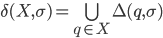

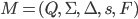

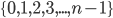

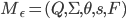

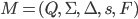

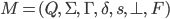

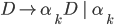

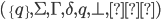

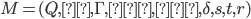

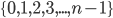

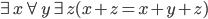

A DFA (deterministic finite automata) has 5

properties:

|

Property

|

Symbol

|

Description

|

|

States

|

Q

|

Possible states that the automata can be

in.

|

|

Alphabet

|

Σ

|

The alphabet that the transition names depend

on.

|

|

Transitions

|

δ

|

A function that allows the automata to go from

one state to the other by consuming a

symbol.

This takes an element of Σ and a state

from Q, and outputs the next state from Q to

transition to.

|

|

Start state

|

s

|

The state that the automata starts at.

|

|

Final states (accept states)

|

F

|

Set of states at which, if the automata ends at

them, it accepts the string that was entered

into it.

|

-

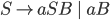

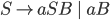

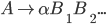

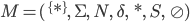

To define a DFA mathematically, use the properties above

into a tuple:

-

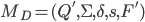

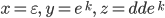

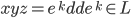

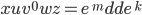

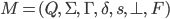

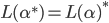

Example, given that M is a DFA:

-

An automata takes in a string that contains letters from

the alphabet and reads it from left to right, with each

letter being an action (or transition) that changes the

state of the automata.

-

All states must have all possible transitions, i.e. each state must have

exactly one transition for every element of the

alphabet

-

If the automata ends on a final state, it’ll accept the string.

-

If the automata does not end on a final state, it’ll reject the string.

-

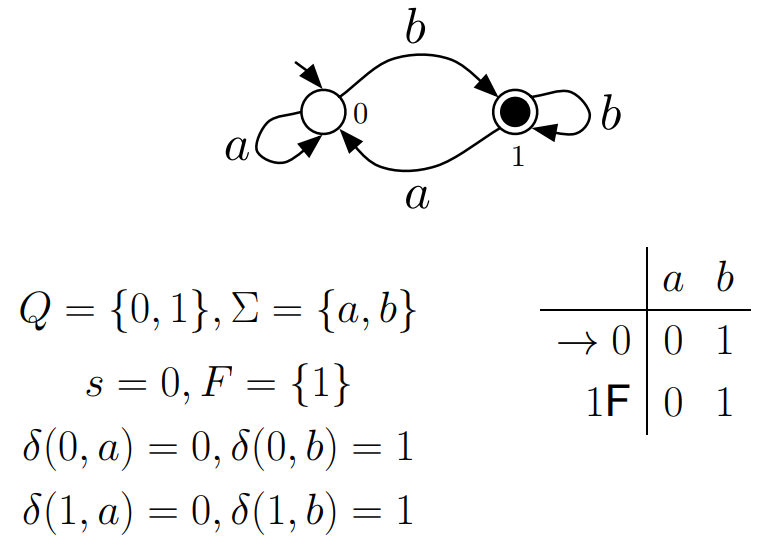

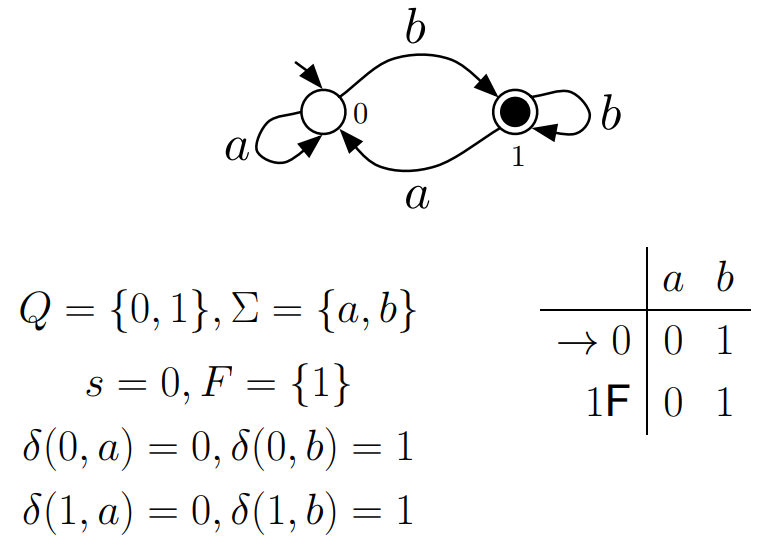

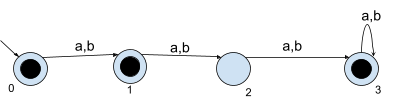

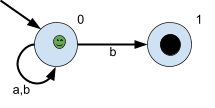

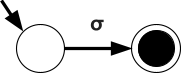

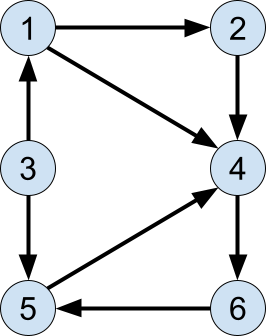

You can also represent DFAs as graphs (pictures), similar to state-machine diagrams:

-

This small arrow pointing to the ‘0’

state coming from nowhere means that ‘0’ is the

initial state.

This small arrow pointing to the ‘0’

state coming from nowhere means that ‘0’ is the

initial state.

-

The states that are not filled in are not final

states.

The states that are not filled in are not final

states.

-

The states that are filled in are final states.

The states that are filled in are final states.

-

The arrows represent the transitions between states,

which are triggered by the input character shown

The arrows represent the transitions between states,

which are triggered by the input character shown

-

This DFA accepts strings like abbab and babbbab, but not abba or ϵ.

-

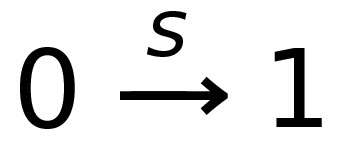

To say that a string ‘s’ takes the DFA from

state 0 to state 1, you would write that as:

-

- Where:

-

Left-hand side = beginning state

-

Right-hand side = ending state

-

Text on the top = string transition

Product construction

-

Product construction is where you merge two DFAs together,

so that the combined DFA is actually the two component DFAs

running in parallel.

-

The states aren’t just single string / character

names. The states are referenced in a tuple (or pair), with

each element being a state name from one of the component

DFAs.

-

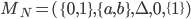

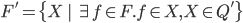

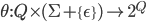

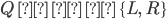

Let’s define the DFAs of L1 and L2 as this, respectively:

-

Now, if we found the product of the DFA of L1 and the DFA of L2, then the states of that product would be:

-

(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2), (2, 0), (2,

1), (2, 2), (3, 0), (3, 1), (3, 2)

-

The product transition would need a pair state and a

transition name. For example, you could go from (2,0) to

(3,1) by using ‘a’, or you could go from (0,0)

to (1,0) by using ‘b’.

-

The set of final states F is F1 ⨯ F2, where F1 is the set of final states of L1 and F2 is the set of final states of L2. Therefore, with normal, unedited product construction,

for the DFA to accept the string, we need to be in a final

state for both component DFAs.

-

This can be changed, however, to just “at least one

DFA to be on a final state” if we define F to be (Q1 ⨯ F2) ∪ (F1 ⨯ Q2), where Qx is the set of states for a DFA.

-

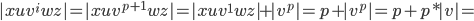

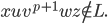

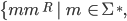

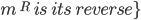

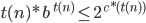

Therefore, the language expressed by the product of

and

and  is

is  .

.

-

It’s best to view this as a table, where you can see

the DFAs of L1 and L2 simultaneously:

|

L1’s DFA state

|

L2’s DFA state

|

Product state

|

Transition

|

Step

|

|

0

|

0

|

(0,0)

|

None (yet)

|

0

|

|

1

|

1

|

(1,1)

|

a

|

1

|

|

2

|

1

|

(2,1)

|

b

|

2

|

|

3

|

2

|

(3,2)

|

a

|

3

|

-

To view this more simply, you can just follow two DFAs at

the same time, and put one’s state on the left of the

tuple and the other’s state on the right of the tuple.

It’s nothing more than running two DFAs at the same

time, inputting the same word into both of them.

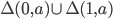

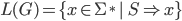

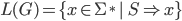

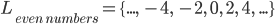

Regular languages

-

When you have a DFA called M, then L(M) is the language of

all accepted strings.

-

For example, in the previous DFA, L(M) = {b, ab, aab, bb,

abb ...} and it will not include elements like

‘a’, ‘ϵ’, ‘ba’ or

‘bba’ because they all will not be accepted by

that DFA.

-

A regular language L is when there exists some DFA M such that L(M)

exists.

-

More informally, a language L is regular if you can come up

with some DFA where it’ll accept all the words in L and only those words in L.

-

For example, the language { ab, ba, aa, bb } is regular because I can construct a DFA that accepts only those

words:

-

All finite languages are regular, because we could (in theory) just build a control flow

tree of constant size (you can visualize it as a bunch of

nested if-statements that examine one digit after the other)

(citation).

-

The empty language Ø is regular, because you can create a DFA that can’t accept

anything (for example, a DFA with no final states), not even

the empty string. If a DFA can’t accept any strings,

then the L(M) of that DFA would be the empty set Ø,

making Ø regular.

-

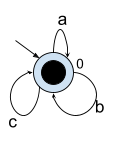

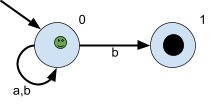

The set of all strings Σ* is regular, because you can set up a DFA consisting of a single final

state that transitions to itself for any symbol. This

accepts any element from the alphabet Σ, like this one

when Σ = {a, b, c} and Σ* = {ϵ, a, b, c,

ab, bc, abc, cba ...}:

-

If L is regular, then the complement (Σ* - L, or ~L)

is always regular.

-

For example, let’s take the language L = {ab, ba, aa,

bb} from before because we know that’s regular

(therefore there must be a DFA whose L(M) is L). The

alphabet that it’s from is Σ = {a, b}. The

complement of L, ~L, will be the set of all strings from Σ* except for the ones in L.

-

If we take the DFA whose L(M) is L from before and flip all

the final states to non-final states and vice versa, we can

create a new DFA whose L(M) is ~L (citation).

-

This works on any language. We’re simply getting the

“inverse” of this DFA.

-

If L1 and L2 are regular, then the union, L1 ⋃ L2, is regular.

-

If we want to prove that L1 ⋃ L2 is regular, we need to show a DFA exists for L1 ⋃ L2.

-

We can use product construction for L1 and L2 , then we can modify it a bit to suit what we

need.

-

First of all, let’s define the result of the product

of L1 ⋃ L2 to be M.

-

Now, we change the set of final states F of M to be

“at least one DFA must be on a final state to accept

the string”, so we define F to be (Q1 ⨯ F2) ∪ (F1 ⨯ Q2), where Qx is the set of states for a DFA.

-

By doing that, we have now defined a DFA where L(M) is

L1 ⋃ L2.

-

If L1 and L2 are regular, then the intersection, L1 ∩ L2, is regular.

-

This one’s even easier than the union one.

-

Basically, we use product construction for the DFAs L1 and L2.

-

That’s it. We don’t even need to change the set

of final states. This is because, by default, the product of

two DFAs require both DFAs to be on a final state for the

aggregate DFA to accept the string, which is the

intersection.

-

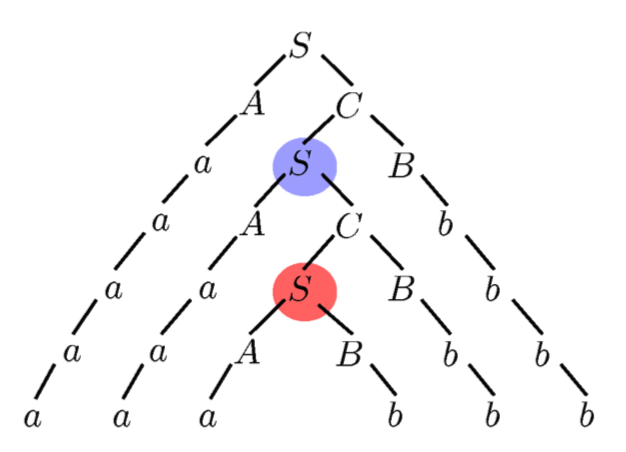

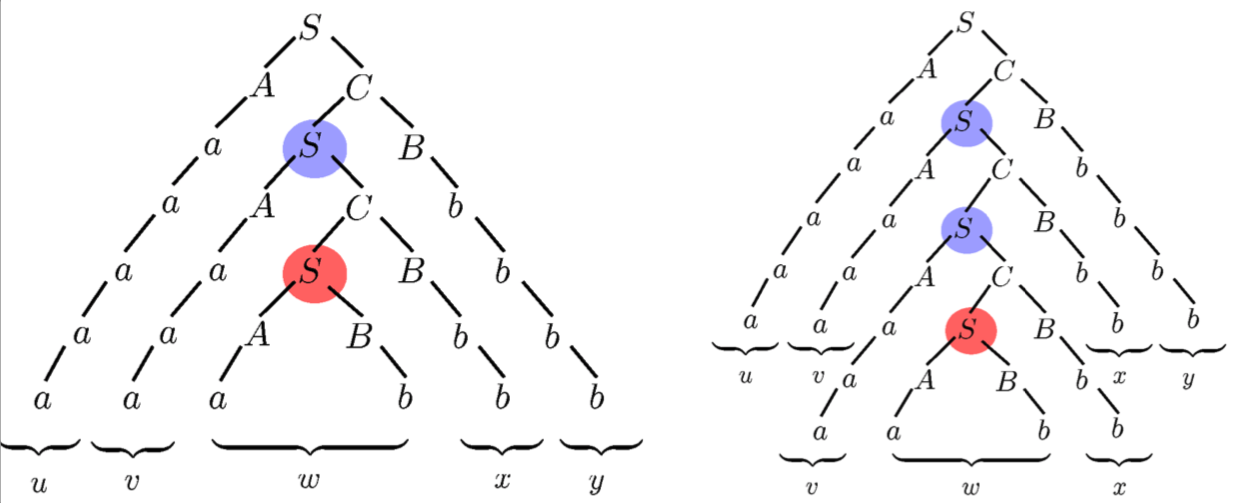

If L1 and L2 are regular then L1 L2 = { xy | x ∈ L1, y ∈ L2 } is regular.

-

This proof requires NFAs (nondeterministic finite

automata), which are covered in more detail later, but

basically it’s the same as DFA except you can have

multiple transitions in NFAs, and you can take multiple

paths at the same time. Think of it like two threads running

in parallel, or two trains travelling on different paths in

parallel. It’s similar to a fork node in an activity

diagram in UML and how it models concurrency in a

system.

-

First, we define two DFAs for L1 and L2:

-

M1 = (Q1, Σ, δ1, s1, F1)

-

M2 = (Q2, Σ, δ2, s2, F2)

-

Now, we’re going to create an NFA from these two

DFAs:

-

M3 = (Q1 ∪ Q2, Σ, δ1 ∪ δ2, s1, F2)

-

and for each state q ∈ F1, δ(q, ϵ) = s2 (what this means is, for each final state of the

first DFA, make a transition that goes from that final state

to the starting state of the second DFA). The transition has

the name ‘ϵ’, which means that we can take

this transition without using up any characters in the

string, it’s basically a free move, but more on that

in a later topic. See ‘ϵ-moves’).

-

What this NFA really means is that we start off in terms of

M1, but we need to finish off in terms of M2.

-

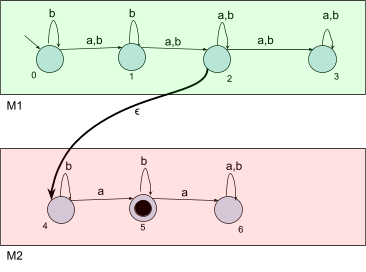

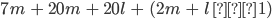

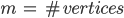

For example, take the two example DFAs for a second:

-

If we defined a new NFA, like we did above, for these two

example DFAs, it’ll look something like the

illustration below. I’ve created two illustrations of

the same NFA: one without annotations, and one with

annotations.

|

The proper one

|

|

|

|

The illustrative one

|

|

|

-

First of all, the top half of this NFA is basically M1. However, when we reach M1’s final state, there’s actually an epsilon

move taking the NFA to it’s bottom half. If we

don’t reach that epsilon move, we can’t move

onto the bottom half of the NFA. When we reach that epsilon

move, the string (up to this point) should be one of M1’s accepted strings.

-

Second, the bottom half of the NFA is M2. Once we’ve reached the bottom half, we’ve

found an M1 string, now we just need an M2 string. Once we’ve got an M2 string, we’ll be on a final node and the string

will be accepted.

-

By reaching the final node, we know that the input string

is made up of an M1 accepted string as the first half, and an M2 accepted string as the second half. This is literally

the definition of L1 L2, so we can safely say that the L(M) of this NFA is L1 L2.

-

This NFA should accept any concatenated string from L1 and L2. Therefore, L1 L2 is regular.

-

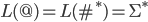

If L is regular then L* = { x1 ... xk | k ∈ N, xi ∈ L } is regular.

-

L* is basically the set of every possible word you can make

by concatenating the words of L onto themselves.

-

So if L had “add” and “dad”, L* would have words like “adddad”, “dadadddad”, “daddaddad” etc. and it would go on infinitely.

-

The operator * is called “Kleene star”, and

we’ve been using it already (when we write Σ* to

refer to the set of all words of that alphabet).

-

Yet again, we’ll need NFAs to prove this.

-

Let’s use my “add dad” example above, and

construct a DFA, M, where L(M) is L:

-

The red nodes are “error nodes”, where the DFA

goes when we know the string will be rejected.

-

This accepts “add” and “dad”, but

we need it to accept words like “adddad” as

well.

-

Luckily, epsilon-moves allow us to very easily construct an

NFA that does this:

-

Now, when we complete the word “add” or

“dad”, we can move straight back to the start

node and search for “add” or “dad”

again. Remember, epsilon moves don’t require any

characters to use, so we can go straight from the end of

“add” to the start of “dad”, for

example.

-

The reason why we create a new final start state that

immediately goes to the old start state is because Kleene

star supports the empty string (in the picture, it’s

state 8)

-

To more generalise this proof, let’s define a general

DFA:

-

M = (Q, Σ, δ, s’, F ∪ s’)

-

Now, we set epsilon moves branching from all the final

states to the new final starting state:

-

(For all states q in the set of final states F, there is a

transition function where when the NFA is in state q, and

the empty epsilon move ϵ is input, the state transitioned to is the new final

start state s’.)

-

That’s all there is to this proof.

Nondeterminism, subset construction and ϵ-moves

Nondeterminism

-

With a DFA, there is always a next state to go to when you

input a letter.

-

However, what if there are cases where there isn’t

always a next state to go to? What if there’s multiple

states we can go to?

-

That’s what an NFA is (nondeterministic finite

automata), it’s basically a DFA that can either branch

off to multiple states, or fail if there are no states to go

to. In other words, it guesses where it’s supposed to

go. If the guesses are wrong, they “die”.

-

In other words, a state no longer has |Σ|

transitions, where each transition is every letter of the

automata’s alphabet.

-

The whole gimmick of an NFA is that we can go through

different “paths” simultaneously. Some paths can die, some paths can survive and reach final

states. We only need one path to be on a final state to accept

the string.

-

"It is helpful to think about this process in terms of

guessing and verifying.

On a given input, imagine the

automaton guessing a successful computation

or proof

that the input is a "yes" instance of the decision

problem, then

verifying that its guess was indeed

correct." Source: Kozen textbook

-

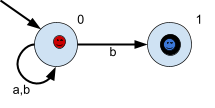

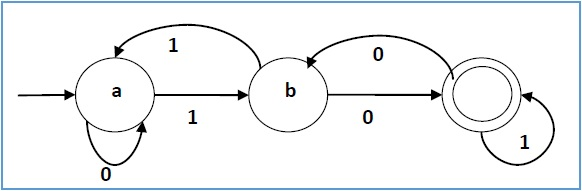

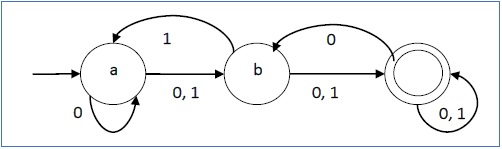

As you can see, it’s slightly different from a

DFA:

-

There are two ‘b’ transitions in state

‘0’

-

There are no transitions in state ‘1’

-

Let’s walk through this NFA to get a feel for how

NFAs work:

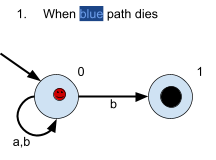

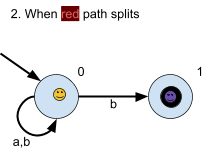

|

Step

|

Description

|

String so far

|

Illustration

|

|

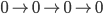

1

|

So far, we haven’t gone anywhere.

The arrow that seems to come from nowhere

indicates where we are initially, so we start at

state ‘0’.

The smiley faces indicate different

“paths” that the NFA is going

through. A different coloured face is a

different path, and their journey is displayed

on the right, for example the green path has

just started on 0, so a green ‘0’ is

shown on the right.

|

|

|

|

2

|

Let’s try inputting ‘a’ and

see where that leads us.

There’s only one transition we can go

down if we input ‘a’, so no new

simultaneous paths are created; we just stick

with our original one.

The ‘a’ path just goes from 0 to 0,

so our green path goes from ‘0’ to

‘0’.

|

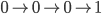

|

|

|

3

|

Let’s try inputting a ‘b’ and

see where that leads us.

There are two paths that ‘b’ can

take us, from 0 to 0 or from 0 to 1. Here, our green path splits into two, one red and one blue.

The red one picks the path that stays on 0, and

the blue path picks the one that goes to

1.

Right now, there exists a path that is on a

final state: the blue one. Therefore, if we left

the string as just  , this NFA will accept that string. , this NFA will accept that string.

|

|

|

|

4

|

Let’s try inputting another

‘b’ and see where that leads

us.

The red and blue paths will both need to go

through a ‘b’ transition. Since the

blue path doesn’t have a blue transition

where it is, it’ll

‘die’.

The red path needs to go down a ‘b’

transition, too. There are two transitions that

the red path can go down, the one that goes from

0 to 0 and the one that goes from 0 to 1. Like

last time, the red path splits into two

different paths: a yellow path and purple

path.

The yellow path takes the transition from 0 to

0, therefore the yellow path is still on

0.

The purple path takes the transition from 0 to

1, therefore the purple path is now on 1.

The NFA should accept this string too, since

the purple path is on a final state.

|

|

|

-

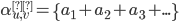

Now, let’s formally construct an NFA:

-

is the set of all states

is the set of all states

-

is an alphabet

is an alphabet

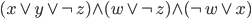

-

is the transition function

is the transition function  , which basically means input a state and a letter from the

alphabet and get a set of all states we could be in (see

‘Subset construction’)

, which basically means input a state and a letter from the

alphabet and get a set of all states we could be in (see

‘Subset construction’)

-

is the start state

is the start state

-

is the set of final states

is the set of final states

-

Let’s formally construct the NFA as shown

above:

-

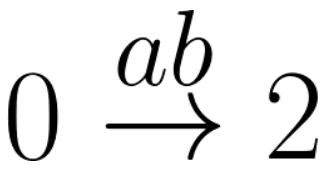

There is some notation that is worth noting:

-

When you see something like:

-

This means that the NFA will go from 0 to 2 if you input

‘a’, and then ‘b’. There should be

some intermediate state, either ‘0’,

‘2’, or even ‘1’, that you enter

when you input ‘a’ and leave when you input

‘b’.

Subset construction

-

It’s easy to convert DFAs into NFAs, because DFAs are

a subset of NFAs.

-

But how do you convert NFAs to DFAs?

-

You use subset construction. Basically, each state is a

subset of states that we could’ve gone to in our NFA

counterpart.

-

For example, in our NFA, if we’re in state 0 and by

inputting ‘b’ we can either go to 0 or go to 1,

in the DFA we’ll actually go to the state {0,1}

because we can go to either 0 or 1 in the NFA.

-

Let’s define an NFA

, then let’s create a DFA

, then let’s create a DFA  which is based on

which is based on  :

:

-

This may look like an alien language to you now, but

I’ll go through each line and explain it in plain

English, then I’ll apply subset construction to the

example above.

-

First things first, what’s going on up there?

|

Line in weird maths language

|

Line in plain English

|

|

|

Here, we create an NFA with the parameters  , ,  , ,  , ,  and and  . .

|

|

|

Here, we create a DFA with the parameters  , ,  , ,  , ,  and and  . The parameters . The parameters  , ,  and and  are all dependent on the parameters for

the NFA are all dependent on the parameters for

the NFA  . .

|

|

|

is the set of all states, like is the set of all states, like  . However, . However,  is the powerset of is the powerset of  , so it’s the set of all subsets of , so it’s the set of all subsets of  , so for example, , so for example,

Because of this, the states in the DFA is all

the subsets of  , so you could have a state , so you could have a state  , a state , a state  or even a state or even a state  . .

|

|

|

From before, we know that  is a transition function that defines how

we go from one state to the other. Therefore,

the inputs must be a state and a letter. is a transition function that defines how

we go from one state to the other. Therefore,

the inputs must be a state and a letter.

is our state input. It’s actually a

set, which makes sense, because the states in

our DFA are all subsets of is our state input. It’s actually a

set, which makes sense, because the states in

our DFA are all subsets of  . .

is our letter input. Nothing strange

here; it’s just a letter within the

alphabet is our letter input. Nothing strange

here; it’s just a letter within the

alphabet  . .

Don’t be too confused with the  that comes after. This simply means that,

for each NFA state in our input that comes after. This simply means that,

for each NFA state in our input  , we’re going to apply the NFA transition

function on that state with our input letter , we’re going to apply the NFA transition

function on that state with our input letter  and put the result in our state

output. and put the result in our state

output.

More formally,  is a bit like the sum in maths is a bit like the sum in maths , except instead of adding all the terms, it

unions all the terms. , except instead of adding all the terms, it

unions all the terms.

Remember that the transition function also

outputs a state. Since the states of our DFA are

subsets of  , the DFA transition function , the DFA transition function  also outputs a subset of also outputs a subset of  . .

For example, let’s say we input  . The output will be . The output will be  . I’ll go over this in more detail in our

example below, but the point is that this

transition function just transitions all the

states in . I’ll go over this in more detail in our

example below, but the point is that this

transition function just transitions all the

states in  and unions them all together into one big

output. and unions them all together into one big

output.

|

|

|

The set of final states  is a subset of is a subset of  . .

For a DFA state to be a final state, it needs

to contain an NFA state that is in  . .

For example, if 0 is a non-final state and if 1

is a final state in the NFA, then in the DFA,

{0} would not be a final state, but {0,1} and

{1} would be final states.

That’s basically what the left expression

gets; a subset of  where all elements contain at least one

final state from where all elements contain at least one

final state from  . .

|

-

Now that we’ve got the formalities out the way, we

can work on an example. Let’s convert the NFA above

into a DFA.

-

As said above, we’ll define our DFA as

and our NFA as

and our NFA as  , so we’ll go through this step-by-step by explaining

how we get each parameter for the DFA

, so we’ll go through this step-by-step by explaining

how we get each parameter for the DFA  :

:

|

What we’re defining

|

How we did it

|

|

|

The value of  is is  , so we just need to get the powerset of , so we just need to get the powerset of  , or the ‘set of subsets’. This

is: , or the ‘set of subsets’. This

is:

|

|

|

This is just the same as before, nothing new

here:

|

|

|

First of all, let’s create a transition

table. This is a table where the input letters

go on the top, the states go on the left, and

you can look up transition results by looking up

a state and a letter:

Now we work through this table from top to

bottom. First of all, there’s the empty

set state. You can’t go from any state

from the empty set state; it’s practically

a death state. So just input all empty set

states here.

Now we’re moving onto the singleton

states. For  and and  , just have a look at the NFA and see what

states you’d end up at if you were at 0

and you took the paths labelled ‘a’.

As you can see, you’d just end up back at

0, so we just input , just have a look at the NFA and see what

states you’d end up at if you were at 0

and you took the paths labelled ‘a’.

As you can see, you’d just end up back at

0, so we just input  there. As for there. As for  and and  , you can see that you can go to 0 or 1 using a

‘b’ path on 0, so we can input , you can see that you can go to 0 or 1 using a

‘b’ path on 0, so we can input  there. Continue this until we’re done

with all the singleton states. there. Continue this until we’re done

with all the singleton states.

Now we’re faced with a state with more

than one element! Remember the definition from

before: we need to perform the transition

function on all the elements, then union the

results together. For example, on  and and  , we need to union the results of doing , we need to union the results of doing  and and  , and , and  and and  . Just above the . Just above the  and and  cell, we can see the results of those. We

just need to union those together, by which I

mean, union cell, we can see the results of those. We

just need to union those together, by which I

mean, union  and and  together. When we do that, we get together. When we do that, we get  , so in this transition table, when you input , so in this transition table, when you input  on the state on the state  , you get , you get  . Repeat this for all the states and inputs

until you finish the table. . Repeat this for all the states and inputs

until you finish the table.

Once you’re done, that’s pretty

much it! This transition table is your new

transition function for your DFA.

This video goes into more detail and I

strongly suggest it if you still struggle

with this.

|

|

|

It’s pretty much the same as the

original, but you encapsulate it in a set:

|

|

|

Get all the elements of  : :

{ , ,  , ,  , ,  } }

Now get rid of the ones that do not have final

states in them:

{ , ,  , ,  , ,  } }

So therefore, your new  is: is:

{ { , ,  } }

|

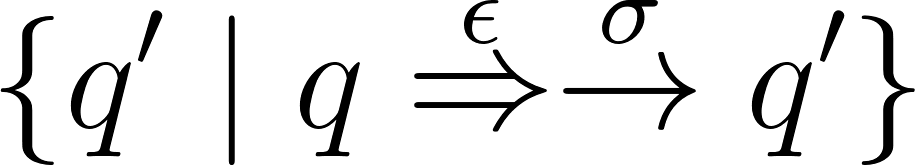

ϵ-moves

-

An epsilon-move (ϵ-move) is a type of transition that

you can use without needing to “spend” or

“consume” any symbols.

-

It’s basically a free move.

-

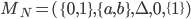

An NFA that uses epsilon-moves are called

ϵNFAs.

-

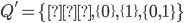

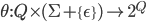

They’re formally defined like this:

- where

-

This is basically the same as an NFA, except transitions

now support epsilon-moves.

-

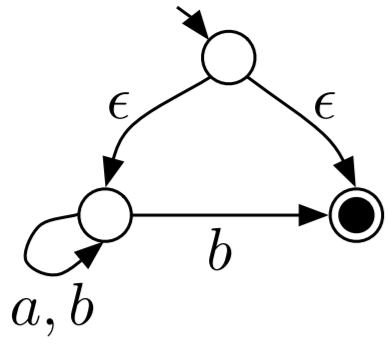

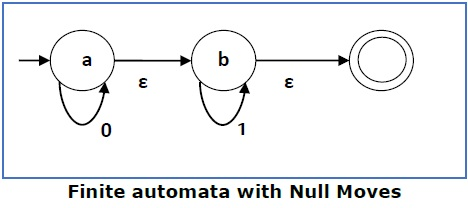

Here is an example of an ϵNFA:

-

From the starting state, you can go to the left-most state

and the right-most final state, all without consuming any

symbols.

-

You can also convert ϵNFAs to NFAs. Don’t

worry! It’s not as long as subset construction.

-

You only need to edit the transition function and the set

of final states.

-

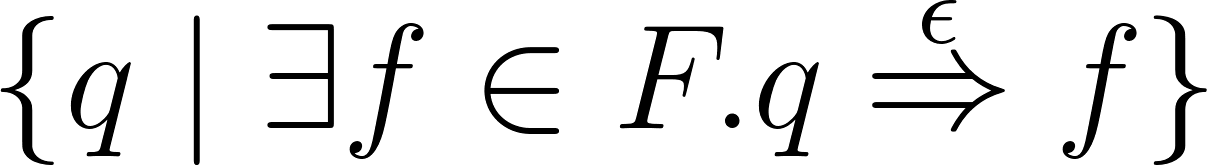

With an ϵNFA

we can create an NFA

we can create an NFA like so:

like so:

-

Your next line will be “Is this going to be

translated into plain English again?”

-

Please see below for a translation into plain

English.

|

Line in weird maths language

|

Line in plain English

|

|

|

We’re just defining an ϵNFA with

these parameters.

|

|

|

We’re just defining an NFA with these

parameters. These parameters will be based off

of the parameters for  . .

|

|

|

When you input a state and a symbol, the output

will be all the possible states it can reach to

using epsilon-moves and that symbol.

|

|

|

The new set of final states is a superset of

the old set of final states, meaning we still

keep the final states the same, but we add more.

We add the states that can epsilon-move towards

a final state.

|

-

Now I’ll go over an example, by converting that

ϵNFA above into an NFA:

|

What we’re defining

|

How we did it

|

|

|

First, let’s create that transition table

again. Just for example’s sake, I’m

calling the initial state 0, the left-most state

1 and the final state 2:

|

|

|

|

|

0

|

|

|

|

1

|

|

|

|

2

|

|

|

If you were at state 0 and had an input

‘a’, what states could you get to?

Well, you could go down the left epsilon-move to

get to state 1 and spend the ‘a’ on

going back to state 1. That’s pretty much

it, so here, we’d input just state

1.

If you were at state 0 and had an input

‘b’, what states could you get to?

You could go down the left epsilon-move and use

‘b’ to go to state 1 or use your

‘b’ to get to state 2. If you went

down the right epsilon-move, you wouldn’t

be able to use ‘b’, so you can

ignore it. Seems like you can get to states 1

and 2, so we put that in the transition

table.

We keep going like this until we fill out the

transition table:

This transition table is our new transition

function!

|

|

|

For this one, you could look at the final

states in the graph, and work your way back up

the epsilon-moves, looking for non-final states

that can epsilon-move to the final state.

As you can see in the graph above, if you start

at state 2 (the final state) and work your way

up the epsilon-move, you’ll find

yourself at state 0. Therefore, we can make state

0 a final state. However, you cannot go up to

state 1 from state 2, so state 1 cannot be a new

final state.

Obviously, we keep the old final states from

the ϵNFA:

|

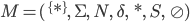

Regular expressions and Kleene’s theorem

Defining regular expressions

-

A string s matches a regular expression

whenever

whenever

-

Regular expressions can be defined using atomic and

compound patterns

Atomic patterns

-

There exist the following atomic patterns, which do not

need to be broken down further:

|

Pattern

|

Matched by …

|

|

|

… the single symbol  from the alphabet: from the alphabet:

|

|

|

… the empty string

|

|

|

… nothing

|

|

|

… any single symbol from the alphabet

|

|

|

… any string formed by the symbols in  (including the empty string), i.e. any

string in (including the empty string), i.e. any

string in

|

Compound patterns

-

Compound patterns are defined recursively, based on the

atomic patterns.

|

Pattern

|

A String will match if ...

|

|

|

… it matches  or or

|

|

|

… it matches both  and and

|

|

|

… it can be broken down into two parts

such that the first part matches  and the second part matches and the second part matches

|

|

|

… it does not match

|

|

|

… it matches 0 or more repetitions of  . .

|

|

|

… it matches 1 or more repetitions of  . .

|

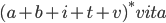

Examples

-

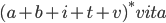

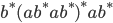

All strings that end in “vita”:  or more elegant:

or more elegant:

-

All strings with an even number of a’s:

-

All strings with an odd number of a’s:

Kleene’s theorem

-

If

is a regular expression then

is a regular expression then  is a regular language.

is a regular language.

-

All strings that match a regular expression form a

language. This language is regular.

-

If L is a regular language then

for some regular expression

for some regular expression  .

.

-

If a language is regular, then there exists a regular

expression that matches all strings in that language (and

not more/less).

-

Therefore, we can conclude that finite automata and regular

expressions describe the same languages; they are equally

powerful.

-

In order to prove this theorem, we need to show that we can

convert a regular expression to a finite automaton and vice

versa.

Reg exp to εNFA

-

We will convert a regular expression to a

-

Basically, we’re going to split our regular

expression into “building blocks”, then

we’re going to convert those “building

blocks” into automata individually.

-

With each regex “building block”, we need to

show that we can build an automata out of it. In other

words, for each possible regex “building block”,

we need to show that it’s language is regular.

-

Once we’ve shown that all our building blocks are

regular, we can use said building blocks to build an

automata out of any regex expression.

-

We can prove we can convert by induction, as regular

expressions are built inductively.

-

This means that we can build any regular expression by

combining our atomic patterns, which results in a compound

pattern. Think of the atomic patterns as our basic building

blocks:

-

First we check if our basic building blocks are

regular.

-

Then we assume that the blocks we use to build compound

patterns are regular.

-

Then we check if the compound patterns are regular.

-

If they are regular we have completed the proof.

-

Let’s start with checking the base cases, these are

our basic building blocks, represented by atomic regular

expressions:

-

is regular:

is regular:

-

is regular:

is regular:

-

is regular:

is regular:

-

These are all atomic regular expressions we have to

check, as # and @ are redundant, meaning that they can also

be represented by compound patterns.

-

Now, assume that the building blocks we use to build

compound patterns are regular. Then we will check if the

compound patterns are regular:

-

We have already proved above that the union of two regular

languages is regular.

-

It was also proved above that the concatenation of two

languages are regular.

-

Again, we have proved above that kleene star is

regular.

-

These are all compound regular expressions we have to check

as all other compound regular expressions are redundant,

meaning that they can also be represented by other compound

patterns.

-

Now we have completed the proof that from any regular

expression we can construct an automaton.

εNFA to reg exp

-

First of all, let’s simply state what we want to do.

We want to prove that there is always a way to convert an

εNFA to a regular expression.

-

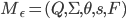

We will construct an NFA

-

is the set of states

is the set of states

-

is the alphabet

is the alphabet

-

is the transition function

is the transition function

-

is the start state

is the start state

-

is the set of final states

is the set of final states

-

This is what we want: the regular expression

which describes all possible paths

which describes all possible paths

-

starting at the state u

-

ending at the state v

-

passing through 0 or more intermediary states, which are in

the set X.

-

It’s important to note that we do not need to pass

through any intermediate states in X, but we have the

opportunity to pass through as many as we want.

-

If we can define the regex

mathematically, then we’ve shown that we can convert

an εNFA to a regex, thereby finishing our

proof.

mathematically, then we’ve shown that we can convert

an εNFA to a regex, thereby finishing our

proof.

-

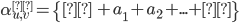

We shall prove this by induction! In case you’ve

forgotten, proof by induction goes like this:

- Base case

-

Inductive step

-

Yes, there will be some weird maths language, but

don’t worry; I’ll translate it for you. It

sounds scary at first, but once you see the proof,

it’s not that bad:

|

Step

|

Weird maths language

|

Normal English + pretty pictures

|

|

Base case

|

Let

be all symbols such that

for

|

Firstly, our base case. The simplest case is going from the initial state to the

final state without going through any

intermediate states.

This is what we’re defining on the left

here; from top to bottom, we’re

defining

-

If the initial state isn’t the same

as the final state and there are transitions

from initial state to final state

-

If the initial state isn’t the same

as the final state and there are no transitions from initial state to final

state

-

If the initial state is the final state and

there are transitions from the initial state

to the final state

-

If the initial state is the final state and

there are no transitions from the initial state to the

final state

All those  symbols are all transitions that go straight

from the initial state to the final state. symbols are all transitions that go straight

from the initial state to the final state.

|

|

Inductive step

|

|

First, we have a proposition (assumption). We propose that  exists and works. exists and works.

Then, we define a  out of out of  . Once we do that, we’ve defined our

inductive step. . Once we do that, we’ve defined our

inductive step.

By doing this, we show that if there exists a

regex from u to v through intermediate states X,

there also exists a regex from u to v with

intermediate states X + {q}, where

‘q’ is any other state in the

automata.

This means we can keep adding on states from

our base case  until we’ve filled in all the states in

our automata. until we’ve filled in all the states in

our automata.

|

-

As you can see from the proof, we can just keep adding on

new states to our

. That means we can add our initial state and final state

to this regular expression, and then append all the

intermediate states onto

. That means we can add our initial state and final state

to this regular expression, and then append all the

intermediate states onto  and it’ll work.

and it’ll work.

-

That’s great, but how do you actually convert an

εNFA to a regular expression? We’ve proved

that we can do it, so how do we actually do it?

-

Below you’ll see an example of how to actually

convert an εNFA into a regular expression. It

involves breaking down the regex you need by taking out

intermediate states, until you get something trivial, then

working your way back up again.

-

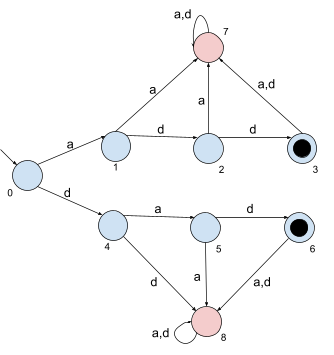

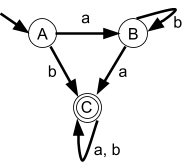

Given the following NFA

-

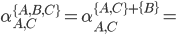

We need to find a regular expression that starts at state

A, ends at state C and passes through any of A,B,C zero or

more times.

-

Therefore, we are looking for

-

The starting state u is going to be A

-

The ending state v is going to be C

-

The set of states which we can pass through X is equal to

Q

-

Now, we are going to rewrite this regular expression using

the formula above

-

We are going to pull out one of the states from X

-

It is best to choose a state that reduces the paths we can

go a lot

-

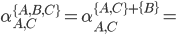

I’m going to pull out B. This gives:

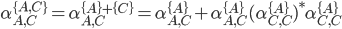

-

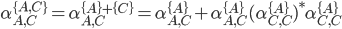

We can decompose this further into

-

It is up to you how far you decompose.

-

If you are able to see what the regular expression is right

away, you don’t need to decompose. However, if you

barely decompose, the chances that you miss a path are

high.

-

We could decompose what we have got now even further, but I

don’t think that it is necessary to do so as the

regular expressions for these are fairly easy to find.

-

Let’s take a look at the first decomposed regular

expression:

b + b(a+b)*(a+b) = b(a+b)*

b + b(a+b)*(a+b) = b(a+b)*

-

Any string that starts with b or that starts with b followed by one or more mixed a’s

and/or b’s will be matched. This can be simplified into a string starting with b followed by zero or more mixed a’s and/or

b’s.

-

Let’s take a look at the second decomposed regular

expression:

a + ε(ε)*a = a + a = a

a + ε(ε)*a = a + a = a

-

Any string that contains exactly one a will be

matched.

-

Let’s take a look at the third decomposed regular

expression:

a + a(a+b)*(a+b) = a(a+b)*

a + a(a+b)*(a+b) = a(a+b)*

-

This is very similar to the first one. It will match a

string starting with a followed by zero or more mixed

a’s and/or b’s.

-

As you might have noticed, I didn’t decompose the

blue part. The reason for that is that the blue regular

expression starts and ends in the same state, meaning that

it can only take transitions to the state itself (if

exists):

-

b

b

-

Any string that contains exactly one b will be matched.

-

Now, let’s put together the regular expressions from

the coloured parts:

-

b(a+b)* + ab*a(a+b)*

b(a+b)* + ab*a(a+b)*

-

This is the regular expression that is represented by this

NFA - we are done!

-

Often, there is more than one regular expression that is

represented by an automaton, meaning that there is no single

correct solution. For example, the regular expression (ab*a

+ b)(a + b)* is correct as well.

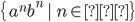

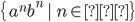

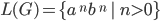

Limitations of regular languages

-

Is every language regular? No!

-

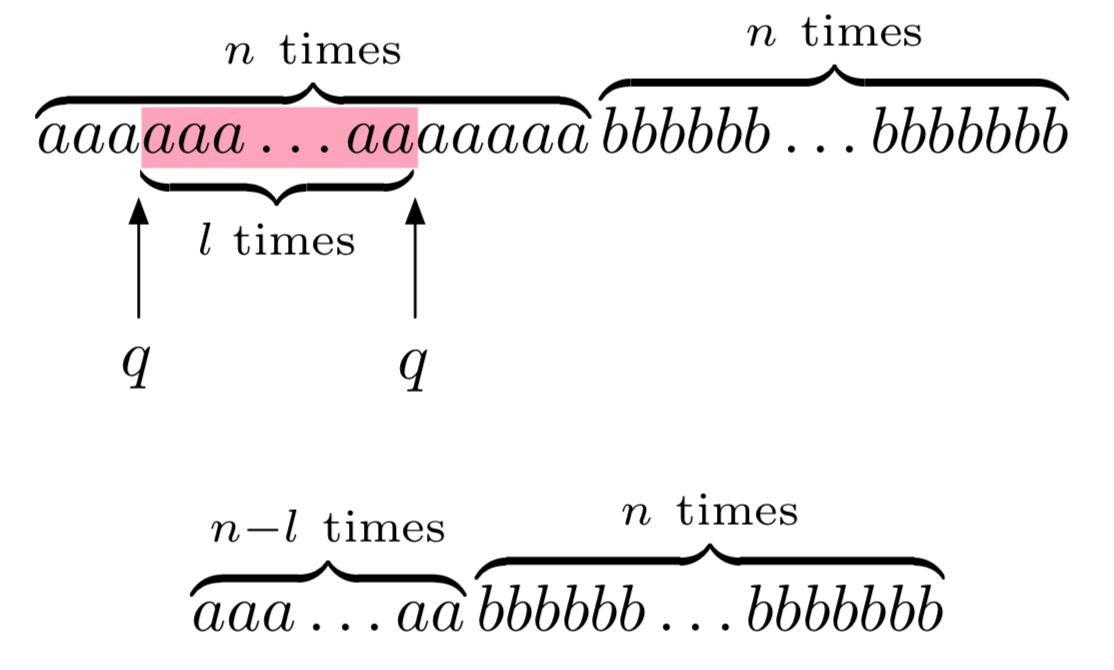

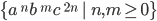

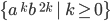

For example, look at the language

-

Our automaton has finitely many states.

-

It’s going to scan the string from the left to the

right, starting with the a’s.

-

Then, when it’s going to scan the b’s, it has

to remember how many a’s there were previously.

-

Since n can be infinitely big, there is no way to do this

with our finite number of states!

-

We are going to prove by contradiction that the language

is not regular.

is not regular.

-

Suppose we have an automaton

that accepts this language. Since the number of

states it has is finite, we are going to say that it has

that accepts this language. Since the number of

states it has is finite, we are going to say that it has  states.

states.

-

Next, we are going to choose

such that

such that  . We can always find an

. We can always find an  that is greater than

that is greater than  because

because  is not bounded, i.e. can be infinitely large.

is not bounded, i.e. can be infinitely large.

-

Now, our automaton is going to start to scan the

’s from the left to the right. Since we have less

states than the number of a’s, there must be a state

we are going to enter at least twice (pigeonhole principle)!

We are going to call this state

’s from the left to the right. Since we have less

states than the number of a’s, there must be a state

we are going to enter at least twice (pigeonhole principle)!

We are going to call this state  . Between the first time we enter

. Between the first time we enter  and the second time we enter

and the second time we enter  we are going to scan

we are going to scan

’s. After the second time we entered

’s. After the second time we entered  our automaton is going to continue to the final

state.

our automaton is going to continue to the final

state.

-

When the automaton enters state

for the first time, it goes on a path that leads it

to

for the first time, it goes on a path that leads it

to  again. Then, after arriving at

again. Then, after arriving at  for the second time, it goes on a path that leads it

to the final state.

for the second time, it goes on a path that leads it

to the final state.

-

Here is the problem: Why arrive at

, and then take a path that leads to

, and then take a path that leads to  again? Why not take the path that goes to the final

state upon arriving at

again? Why not take the path that goes to the final

state upon arriving at  for the first time? This means that our automaton

would accept a string with

for the first time? This means that our automaton

would accept a string with  number of

number of  ’s. However, now the number of

’s. However, now the number of  ’s is not anymore equal to the number of

’s is not anymore equal to the number of  ’s and here we have our contradiction!

’s and here we have our contradiction!

Pumping (🎃) Lemma for regular languages - contrapositive

form

-

We can use the contrapositive form of the pumping lemma for

regular languages to show that a language is not regular. We

cannot use the pumping lemma to show that a language is

regular!

-

It works by splitting up the language into parts, then

“pumping” a subpart until you get a string that

isn’t in the language. This is similar to what we have

just done before.

-

The different steps of the proof can be understood as a

game versus a demon.

-

The demon chooses a number

such that

such that  .

.

-

We cannot choose a value for k, we only know that it is

greater than 0.

-

We choose a string

such that

such that

.

.

-

We can choose any string

that is within the language. However, we have to make sure

that, no matter what k is, our string

that is within the language. However, we have to make sure

that, no matter what k is, our string  is always in the language. We do not need to cover

the whole language with our string!

is always in the language. We do not need to cover

the whole language with our string!

-

and

and  can be empty, there is no restriction concerning

them.

can be empty, there is no restriction concerning

them.

-

The length of the

part must be at least

part must be at least  . A way to make sure that this is always the case is to let

the

. A way to make sure that this is always the case is to let

the  part be equal to something to the power of

part be equal to something to the power of  .

.

-

due to the lower bound of k.

due to the lower bound of k.

-

As a general tip, try to make

as trivial as possible, like in the following

example:

as trivial as possible, like in the following

example:

-

For instance, we could let

to make sure that

to make sure that  is at least

is at least  long (

long ( is a symbol from the alphabet).

is a symbol from the alphabet).

-

The demon splits the

part of the string into

part of the string into  such that

such that  , i.e.

, i.e.  The whole string would be

The whole string would be  .

.

-

You cannot choose how the string is going to be split! All

you know is that the

part of the string is not going to be empty.

part of the string is not going to be empty.

-

Therefore, you have to do a general case and define

such that every way of splitting the string is

possible and we do not introduce any restrictions.

such that every way of splitting the string is

possible and we do not introduce any restrictions.

-

and

and  can be empty, there is no restriction concerning

them.

can be empty, there is no restriction concerning

them.

-

For instance, if

, then

, then  and

and  because v cannot be empty. Furthermore, the remaining

part

because v cannot be empty. Furthermore, the remaining

part

. If we add the two parts, we are going to get back to

. If we add the two parts, we are going to get back to  . Also, we haven’t made any assumptions about how the

string is going to be split.

. Also, we haven’t made any assumptions about how the

string is going to be split.

-

We pick an

such that the string

such that the string  If this is the case we have proven that the language is not

regular!

If this is the case we have proven that the language is not

regular!

-

We can pick any

we want as long as it is equal to or greater than 0.

we want as long as it is equal to or greater than 0.

-

Our goal is to choose an

such that the resulting string is outside of the

language.

such that the resulting string is outside of the

language.

-

For instance,

and

and

. If we now choose

. If we now choose  , we are going to get

, we are going to get  . We have now removed

. We have now removed

’s compared to what we had as

’s compared to what we had as  in the step before, which was

in the step before, which was

. Also we know that

. Also we know that  , meaning that we removed at least one

, meaning that we removed at least one  !

!

-

You MUST say “Therefore L is not regular”, if you

don’t, you will lose marks.

-

If this still doesn’t make any sense, look at a few

examples below, and then read the general case above

again.

|

Example

|

Description

|

|

|

-

The demon chooses

such that such that

-

Now choose a string

and and   . .

-

I’ll do it like

, so , so  and and  . .

-

For every value of

I will get a specific string which is

in the language of I will get a specific string which is

in the language of  . .

-

It is ok that I cannot get the empty string

(

which is in the language) because I do not need to cover the

whole language. which is in the language) because I do not need to cover the

whole language.

-

The demon splits your

into a into a  such that such that

-

Now, we have to do the splitting in a

general case.

-

Since

cannot be empty, then cannot be empty, then  and and  . .

-

Since

, then , then   . .

-

We pick an

such that the string such that the string

-

Now for this example, choose

-

Then

as the number of b’s will be bigger

than the number of a’s, no matter what

value k is. as the number of b’s will be bigger

than the number of a’s, no matter what

value k is.

-

Therefore L is not regular

|

|

|

-

The demon chooses

such that such that

-

Now choose a string

and and   . .

-

I’m going to choose

, so , so

-

The demon splits your

into a into a  such that such that

-

, as it cannot be empty we have , as it cannot be empty we have  . .

-

The remaining part

because because

-

We pick an

such that the string such that the string

-

We pick

-

Then

because the power we have got here is not

going to be a factorial! because the power we have got here is not

going to be a factorial!

-

Let me prove why this is not going to be a

factorial:

-

is going to be some factorial is going to be some factorial  . .

-

The next higher factorial is going to be

. .

-

However, our power

is going to be in the middle of these

two factorials: is going to be in the middle of these

two factorials:  Therefore, it cannot be a factorial! Therefore, it cannot be a factorial!

-

If you still have some doubts, try it out

with the smallest k we could have,

. Then choose the largest l we can choose, . Then choose the largest l we can choose,  . So we have . So we have  which holds. For any choice of which holds. For any choice of  the next higher factorial is going to

grow even faster, therefore this will always

hold. the next higher factorial is going to

grow even faster, therefore this will always

hold.

-

Therefore L is not regular

|

|

|

-

The demon chooses

such that such that

-

Now choose a string

and and   . .

-

I’m going to choose

where where  is going to be the next prime number is going to be the next prime number  . Since the number of primes is infinite

this will always work, no matter how large . Since the number of primes is infinite

this will always work, no matter how large  is. is.

-

Therefore,

-

The demon splits your

into a into a  such that such that

-

The length of

is going to be is going to be  , so , so

-

Since

we have we have

-

That’s all info we need to continue

our proof, you will see why in the next

step.

-

We pick an

such that the string such that the string

-

We will pick

-

I’m going to show that the length of

is not going to be equal to a prime

number, therefore it is outside of the

language. is not going to be equal to a prime

number, therefore it is outside of the

language.

-

, which is not going to be a prime number

since we also have a factor , which is not going to be a prime number

since we also have a factor  here but prime numbers should only be

divisible by 1 or itself (p). here but prime numbers should only be

divisible by 1 or itself (p).

-

Therefore,

-

Therefore L is not regular

|

|

and

|

-

The demon chooses

such that such that

-

Now choose a string

and and   . .

-

I’m going to choose

where where  and and

-

-

The demon splits your

into a into a  such that such that

-

We pick an

such that the string such that the string

-

Pick

-

Now

and and  because we deleted at least one because we deleted at least one  by letting by letting  . .

-

Therefore

-

Therefore L is not regular

|

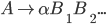

Automata theory: Context free languages

Pushdown Automata (PDA)

-

Pushdown automata are an extension to the automata we have

seen before.

-

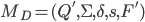

We will take an

and add a stack to the control unit.

and add a stack to the control unit.

-

The stack does not have a size limit, i.e. is infinitely

big.

-

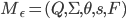

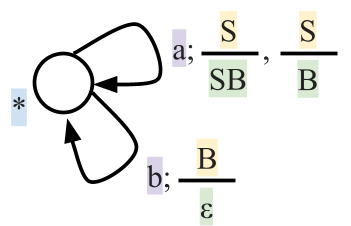

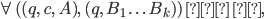

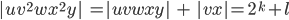

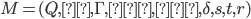

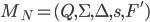

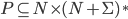

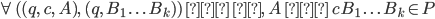

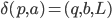

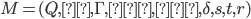

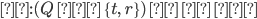

More formally, a PDA is a 7 tuple

-

is the set of states

is the set of states

-

is the input alphabet

is the input alphabet

-

is the stack alphabet (the set of things we can put

on the stack)

is the stack alphabet (the set of things we can put

on the stack)

-

is the transition relation (more detail see

below)

is the transition relation (more detail see

below)

-

is the start state

is the start state

-

is the initial stack symbol

is the initial stack symbol

-

is the set of final states

is the set of final states

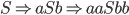

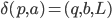

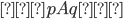

Transition relation

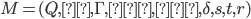

-

Now, let’s take a closer look at the transition

relation

:

:

-

is the state the PDA is currently in.

is the state the PDA is currently in.

-

is the symbol we are currently reading. This symbol

could be any symbol from our alphabet or epsilon, which

means that we can do epsilon moves by reading nothing!

is the symbol we are currently reading. This symbol

could be any symbol from our alphabet or epsilon, which

means that we can do epsilon moves by reading nothing!

-

is the symbol which is currently on top of the stack.

When we make a transition we are going to pop (remove) this

symbol from the top of the stack.

is the symbol which is currently on top of the stack.

When we make a transition we are going to pop (remove) this

symbol from the top of the stack.

-

is the resulting state after we have made the

transition.

is the resulting state after we have made the

transition.

-

is going to be 0 or more symbols from our stack

alphabet that we are going to push on the stack when we make

the transition.

is going to be 0 or more symbols from our stack

alphabet that we are going to push on the stack when we make

the transition.

-

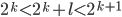

Let’s look at how one tuple of our transition

relation looks like:

Here, we are in the state  , we will read the symbol

, we will read the symbol  , and we see

, and we see  on top of the stack. We will then pop (remove)

on top of the stack. We will then pop (remove)  off the stack, go to the next state

off the stack, go to the next state  and push (add)

and push (add)  on the stack. We will start pushing with

on the stack. We will start pushing with  and end with

and end with  , such that we now have

, such that we now have  on top of the stack.

on top of the stack.

-

Keep in mind that if

is not on the top of the stack, we cannot perform

this transition. Think of it as like a “second

input”.

is not on the top of the stack, we cannot perform

this transition. Think of it as like a “second

input”.

-

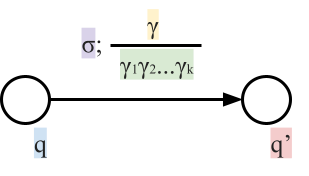

Graphically, the transition looks like this:

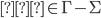

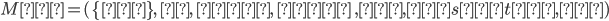

Configuration

-

A configuration is a complete description of our PDA at a

certain point in time. It is represented as an element

of:

Configurations

-

is the current state.

is the current state.

-

is the whole part of the input we still have left to scan/read.

Note that this is not only the next symbol.

is the whole part of the input we still have left to scan/read.

Note that this is not only the next symbol.

-

is the whole stack content. Note that this is not only the

symbol on top of the stack; it’s the entire thing.

is the whole stack content. Note that this is not only the

symbol on top of the stack; it’s the entire thing.

-

Therefore, every configuration will be of the form

Configurations

-

We can define relations between those configurations, which

tell us how to go from one configuration to the next

configuration. We have to distinguish between two cases: The

case when we consume a symbol from the input, and the case

where we don’t consume any symbol and take an epsilon

move.

-

Case 1: We consume a symbol. We write the relation

as:

when there exists such an element in

-

Case 2: We don’t consume a symbol and take an epsilon

move. We write the relation as:

when there exists such an element in

Acceptance

-

A PDA can accept a string either by empty stack or by final

state.

-

We can turn a PDA that accepts by final state into a PDA

that accepts by empty stack and vice versa.

By final state

-

A PDA will accept a string by final state if we can go from

the start configuration to a final configuration which

contains a final state and where we don’t have any

string left to scan/consume. Between those two

configurations there can be 0 or more other intermediary

configurations which we pass through.

-

Formally, this is written as:

-

We will start at state s with the whole input string x

still remaining to scan, and our stack contains the initial

stack symbol only.

-

We will go through as many intermediary states as we want

to.

-

We will end in a state f where we don’t have anything

remaining to scan, and our stack content is g.

-

Therefore, the language accepted by the PDA is

By empty stack

-

A PDA will accept a string by empty stack if we can go from

the start configuration to a final configuration where the

stack is completely empty and where we don’t have any

string left to scan/consume. Between those two

configurations there can be 0 or more other intermediary

configurations which we pass through.

-

Formally, this is written as:

-

We will start at state s with the whole input string x

still remaining to scan, and our stack contains the initial

stack symbol only.

-

We will go through as many intermediary states as we want

to.

-

We will end in a state q where we don’t have anything

remaining to scan, and our stack is empty.

-

“Stack is empty” means that we do not have

or any other symbol on the stack

or any other symbol on the stack

-

Therefore, the language accepted by the PDA is

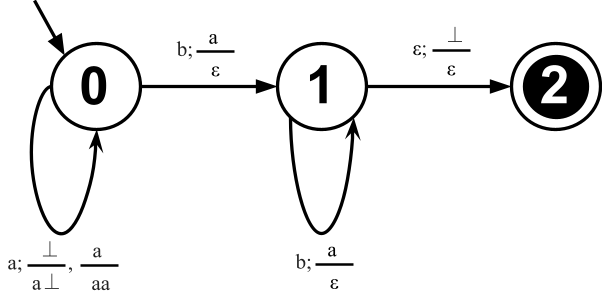

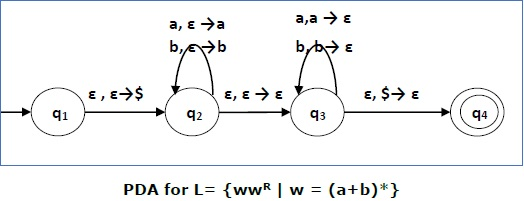

Example PDA accepting palindromes

-

A Palindrome is a string where the reverse of that string

is equal to the original string.

-

Examples: abba, abdba, aaaa

-

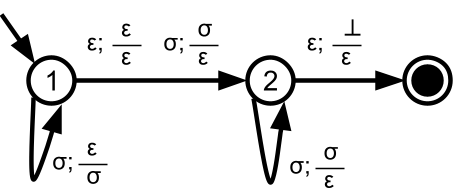

A PDA that will accept all palindromes by final state (and

also empty stack) looks like this:

where  , i.e.

, i.e.  is any symbol from our alphabet

is any symbol from our alphabet  .

.

-

Let’s start from scratch and build a PDA that looks

like this.

-

We are going to differentiate between palindromes with an

even number of symbols and those with an odd number of

symbols

-

Our strategy for constructing the PDA that accepts

palindromes with an even number of symbols will be the

following:

-

We scan the word until we reach the middle of it.

-

Then we scan the second half of the word, expecting the

symbols from the first half of the word in reverse

order.

-

If this is the case, our word is a palindrome.

-

Now, we can assign a state for every step of our

strategy

-

We will be in state 1 when we scan the first half of the

word.

-

We will be in state 2 when we scan the second half of the

word.

-

We will be in the final state when we have finished

scanning the whole word and the reverse of the first half is

equal to the second half of the word.

-

Let’s think about our transitions for each of our

states:

-

We will stay in state 1 and keep adding the current symbol

to the stack if we haven’t reached the middle of the

word.

-

We will make a transition to state 2 when we have reached

the middle of the word, without changing the stack.

-

We will stay in state 2 and keep popping the current symbol

from the stack as long as the current symbol is equal to the

symbol of the top of the stack.

-

We will make a transition to the final state if our stack

contains only the initial stack symbol, and remove the

initial stack symbol.

-

However, there is still one problem: Palindromes with an

odd number of symbols, such as “abdba”. How to

deal with them? We can throw away the symbol in the middle!

For this example, it means that we throw away

“d” and we will check the remaining string

“abba”. We have already defined a PDA for

checking palindromes with an even number of symbols

above.

-

In order to integrate the “throw away the middle

symbol” action into our existing PDA we will add one

transition from state 1 to 2 where we consume a symbol but

don’t change the stack contents.

-

Now we have arrived at our final PDA, which is equal to the

image above.

-

Some questions you might ask yourself are:

-

How does the PDA know when it has reached the middle of the

word?

-

How does the PDA know that it has arrived at the middle

symbol of an odd string, which can be thrown away?

-

The answer is that the PDA is going to guess. The PDA can

do this because it is non-deterministic, similar to NFAs we

have seen before. In other words, the PDA can take any of

the transitions that are possible.

-

If we have a palindrome as a string and we process this

palindrome, there will be one combination of transitions

where the PDA will go into the final state at the end.

-

Let’s simulate our PDA on the string

“abcba”, which is a palindrome:

The PDA has reached state 2 and will therefore

accept.

-

Let’s simulate our PDA on the string

“abcde”, which is not a palindrome:

X at this point our computation dies because there is no

transition we could take (d ≠ b). Therefore the PDA will

reject.

X at this point our computation dies because there is no

transition we could take (d ≠ b). Therefore the PDA will

reject.

Context free grammars

-

A CFG, consisting of terminal and non-terminal symbols, is

used to generate strings (sentences) by following its

production rules.

-

The terminal symbols are the symbols which will form the

string we are going to generate.

-

The non-terminal symbols are going to be replaced by other

symbols. These other symbols can be terminals, non-terminals

or a mix of both.

-

There exist so called “productions” which tell

us how to replace the non-terminal symbols.

-

We have to keep replacing non-terminal symbols until we

arrive at a string that consists solely of terminal symbols.

This process is called “derivation”.

-

The final string is also called a sentence.

-

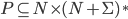

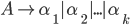

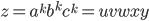

Formally, a CFG is a quadruple

-

is a finite set of nonterminal symbols

is a finite set of nonterminal symbols

-

is a finite set of terminal symbols

is a finite set of terminal symbols

-

are the productions

are the productions

-

is the start nonterminal

is the start nonterminal

-

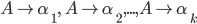

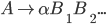

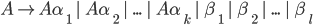

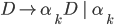

To define production rules …

-

we will use the shorthand

to mean

-

we will use the shorthand

to mean

-

To express that

is derivable from

is derivable from  we will write

we will write  or

or

-

Without

: This means that given the string

: This means that given the string  , we have applied some production rules and arrived at

string

, we have applied some production rules and arrived at

string

-

With

: This means that given the string

: This means that given the string  , we have applied exactly

, we have applied exactly  production rules and arrived at string

production rules and arrived at string

-

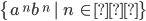

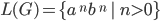

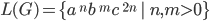

The language generated by a CFG G is the set of all its

sentences, i.e. the set of all strings that can be generated

by using its production rules.

-

The language generated is going to be equal to the set of

all strings x that are derivable from the start

non-terminal.

-

We can apply as many production rules as we want, as long

as we end with a string (sentence) that solely consists of

terminals.

-

This language L(G), generated by some CFG G, is called

context-free (CFL).

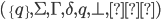

-

If it’s all still a bit too confusing for you, look

at the table below, where we convert context-free languages

into context-free grammars:

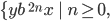

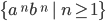

|

Language

|

Converting it to a grammar

|

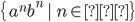

|

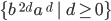

|

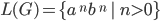

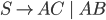

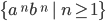

-

Every string with the same number of

a’s followed by the same number of

b’s will be in the language.

-

We have shown that this language is not

regular, however, it is context free as we

can come up with a CFG for it.

-

The CFG is

-

We can produce 0 a’s and b’s,

i.e. the empty string, by applying only the

second production rule:

-

We can produce 1 a and 1 b by applying the

first production rule once and the second

production rule last:

-

We can produce 2 a’s and 2 b’s

by applying the first production rule 2

times and the second production rule

last:

-

Therefore, we can produce

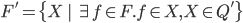

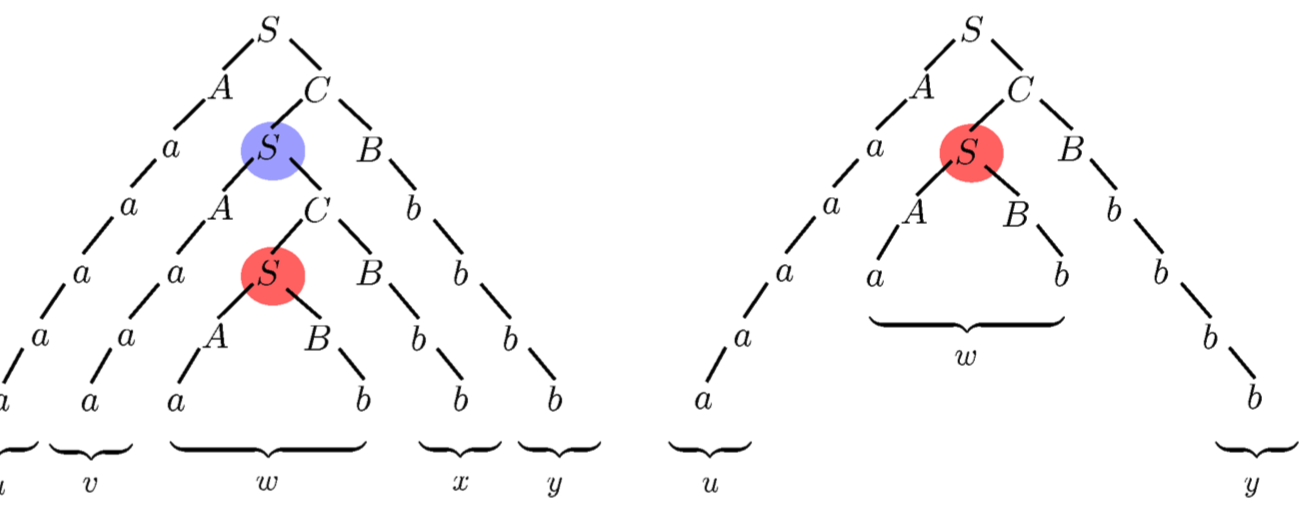

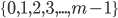

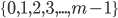

a’s and a’s and  b’s by applying the first