Social Computing Techniques (COMP3208) Notes Document

Introductory Lecture 4

Module Overview 4

Coursework 5

Additional Links and Resources 6

Recommender Systems 7

Where to Find What 7

What is Social Computing? 7

What is Crowdsourcing? 8

Crowdsourcing Examples 9

CAPTCHA 9

NoiseTube 9

Amazon Mechanical Turk 10

Some other examples 10

Recommender Systems 11

Online Advertising Auctions 11

Summary 13

Past & Present 13

Terminology 13

History of Social Computing 13

1714 - Longitude Prize 13

1791 - Gaspard de Prony’s Logarithmic Tables 14

Wisdom of the Crowds 14

1906 - Sir Francis Galton 15

1984 - Space Shuttle Challenger Disaster 16

Early Social Computing Systems 17

Commercialisation 20

Web 2.0 22

Rise of Crowdsourcing 23

Crowdsourcing for Social Good 24

Crowdsourcing and Machine Learning 25

Why Do We Still Use Social Computing? 25

Features of Computing Systems 27

Recommender Systems 28

Learning Outcomes 28

Resources 28

What are Recommender Systems? 29

Examples of Recommender Systems 29

Why use Recommender Systems? 32

Potential Issues 33

Paradigms of Recommender Systems 33

Content-based 33

Knowledge-based 34

Collaborative Filtering 35

Hybrid 36

Pros and Cons of Different Approaches 36

Similarity Coefficients 37

“Cleaning” Features 37

Calculating Similarity 39

Exercise 39

Self-Test Question Answers 40

Question 1 40

Question 2 40

Question 3 41

User-Based Collaborative Filtering 41

Similarity Measure (for Ratings) 42

Pearson Coefficient Example 43

Neighbourhood Selection 44

Prediction 44

Improving Prediction 45

Produce Recommendation 46

Item-Based Collaborative Filtering 46

Similarity Measure (for Ratings) 47

Adjusted Cosine Similarity Example 48

Prediction 49

Exercise 2 49

Alternative Approaches 51

Simple Approach: Slope One 51

Types of Ratings 52

Example: Items Bought Together 52

Evaluating Recommender Systems 53

Correct and Incorrect Classifications 54

Metrics 55

Precision 55

Recall 55

Accuracy 55

Rank Score 56

Error Measures 56

Online Auctions 57

Where Are Auctions Used and Why? 57

Sponsored Search 57

Display Advertising 58

Why Use Auctions? 59

Auction Design 59

Auction Types 60

English Auction 60

Dutch Auction 61

Sealed-Bid First Price Auction 61

Sealed-Bid Second Price Auction (Vickery Auction) 62

Dominant Strategy 62

Formal Model and Utility 63

Computing Expected Utilities 64

Reputation Systems 65

Trust Issues… 65

Terminology 65

Cherries and Lemons 65

The Lemon Problem 66

Moral Hazard 66

Trust on the Web 66

The Solution? 67

Reputation vs. Recommender Systems 67

Design Considerations 67

Existing Systems 67

Reputation Value 69

Measuring Confidence 69

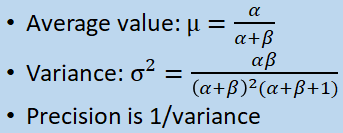

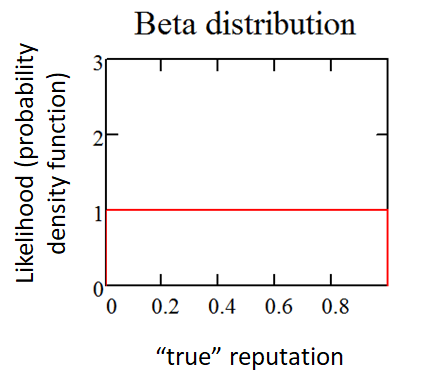

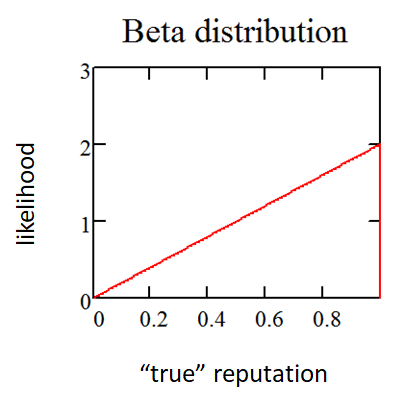

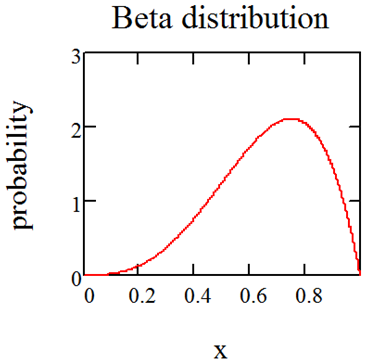

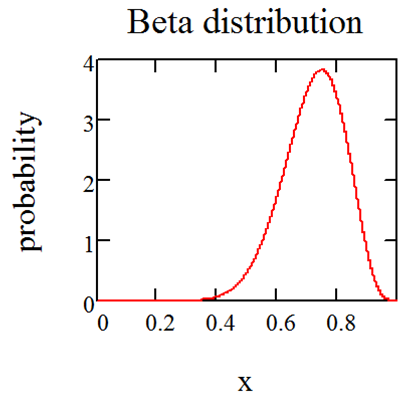

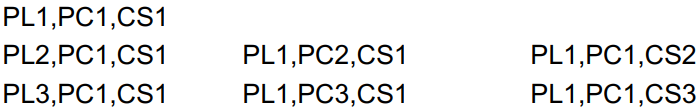

Probabilistic Approach 70

Using the Beta Distribution 70

Evaluating Reputation Systems 72

Issues 72

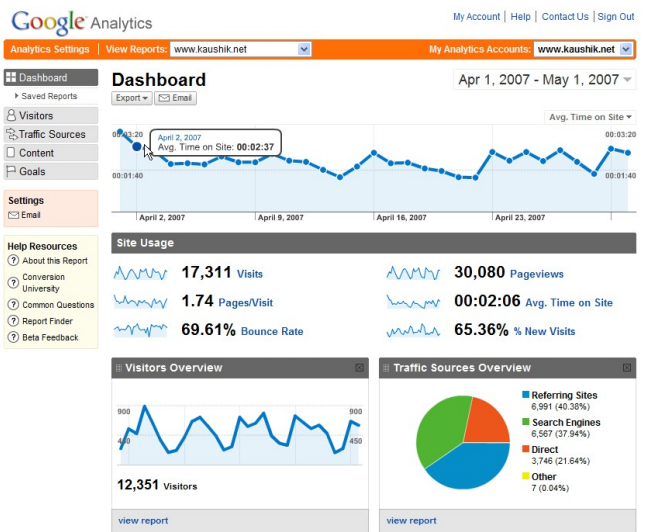

Financial Incentives 74

Experimental Design 75

Human Computation 75

Modern Recommendation Systems 75

Incentives in Crowd 75

Rank Aggregation 75

Experts Crowdsourcing 75

Revision Lectures 75

tl;dr 75

Introductory Lecture 75

Past & Present 76

Recommender Systems 79

Online Auctions 90

Reputation Systems 92

Additional Lectures 96

Introduction to Coursework 96

Assignments 96

Useful Resources 97

Forming Groups (of 2 or 3) 97

Task Datasets 97

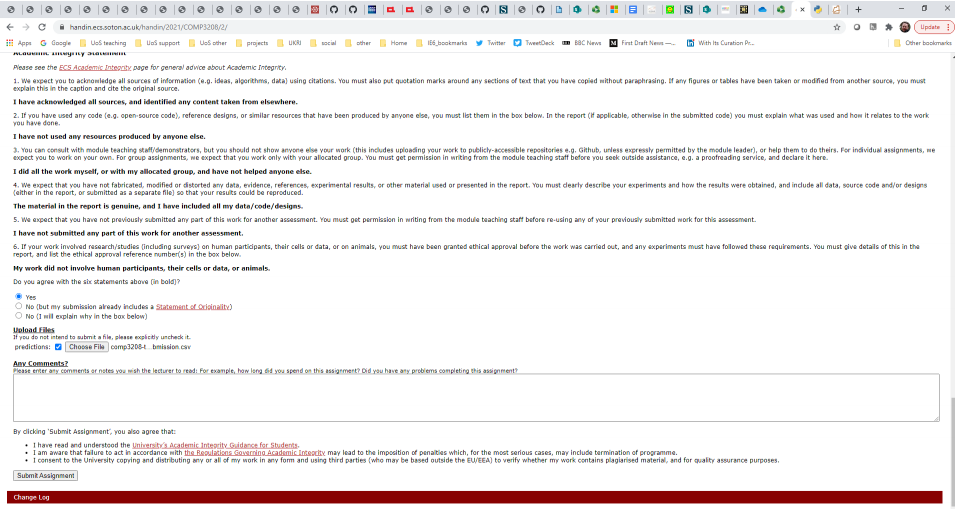

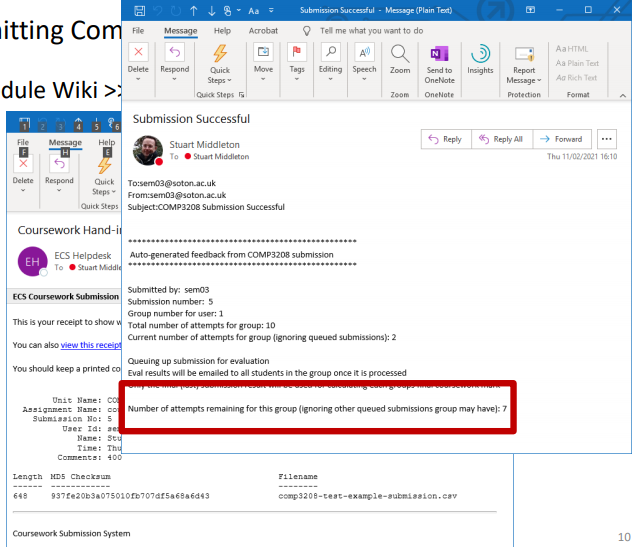

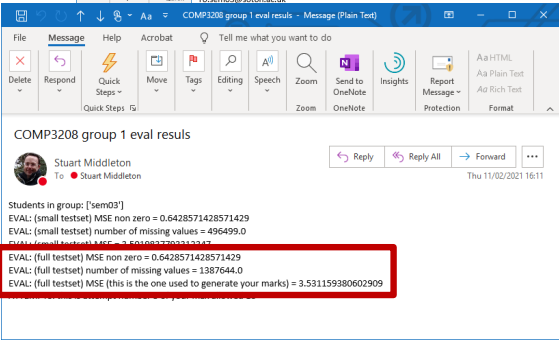

Submitting Computed Ratings 98

Written Report 100

Academic Integrity 100

Coursework Surgery Sessions 101

Coursework “Surgery” 1 101

Coursework “Surgery” 2 101

Coursework “Surgery” 3 101

Coursework “Surgery” 4 101

Guest Lecture: Davide Zilli from Mind Foundry 101

Guest Lecture: Mikhail Fain from Cookpad 101

Introductory Lecture

Module Overview

The primary module aim is to investigate how online collective intelligence and “crowd

wisdom” is used in various business and research areas, and the underlying technologies/algorithms used in practice.

Main topics:

-

Crowdsourcing

-

Recommender Systems

-

Reputation Systems

-

Experimental Design and Web Analytics

-

Online Advertising Auctions

-

Rank Aggregation

-

There’ll also be some guest lectures from

industry/academia discussing applications; confirmed for

2020-21 (if you’re reading this later, RIP):

-

Mikhail Fain (Cookpad: Food tech company)

-

Davide Zilli (Mind Foundry: Oxford’s spin-off

company)

Learning outcomes:

-

demonstrate knowledge of social computing technology

applications, e.g. crowdsourcing, recommender systems, online

auctions

-

describe the most important techniques and issues in designing, building

and modelling social computing systems

-

set up social computing experiments and analyse the results with a scientific approach

-

tl;dr social computing

There is a strong applied component to the module (i.e. learning how shit is

actually used). Note that it’s not about social networks (that’s for COMP6250: Social Media and Network

Science).

Assessment:

-

40% coursework

-

60% final exam (hence I’m making these notes, bruh I’m not

getting paid for this)

Lecturers:

-

Dr. Sarah Hewitt

-

Dr. Stuart Middleton

-

Dr. Jie Zhang

Coursework

-

Done in pairs (email sem03@soton.ac.uk your team choices,

subject 'COMP3208 coursework teams')

-

Aim is to design, implement, and evaluate a recommender system (to predict ratings for a combination of user and

items for which no ratings are provided)

-

Medium size dataset will be provided consisting of user ratings for items

(~20 million entries)

Additional Links and Resources

Recommender Systems

-

relevant chapters: 1, 2, 4 and 7 (and possibly 3 for the

coursework)

Where to Find What

-

MS Teams: live lectures, interactive sessions,

Q&As

-

Module page: general module info, schedule, slides, other

materials

-

Blackboard: self-test exercises, final assessment

What is Social Computing?

It’s a widely used concept with no single definition, but

according to HP Research Labs:

"Social Computing Research focuses on methods for harvesting the collective intelligence of groups of people in order to realize greater value from the interaction between users and information”

(basically, harvest info from users to learn about them and

their interactions with #content)

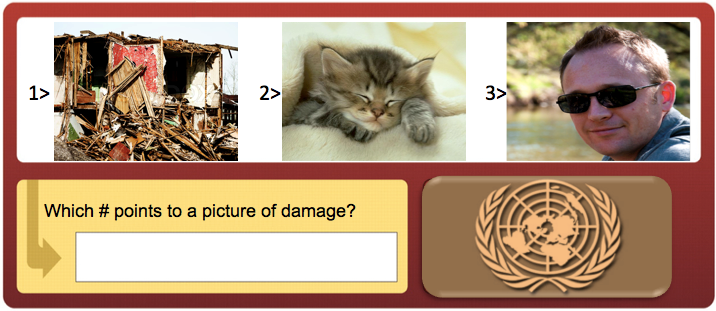

ECS has done various research in social computing, e.g.

enhancing crowdsourcing techniques for sustainable disaster

relief; here’s a flex:

What is Crowdsourcing?

Dictionary definition of crowdsource: “obtain (information or input into a particular task or project)

by enlisting the services of a number of people, either paid or unpaid, typically via the Internet”.

(Basically, get people online to do shit for you)

Topics to be investigated include:

-

What type of problems are suitable for crowdsourcing?

-

How to divide a complex task into many simple tasks that

can be done with no expertise and no/little need for

additional technology?

-

How to design appropriate (e.g. monetary) incentives?

-

How to ensure the quality, e.g. using reputation and/or

voting systems?

Crowdsourcing Examples

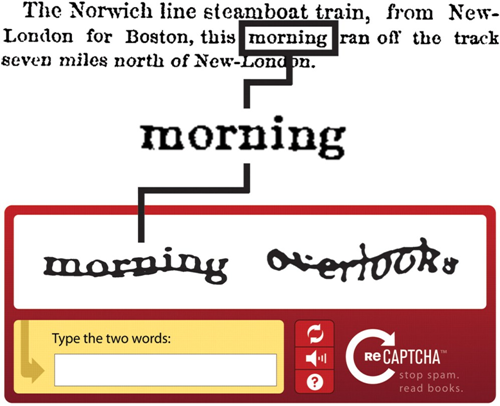

CAPTCHA

-

We’ve all done it, we all hate it

-

"Completely Automated Public Turing test to tell

Computers and Humans Apart" - a type of challenge-response test used in computing to determine whether or not the

user’s human

-

Most common type of CAPTCHA (displayed as Version 1.0)

invented in 1997 (oldskool); it requires the user to correctly evaluate and enter a sequence of letters or

numbers perceptible in a distorted image displayed on the

screen

-

As it’s administered by a computer, in contrast to

the standard Turing test that is administered by a human, a

CAPTCHA is sometimes described as a reverse Turing test

-

However it’s received many criticisms, especially from people with

disabilities, but also from other people who feel that their everyday

work and shitposting is slowed down by distorted words that

are difficult to read (it takes the average person ~10

seconds to solve a typical CAPTCHA - you’re too

slow!)

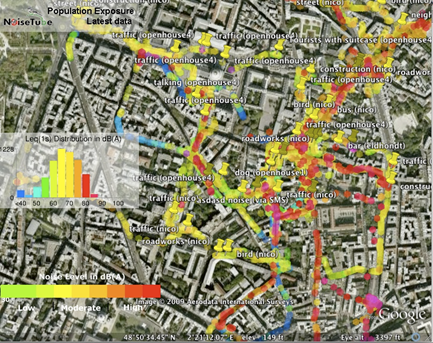

NoiseTube

-

An example of citizen sensing

-

A research project started in 2008 at the Sony Computer

Science Laboratory in Paris in collaboration with Vrije

Universiteit Brussel

-

Goal of the project is to turn smartphones into mobile

noise level meters to enable citizens to measure their exposure to noise in their

everyday environment, and participate in the collective noise mapping of their city or neighborhood (so people can snitch

on your free parties)

-

Software platform that consists of a mobile application

(available for Android and iOS ) and a website (visit

www.noisetube.net and register an account) where users can

submit their noise measurements to visualise and share them

with others

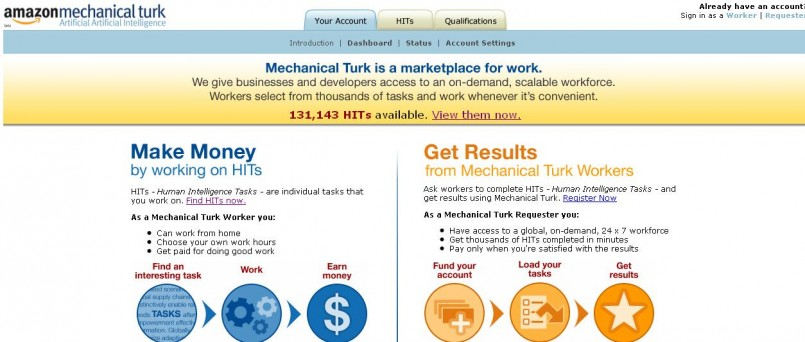

Amazon Mechanical Turk

-

An example of human computation (so Jeff Bezos can make even more money)

-

A crowdsourcing website for businesses to hire remotely located

"crowdworkers" to perform discrete on-demand tasks that computers

are currently unable to do

-

can include anything from conducting simple data validation

and research, to more subjective tasks like survey

participation and content moderation

-

On the Amazon website, “there are still many things

that human beings can do much more effectively than

computers” (but robots will take over someday)

Some other examples

-

Citizen science: e.g. Zooniverse, EteRNA

-

Citizen journalism: e.g. Ushahidi

-

Others: e.g. Red balloon challenge

Recommender Systems

-

A subclass of information filtering system that seeks to predict the "rating" or

"preference" a user would give to an item

-

They are primarily used in commercial applications

-

Collaborative filtering: use collective tastes of many

users to produce personalised predictions about the interests of

individual users

-

e.g. "people who bought these items also

bought.." or "people who listened to these songs

also listened to..." or “people who read these

notes also lost the will to live... ” etc.

-

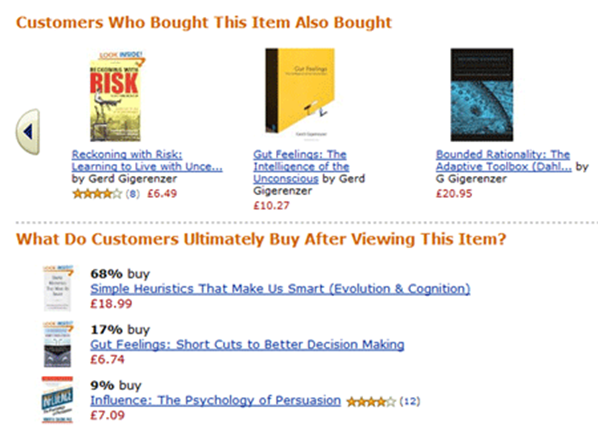

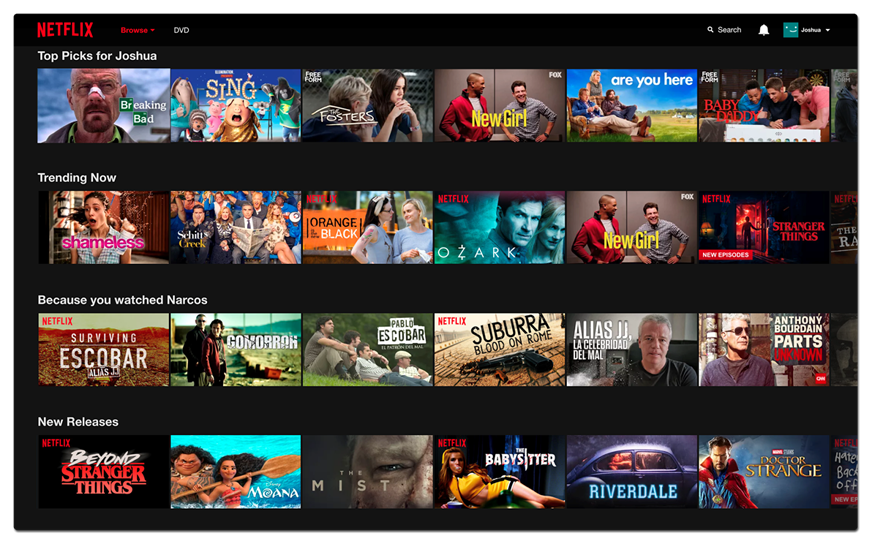

Sites like Amazon and Netflix use recommender systems for

their content, using item-to-item collaborative filtering,

which scales to massive data sets and produces

“high-quality” (I don’t think

they’re very good tbh) recommendations in real

time

Topics to be investigated include:

-

What are the technologies for collaborative

filtering?

-

How to implement a recommender system using medium size

data sets?

-

How to evaluate and compare the effectiveness of a

recommender system?

Online Advertising Auctions

-

Many websites rely on advertising for their income,

especially those offering free content (RIP GeoCities)

-

These ads are determined through very fast auctions,

where advertisers bid on users the moment they enter a

website. Instead of having a complex algorithm for matching

advertisers to users, an auction-approach is used

-

There are two main types of online advertising:

-

sponsored search/keyword advertising

-

search ads only appear in search engine results. Advertisers select a list of keywords that are relevant

to their company or products, and can bid to have their ad

show when someone searches for those words or phrases

-

in sponsored search, advertisers bid on keywords entered by the user

-

display ads are the banners or text you see on websites that sell space to advertisers. These ads used to be

the result of direct relationships between sites and

advertisers (and in some cases, they still are)

-

In display advertising, advertisers bid based on user profile (determined by tracking users through cookies)

-

Remember, you can never escape the corporate machine

Topics to be investigated include:

-

What type of companies play a role in online

advertising

-

What are the different auctions and how do they work?

-

What are the characteristics of the auctions used in online

advertising, and their advantages and disadvantages

-

How should advertisers bid in the auctions.

-

Who learns the user profiles, and how do they do it?

Summary

-

The module focuses on social computing techniques (duh), with 6 main topics (not including social networks, so get off TikTok and

revise)

-

The common theme is the use of collective intelligence (a.k.a the wisdom of the crowds) for research and business applications

-

Remaining topics to look at:

-

Reputation Systems

-

Experimental Design

-

Rank Aggregation

Past & Present

Terminology

Before diving into the lecture, some useful terms:

-

Collective Intelligence - “intelligence that emerges from the

collaboration, collective efforts, and competition of many

individuals”

-

Social Computing - “methods for building computational systems

that harness collective intelligence”

-

Human Computation - “outsourcing computational steps (microtasks)

that are difficult for machines to humans”

-

Crowdsourcing -“obtaining services, ideas or content from a large

group of people through an open call (typically to an online

community) rather than from traditional employees or

suppliers”

History of Social Computing

1714 - Longitude Prize

-

Back in 1714, the British Government offered, by Act of

Parliament, £20k (over £2m today) for a solution

which could find longitude to within half a degree

(equivalent to 2 minutes of time)

-

A group later known as the Board of Longitude was set up to

assess submissions and offer rewards

-

This was won by John Harrison, who invented the revolutionary marine chronometer

-

The clock in the pic above is displayed in the Science

Museum in London (after lockdown you can go check it out if

you want)

-

So why are we talking about clocks? Because this case is

seen by some as the first example of crowdsourcing

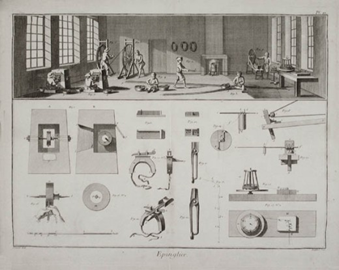

1791 - Gaspard de Prony’s Logarithmic Tables

-

In 1791, de Prony worked on producing logarithmic and trigonometric tables for the French Cadastre, as the French National

Assembly (after the French Revolution) wanted to bring uniformity to measurements and standards used throughout the nation.

-

Inspired by Adam Smith's Wealth of Nations, de Prony divided up labor into three levels, bragging that he "could manufacture logarithms as

easily as one manufactures pins." (basically he wanted

to run a logarithm sweatshop)

-

The three levels were:

-

First level: 5 or 6 high-ranking mathematicians with sophisticated

analytical skills (to choose analytical formulas, specify

the number of decimals and numerical range the tables were

to cover, etc.)

-

Second level: 7 or 8 lesser mathematicians (to calculate pivotal values

with the formulas, sets of starting differences, prepare

templates for the human computers, etc.)

-

Third level: 60-90 “human computers” (the first code monkeys, if you will), many of whom

were out-of-work hairdressers (blame the aristocrats) with

rudimentary arithmetic knowledge, to carry out the most

laborious and repetitive tasks

-

This is actually argued to be the origin of the word

“computer”, fun fact

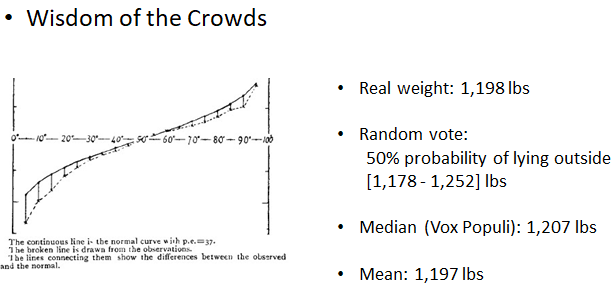

Wisdom of the Crowds

As mentioned before, the wisdom of the crowd is the collective opinion of a group of individuals rather than that of a single expert.

1906 - Sir Francis Galton

-

This guy was a Victorian statistician, progressive, polymath, sociologist, psychologist,

anthropologist, eugenicist, tropical explorer, geographer,

inventor, meteorologist, proto-geneticist, and

psychometrician (tl;dr he did a lot of shit)

-

He invented stuff like:

-

The first weather map

-

Fingerprints

-

Efficacy of prayer

-

Nature versus nurture

-

Core to statistical analysis is the idea that measurements vary (no shit); they have a central tendency (i.e. mean), and a

spread around this central value (i.e. variance). Galton

came up with a measure to quantify normal variation: standard deviation

-

So why is there a picture of an ox above? Well ,in 1906,

Galton visited a livestock fair, and an ox was on display,

where the villagers were invited to guess the animal's

weight (its real weight was 1198 lbs) after it was slaughtered and dressed. Nearly 800 people

guessed, and Galton studied their individual entries after

the event.

-

Galton stated "the middlemost estimate expresses the

vox populi (i.e. voice of the people), every other estimate

being condemned as too low or too high by a majority of the

voters". He reported that the median was 1,207 pounds; to his surprise, this was within 0.8% of the real weight measured by the judges

-

Soon afterwards, in response to an enquiry, he reported the mean (of the guesses) was 1,197 pounds, but didn’t comment on its improved accuracy. Recent

archival research has found some slips in transmitting

Galton's calculations to the original article in the

journal “Nature”: the median was actually 1,208

pounds, and the dressed weight of the ox 1,197 pounds, so the mean estimate had zero error

-

Thus, had Galton known the true result, his conclusion on

the wisdom of the crowd would’ve been more strongly

expressed

-

The same year, Galton suggested in a letter to Nature a

better method of cutting a round cake by avoiding making

radial incisions… but that’s another bedtime

story

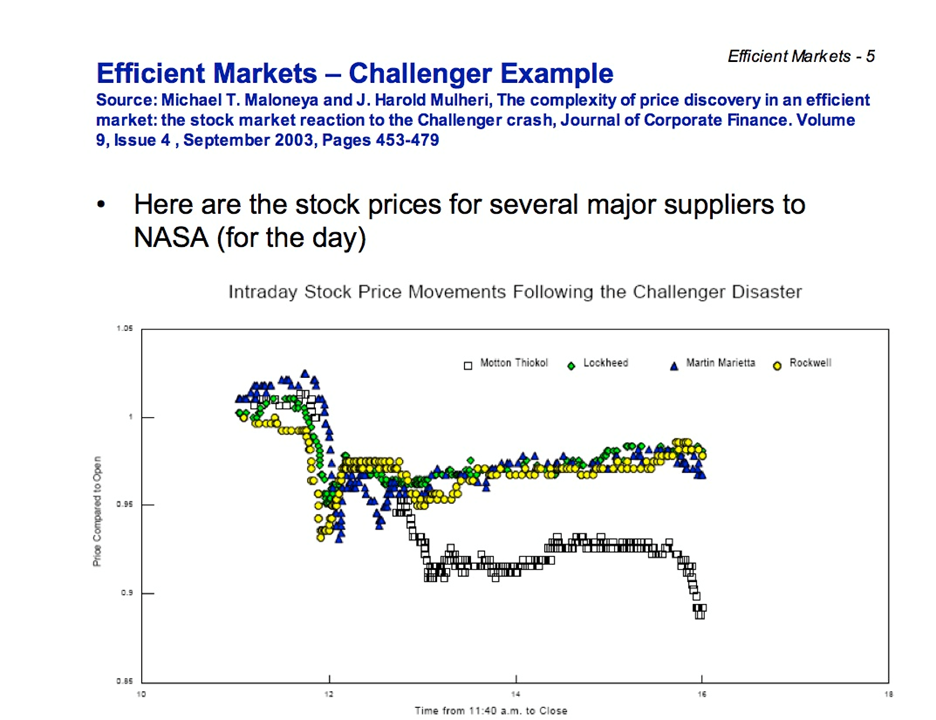

1984 - Space Shuttle Challenger Disaster

You may be aware of the tragic Challenger disaster in 1986,

where the space shuttle exploded 73 seconds after lift-off: https://www.youtube.com/watch?v=j4JOjcDFtBE

As you can imagine, the disaster had a big impact on the stock market at the time, and particularly the stock prices for NASA’s suppliers, which can be seen in the graph below:

NASA’s 4 main suppliers were:

-

Rockwell International: built the shuttle and its main engines

-

Lockheed: managed ground support

-

Martin Marietta: manufactured the ship's external fuel tank

-

Morton Thiokol: built the solid-fuel booster rocket

Early Social Computing Systems

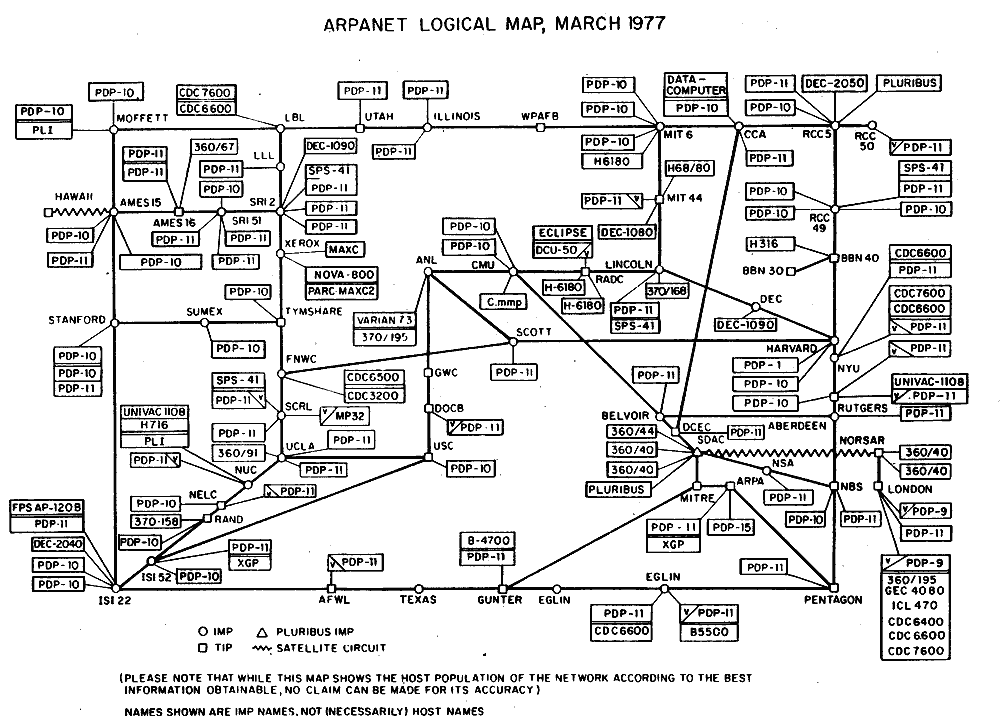

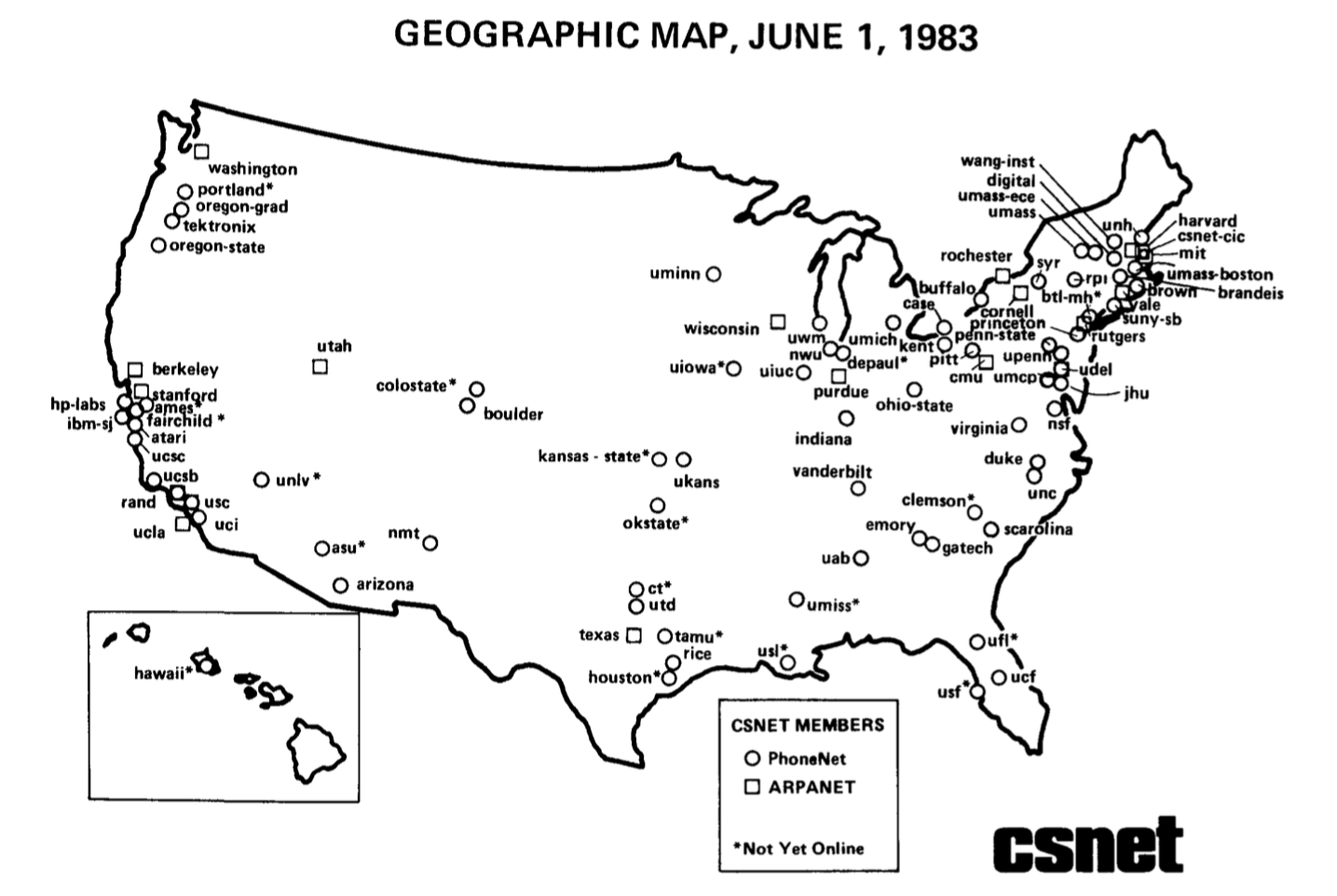

-

In the 80s the rise of the internet came about, as systems expanded worldwide and became more accessible to

people

-

1981: ARPANET was expanded in 1981 as the National Science

Foundation (NSF), funded by the Computer Science Network

(CSNET)

-

1982: Internet Protocol Suite (TCP/IP) was standardized, allowing worldwide proliferation of

interconnected networks

-

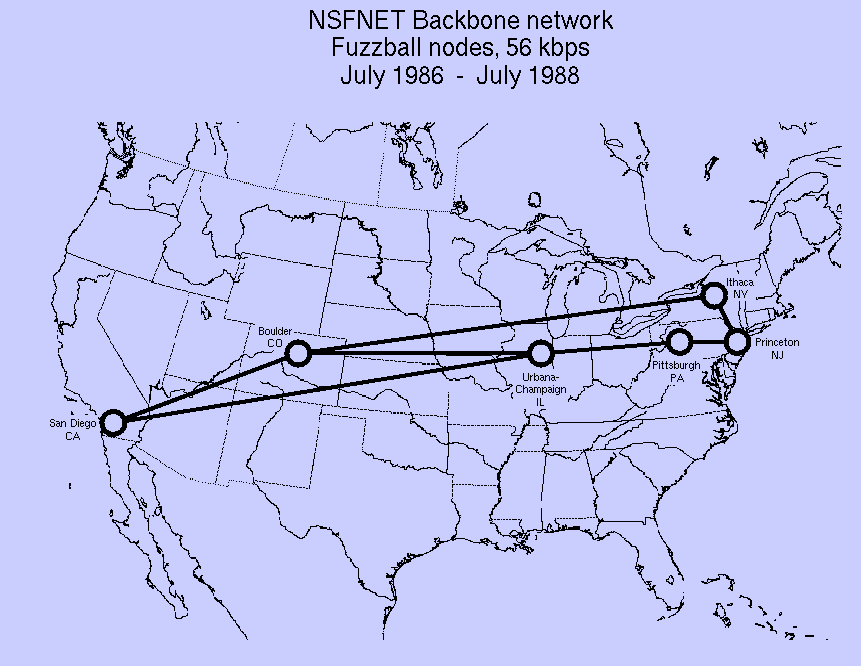

1986: TCP/IP network access expanded again when the National

Science Foundation Network (NSFNet) provided access to supercomputer sites in the US for

researchers (first at speeds of 56 kbit/s, later at 1.5

Mbit/s and 45 Mbit/s)

-

1988–89: NSFNet expanded into academic and research organizations

in Europe, Australia, New Zealand and Japan

-

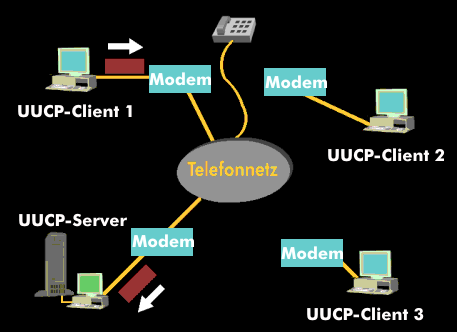

Although other network protocols such as UUCP had global reach well before this time, this marked the beginning of the Internet as an

intercontinental network

-

1989: Commercial Internet service providers (ISPs) emerged in the US and Australia (then ARPANET was

decommissioned in 1990, RIP)

-

Then in the 90s, some other pretty revolutionary social computing systems came into existence:

-

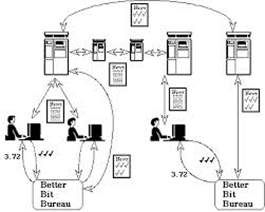

In 1992, Xerox developed Tapestry, an experimental collaborative email filtering system, based on the belief that information filtering could be

more effective with humans involved in the filtering

process

-

It was designed to support both content-based filtering and collaborative filtering; people could record their reactions

(called “annotations”, accessible by other users’ filters) to

documents they read

-

It was intended to serve both as a mail filter and

information repository, comprising an indexer, document

store, annotation store, filterer, little box, remailer,

appraiser and reader/browser

-

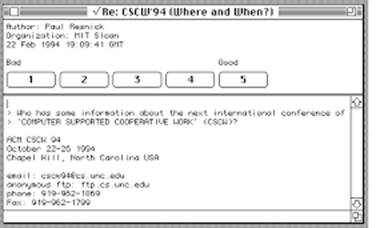

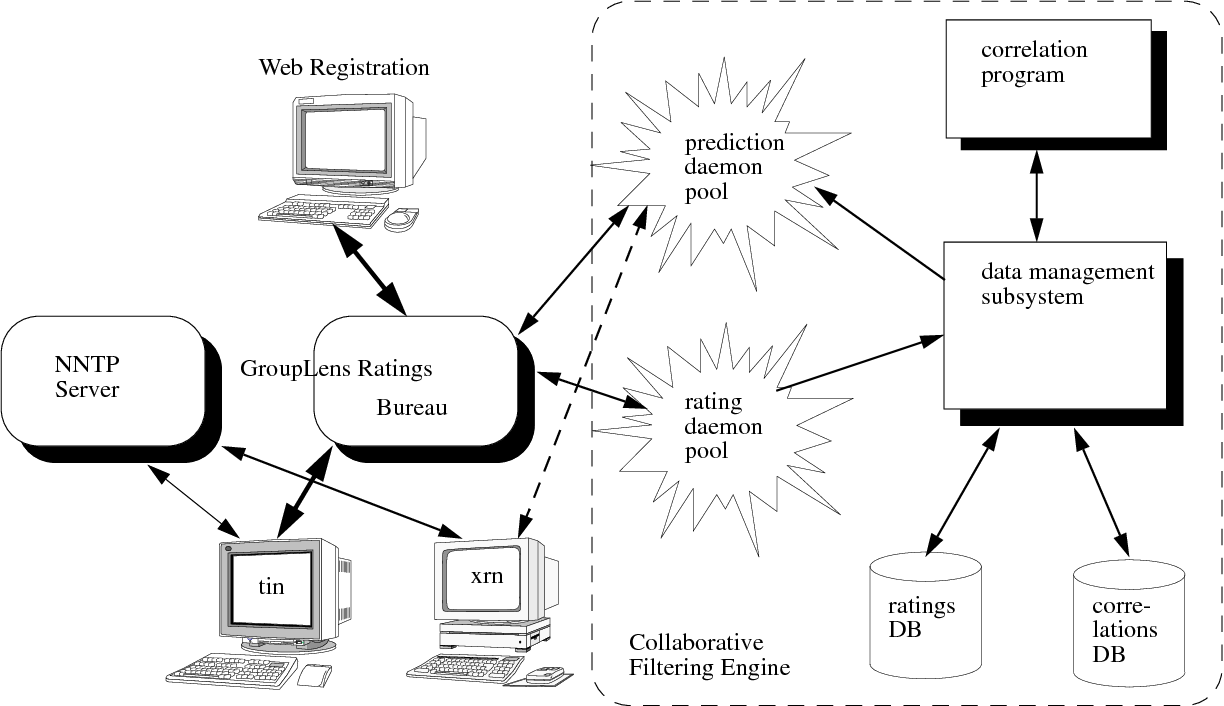

Also in 1992, the GroupLens lab at the University of Minnesota was set up, one of

the first to study automated recommender systems; they wrote:

-

the GroupLens recommender

-

a USENET article recommendation engine (USENET was an oldbie internet discussion group

network)

-

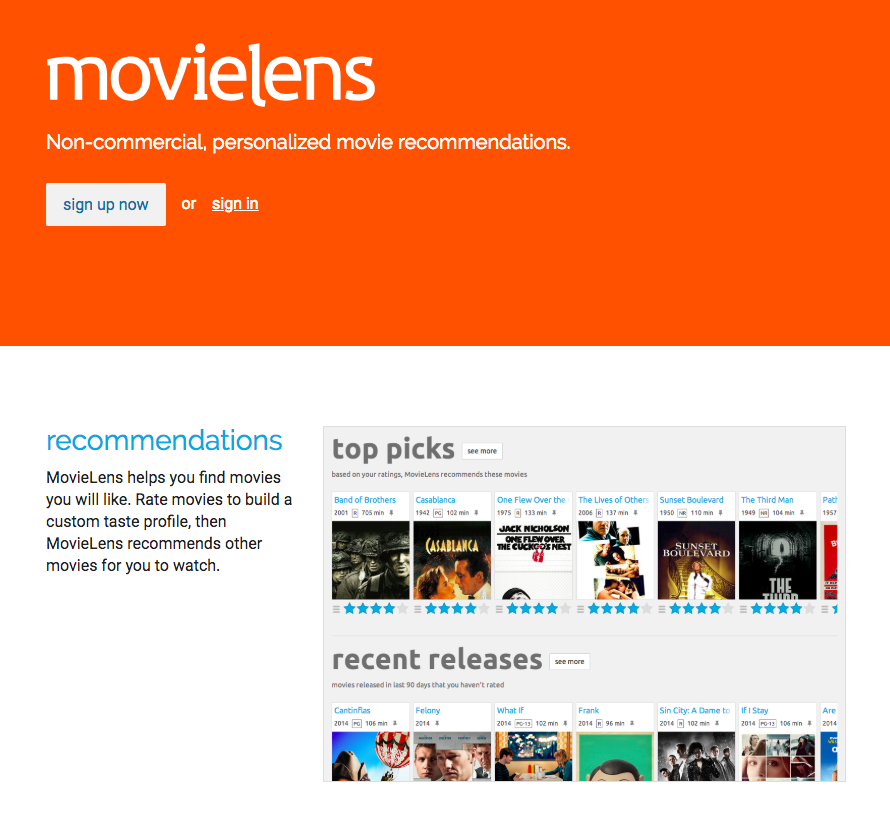

MovieLens (1997), a popular movie recommendation site used to

study recommendation engines, tagging systems, and user

interfaces

-

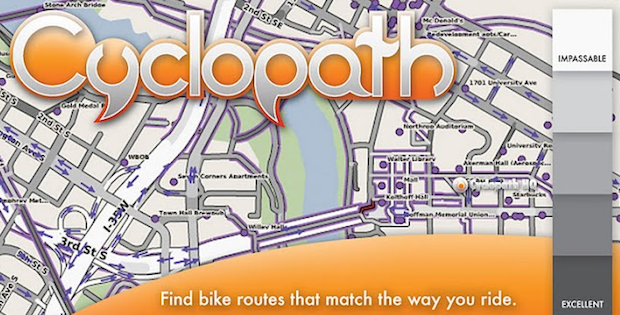

They also gained notability for work studying open content

communities such as Cyclopath, a geo-wiki used in the local area to help plan the

regional cycling system

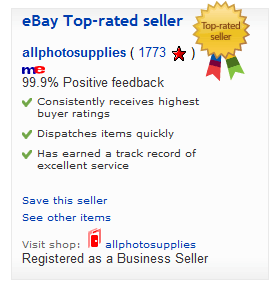

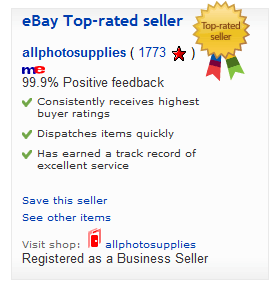

Commercialisation

Overtime. such systems became more commercial, and if you

haven’t been living under a rock you probably know:

-

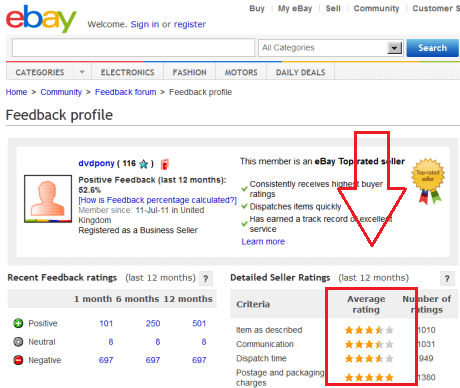

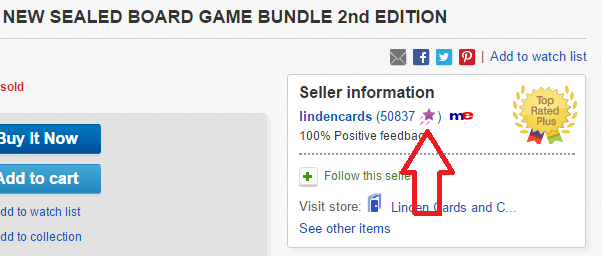

eBay (1995), which uses a public reputation system to help users be able to trust sellers when buying

stuff

-

eBay uses a feedback and ratings policy, and on a product page, an information box gives relevant history about the user selling the

product

-

Amazon (1997), which uses a collaborative filtering service called BookMatcher, which makes book recommendations to patrons

-

Products are recommended to a user based on the ratings given by previous users, and Amazon’s patent includes a technique to automatically update the database of recommended

items based on the underlying database of books available.

It also uses statistical analysis to validate recommendations before

offering them

-

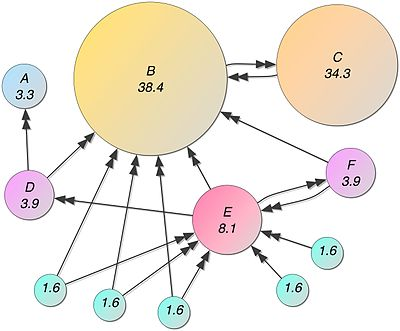

Google (1998) - a PageRank built on collective intelligence, the major difference with the Google algorithm being that PageRank evaluates the link. When a page is linked by

another page with “authority”, this gives more relevance to the page itself; a page with

qualitatively valuable information actually needs months to

acquire some “authority”.

-

Since 2000, it uses AdWords, which uses auctioning to rank pages. The position of an

ad in the results for a particular search, called an

“Ad Rank”, is calculated via bid multiplied by quality score.

-

Here’s a flex of Google’s revenue, if you

care:

-

$59.06 bn revenue in 2014, ~90%

-

$67.39 bn in 2015, ~90%

-

$79.38 bn in 2016, ~89%

-

$95.38 bn in 2017, ~86%

-

$116.32 bn in 2018, ~85%

Web 2.0

Web 2.0 (also known as Participative/Participatory and Social

Web), coined in 1999, refers to sites that emphasize:

-

user-generated content

- ease of use

-

participatory culture

-

interoperability (i.e., compatible with other products, systems, and

devices)

Here are a bunch of sites (that you’ve probably heard of

unless you’ve just immigrated from Pluto) that boomed in

the 00s built on a crowd-generated content model:

-

2000: Threadless - an e-commerce site where designs are created by and

chosen by an online community of artists (in some way like

an OG RedBubble). Each week, about 1,000 designs are

submitted online and are put to a public vote.

-

2000: iStockPhoto - a royalty free, international micro stock

photography provider, which offers millions of photos,

illustrations, clip art, videos and audio tracks. Artists,

designers and photographers worldwide contribute their work

(like Hide The Pain Harold, does anyone remember that meme?)

to iStock collections in return for royalties

-

2001: Wikipedia - a free online encyclopedia, created and edited by volunteers around the world and hosted by the Wikimedia

Foundation (and where I’m stealing most of the stuff

in this document from)

-

2004: Flickr - an image hosting and video hosting service, as well as an

online community. It is (or was, does anyone still use it?)

a popular way for amateur and professional photographers to

host high-resolution photos

-

2005: YouTube

-

Social Media (Myspace, Facebook, Twitter)

-

Do I even need to explain the last two points, you know

what this shit is

Rise of Crowdsourcing

As mentioned before (doesn’t hurt to recap), coined in

2006 by Jeff Howe in Wired Magazine, crowdsourcing is to

“obtain (information or input into a particular task or project)

by enlisting the services of a number of people, either paid or unpaid, typically via the Internet”.

(Basically, get people online to do shit for you)

Here are some notable examples of crowdsourcing

initiatives:

-

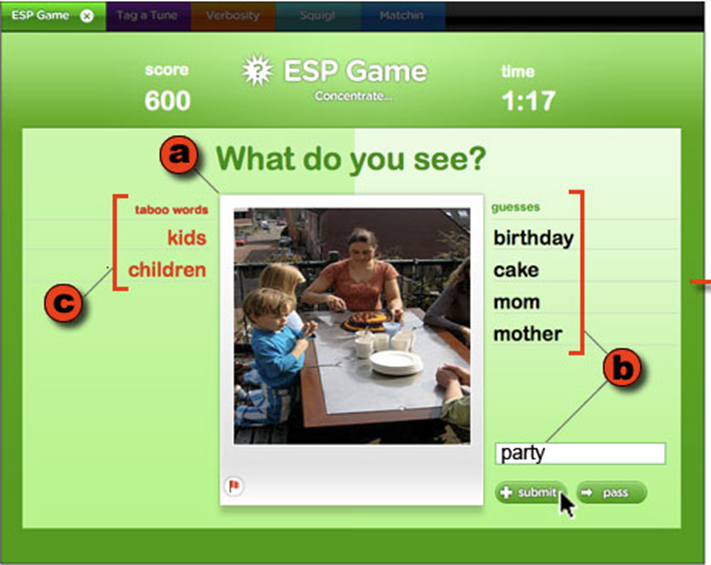

2004: ESP Game - this was a human-based computation game developed to address the problem of creating

difficult metadata. The idea was to use the computational power of humans to perform a task that computers can’t

(originally, image recognition) by packaging the task as a

game

-

It was originally conceived by Luis von Ahn of Carnegie

Mellon University, but Google bought a licence to create

their own version of the game in 2006, Google Image Labeler, to return better search results for its online

images

-

2005: Amazon Mechanical Turk - I talked about this shit here, as of 2011 it had half a million users

Crowdsourcing for Social Good

-

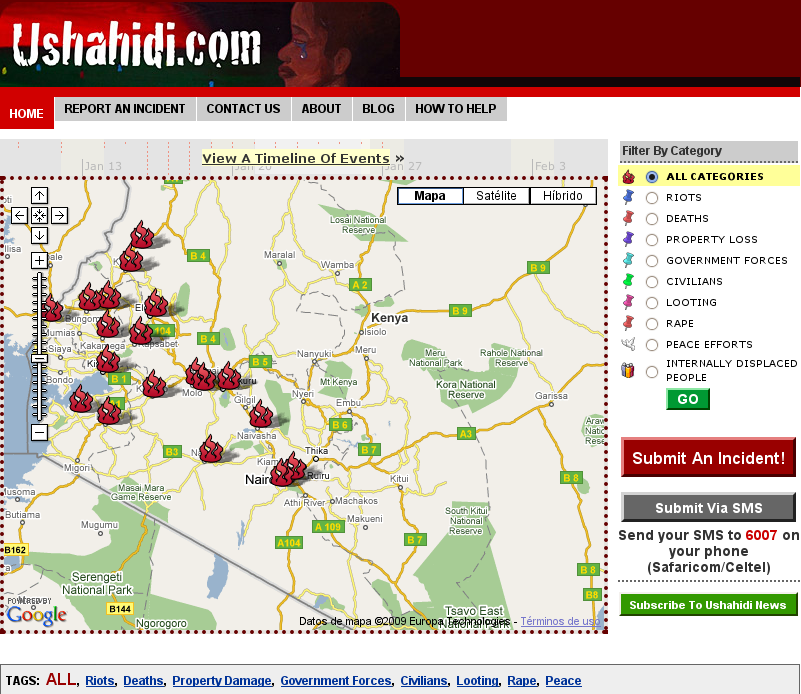

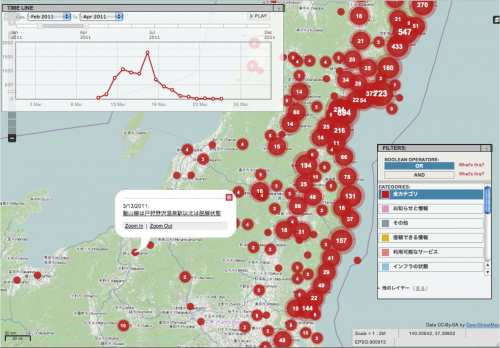

2007, 2010: Ushahidi - created in 2007, Ushahidi (meaning

“testimony” in Swahili), is both the name of a

Kenyan not-for-profit civic tech company and of a

crowdsourcing platform allowing users to submit violence reports and mapping of

events.

-

It was notably used during the 2007–08 Kenyan crisis, and the 2010 Haiti earthquake; here’s a video of a visualisation of the response

to the earthquake by the OpenStreetMap community: https://www.youtube.com/watch?v=BwMM_vsA3aY

-

Also, a 4636 emergency shortcode was set up to run on the Digicel

and the Comcel networks in Haiti, and works as follows:

-

People in Haiti text location, name and requests for aid /

reports etc. to 4636.

-

The data is streamed from different celtels to a server

hosted by Ushahidi.

-

100s of Kreyol-speaking volunteers translate, categorize and plot the

geocoords of the location if possible.

-

The structured data is streamed to different

organizations on the ground like Red Cross and InSTEDD, who act on

it / pass it to appropriate people there.

-

It is also streamed to the main Ushahidi database (the

publicly viewable one) where it is combined with other data,

further annotated etc. and made available to other

organizations.

Crowdsourcing and Machine Learning

-

There are also examples of crowdsourcing in machine learning applications

-

You guys remember good ol’ Wizard of Oz prototyping from Interaction Design? Stuff like that can be done

in the domain of AI “essentially prototyping the AI with human beings”.

-

In some cases, humans are used to train the AI system and

improve its accuracy. A company called Scale offers a bank of human workers to

provide training data for self-driving cars and other

AI-powered systems.

-

“Scalers” will, for example, look at camera or

sensor feeds and label cars, pedestrians and cyclists in the

frame. With enough of this human calibration, the AI will

learn to recognise these objects itself (tl;dr using humans

to train AI)

-

In other cases, companies “fake it until they make

it”, telling investors and users they have developed a

scalable AI technology while secretly relying on human

intelligence (basically bullshitting the customer)

-

As a specific example, a company called Figure Eight, a human-in-the-loop (i.e. getting people to help with shit) machine

learning and artificial intelligence company, uses human intelligence to do simple tasks (such as transcribing text or annotating images) to train machine learning algorithms

-

Figure Eight's software automates tasks for machine

learning algorithms, which can be used to improve catalog search results, approve photos, support

customers etc.

-

The technology can be used in the development of

self-driving cars, intelligent personal assistants and other

technology that uses machine learning, and Figure Eight

works with a lot of big companies

Why Do We Still Use Social Computing?

There’s still vast commercial potential (i.e. companies

can make $$$) from social computing:

-

Google’s built on collective intelligence

-

Facebook uses a newsfeed

-

Sales are driven via engagement through relevant recommendations and adverts

-

Crowdsourcing offers access to a worldwide labour market

-

Crowdsourcing can also lead to greater user engagement, and possibilities such as:

-

Large-scale digitisation (reCaptcha)

-

Translation through language learning (Duolingo)

-

Word processor with a crowd inside (Soylent)

-

Social computing can also assist with contributions to science (no, really, it can), providing us with knowledge and

insights on:

-

Human behaviour

-

Economic incentives

-

Social science research

-

Citizen science

-

Unprecedented access to data (participatory sensing) and human computation (e.g. Galaxy Zoo)

-

Galaxy Zoo is a crowdsourced astronomy project which invites people to assist in the morphological

classification of large numbers of galaxies. It’s also

an example of citizen science as it enlists the help of

members of the public to help in scientific research.

Features of Computing Systems

One can also think of computing features as being either

relevant to “traditional” or “social”

computing. Some of the most important characteristics of

social computing can be summarized as user-created content

where:

-

users can control the data

-

unique sharing of content or media

-

ability to tap into the intelligence (unless you’re on something like TikTok) of

other users collectively

-

unique communication and collaborative environment

-

major emphasis on social networks

-

interactive, user-friendly interface

When analysing a social computing system, one can ask the

questions (common sense really):

-

What is its purpose (besides providing material for this module)?

-

What problem(s) does it solve?

-

What were possibly the key (social computing)

challenges in implementing the system?

-

How are users incentivised to participate?

-

How could the system be improved?

-

Is it successful?

Here’s a soulless, unimaginative corporate graphic for

your viewing pleasure:

Recommender Systems

Learning Outcomes

-

Identify and compare key recommender systems, understanding which ones are appropriate for what types

of applications

-

Apply recommender systems techniques to specific settings

-

Evaluate recommender systems

Resources

Some of the slides I’m ripping off are based on

tutorials by Dietmar Jannach and Gerhard Friedrich, and there are two textbooks for this part of the

module:

-

(the intro) Recommender systems: an introduction (Dietmar Jannach, Markus Zanker, Alexander Felfernig,

Gerhard Friedrich)

-

(the real deal) Recommender Systems: The Textbook (Charu C. Aggarwal, 2016 - ISBN 978-3319296579, published

by Springer)

-

Furthermore, some useful websites:

What are Recommender Systems?

Recomender systems are a type of information filtering system which filters items based on a personal profile. (tl;dr they recommend you stuff)

They’re different from many other information filtering

systems (e.g. standard search engines and reputation systems) in

that the recommendations are personal to the specific user or group

of users (with the theory that we aren’t as unique as we

think we are, you’re not special mate).

Recommender systems are given:

-

a user model which entails ratings, preferences, demographics, and situation

context (things like how old we are, where we live :o how

much we earn, what kind of job we do, what gender we are

etc.).

-

Recommender systems can infer quite a lot of info about the

kind of person we are from this combination of

characteristics, and thus the kind of things we’re

more likely to like

-

items, the things that we select - these items may or may not have any additional characteristics associated with them, but are important in

themselves

The recommender system can then calculate a relevance score used to rank the options, and can finally choose the set of options to recommend to us.

Examples of Recommender Systems

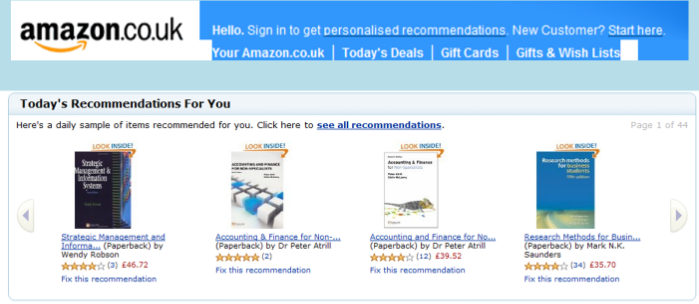

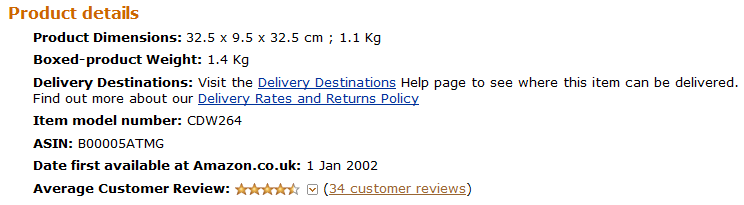

-

Amazon is of course one of the biggest online retail spaces;

because it’s so huge with so many products, good

recommender systems are invaluable

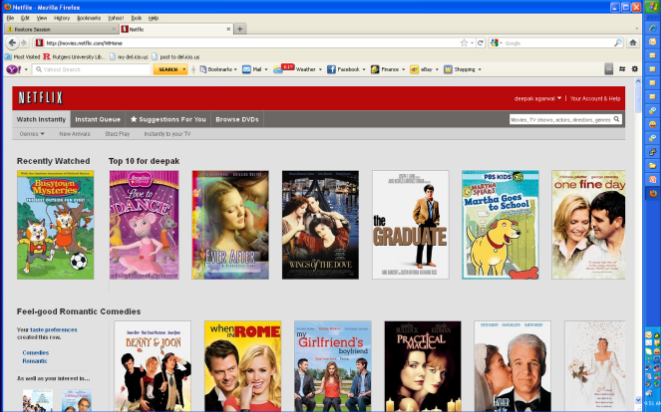

-

Netflix (though there are lots of rivals), with their own

[Original Content](do not steal) and a huge library of other

films, shows etc. Obviously it’d take forever to trawl

through it all, so recommender systems can help

(the 2000s called, they want their OS back)

-

News recommendations; after viewing a few news stories these sites often

recommend other articles you may be interested in

-

Google Play (recommending apps one might be interested based on

what they’ve already downloaded)

-

Job sites (“jobs you may be interested in”)

-

Facebook (“groups you may like”)

-

Picasa (recommending similar photos)

-

Targeted advertising (e.g. annoying banners at the top of the page, using a

recommender in addition to simply cookies and search history

etc.)

-

A web app called Spydify was developed by students at the uni, which produces

visualizations such as graphs and infographics to show

users’ preferred artists/songs, shows other metrics

(such as how “danceable” the music they listen

to is), and helps recommend new music based on one’s

tastes; check it out!

Why use Recommender Systems?

Recommender systems can provide:

-

Reduce information overload (browsing things online can be more difficult and

overwhelming vs. IRL; “we can only see what fits on

our screen”)

-

Find things that are interesting

-

Narrow down the set of choices (we can see things we’ve bought before, and

promotional items)

-

Help one explore the space of options

-

Discover new things

-

Entertainment

-

tl;dr they help people find shit they like

-

If it makes things easier for the user, the user is more likely to return to the site

-

Additional and probably uniquely personalised service for the customer

-

Increase trust and customer loyalty

-

Increase sales, click-through rates (CTRs),

conversions (i.e. successful engagements with recommended items,

i.e. the user takes the bait) etc.

-

Opportunities for promotion and persuasion

-

Obtain more knowledge about customers

-

tl;dr they help companies make more $$$ from people

engaging with shit

Potential Issues

However, naturally some challenges exist around developing and using recommenders, such

as:

-

Lack of data (from which to learn and make recommendations) - this

is known as the “cold start problem”

-

Privacy - despite being anonymised, data is effectively collected

about users to, in most applications, make a profit, and

this data is often sold between companies (and the moment

one starts swapping databases it can become much easier to

identify individuals)

-

Lots of similar items - there can be an issue with lack of variety in

recommendations, and the scope thereof becoming too

narrow/niche

-

From a user perspective, shared accounts - if someone is sharing an account with someone else,

particularly if they have very different tastes, the

algorithm can get “confused” and make

some… interesting recommendations

-

One could argue being recommended things can limit people pursuing other options - if you simply follow recommendations, you may never see

some of the different options and discover new things you

might actually like

Paradigms of Recommender Systems

These are some different forms/models of recommender system,

including:

-

Content-based

-

Knowledge-based

-

Collaborative filtering

- Hybrid

Looking at the first example:

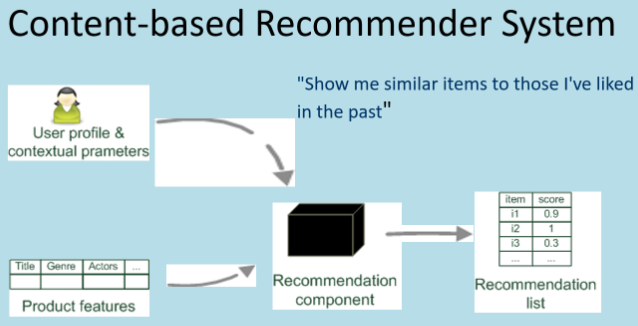

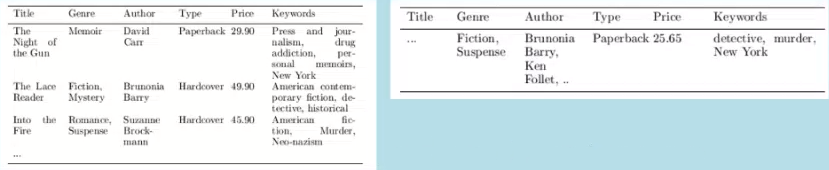

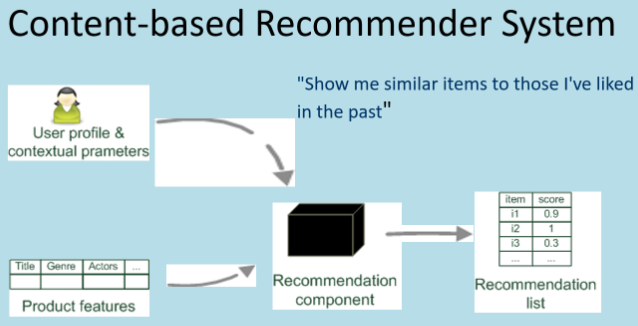

Content-based

-

Build a profile of a user (i.e. user model), which is automatically generated and updated (e.g. learn model based on previously bought

items)

-

Identify a set of features from items bought/looked at; for example:

-

Books/movies: title, genre, writers, actors, and many other

keywords

-

Music: using tags/attributes (e.g. in the Music Genome Project, used by Pandora)

-

Web pages/documents: metadata (basically tags), or actual

contents

-

Find item similarities based on features (i.e. content)

-

Finally, produce recommendation based on user profile and

similarity

-

e.g. items similar to items bought, or albums similar to

albums listened to

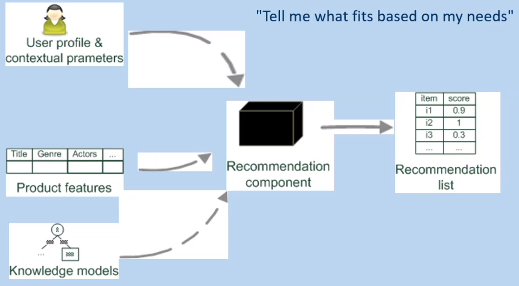

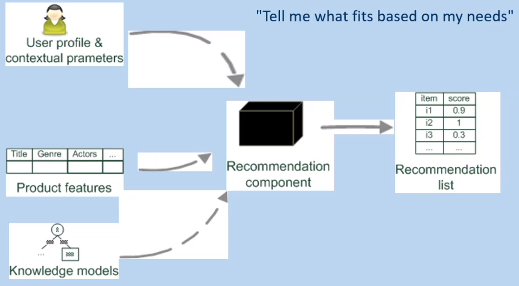

Knowledge-based

-

Makes use of (often) manually generated information about both users and items

-

User information generated, e.g. using preference elicitation (ask user sets of questions to determine their likes

or demographic information, and build a profile)

-

In practice, the system’s “knowledge” can

be updated and fed back into recommendations periodically,

e.g. run overnight

-

Item similarity/recommendation determined by “experts”

-

System mimics behaviour of an experienced sales assistant (bookshops being a good example; e.g. you might tell an

assistant what you last read, and they can use their

knowledge of books and what’s in the store to

recommend something new)

-

Useful when recommending to first-time users (e.g. for one-time purchases) and novel items

-

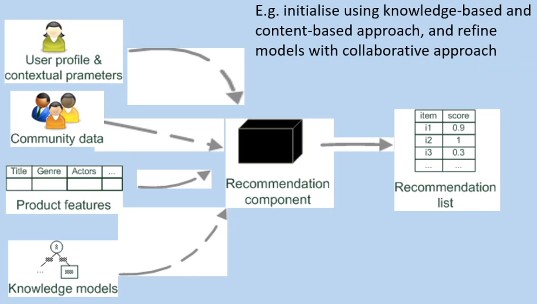

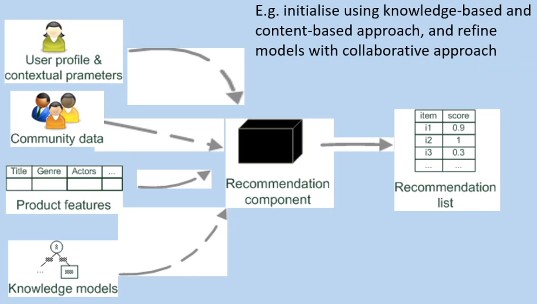

In short, knowledge-based systems take the user profile and contextual parameters, but also product features and knowledge models they already have to build a recommendation list

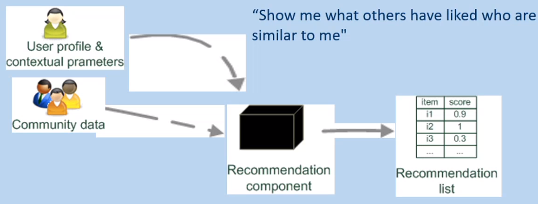

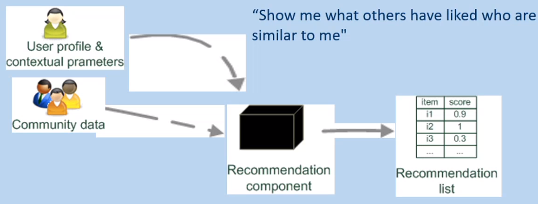

Collaborative Filtering

-

The most prominent approach to generate recommendations

-

used by large, commercial e-commerce sites

-

well-understood; been around for a long time, and various algorithms and

variations exist

-

applicable in many domains (books, films, DVDs, etc.), but doesn’t need

info about the items themselves

-

Uses the wisdom of the crowds to recommend items, where users rate catalog items

implicitly (e.g. watching a film multiple times,

demonstrates we probably liked it) or explicitly (e.g.

actually rating it highly)

-

The basic idea is that customers who had similar tastes in

the past, will have similar tastes in the future; in this

sense it exploits correlation between people with similar

interests

-

Ultimately, it’s all about the user profile, contextual parameters and community data

-

There are two main types of collaborative filtering:

-

User-based - considers similarities between users, and produces

recommendations based on similar interests

-

Item-based - considers similarity between items: “users who liked item x, also liked item

y”

Hybrid

-

As the name suggests, hybrid approaches use a combination of techniques; a recommender system can become more powerful when

different things are put together

Pros and Cons of Different Approaches

|

Type of Recommender

|

Pros

|

Cons

|

|

Collaborative

|

-

No knowledge-engineering effort

-

Well understood

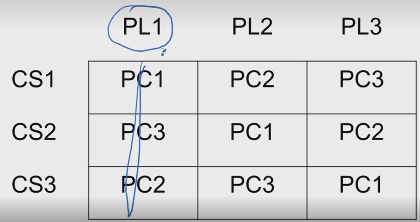

-

Works well in some domains

-

Can produce “surprising”

recommendations

|

-

Requires some form of rating feedback

-

“Cold start” for new users and

items

-

Sparsity problems

-

No integration of other knowledge

sources

|

|

Content-based

|

-

No community required

-

Comparison between items possible

|

-

Content descriptions necessary

-

“Cold start” for new

users

-

No “surprises”

|

|

Knowledge-based

|

-

Deterministic (i.e. a given set of inputs

will always return the same output)

recommendations

-

Guaranteed quality

-

No “cold start”

-

Can resemble sales dialogue

|

-

Knowledge engineering effort to bootstrap

(the start up cost is high in terms of data

needed to make recommendations) - hence it

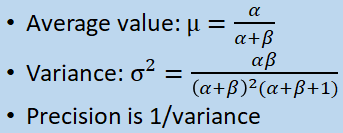

can be very computationally expensive

-

Basically static

-

Doesn’t react to short-term

trends

|

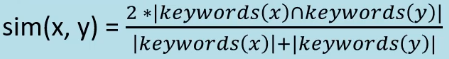

Similarity Coefficients

(FYI, a coefficient is a number in front of a variable of term, e.g. in 6a + 3b + 2x²y, the term coefficients are 6,

3 and 2 respectively)

So how do we mathematically evaluate the similarity between

documents?

Below are a couple of simple approaches, computing document similarity based on keyword overlap:

(x and y are two books, and |X| indicates the size of a set X -

remember cardinality in Foundations?)

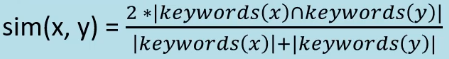

Dice’s coefficient:

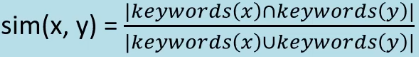

Jaccard’s coefficient:

The above two coefficients effectively tackle the problem in

the same way, just via slightly different calculations. One

tends to choose the coefficient that’s most suitable for the dataset hand/problem being solved.

At the two extremes:

-

Identical sets of keywords = a similarity of 1

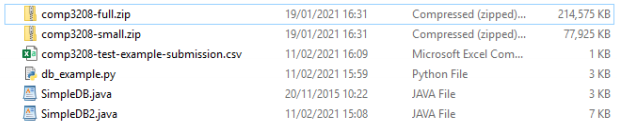

-

Completely different keywords = a similarity of 0

“Cleaning” Features

(FYI for below, an array is a horizontal list of numbers,

whereas a matrix is a 2D grid)

There are some potential shortcomings to using keywords; e.g.

they:

-

(Generally speaking) Need to be transformed to numbers (we simply care about frequency of occurrence)

-

Contain “noise” words such as “and”, “the”,

“but” etc. (these don’t actually tell us

anything about the item)

-

Generate a sparse matrix (i.e. a matrix which contains very few non-zero elements,

as there are a lot of possible keywords; big matrices can

waste lots of memory space)

One mechanism to combat this is to clean the features, basically optimizing the set of keywords for the task at

hand, using techniques such as (if you did a machine learning

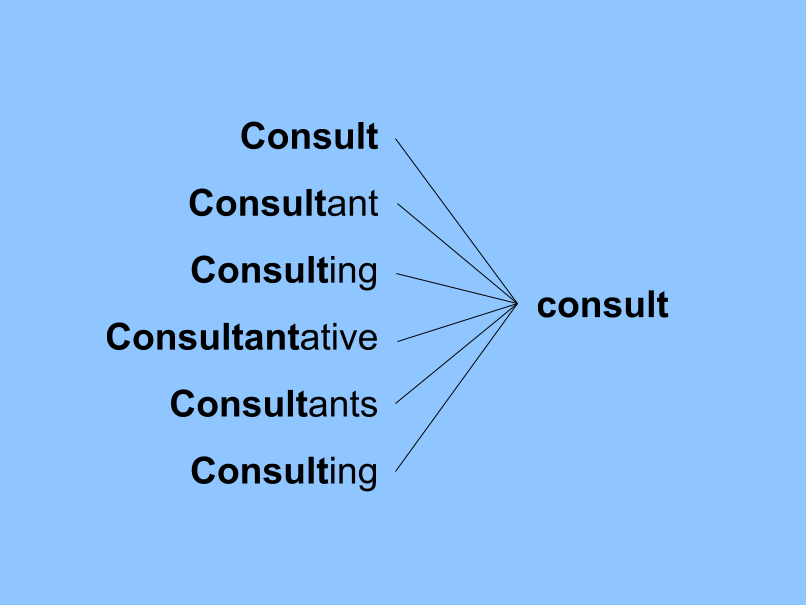

module, here’s some déjà vu):

-

Stop-word removal - filtering out words that aren’t semantically

meaningful, like “a”, “an”,

“the” etc.

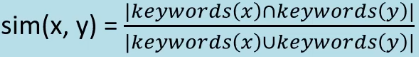

-

Stemming - reducing inflection in words to their root forms,

which still capture the same meaning; e.g.:

-

Phrase extraction - extracting words that occur together and have a

specific meaning, e.g. “hot dog”, as a single

term (note, it doesn’t have to be two words, it can be

any set that occur together; one has to be mindful of

computational expense however)

-

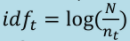

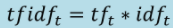

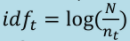

Term-frequency inverse-document frequency (TF-IDF) - basically assigning less weight to common words

(e.g. stop words); mathematically:

-

Inverse-document frequency (IDF) =

, where nₜ = number of times a term t appears across documents, and N

= number of documents

, where nₜ = number of times a term t appears across documents, and N

= number of documents

-

Term-frequency inverse-document frequency (TF-IDF) =

, where tfₜ = a normalised and/or dampened frequency of term t in the

document

, where tfₜ = a normalised and/or dampened frequency of term t in the

document

-

In short, the reason we use this is because a word that

doesn't occur very often in a set of documents, may

actually be an important word (i.e. the less frequency a word is used, the more important it

might be). It may be more indicative of what the document is about

than any of the other words.

-

It’s also weighted against the document length, so

e.g. if a word only occurs once in a short document, the

weighting’s going to be much lower vs. if it appears

just a few times in a long document

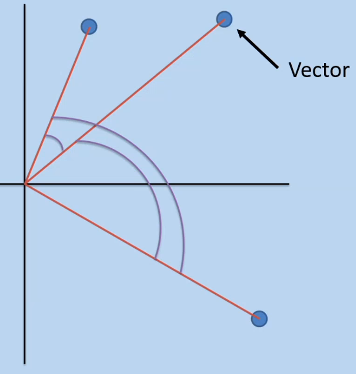

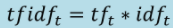

Calculating Similarity

-

Given a feature vector (which can represent all the words

in a document, for example), there are many ways to

determine the similarity between two vectors (so e.g. two

documents).

-

The most commonly used is cosine similarity, simply the angle between two vectors; the smaller the angle, the closer the two

vectors/documents/set of word are to each other (one can do cosine similarity on words or entire

documents)

-

If two vectors are at 90 degrees apart, they’re as

dissimilar as they can possibly be; if they're less than

90 degrees, they become more similar.

-

Here’s the actual equation to calculate it:

-

As with the aforementioned similarity coefficients, at the

two extremes:

-

Identical vectors/documents

= a similarity of 1

-

Completely different vectors/documents = a similarity of 0

Exercise

(This exercise taken from the slides, originally from

StackOverflow apparently)

Suppose we want to compare the similarity between two

documents, in this case simple sentences (but we can apply the

same approach to books or websites):

-

Document x: “Julie loves me more than Linda loves

me”

-

Document y: “Jane likes me more than Julie loves

me”

Step 1: Get the frequency of each term (order does not matter) to

produce vectors x̄ and ȳ.

x̄ = (Jane: 0, Julie: 1, likes: 0, Linda: 1, loves: 2, me:

2, more: 1, than: 1)

ȳ = (Jane: 1, Julie: 1, likes: 1, Linda: 0, loves: 1, me:

2, more: 1, than: 1)

Though to simplify we can just write them like:

x̄ = (0,1,0,1,2,2,1,1)

ȳ = (1,1,1,0,1,2,1,1)

Step 2: Calculate the similarity, using the Dice, Jaccard and Cosine

approaches.

Dice’s coefficient = (2*5) / (6+7) = 10/13

Jaccard’s coefficient = 5/8

Cosine similarity = (0*1 + 1*1 + 0*1 + 1*0 + 2*1 + 2*2 + 1*1 + 1*1) /

(√(0²+1²+0²+1²+2²+2²+1²+1²)

*

√(1²+1²+1²+0²+1²+2²+1²+1²))

= 9 / (√12 * √10) = (3√30) / 20

Bear in mind that for Dice and Jaccard, we don’t care

about the frequency, only if the words appear or not (i.e. the

cardinality of the sets, i.e. number of unique elements).

Self-Test Question Answers

If it isn’t obvious, green = correct, red = incorrect (if you’re colourblind have no fear, I’ll

also add a tick ✓ and cross ✗).

Question 1

A definition of a recommender system is:

-

✓ A type of information filtering system which

filters items based on a personal profile

-

✓ A type of information filtering system which

filters items based on a personal profile. It is different

from many other information filtering systems (such as

standard search engines and reputation systems), in that the

recommendations are personal to the specific user or group

of users

-

✗ An information filtering system (don’t ask

me why this one’s incorrect)

-

✗ A special kind of search engine

Question 2

Examples of providers that use recommender systems are:

-

✗ Amazon and Netflix (again I don’t understand

why this isn’t technically correct but we move)

-

✓ Amazon, Netflix and Spotify

-

✓ Amazon, Netflix, Spotify and many large

supermarkets

-

✗ Amazon, Netflix, Spotify and Google search

Question 3

There are many advantages to using recommender systems for both

customers and providers. Some of these for the customer are

reducing information overload, and helping them discover new

things. For the provider, they increase trust and customer

loyalty which can in turn increase sales revenue.

User-Based Collaborative Filtering

We touched on collaborative filtering in the previous lecture;

focusing more on user-based collaborative filtering...

-

The basic assumption is that people who agreed in the past are likely to agree

again (i.e. we’re not quite as unique as we think we

are, and there are others out there with similar likes to

us)

-

We try to find users who are similar to each other based on their overlap in interests and

opinions (e.g. using jointly rated items)

-

If ratings for the same items are similar, it is assumed

that users have similar interests and so will have similar

interest for future items

-

Then the system can recommend items from similar users which are rated

positively (and which the user has not yet

rated/seen/bought)

In terms of requirements, systems like Amazon have millions of items and tens of

millions of users, so clearly algorithms need to have good

performance independent of these colossal numbers. The

algorithms should ideally have:

-

constant time performance

-

response time of <0.5 seconds

-

still able to give new users good recommendations

So what steps does one need to consider for the system?

-

Representation - what will be used as input? e..g items we or other

users have purchased, items browsed, ratings users have left

behind, etc.

-

Similarity measure - how do we calculate the similarity between 2

users?

-

Neighbourhood selection - do we select all users (have to think about

efficiency/scalability; if you have millions of users

it’s infeasible to do similarity calculations between

them all!) or a subset of users? On what basis do we

choose?

-

Predict/estimate rating - need to calculate a predicted rating (i.e. what we

predict the user might rate the items) for a user’s

unrated items

-

Generation of recommendation - select the “top M” recommendations ranked by

predicted rating

Naturally however, some issues exist with user-based

collaborative filtering such as:

-

User cold-start problem - not enough info known about a new user to decide

who is similar, and possibly a lack of other users

-

Sparsity - recommending from a large items set where users may

have only rated a few, it can be hard to find users

who’ve rated the same items

-

Scalability - with many users calculations can become

computationally expensive (O(N²) complexity)

-

To combat this, representative groups of similar users can

be created to infer similar items (ringing a bell from

machine learning if you did that?)

-

ML algorithms like clustering can be used to learn and

create these groups, but these are time-consuming in their

own right, so in practice tend to be run periodically, e.g.

once at night

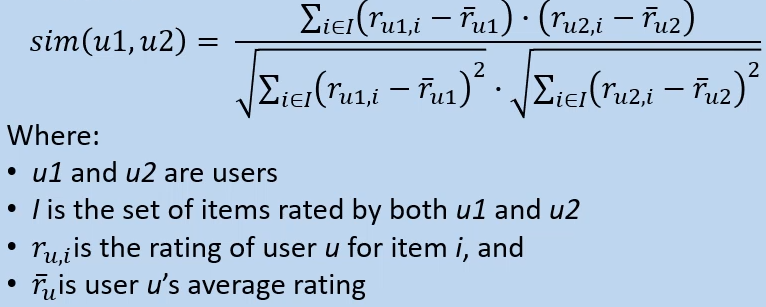

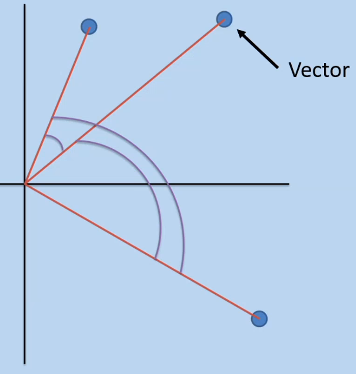

Similarity Measure (for Ratings)

-

This only considers users who’ve rated some of the

same items

-

The Pearson coefficient has been shown to be the most effective for user-based collaborative filtering

-

Used in statistics to calculate the correlation between 2 random variables:

-

This formula returns values between:

-

+1 (perfectly correlated)

-

-1 (perfectly negatively correlated)

-

It looks at the similarity in variations over items, not their absolute values

-

This is because it corrects for the average value, so e.g. users who consistently rate high can still be

similar to users who consistently rate low, as long as the correlation is the same!

-

This way, inherent/unconscious bias one user may have

shouldn’t unfairly skew the results

-

Note that, while used frequently in recommender systems,

you wouldn’t want to use correlation in all

situations (or may at least want to use it with caution), as correlation does not equal causation

-

So e.g. if you were looking for symptoms for a particular

illness, looking for correlation between variables,

you’d have to be very careful about the results and

conclusion drawn

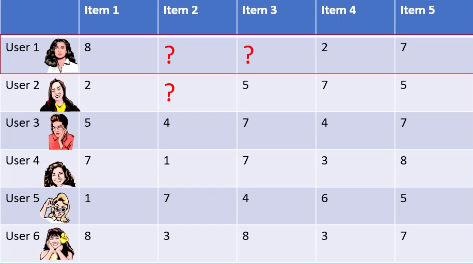

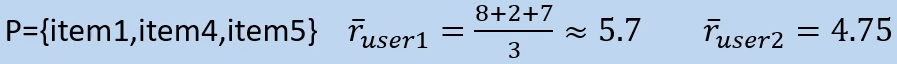

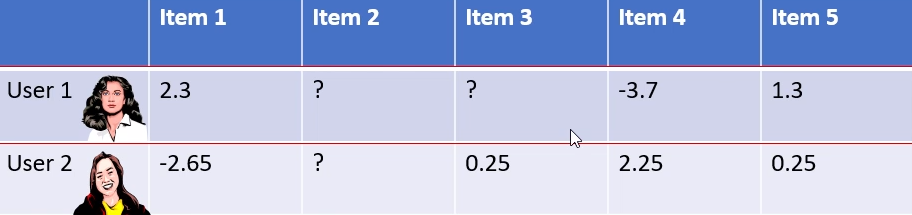

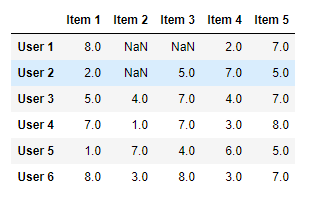

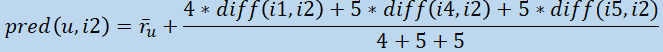

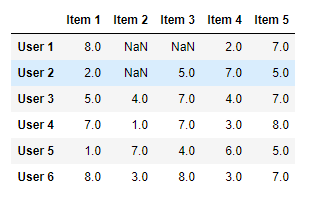

Pearson Coefficient Example

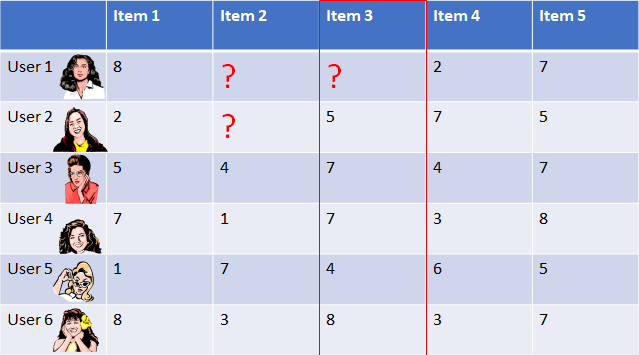

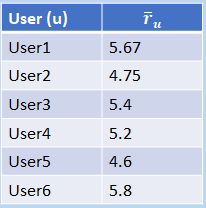

Say we have 6 users as shown in the table below, but users 1

and 2 have not rated some items:

For users 1 and 2, we can calculate the mean average user rating from the items they’ve both rated so far:

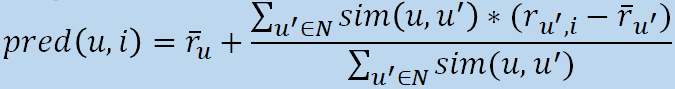

Then we can deduct user 1’s average from each of user 1’s

ratings, and deduct user 2’s average from each of user

2’s ratings, so we end up with:

Finally we can plug the values into the Pearson coefficient formula (given above) like so, to calculate the similarity

between user 1 and 2’s ratings (it looks more complicated

than it is!):

As the resulting value of -0.89 is close to -1, we can see that

their ratings are strongly negatively/inversely correlated.

Neighbourhood Selection

After calculating the similarity between all users, how do we

choose which to base our base our recommendation on? We could

use different constraints/selection criteria for neighbours, for

example:

-

Have to share at least one rating (though maybe more)?

-

Only those with a positive correlation?

-

Either threshold (by e.g. a minimum positive rating) or fix

the maximum number of neighbours, and choose n most correlated neighbours (ideally we can use a combination of thresholding and

max)

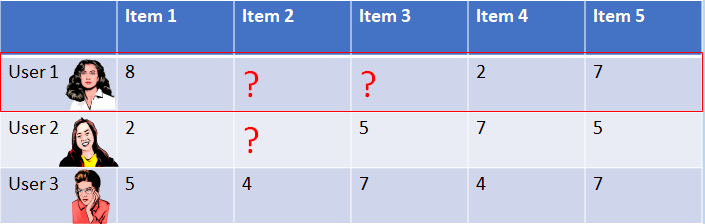

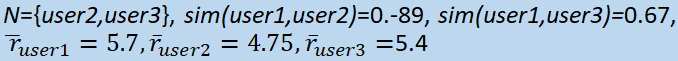

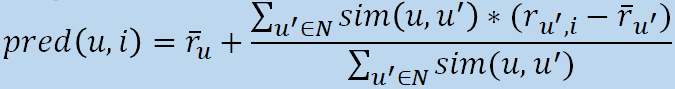

Prediction

We can then use the given user ratings and similarity

calculations to predict unrated items.

-

This function can be used to predict rating of user u for item i, given neighbourhood N (where N = the set of users in the selected

neighbourhood)

-

This formula adds/subtracts the neighbours’ bias from

the given user’s average

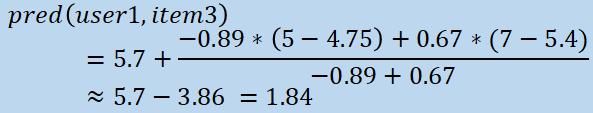

For example, say user 2 and user 3 have rated item 3, but user

1 hasn’t:

We can make a prediction of what user 1 will rate item 3, based on user 2 and 3’s ratings of item 3 and those

users’ similarity to user 1.

So, given the following user rating average and user similarity

results:

...we can simply plug the values into the prediction formula to calculate user 1’s predicted item 3 rating like

so:

Improving Prediction

Some neighbour ratings may be more “valuable” than

others; agreement on controversial items can be more informative than on common items.

Some things that can be done to possibly improve the prediction

function include:

-

Give more weight to higher variance items

-

Significance weighting - when the number of co-rated items between users is

low, linearly reduce the weight (and vice versa)

-

Formally, from the textbook: “When the two users have

only a small number of ratings in common, the similarity

function should be reduced with a discount factor to

de-emphasize the importance of that user pair. This method

is referred to as significance weighting.

-

Case amplification - give more weight to “very similar”

neighbours (i.e. that have a similarity value close to

1)

-

Neighbourhood selection - as touched upon above, use a similarity threshold, or a

fixed number of neighbours

Produce Recommendation

This is the final step (kinda obvious) for the user-based

collaborative filtering recommender to take; it can:

-

Recommend M items with the highest predicted rating

-

M can be fixed or flexible (based on the confidence, or a

given threshold)

-

Rank the recommendations in order of highest predicted ratings

-

In practice, other factors are also taken into account

while generating the recommendations, such as:

-

Item popularity

-

Item novelty

-

Variation of recommended items

-

Other (e.g. business) reasons for promoting certain

items

Item-Based Collaborative Filtering

We can also do collaborative filtering based on items!

-

The assumption is that a given user is more likely to have the same opinion for similar

items

-

Therefore, one can use ratings to e.g. derive the similarity between items (rather than users)

-

Compared to user-based collaborative filtering, some

advantages include:

-

No user “cold start” problem

-

Greater scalability with more users

-

Can be pre-computed “offline” (as one only

needs to select the top items from a list)

-

Results can be equally as good

As with user-based, one needs to consider the main steps for

the system, which are similar but of course in terms of items

rather than users:

-

Representation - what will be used as input? e..g items we or other

users have purchased, items browsed, ratings users have left

behind, etc.

-

Similarity measure - how do we calculate the similarity between 2 items?

-

Neighbourhood selection - do we select all items (have to think about efficiency/scalability; if you

have millions of items it’s infeasible to do

similarity calculations between them all!) or a subset of items? On what basis do we choose?

-

Predict/estimate rating - need to calculate a predicted rating (i.e. what we

predict the user might rate the items) for a user’s

unrated items

-

Generation of recommendation - select the “top M” recommendations ranked by

predicted rating

Naturally however, some issues exist with item-based

collaborative filtering; pre-processing techniques can be used

to mitigate these.

-

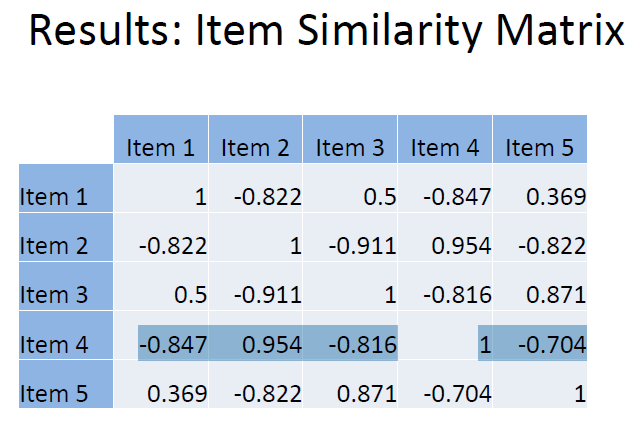

We might want to compute a similarity matrix offline with the items (at most (n*(n-1))/2 entries with n

items), which can be reduced further

-

An item similarity matrix changes less often than a user similarity matrix

-

With optimization, one can compute recommendations very fast

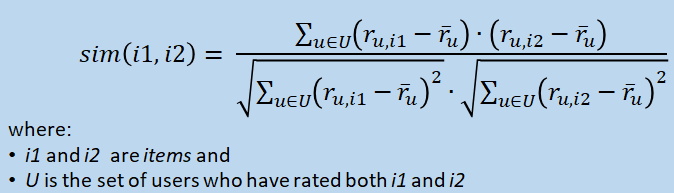

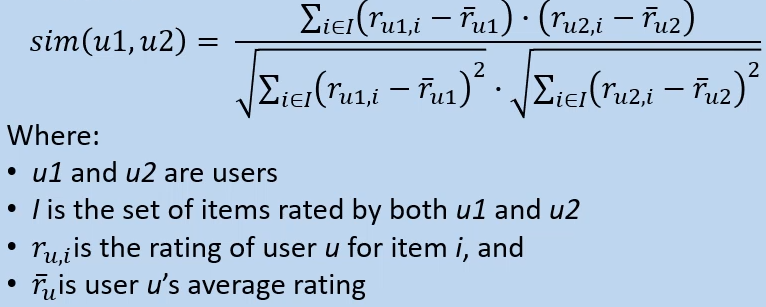

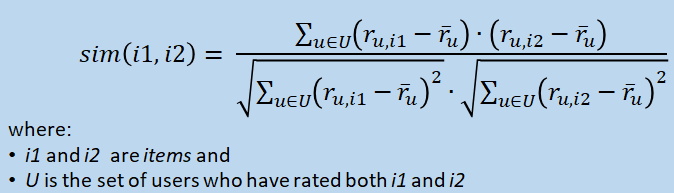

Similarity Measure (for Ratings)

Looking again at the table, say this time we want to find the

similarity between two items (rather than users):

-

One can technically just use Pearson’s coefficient

again, swapping users and items, taking an item average

instead of a user average

-

However, this isn’t adjusted/weighted for

users’ average, which could result in unfair

bias

-

Thus a better similarity function to use for instance is adjusted cosine similarity; this calculates the angle (so difference) between two

item vectors, but adjusted for user average:

-

As before, this formula returns values between:

-

+1 (perfectly correlated)

-

-1 (perfectly negatively correlated)

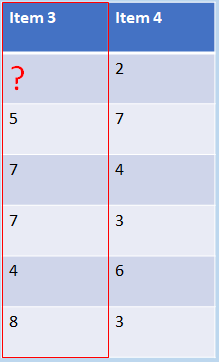

Adjusted Cosine Similarity Example

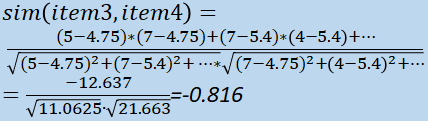

So for example, say we have user ratings for items 3 and 4 like so (as you can see user 1 hasn’t rated both

items, so we just ignore this row):

...and we calculate each user’s mean average rating (for all the items each one’s rated) like so:

...we can simply plug these values into the similarity function (summing the products of the respective weighted item

ratings, and dividing this result by the product of the two

items’ weighted square sums - sounds a lot more

complicated than it is, just look at the formula) and simplify

the expression for the final result:

As the resulting value of -0.816 is close to -1 (similar to the

previous example), we can see that their ratings are strongly negatively/inversely correlated.

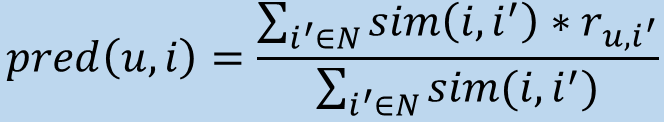

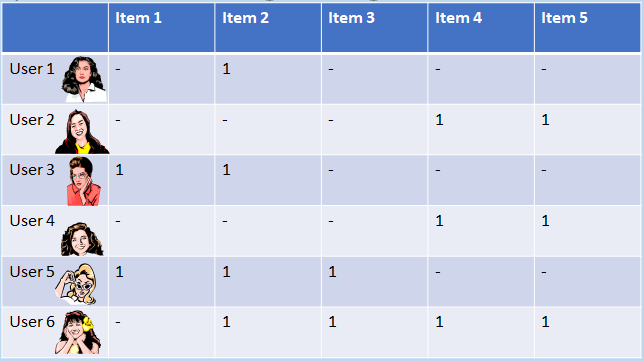

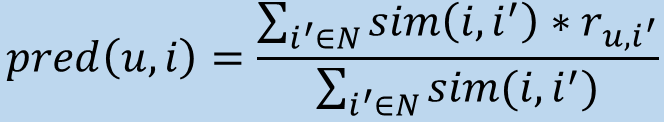

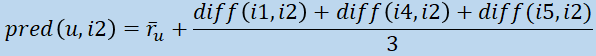

Prediction

Similarly to the user-based collaborative filtering system, we

can predict unrated items.

-

This function can be used to predict rating of user u for item i, given neighbourhood N (where N = the set of items in the selected neighbourhood)

-

Note how this formula is slightly simpler than the previous

one, as we need not subtract user biases, due to only

comparing similarity between items themselves

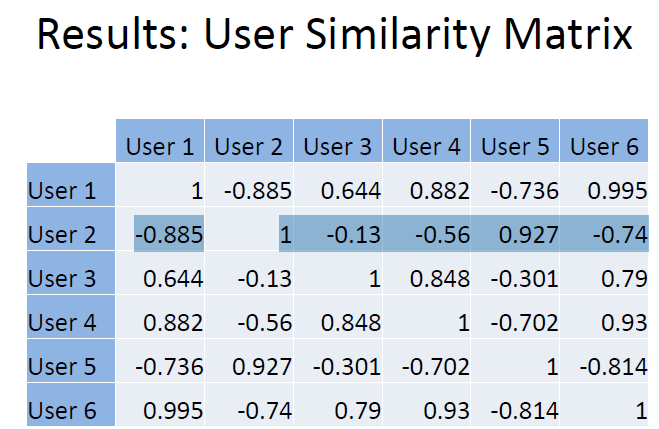

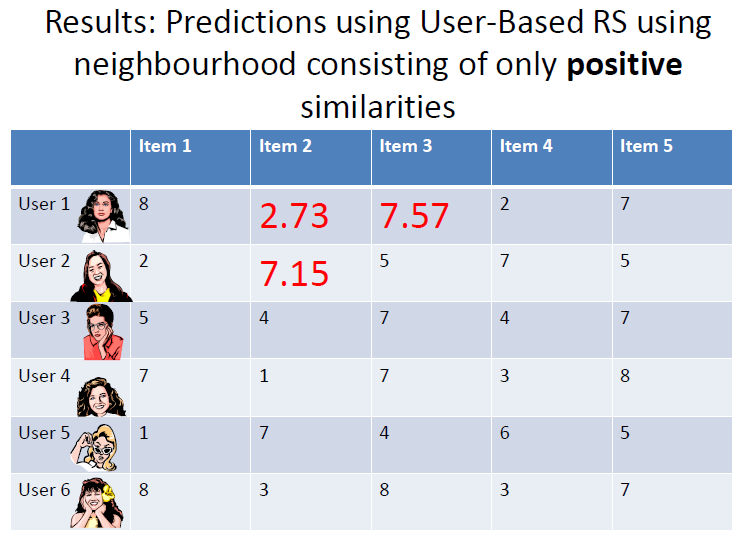

Exercise 2

(From Blackboard)

-

Compute the similarity matrices for user- and item-based

recommender systems for the example provided.

-

Compute the predictions using the two different approaches,

with a neighbourhood containing only positive similarity

values (i.e. ignoring any negative values)

Alternative Approaches

Of course, there are other ways of predicting ratings beyond

similarity matrices and whatnot…

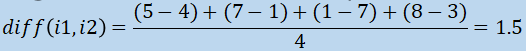

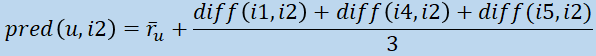

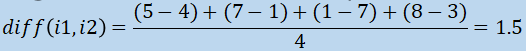

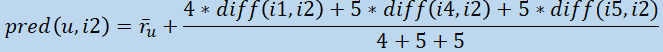

Simple Approach: Slope One

This approach focuses on computing the average differences between pairs of items; say for instance we have the following table of user item

ratings:

...the (mean) average difference between items 1 and 2 is simply (ignoring user 1 and

2’s ratings, who haven’t rated item 2):

We can also calculate a predicted rating for item 2, taking the mean of the average differences

between item 2 and every other item that all of the users have rated (so 1, 4 and 5):

Optionally we can adapt the prediction formula to weight each difference based on the number of jointly weighted items, like so:

Types of Ratings

Pure collaborative filtering-based systems, as seen above, only

rely on the rating matrix; the question is, what kind of

ratings can one use? For example:

-

The most common that we’re familiar with is a Likert

scale, typically 1-5 or 1-7

-

However one has to consider the optimal granularity of scale; for example, research has shown that a 1-10 scale is

better accepted in films for example, which offers more

granularity

-

One can also have multidimensional ratings (e.g. multiple ratings per movie, as a

user’s opinion may change with multiple viewings; this

is effectively a human aspect of rating)

-

There are challenges, such as users not always willing to rate many items, resulting in sparse matrices, so one has to consider how to incentivise users to rate

more items (e.g. paying users, having a point system, or

“nudge” users, e.g. a notification saying

“5 of your friends have already rated x thing, would

you like to do that?”)

-

Clicks, page views, time spent on a page, demo downloads

etc.

-

So the user isn’t explicitly rating something, but

their activity shows they're likely interested in it

-

These can of course be used in addition to explicit

ratings

-

The question is how do we interpret it “correctly”? (e.g. if a user spends longer browsing a page, does that

actually indicate they’re more interested in an

item?)

Example: Items Bought Together

Say for instance we have the following matrix of items that

people have bought together:

...It’s apparent for example that item 2 is quite

frequently bought with item 3 (so we can infer that if a user

buys one of the items, they’re more likely to buy the

other too).

Evaluating Recommender Systems

What makes for a “good” recommendation?

Of course it can vary between companies, thinking about e.g.

what they’re trying to persuade people to buy, what

behaviour are they trying to persuade people to engage in, etc.

These things will have an impact on good ways of evaluating the

recommender system, going back to the “human factor” (remembering that people are mostly predictable).

So what kinds of measures are used in practice?

-

Total sales numbers

-

Promotion of certain items

-

Click-through rates

-

Interactivity on the platform

-

Customer return rates (if an item gets returned a lot, that can be a

negative; it could have been recommended in the wrong

place/to the wrong people)

-

Customer satisfaction and loyalty

How can we measure the performance (again considering the

“human factor”)?

-

Online tests with real users

-

For instance, A/B testing (also known as split testing or bucket testing), a

binary method comparing two versions to assess which one

performs better.

-

Example measures (indicating good performance)

include:

-

sales increase

-

higher click-through rates

-

For instance, carrying out controlled experiments

-

An example measure is satisfaction with the system, which

can be qualitatively assessed.g. via questionnaires

-

There are issues associated with these however; e.g. people

tend to be much more positive in a satisfaction survey when

the person/entity that wants to know is present in the same

space (so people can be less honest/more afraid of saying

harsh things)

-

Based on historical data we have (rather than new incoming data)

-

Example measures include:

-

Prediction accuracy

- Coverage

For offline experiments, some common protocol:

-

We can hide some items where we know there’s a ground

truth

-

e.g. if we already know that people who buy item 2 usually

buy item 3

-

We can also hide some information from domain experts

-

If somebody already knows a lot about the thing we’re

asking them to evaluate, they’re perhaps not the right

person to ask due to already having too much contextual

information

-

We can also rank items or predict hidden ratings, using a metric to evaluate this

-

After conducting some experiment, we effectively have a

test set which we can use to test the accuracy of prediction

classification

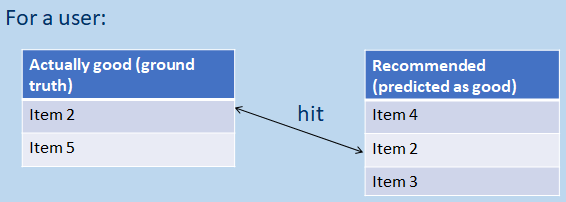

Correct and Incorrect Classifications

How can we label correct and incorrect classifications that the

system makes? The following four test responses are often

used:

-

True positives (TP) - the recommended item is relevant to the user

-

True negatives (TN) - the recommended item is not relevant to the user

-

False positives (FP) - an item which is not relevant has not been

recommended

-

False negatives (FN) - an item is relevant, but this has not been

recommended

-

True positives and false negatives will be classified in

the same space

-

sometimes this doesn’t matter but there are certain

circumstances it does (e.g. for covid testing! it’s

important that people who have covid receive a true positive

result, and the worst outcome is a false negative, i.e.

someone’s told they don’t have it when in

reality they do)

-

A false positive is easier to deal with than a false

negative, but ideally we still want to minimize these.

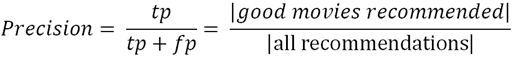

Metrics

Various metrics can be used to attempt to measure the

performance of an classification algorithm, such as:

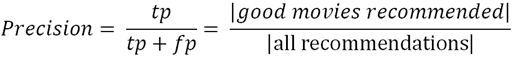

Precision

-

This is a measure of exactness which determines the fraction of relevant items

retrieved out of all items retrieved (e.g. the % of movie recommendations that are

actually good for the user).

-

tp = true positive, fp = false positive (the less false

positives we have, the better the precision)

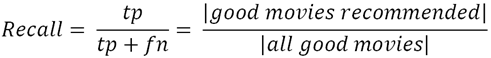

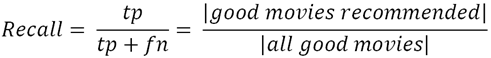

Recall

-

This is a measure of completeness, which determines the fraction of relevant items retrieved out of all relevant items (e.g. the % of all good movies for the user, that

were actually recommended).

-

tp = true positive, fn = false negative (the less false

negatives we have, the better the recall)

Things like calculating the area under a curve can give us a

visual representation of precision and recall.

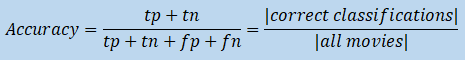

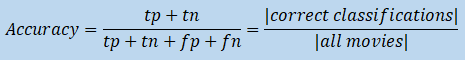

Accuracy

This simple metric considers the accuracy of all correct classifications (i.e. the true positives and the true negatives), given by the formula below:

This is arguably the metric that’s of most interest for

recommender systems, which quickly lets us see how effective it

is at making predictions.

In a recommender system, the item rank score/position really

matters; we want to make sure that ground truths are recommended more often than things where the

certainty is less.

For example, looking at the following table excerpt:

...if someone has bought item 2, we want to know that:

-

they’ve been recommended it

-

it was pretty high up on the list of recommendations

-

Rank score extends precision and recall, to take the positions of correct items in a ranked list into

account.

-

Lower-ranked items can be overlooked by users, so this

metric is especially important in recommender systems.

-

We can try to optimize models for such measures (e.g. AUC, “area under the curve”)

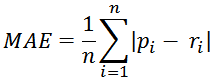

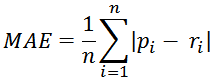

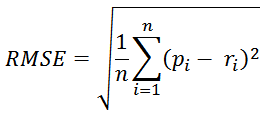

Error Measures

There are different ways of measuring the error rate of a

recommender; the two most commonly used are probably:

-

Mean absolute error (MAE)

-

this computes the deviation between predicted rating and actual ratings

-

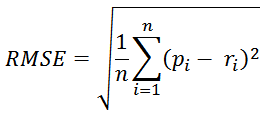

Root mean square error (RMSE)

-

this is similar to MAE but places more emphasis on greater deviation (due to the values being squared)

Online Auctions

Where Are Auctions Used and Why?

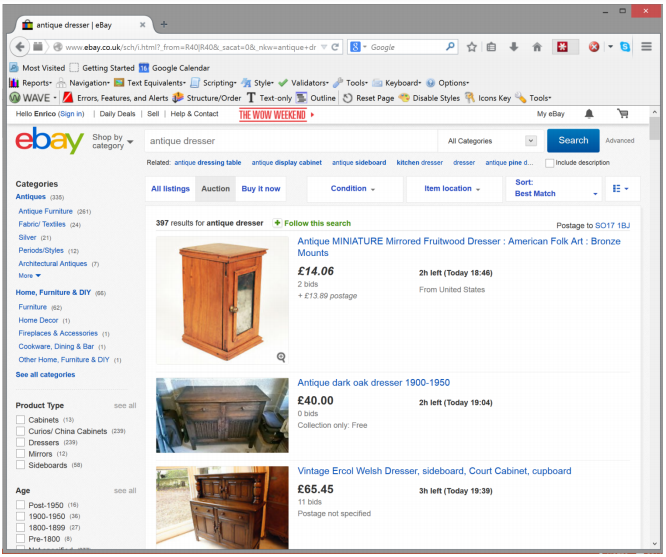

One of the first things that may come to mind when people talk

abou auctions are eBay auctions. There are two main ways that

you can buy things: buy it now, and auctions! (You’re probably familiar with the system but notice

the current price, number of bids, time remaining etc:)

eBay charges a % of commission fees for items sold on their platform

Going into some stuff mentioned in the first lecture...

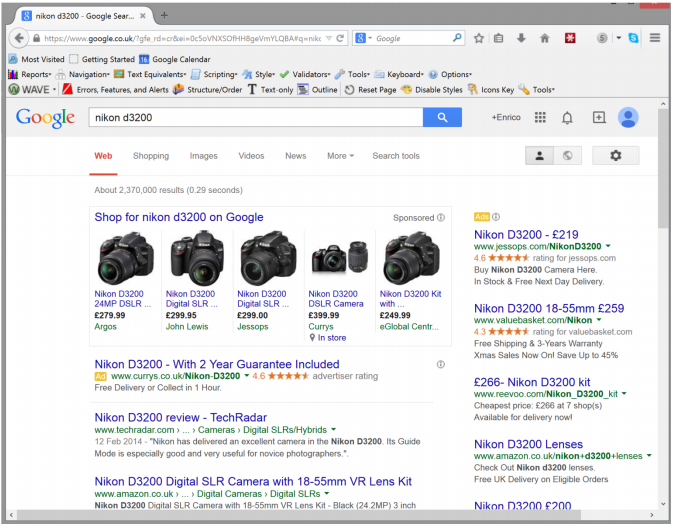

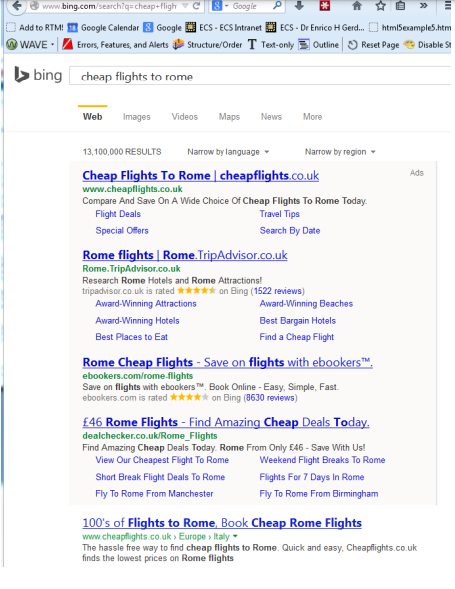

Sponsored Search

Sponsored search is the basic model that most search engines use to generate revenue (at least 80% of revenue by big companies like Google is

generated by selling advertisements on the internet).

e.g. searching for a product on Google, the search results with

green links are ranked by page ranking advertisement results. Note how some of them are marked as “Ad”; these

advertisers pay Google to put their results on top for those keywords.

Yahoo for instance used to call sponsored search auctions

“computational advertising”, it’s the same idea.

Display Advertising

Another type of auction is display advertising, a type of graphic advertising on websites (shown via web

browsers), usually in the form of some media e.g. an image

banner or a video ad.

These days as systems are more sophisticated, they often use

cookies from your browsing and purchase history to better target

ads to you. (So e.g say you buy some plane tickets and then

scroll through Facebook, you might see more airline/holiday

advertisements pop up.)

Why Use Auctions?

So why use auctions?

-

It’s a type of dynamic pricing based on competition

-

Essentially a price discovery mechanism (e.g. on an eBay auction, via people bidding the

final selling price for an item is

“discovered”)

-

Resources are distributed and allocated to the most

relevant person (e.g. most relevant ad is shown)

-

Computation is done by the “crowd”, which in this case consists of the bidders - going back

to the “wisdom of the crowds”

Auction Design

There are of course different types of auctions, each with

advantages and disadvantages. There are equivalences between

them, and they're more/less suited to different scenarios.

The auction design affects how people behave during an

auction - so one must consider:

-

What are the desired objectives and properties in a use case?

-

How can auctions be designed so that users are incentivized to behave “correctly”?

The most typically desired auction property is for the bidders to bid truthfully; i.e. no matter how valuable the item, we ideally want users

to bid what they think the price should be; this is a price discovery process (of course this isn’t always easily

feasible in practice).

Auction Types

There are four classical auction types…

English Auction

This type has as very long history and is the most commonly

known type of auction, entailing (referring to a singular

item):

-

Usually a fairly low price is set so that bidders are

willing to increase it and get the ball rolling

-

An opening bid

-

Ascending bidding increase

Low-res pic of Sotheby’s (fancy place):

Pretty simple process:

-

Each bidder is free to raise the current highest bid

-

When no bidder is willing to raise anymore, the auction

ends

-

The highest bidder wins the item at the price of their

bid

English auctions are most commonly used (as you’d expect)

for selling goods, e.g. antiques and artworks.

-

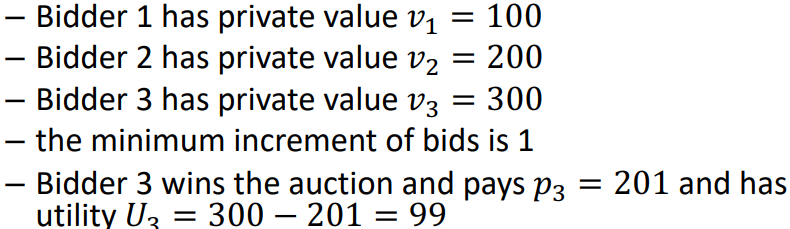

Bidders in an auction can be assumed to have a

“private value”, i.e. what they’re ultimately willing to pay

for the item, independent of what the other bidders may

believe the item is worth.

-

However, their bid may be influenced by what they believe

others will bid

-

The bidder may speculate about the bids of others, and shade their own bid

-

One may make as few bids as possible to avoid raising the

price more than necessary

-

A bidder’s first bids are often lower than their

“private value”

-

The concept of individual rationality indicates that bidders will typically not bid more

than they are willing to pay

-

A bidder may also refrain from bidding until the bidding

slows down, in the hopes that it’ll stop below their

maximum bid (and then they can place one)

Dutch Auction

This is an example of an open-bid descending auction

(effectively the opposite way to the English auction).

-

The auction starts at a high price (artificially high so that no one is realistically willing

to pay it), announced to the public

-

The auctioneer then lowers the price until a bidder makes a bid equal to the current offer

price

-

The good is then allocated to the bidder that made the offer, and the agent pays the price they bid

This type of auction is famously used for selling flowers in

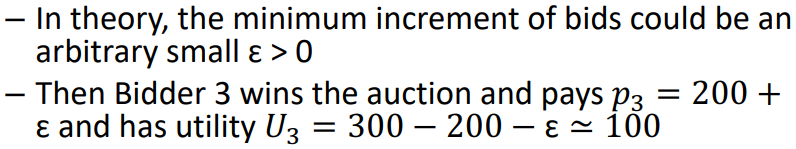

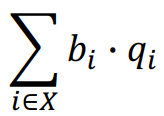

the Netherlands, fun fact (flowers have a limited life cycle so