Programming Language Concepts

Matthew Barnes

Syntax to execution 2

Introduction 2

Syntax and BNF 4

Names, scoping and binding 7

Lexing and parsing 8

In Haskell 9

Testing 10

Introduction to types 10

Type derivation 12

Type checking and type inference 14

Reasoning about programs 19

Intro to semantics 19

Denotational semantics 19

Operational semantics 20

Type safety 23

Preservation 24

Progress 25

Structured types 26

Structured types 26

Subtyping 31

Objects 39

Lambda calculus and Curry Howard 46

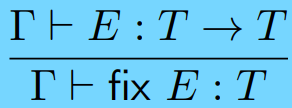

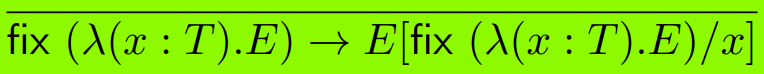

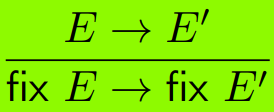

Fixed-point combinator 49

Curry-Howard correspondence 51

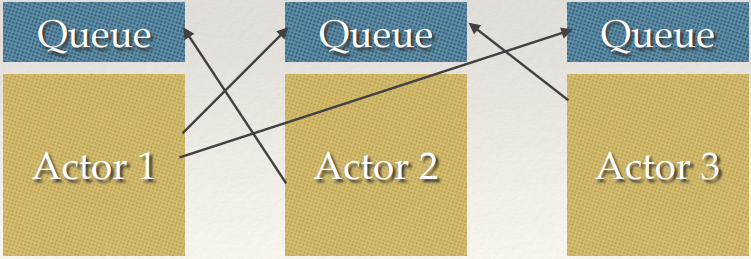

Concurrency in programming languages 54

Race conditions and mutual exclusion 54

Atomicity, locks, monitors 58

Barriers and latches, performance and scalability, CAS and

fine-grained concurrency 61

Message passing, software transactional memory 65

Rust and concurrency 70

Semantics of concurrency 73

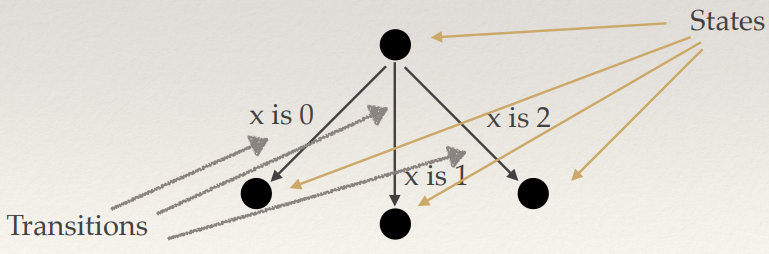

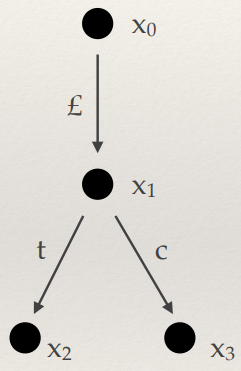

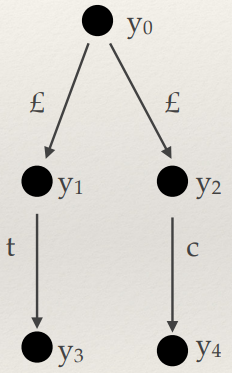

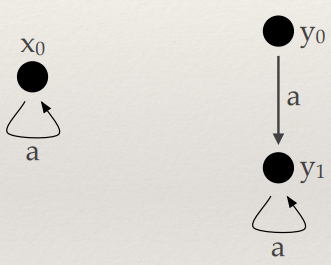

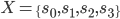

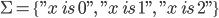

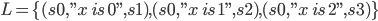

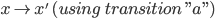

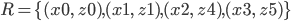

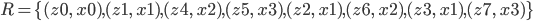

Labelled Transitions Systems 73

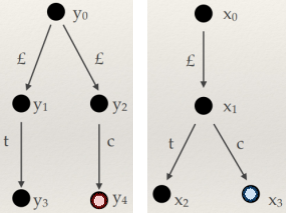

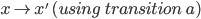

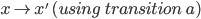

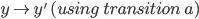

Simulations 76

Bisimulations 82

TL;DR 84

Syntax to execution 84

Testing 86

Reasoning about programs 87

Structured types 88

Concurrency in programming languages 89

Semantics of concurrency 90

Syntax to execution

Introduction

-

Application domain: a field of computation fulfilled by various languages and

paradigms

-

The types of application domain are:

-

Scientific computing (Fortran, Mathematica, MatLab, OCAML, Haskell)

-

Business applications (COBOL, BPEL)

-

Artificial Intelligence (LISP, Prolog)

-

Systems (C, C++)

-

Web (HTML, XHTML, Perl, PHP, Ruby, JavaScript)

-

A programming language family is a group of languages with similar / the same set of

conventions and semantics.

-

The families of languages are:

-

Imperative (C, C++, Java, C#, Pascal)

-

Functional (OCaml, Haskell, ML, Lisp, Scheme, F#)

-

Logic / Declarative (Prolog)

-

There are two kinds of software design methodologies:

-

The high-level methodology, data oriented, relates to ADTs and object-oriented design. It cares less

about program efficiency and more about the structure of

code.

-

The low-level methodology, procedure oriented, emphasises decomposing code into logically independent

actions, and can also emphasise on concurrent

programming.

-

Cross-fertilisation is where concepts from one family can be used in languages

belonging to another.

-

For example, functional programming can be used in

imperative languages like Java, C# and Rust.

-

Monads can allow programmers to write imperative style code

in functional languages.

-

Declarative code, like regexp, SQL and HTML, can be written

in some imperative languages.

-

How good is a language? We need to follow it through

evaluation criteria!

-

How easy is it to read the code?

-

You can make complex things seem simpler just by making it

more readable

-

Feature multiplicity: multiple ways to achieve the same result

-

Syntactic sugar: a simpler, more intuitive way to write something

-

Remember, feature multiplicity and syntactic sugar are not the same thing. Feature multiplicity means there are lots

of ways of achieving the same result with different

semantics, whereas syntactic sugar is just an alternate way

of writing something while still keeping the exact same

semantics.

-

Orthogonality: a property of two features, meaning that they are

independent of each other. Informally, it means that there

are no constraints in combining two features together. An

example of non-orthogonality would be that you can’t create a void variable in C. Similarly, an array can contain any data type except void. For example when applying public and static to a method, they do not interfere with each other,

so these two are orthogonal.

-

Obfuscation: intentionally making code hard to understand

-

How well does the language abstract hardware and

concepts?

-

It could use functions, modules, classes or objects.

-

More abstraction = Further separation from machine-level

thinking

-

How efficient is your language?

-

Efficiency can be described in both syntax and in

semantics.

-

C could be said to have semantical efficiency since C

compilers are great at optimising code.

-

Haskell could be said to have syntactical efficiency since

you can implement an algorithm like quicksort in 5 lines,

whereas C would require significantly more.

-

A brief history of time programming languages:

-

Early 1950s: people made Fortran, the first high-level compiled

language.

-

IBM704 hardware supported floating point numbers.

-

Late 1950s: LISP was made, the first functional language (+ a

bootleg named Scheme)

-

1960s: everyone got together and made ALGOL. They realised

programming languages could be their own field of

study.

-

1970s: COBOL was made. Emphasises data processing. C, Unix,

Prolog and Ada were made.

-

1970s-80s: BASIC for microcomputers was made

-

1980s: ML was made, everything is typed at compile time.

Smalltalk was made and the discovery of object-oriented

programming.

-

1990s: Java and the JVM was made, it had flexibility and

portability

-

2000s: Scripting and server-side programming was born -

Perl, Python, PHP, JavaScript, Ruby etc.

Syntax and BNF

-

Syntax: the rules / language for writing programs

-

Semantics: the meaning of programs, what happens when we run

them

-

BNF (Backus-Naur form): a metalanguage that describes a CFG.

-

It has terminals (also called tokens or lexemes) and

non-terminals, just like a CFG.

-

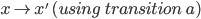

Here is an example of BNF:

<expr> ::= <expr> + <expr> | <expr> *

<expr> | <lit>

<lit> ::= 1 | 2 | 3 | 4

-

A legal sentence of a grammar is strings for which there is

a derivation in the BNF, just like how CFGs accept strings

for which there is a derivation for.

-

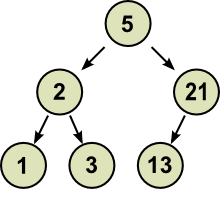

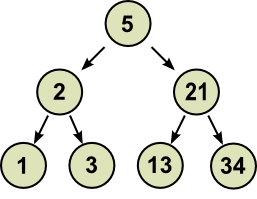

These derivations can be transformed into parse trees.

-

However, some sentences might have more than one

derivation, making the grammar ambiguous.

-

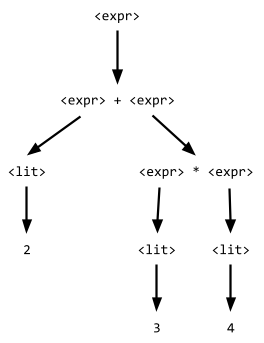

For example, the string “2 + 3 * 4” has two

derivations in the above BNF:

|

Derivation #1

|

Derivation #2

|

|

|

|

-

Which one do we pick? They’ll both yield different

results.

-

We could just put parentheses everywhere, but there’s

a better way.

-

We know that * binds tighter than + (it has higher

precedence), so why don’t we adjust our BNF to reflect

that?

<expr> ::= <mexpr> + <expr> |

<mexpr>

<mexpr> ::= <bexpr> * <mexpr> |

<bexpr>

<bexpr> ::= (<expr>) | <lit>

<lit> ::= 1 | 2 | 3 | 4 | ...

-

Now, 2 + 3 * 4 has a unique parse tree.

-

The reason addition is above multiplication here is that we

evaluate up the parse tree, so multiplication is evaluated

first (using call-by-value).

-

Call by name evaluates down, but it achieves the same

result as call-by-value, since it treats the arguments

as unevaluated ‘thunks’.

-

Operations that have the same precedence, like +, - and *,\

can also have ambiguous parse trees. Therefore, bracketing

becomes important.

-

Additionally, some operations are not associative, for example 1 - (2 - 3) isn’t the same as (1 - 2) -

3.

-

How can we make operations associate to the left or right?

Let’s adjust our BNF so that the + operator associates

to the left instead of the right:

<expr> ::= <expr> + <mexpr> |

<mexpr>

<mexpr> ::= <bexpr> * <mexpr> |

<bexpr>

<bexpr> ::= (<expr>) | <lit>

<lit> ::= 1 | 2 | 3 | 4 | …

-

Note that we just swapped round the terms in the

<expr> definition—it’s that easy! Now

1+2+3 will associate to ((1+2)+3).

-

A general rule for associativity is that the highest level

expression is on the same side as the associativity:

-

The highest level expression is on the left hand side for

left-associativity

-

The highest level expression is on the right hand side for

right-associativity

-

EBNF (Extended Backus-Naur form): A syntactic sugar for BNF. It doesn’t add anything

new, it just makes things easier to write.

-

Here’s a list of symbols that can be used in EBNF, as

apparent in the proposed ISO/IEC 14977 standard:

|

Usage

|

Notation

|

|

definition

|

=

|

|

concatenation

|

,

|

|

termination

|

;

|

|

alternation

|

|

|

|

optional

|

[ ... ]

|

|

repetition

|

{ ... }

|

|

grouping

|

( ... )

|

|

terminal string

|

“ ... “

|

|

terminal string

|

‘ ... ‘

|

|

comment

|

(* ... *)

|

|

special sequence

|

? ... ?

|

|

exception

|

-

|

<if_stmt> ::= if <expr> then <stmt> [else <stmt>]

The [else <stmt>] is optional because it’s enclosed in square

brackets

<switch_stmt> ::= switch (<var>) { { case <lit> : <stmt>; } }

The {case <lit> : <stmt>;} can be repeated because it’s enclosed in curly

braces

Names, scoping and binding

-

Name (aka identifier): reserved keywords with certain behaviours / functions in

a language

-

Variable: an allocated memory location

-

Nowadays, they’re more like placeholders for values

of a specific type.

-

Aliasing: when two variables point to the same location

-

An example of this in C is using pointers: int*ptr=x. ptr points to x’s location.

-

Binding: an association between an entity and an attribute

- Examples:

-

variable and its type

-

variable and its scope

-

There are two kinds of binding:

-

Static binding: occurs before execution and remains unchanged

during

-

This is prevalent in C where you explicitly set a type, or

in Haskell where the most general type is used

(polymorphism)

-

Type checking is done at compile time, so there are no

runtime errors concerning types

-

It’s also safer: if you mess up your types,

you’ll know at compilation time instead of

run-time.

-

Dynamic binding: first occurs during execution or changes during

-

This is prevalent in JavaScript where a variable can change

type depending on what value you put in it

-

You can code stuff faster, but things are more likely to go

wrong during execution

-

You can also create ad hoc polymorphic functions

-

Increases run-time of the program as the program needs

type-check and run the main thread at the same time. This is

negligible on modern computers though.

-

Kinds of variables (Memory Allocation):

-

Static variables: bound to a location in memory when we initialise

them

-

(e.g. a global C variable)

-

Stack variables: memory is allocated from the run-time stack and

deallocated when the variable is out of scope

-

(e.g. a local Java variable in a method)

-

Explicit heap variables: memory is bound explicitly

-

(e.g. using malloc in C or new in Java)

-

Implicit heap variables: the variable is only brought into being when it is

assigned values

-

(e.g. any JavaScript variable, you can’t make an

empty JavaScript variable)

-

The scope of a variable is the location in which you can use

it.

-

Local scope variables are only visible within their own

“block”

-

Global scope variables can be seen anywhere, unless it’s

hidden by a locally scoped variable.

-

Static (lexical) scope variables are variables where the scope can be

determined at compile time

-

Dynamic scope variables are global variables that are set a

temporary value within a scope, and their old value is set

back when that scope is exited. It’s not even a scope,

making this a misnomer.

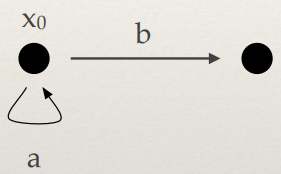

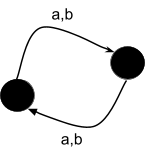

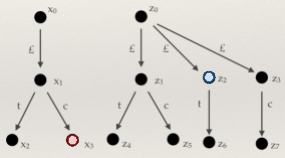

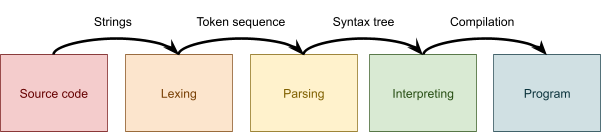

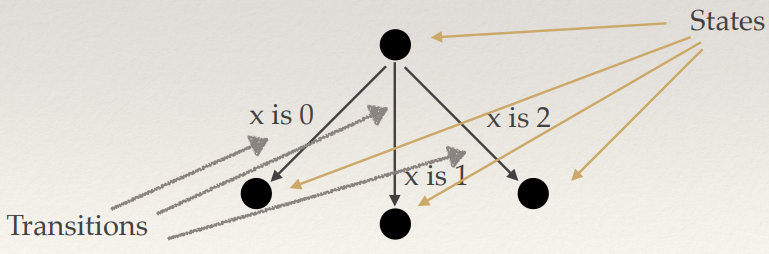

Lexing and parsing

-

Lexical analysis (lexing) is the process of pattern matching on text.

-

In it, we identify tokens and form an interface for the

parser.

-

Tokens are identified with regular expressions, and then

the lexer constructs an automaton, which is executed as

individual characters are read.

-

This is possible because the power of regular expressions

matches that of finite automata.

-

An example of a library that performs lexical analysis

would be “alex”.

-

After lexing, we usually get a list of tokens, which we can

feed into a parser to give it some kind of meaning.

-

If you want to look at lexical analysis more, geeks for

geeks has a good page on it: https://www.geeksforgeeks.org/introduction-of-lexical-analysis/

-

Parsing is the process of creating a syntax tree from a list of

tokens.

-

There are two ways of doing this:

-

LL grammars (leftmost derivations)

-

recursive descent parsers

-

Works like context free grammars

-

LR grammars (rightmost derivations)

-

Works like pushdown automata

-

Top-down LL parsing, or recursive-descent parsing, works by using context free grammars, like:

<expr> -> <term> {(+ | -)

<term>}

<term> -> <factor> {(* | /)

<factor>}

<factor> -> id | int_constant | (<expr>)

-

However, be careful of left recursion, because if we have

left recursion our parser could enter an infinite

loop.

-

For example, the grammar...

-

A → A + B

-

... has left recursion. The parser could keep recursing

into the left-hand side A and never stop.

-

A way to avoid this is by putting our grammar into Greibach

normal form, so each iteration captures at least one token

before recursing into any non-terminals.

-

An example of a library that does recursive-descent parsing

would be “parsec”.

-

Sometimes, in top-down LL parsing, we might need to know

the next few tokens to avoid ambiguity.

-

We can just make our grammar not left recursive (which is

called left factoring)

-

Or, we can use a parser LL(k) where k is the

lookahead.

-

Bottom-up LR parsing works similarly to a deterministic pushdown automaton

and uses a stack.

-

The statespace and transition functions are precomputed - this is known as an LR parsing table.

-

The time complexity of parsing is linear (the size of the

input string)

-

This requires preprocessing; an example tool would be

“yacc”.

-

Two classic C tools for building lexers and parsers are

“lex” and “yacc”,

respectively.

-

“Happy” is a Haskell variant for

“yacc”.

In Haskell

-

We have a variety of tools to pick from, like:

-

Alex (generates lexers)

-

Parsec (recursive descent library)

-

Happy (a compiler compiler, or a parser generator)

-

Alex is similar to the classic C tool “Lex”

-

An Alex input file consists of regular expressions, which

are the token definitions.

-

You invoke Alex with the following command:

-

alex { option } file.x { option }

-

Alex usually creates ‘file.hs’ which contains

the source for the lexer.

-

Alex provides a basic interface to the generated

lexer.

-

When Alex parses your Tokens.x file and gives you a

Tokens.hs file, that file provides definitions for:

-

type AlexInput

-

alexGetByte :: AlexInput -> Maybe (Word8, AlexInput)

-

alexInputPrevChar :: AlexInput -> Char

-

alexScanTokens :: String -> [Token] (used for lexing)

-

Basically, when Alex creates your Tokens.hs file, you can

‘import’ that into your Haskell code and start

using alexScanTokens to lex strings straight away!

-

The lexer is independent of input type.

-

There are other wrappers too, like:

-

“posn” wrapper provides more information about line and column numbers,

useful for syntax errors

-

“monad” wrapper state monad can keep track of current input and text

position

-

“monadUserState” wrapper extends monad wrapper, but includes the AlexUserState

type

-

Parsec is a library that combines small parsec functions to

build more sophisticated parsers.

-

Parsing with Parsec is top-down recursive.

-

Two important functions in Parsec are:

-

makeTokenParser similar to Alex

-

buildExpressionParser

-

Happy is a bottom-up parser generator, inspired by

yacc.

-

It’s generally faster than top-down recursive

parsing.

-

In fact, it’s so good that GHC uses it (the compiler

for Haskell).

-

Like Alex, it requires a separate input file that defines a

grammar.

-

When you run Happy, it outputs a compilable Haskell

module.

-

You can invoke Happy using the following command:

-

happy [ options ] file [ options ]

Testing

Introduction to types

-

Type system: a tractable syntactic method for proving the absence of

certain program behaviours by classifying (program) phrases

according to the kinds of values they compute

-

In English, a type system is a set of rules that assigns

types to various constructs of a computer program, such as

variables, expressions, functions or modules. In other

words, when you have “var x = 5” in a program, the type system is the one that goes

“that’s an integer”.

-

Types are abstract enough to hide away low-level details like memory, and

they’re also precise enough to let us formalise and check properties using

mathematical tools.

-

What do types do for us?

-

With type systems and types, we can:

-

ensure correctness and guarantee the absence of certain

behaviours

-

enforce higher-level modularity properties

-

enforce disciplined programming (unless you’re using

weak typing)

-

How do we use types?

-

Strongly typed: prevents access to private data, from corrupting memory

and from crashing the machine e.g. java

-

Weakly typed: do not always prevent errors but use types for other

compilation purposes such as memory layout e.g. C

-

Static typing: checks typing at compile time e.g. C

-

Dynamic typing: checks typing during runtime e.g. JavaScript

-

Strong vs Weak

-

With strong, types must be declared “whenever an

object is passed from a calling function to a called

function, its type must be compatible with the type declared

in the called function”.

-

Strong => Verbose

-

With weak typing, the wrong type may be passed to a

function, and the function is free to choose how to behave

in that case.

-

Weak type languages coerce data to be of the required

type.

-

Basically, strong vs weak is all about how types are

implicitly converted from one type to another, e.g. string

to an int.

-

A language that is blatantly strong is Java, because

it’s so adamant and strict about types (and is often

criticised so).

-

A language that is blatantly weak is Javascript, because it

implicitly converts everything (in fact, it’s very

hard to get a typing error in JS!):

|

Example

|

Result

|

Explanation

|

|

1 + true

|

2

|

The boolean value true is coerced to 1.

|

|

true - true

|

0

|

|

true == 1

|

true

|

|

[] + []

|

empty string

|

In case of an object (array is an object as

well), it is first converted to a primitive

using the toString method. This yields an empty

string for an empty array, and the concatenation

of two empty strings is an empty string.

|

|

true === 1

|

false

|

The triple equals operator prevents type

coercion. This is why most of the time you want

to prefer it over “==”, which

applies coercion.

|

|

91 - “1”

|

90

|

The string “1” is coerced to the

number 1.

|

|

9 + “1”

|

91

|

In this case, the “+” operator is

considered as string concatenation. 9 is coerced

to “9” and thus the result of

“9” + “1” is 91.

|

-

Static vs Dynamic

-

Static types use approximation because determining control

flow is undecidable

-

Compile time error can avoid run time errors

-

Static is good where types are used for memory layout, like

C

-

It is bad to use dynamic with critical systems, because

dynamic doesn’t check types until runtime

|

|

Weak

|

Strong

|

|

Dynamic

|

PHP, Perl, Javascript, Lua

|

Python, Lisp, Scheme

|

|

Static

|

C, C++

|

Ada, OCaml, Haskell, Java, C#

|

-

When do we check types?

-

With statically typed languages, type checking is done at compilation. Type checking done on the AST (Abstract Syntax Tree) is

called semantic analysis.

-

Type inference = compiler computes what type is, like

‘var’ in Javascript or in Java

-

A type checker is an algorithm written in some high level language that

checks the type of a program to see if it all holds

up.

-

How can we trust type checking algorithms?

-

If our program is secure with its types, it has type safety.

-

“Well-typed programs never go wrong” - Robin

Milner

-

What does it take to prove type safety?

-

“Well-typed programs never go wrong”

-

To prove this statement, you need to:

-

Know what well-typed means

-

Know what programs means

-

Know what never go means

-

Know what wrong means

-

We inductively define typing relation, like a proof in propositional logic

-

We inductively define reduction relation, defines how program evaluates through runtime

states

-

We inductively define description of error states of program

-

We use mathematical descriptions to try to prove that type

safety holds

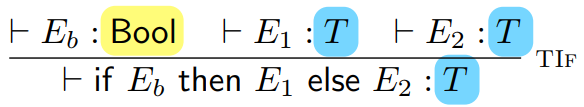

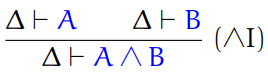

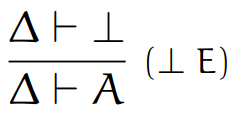

Type derivation

-

With programming languages, the compiler needs to check

that the program doesn’t consume data of the wrong

type.

-

Therefore, it needs to perform type derivation.

-

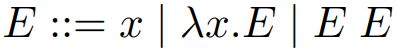

First of all, we’re going to define a toy language

for our examples:

-

T, U ::= Int | Bool

-

E ::= N | true | false | E < E | E + E | x |

-

| if E then E else E

-

| let (x : T) = E in E

-

Each ‘fragment’ of the program needs a

type.

-

We can define a typing relation written like this:

-

Which means “E has type T”, where E is the

program and T is the type.

-

Examples are:

-

⊢ false : Bool

-

⊢ 56 : Int

-

⊢ “KING CRIMSON: IT JUST WORKS” :

String

-

We may need to approximate the type if we can’t

determine it statically.

-

We can apply this typing relation to programming languages

using inductive rules.

-

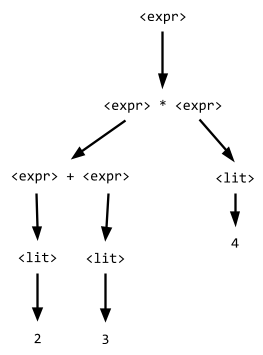

As a quick refresher, there are two kinds of rules with

inductive rules: base cases, and inductive steps.

-

The base case is our smallest, atomic rule, and is usually

used for literals. For example:

-

The inductive step takes a program and splits it up into

smaller rules, for example:

-

In this syntax, the relation below the line is split up

into the relations above the line.

-

With the base cases, they can’t be split up into

anything, because base cases are atomic.

-

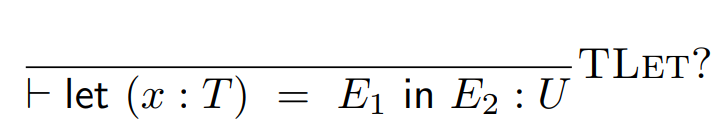

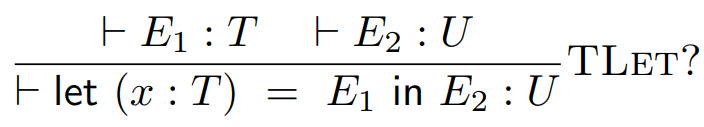

But what if we have some program that defines variables

with types, like this:

-

How do we split this up using inductive rules?

-

If we do something like:

-

We won’t remember what type x is when we split up E2, so we’ll run into a problem.

-

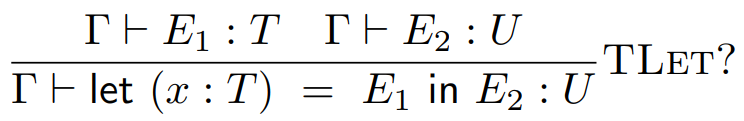

We need to remember what the type of x is! For this, we’ll use type environments.

-

Type environment: A mapping from variables to types. It’s represented

by the capital Greek letter gamma: Γ

-

It goes to the left of the tack, like this:

-

So now, we can remember variable types! But first, we need

to add a mapping from x to its type into our type environment when we split

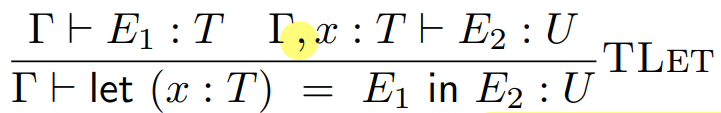

up this let expression. We can do that by writing:

-

The comma means that we’re adding the mapping from x to type T into our type environment gamma (Γ). So now, when we split up E2 even further, we can remember what type x is.

-

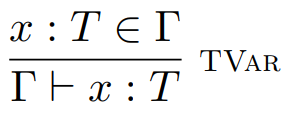

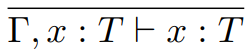

Now that we have type environments, we can define a rule

for variables:

-

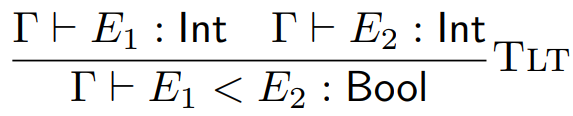

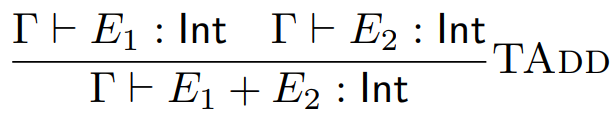

We can also define rules for more programs, such as

comparisons:

-

Just a note, we’re adding lambda calculus to our toy

language:

-

T, U ::= Int | Bool

-

E ::= n | true | false | E < E | E + E | x |

-

| if E then E else E

-

| let (x : T) = E in E

-

λ (x : T). E

- E E

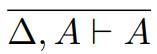

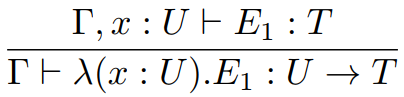

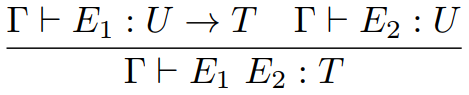

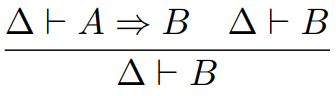

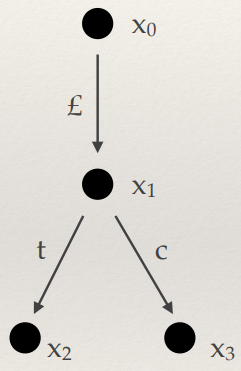

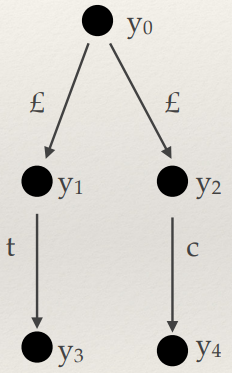

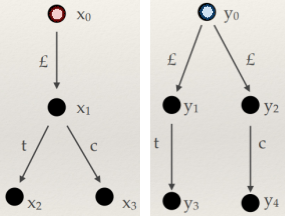

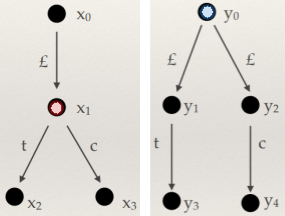

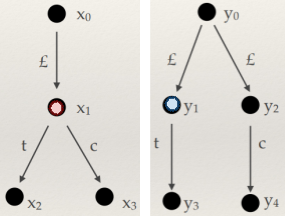

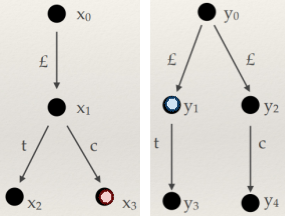

Type checking and type inference

-

Formally, a set of inference rules S defined over programs used to define an inductive

relation R is called syntax directed if, whenever a program (think AST) E holds in R then there is a unique rule in S that justifies this. Moreover, this unique rule is

determined by the syntactic operator at the root of E.

-

Informally, a language compiler is syntax directed if it uses a parser to figure out what the program does. In

other words, the semantics of a program (what it does)

depends on the syntax itself (what is written, so pretty

much any compiler used today is syntax directed).

-

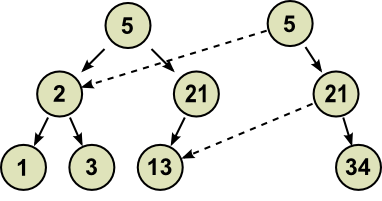

As it happens, ASTs and type derivation trees are exactly the same shape.

-

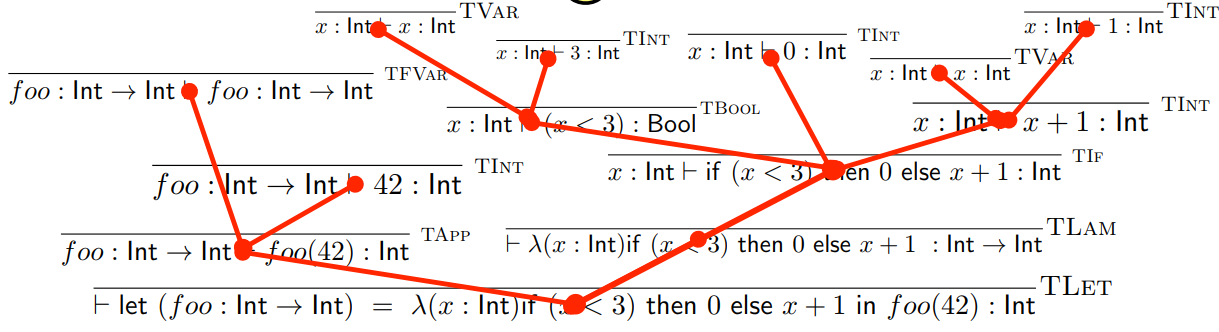

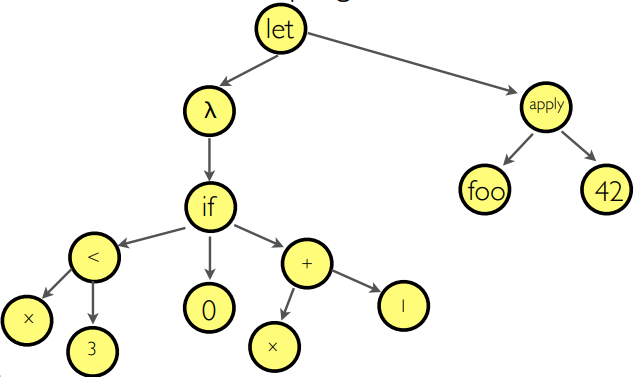

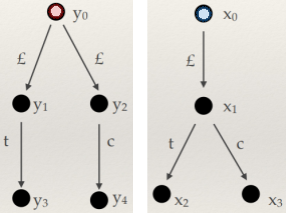

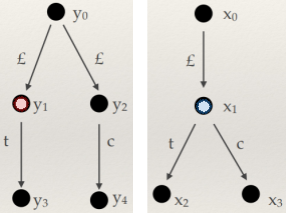

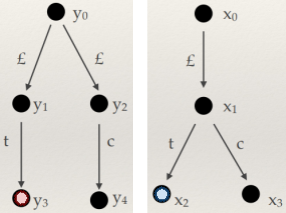

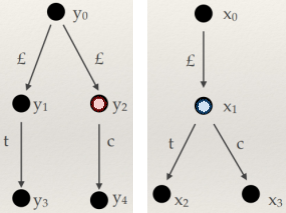

Here’s an example, with the program:

-

if (x < 3)

- then 0

-

else (x + 1)

-

If you flipped that red tree upside down and compared it

with that AST, they’d be the same tree. Trust

me.

-

Why does this matter? Well, instead of having two trees,

one for interpreting and one for types, why not just do type

checking in the AST? This is basically type inference, which

we will touch on in a moment.

-

Another thing is the inversion lemma, which is inferring the type of a subprogram given the

type of the whole program.

-

For example, we have no idea what type E1 is on its own, but if we see it in this sort of

context:

-

Now it’s obvious that E1 is a boolean type. That’s inversion

lemma!

-

The inversion lemma can be applied to nearly every term in

our toy lambda language:

-

If Γ ⊢ n : T then T is Int

-

If Γ ⊢ b : T then T is Bool

-

If Γ ⊢ x : T then x : T is in the mapping

Γ

-

If Γ ⊢ E1 < E2 : T then Γ ⊢ E1 :

Int and Γ ⊢ E2 : Int and T is Bool

-

If Γ ⊢ E1 + E2 : T then Γ ⊢ E1 :

Int and Γ ⊢ E2 : Int and T is Int

-

If Γ ⊢ if E1 then E2 else E3 : T then Γ

⊢ E1 : Bool and Γ ⊢ E2 : T and Γ

⊢ E3 : T

-

If Γ ⊢ λ (x : T) E : U’ then

Γ , x : T ⊢ E : U and U’ is T →

U

-

If Γ ⊢ let (x : T) = E1 in E2 : U then Γ

, x : T ⊢ E2 : U and Γ ⊢ E1 : T

-

If Γ ⊢ E1( E2 ) : U then Γ ⊢ E1 : T

➝ U and Γ ⊢ E2 : T for some T

-

That’s great, and now we have type inference, but we

have one problem.

-

What if we don’t know the types?

-

For example, if we had a function that adds two arguments

together, they could be integers, floats, strings etc.

-

We can’t have a single type for that! So what do we

do?

-

We use type variables and unification!

-

Type variable: a symbolic value to represent some unknown type with

constraints.

-

We’ve already been using type variables in Haskell,

where a would be a type variable:

-

(+) :: Num a => a -> a -> a

-

Unification: the process of testing an argument against the

constraints of a type variable

-

Here’s an example of unification with the

program:

-

let foo = λ(x) if (x < 3) then 0 else (x +

1)

-

in let cast = λ(y) if (y) then 1 else 0

-

in cast (foo (42))

|

Step

|

Explanation

|

Program

|

Types

|

|

0

|

We haven’t done anything yet.

|

let foo = λ(x) if (x < 3) then 0 else

(x + 1)

in let cast = λ(y) if (y) then 1 else

0

in cast (foo (42))

|

N/A

|

|

1

|

We will now simplify the first let.

We’re going to create type variables for

the variable ‘foo’ and the

expression that ‘foo’ is equal to,

and the rest of our program.

|

let foo = λ(x) if (x < 3) then 0 else (x +

1)

in let cast = λ(y) if (y) then 1 else

0

in cast (foo (42))

|

a = ?

b = ?

|

|

2

|

Now we’re going to dig deeper into that λ(x)

We can give type variables to the parameter x and the lambda body.

Also, now that we have c and d, we can build up

the type variable a from that, because we know

it’s a function (it’s got lambda in

it), so a is c -> d.

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else

0

in cast (foo (42))

|

a = c ⟶ d

b = ?

c = ?

d = ?

|

|

3

|

Let’s look more at that if

statement.

Obviously, x < 3 is a boolean, so no

problems here. Plus, this condition can only

work if x is an integer, so it’s likely

that the type variable c is an integer.

0 is most definitely an integer, and x + 1

looks like it could be an integer too, so it

would be fair to say that the type variable

‘d’ is an integer.

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else

0

in cast (foo (42))

|

a = c ⟶ d

b = ?

c = Integer

d = Integer

|

|

4

|

Now let’s split up that blue bit (the bit

we haven’t evaluated yet).

Let’s give everything type variables

first, like ‘cast’ and the

expression that cast equals, and cast(foo(42)).

This also means we can say b = f, because the

type of a let is the type of the expression

it’s passing variables to.

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else 0

in cast (foo (42))

|

a = c ⟶ d

b = f

c = Integer

d = Integer

e = ?

f = ?

|

|

5

|

Let’s unfold that λ(y) and give it the type variable:

e = g -> h

where

(y) is type g

and

if (y) then 1 else 0 is type h

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else 0

in cast (foo (42))

|

a = c ⟶ d

b = f

c = Integer

d = Integer

e = g ⟶ h

f = ?

g = ?

h = ?

|

|

6

|

Let’s unfold the if statement in that y

lambda.

It’s clear that y is a boolean, because

it’s the only thing in the condition, so g

is a boolean type.

1 and 0 are integers, so it makes sense that h

would be an integer.

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else 0

in cast (foo (42))

|

a = c ⟶ d

b = f

c = Integer

d = Integer

e = g ⟶ h

f = ?

g = Boolean

h = Integer

|

|

7

|

Let’s unfold the applications now.

The application is cast(foo(42)), and we already know the type of cast; it’s h, so f = h.

By unwinding foo(42), we can tell that c is an

integer because an integer is being passed in as

an argument. We’ve already got the type of

c, and it all matches up fine.

But here’s where the problem lies: since

the return type of foo is being passed into

cast, that must mean g = d. However, g is a boolean and d is an integer.

Objection! There is a glaring contradiction in

this source code!

|

let foo = λ(x) if (x < 3) then 0 else (x + 1)

in let cast = λ(y) if (y) then 1 else 0

in cast (foo (42))

|

a = c ⟶ d

b = f

c = Integer

d = Integer

e = g ⟶ h

f = h

g = Boolean

h = Integer

g = d

Boolean = Integer?

|

Reasoning about programs

Intro to semantics

-

The semantics of a program is the meaning of the program;

what does it actually do?

-

There are formal ways of giving the semantics of a

program:

-

Denotational semantics - mapping every program to a mathematical

structure in some domain

-

Operational semantics - inductively maps programs and values they

produce, or states the programs can transition between are

used

-

Axiomatic semantics - use formal logic, like Hoare logic, to prove

properties of a program

Denotational semantics

-

With denotational semantics, we map our programs to elements of a semantic

domain.

-

Semantic domain: a set of possible values a program can be (it’s

basically a type)

-

For example, the semantic domain of a program that adds two

integers could be the set of all integers.

-

To do denotational semantics, we say that if there exists

a

-

Γ ⊢ E : T

-

there must exist a:

-

[[ E ]]σ = V

- Where:

-

σ is a mapping from free variables in Γ to a value in the semantic domain

-

V is the value in the semantic domain that E represents

-

If this is true, then we can say that σ semantically entails Γ, or σ ⊨ Γ

-

Here is an example with the toy lambda language:

-

[[ true ]]σ

= true

-

[[ false ]]σ

= false

-

[[ n ]]σ

= n (the corresponding natural

in N)

-

[[ E < E’ ]]σ

= true if [[ E ]]σ < [[

E’ ]]σ

-

[[ E < E’ ]]σ

= false otherwise

-

[[ E + E’ ]]σ

= [[ E ]]σ + [[ E’

]]σ

-

[[ if E then E’ else E’’ ]]σ = [[

E’ ]]σ if [[ E ]]σ = true

-

[[ if E then E’ else E’’ ]]σ = [[

E’’ ]]σ if [[ E ]]σ = false

-

[[ λ (x : T) E ]]σ

= v ↦ [[ E ]]σ [ x ↦ v ]

-

[[ let (x : T) = E in E’ ]]σ = [[ E’

]]σ [ x ↦ [[ E ]]σ ]

-

[[ E E’ ]]σ

= [[ E ]]σ( [[ E’ ]]

)σ

-

There’s criticism about denotational semantics, such

as:

-

It’s just renaming programs

-

The model is “too big” (Int -> Int defines all the functions, even ones that are impossible)

-

Modelling recursion is very hard

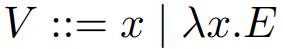

Operational semantics

-

With operational semantics, we use inductively defined relations to map programs to

values.

-

It’s very similar to type checking!

-

There’s two kinds of operational semantics:

-

In big step semantics, we have relations from programs to values.

-

We split these relations to other, smaller relations along

with some conditions that must hold true.

-

These relations are written as E ⇓ V, where E is the

program and V is the value.

-

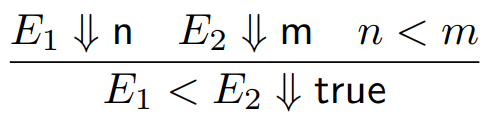

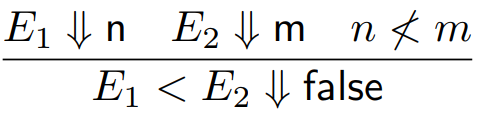

For example, for E1 > E2 ⇓ true to be true:

-

E1 must evaluate to some number n

-

E2 must evaluate to some number m

-

n > m must be true

-

So as you can see, we’ve split up our relation into

smaller relations and we’ve also got a condition along

with it that must hold true.

-

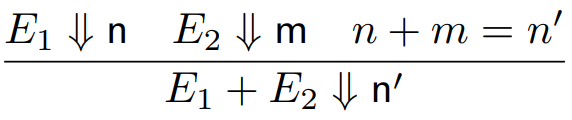

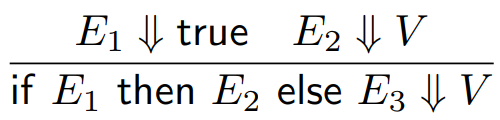

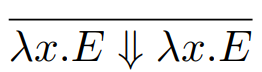

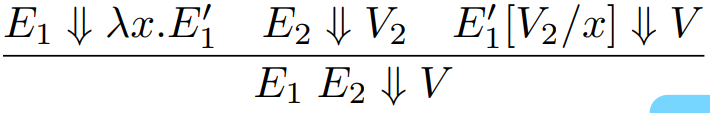

There are other rules too, such as:

-

For literals, or rules that can’t be split up any

further, we write:

-

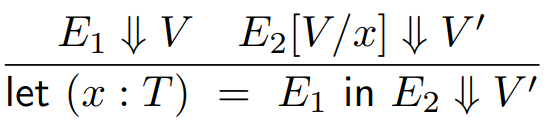

To do things like substitution, we can do:

-

Where E2[V/x] is an expression where V has been substituted in place of x within E2.

-

Like type checking, we can apply this for the whole program

to check the semantics of a program.

-

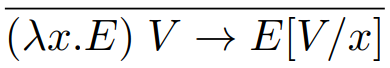

Small step semantics is similar, but instead of analysing the parts

that make up the program, we analyse the steps the program

takes to go from one state to another.

-

To put it in layman’s terms, when you do the sum

(6 / 3) + 2, you don’t analyse how the divide operation works,

then how the plus symbol works, then each number etc. do

you? No! You take it one step at a time and simplify the

whole thing until you get one number at the end.

-

So if we used small step with this sum, we’d have

something like:

-

(6 / 3) + 2 -> (2) + 2

-

(2) + 2 -> 2 + 2

-

2 + 2 -> 4

-

That’s the difference between small step and big step

semantics.

-

By using small step, we can see more clearly how a program

behaves.

-

In small step semantics, we prove that each step of the

computation is logically sound.

-

More formally, we use an inductive relation of the form E -> E’ where E is a step in the computation and E’ is the next step after E.

-

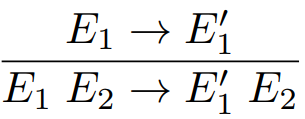

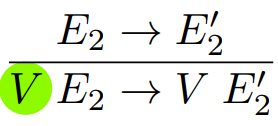

There are lots of small step relations, so I’ll

explain a few so you can get the general gist of it:

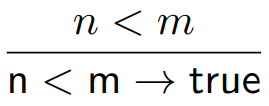

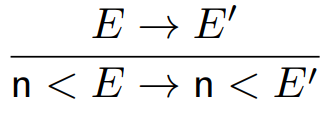

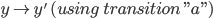

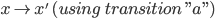

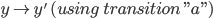

|

Small step relation

|

Explanation

|

|

|

Here, the program n < m moves to just ‘true’. The

only way that this can happen is if n is less

than m, so the predicate n < m is at the top.

|

|

|

Here, the program moves from n < E to n < E’. If the program is simply replacing E with

E’, then that means E must be equivalent

to E’ in some way. Therefore, the step E -> E’ is at the top.

|

|

|

Here, the program is moving from an if

statement to the program that should run if the

condition is true. We can see that the condition

is the literal ‘true’, so this

program makes perfect sense. There’s no

need to prove anything further because

there’s no ambiguity or room for doubt;

this is exactly what should happen.

|

-

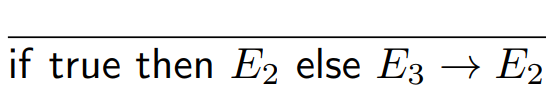

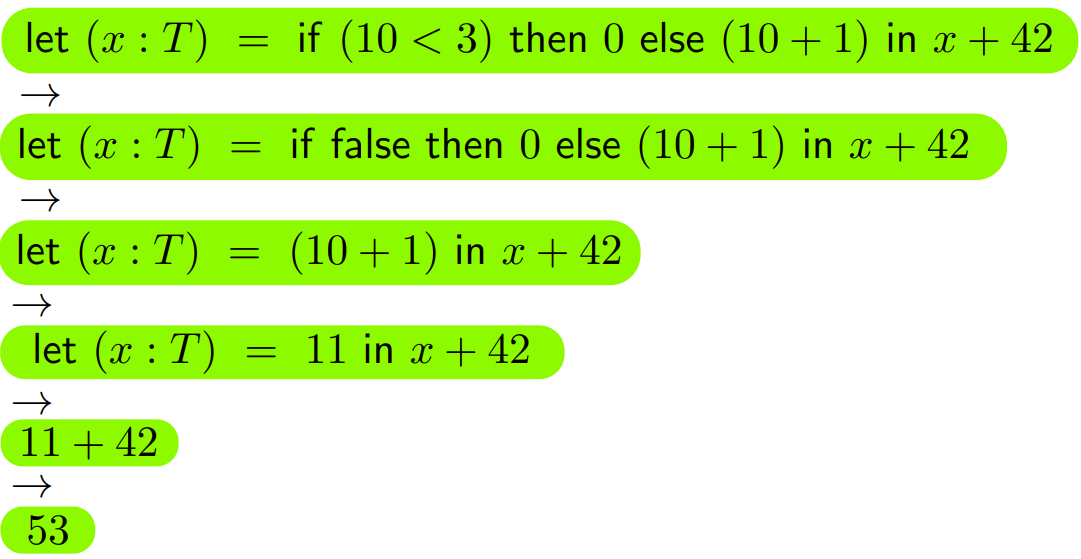

Let’s use big step and small step in an actual

example, so we can see them in action!

-

We have our program:

-

let (x : Int) =

-

if (10 < 3) then 0 else (10 + 1)

-

in x + 42

-

The value is 53, and we want to ensure that the semantics

of our program is correct.

-

With big step semantics, we would do this as follows:

-

We start with the whole program and split it up until we

reach just literals.

-

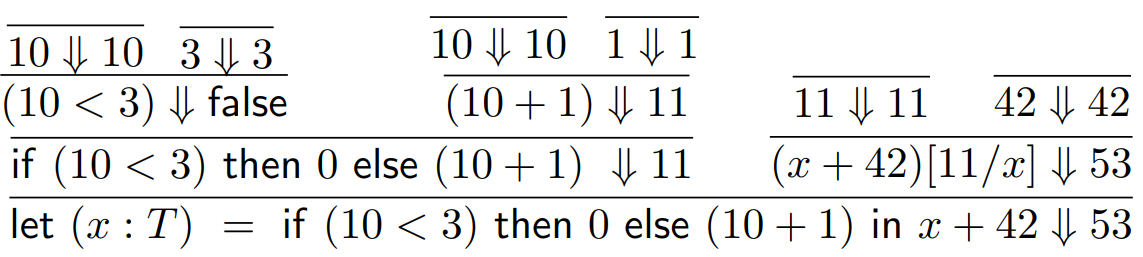

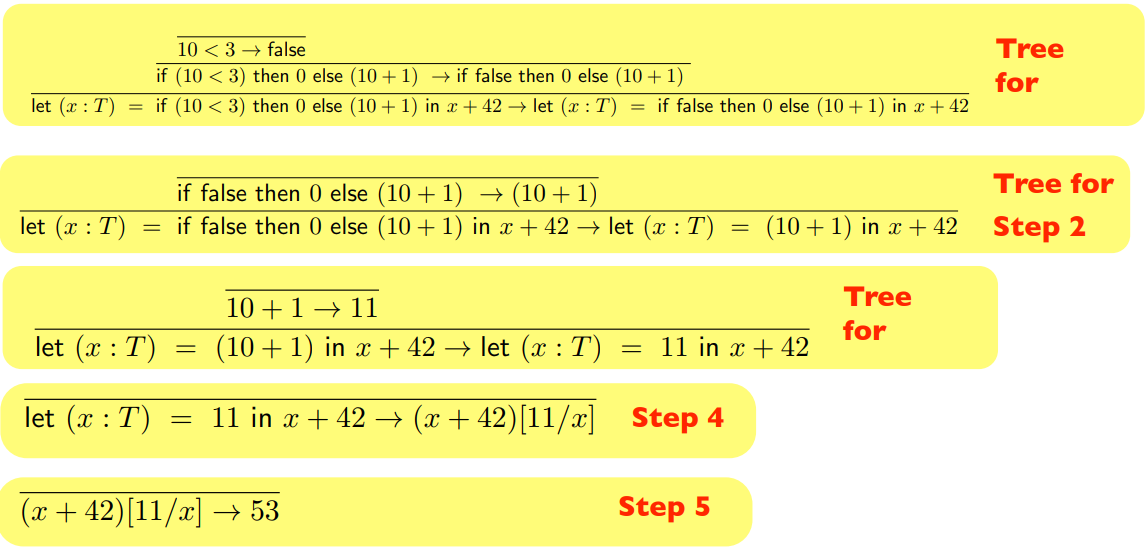

With small step, we would do this:

-

We analyse and prove each step of the computation, not each

term in the program.

-

Sometimes, we don’t even need the proof tree:

-

As you can see, big step and small step semantics do the

same thing in different ways.

-

To formalise this we first define the following:

-

If and only if there exists a (possibly empty)

sequence:

-

E = E1 → E2 → … → En = E’

Type safety

-

Operational semantics sometimes isn’t enough.

-

It doesn’t handle all ill-typed programs.

-

For example, operational semantics would not fail with this

program:

-

if (if (true) then false else 0) then true else 34

-

Why? Because that inner if statement will always return

‘false’ and not ‘0’ (and

‘0’ is not of the boolean type which is required

for the outer if statement), so operational semantics lets

this slide.

-

We need some way to find out if our program is type

safe!

-

How can we formally declare our language as “type

safe”?

-

It must satisfy two properties: preservation and

progress.

-

Preservation: at each step of the program, the type does not

change

-

Progress: well-typed terms never get stuck

-

Let’s go into more detail!

-

When we try to interpret the program:

-

if (if (false) then false else 0) then true else 34

-

We get a type error in the inner if statement, because it

returns ‘0’ when it should be returning a

boolean. This is called getting stuck.

-

It gets stuck because if you try to do small step

operational semantics:

-

if (if (false) then false else 0) then true

else 34

-

-> if (0) then true else 34

-

-> ???

-

There is no relation to continue this process, so we are

stuck at this step and can’t go further.

-

How do we avoid this?

-

In small step semantics, we can just model a run-time error

if we get stuck at a step and can’t go any

further.

-

In big step semantics, it’s a bit trickier. If we

were to do while loops, or recursion, we can’t tell

the difference between a stuck term and a divergent

term.

-

Therefore, we’d need an error relation, ↯

- For example:

-

Would hold for any integer literal ‘n’

-

You’d need all possible cases to handle all possible

errors, but even still, this isn’t enough.

-

Big step semantics only looks at the whole program and the

result, not each computation along the way.

-

Big step can give us weak preservation, but small step can

give us actual preservation.

Preservation

-

Formally, weak preservation goes like this:

-

if ⊢ E : T and E ⇓ V then ⊢ V : T

-

Informally, weak preservation holds if all programs have

the same type as their values (5 + 5 is of type integer, it

evaluates to 10, which is of type integer, so weak

preservation holds).

-

Formally, preservation goes like this:

-

if ⊢ E : T and E → E‘ then ⊢

E’ : T

-

Informally, preservation holds if each step of the

computation has the same type as the computation before

it.

-

Basically, preservation holds if the types don’t

change. Simple, right?

-

How do we prove preservation in our toy language? We need to do a

proof by induction.

-

Our base cases are the literals, Integer and Boolean.

-

The only relations from the literals are:

-

if ⊢ True : Bool and True → True then ⊢

True : Bool

-

if ⊢ False : Bool and False → False then ⊢

False : Bool

-

if ⊢ n : Integer and n → n then ⊢ n :

Integer

-

Which all trivially satisfy preservation, so our base cases

are fine.

-

Now for our inductive cases. We need one inductive case per

rule, but we’ll just do the if statement for this

example.

-

Our if statement is of the form:

-

if E1 then E2 else E3 : T

-

where E1 : Bool

-

and E2, E3 : T

-

There are two possible cases:

-

E1 is some expression and can be reduced from E1 to E1’

-

Remember, in this proof, we have one inductive case for

each rule. So whatever rule E1 falls under, we have an inductive case we can apply

it to, and we’ll see that E1’ is also a boolean. Basically, we can apply this same

proof to E1 to show that E1’ is also a boolean.

-

This means the if statement will become:

-

if E1’ then E2 else E3 : T

-

... and the types will still hold.

-

E1 is some literal and the expression is either E2 or E3.

-

If E1 is some boolean literal, the expression will be

either E2 or E3.

-

But both E2 and E3 are of type T anyway, so the types will hold.

-

This is only for the if statement, but there are lots of

other inductive cases, covering all the other rules of the

language.

Progress

-

It’s not enough to have preservation. What if we have

a well-typed system that allows stuck terms?

-

For example, what if you had a lambda function, sitting on

its own, not being applied to anything? It’s not a

value, like an integer or a boolean. We only want to compute

values, not terms!

-

Formally, progress means:

-

if ⊢ E : T then either E → E’ (for some

E’) or E is a value V

-

Informally, progress means that if you have some term, you

must be able to perform some step towards a value or it must

be a value.

-

When a language has progress, you can’t have terms

that can’t simplify down to values. This means, for

example, you can’t have a function, on its own, that

isn’t being applied to some argument.

-

How do we prove progress in our toy language? We’ll use a proof

by induction, very similar to preservation!

-

Our base cases are the literals, Integer and Boolean.

-

If we have a literal, it trivially satisfies the theorem

because they’re already values.

-

For our inductive cases, again, we need one for each rule,

but we’ll use the if statement as an example.

-

Our if statement is of the form:

-

There are two possible cases:

-

E1 is not a value

-

Like in the preservation proof, we can use the inductive

hypothesis of this very proof on E1 to show that E1 must have some step it can simplify to, E1’.

-

Therefore, this if statement has a step:

-

if E1 then E2 else E3

-

-> if E1’ then E2 else E3

-

This satisfies the theorem.

-

E1 is a value

-

If E1 is a value, that means this if statement has two

possible steps:

-

if true then E2 else E3

-

-> E2

- or

-

if false then E2 else E3

-

-> E3

-

This satisfies the theorem.

-

As you can see, for all cases, if statements must have a

step. This is just one of the many inductive cases for each

of the language rules.

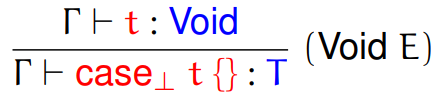

Structured types

Structured types

-

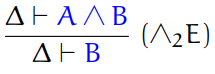

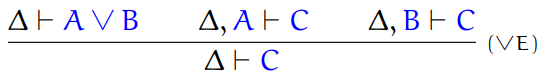

How do we do type derivation on structured types?

-

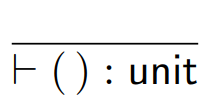

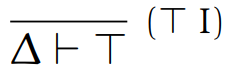

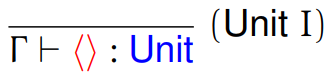

First of all, the unit type.

-

This type is really easy to understand. This type can only

have one value: the unit value.

-

It looks like this: ()

-

It’s the type of Java methods that take no

parameters, such as: toString()

-

This also means we can thunk expressions into

functions.

-

Thunking: wrapping an expression into a function for later use, for

example:

-

function() { console.log(“Hello World!”);

}

-

console.log(“Hello World!”);

-

You can also unthunk too, when you unwrap an expression from a

function.

-

The type rule for the unit type is this:

-

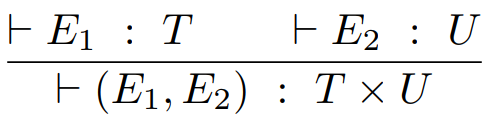

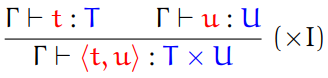

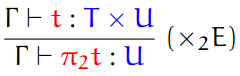

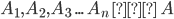

Second, we have pairs and tuples.

-

We can represent the types of these using the cartesian

product:

-

For tuples, we just extend this:

-

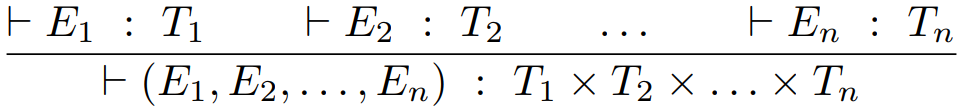

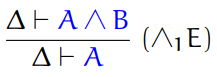

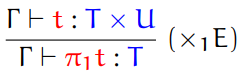

Third, we have destructors.

-

Destructor: a function which unwraps a wrapped value

-

Projection: a special type of destructor which unwraps a tuple,

usually a pair

-

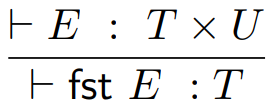

The two projections that unwraps a pair are fst and snd, which you’ve already seen in Haskell.

-

The type rules for these are:

-

For tuples of size n, we need n projection functions.

-

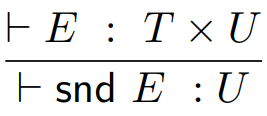

Fourth, we have record types.

-

Record: a special type of tuple in which all the elements are

referenced by a label.

-

C structs and Java objects are all records.

-

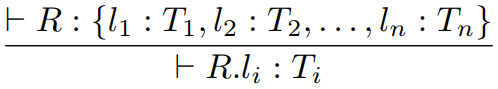

The destructor for records are called selections, and are written like so:

-

Exactly the same as Java, right?

-

The syntax goes like this:

|

Record

|

C struct

|

Java object / class

|

|

Student : {

name : String,

ID : Integer

}

|

struct Student {

char* name;

int ID;

};

|

class Student {

String name;

int ID;

};

|

-

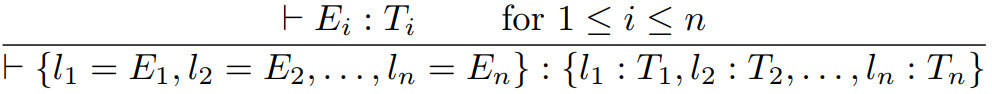

The type rules look like this:

|

Type rule in weird maths language

|

Type rule in English

|

|

|

Each value in the record Ei has a respective type Ti

|

|

|

Pretty much the same, except we use

selections

|

-

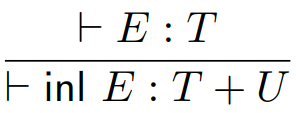

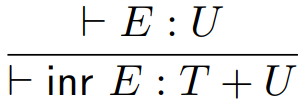

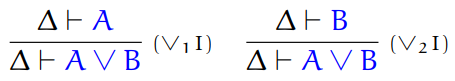

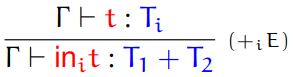

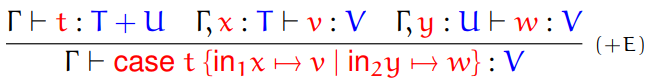

Fifth, we have sum types.

-

Sum: a type that can handle values of either one type or the

other type

-

They are written like so:

-

The constructors for this type are called injections.

-

The two injections are called inl and inr, where inl states that this is a value of the left type and inr states that this is a value of the right type.

-

Here are plenty of examples:

-

inl 10 could be a type of Int + Int

-

inl true could be a type of Bool + Int

-

inl true could be a type of Bool + Float

-

inl 67.5 could be a type of Float + Bool

-

inr ‘a’ could be a type of Int + Char

-

inr 78 could be a type of String + Int

-

inr Nothing could be a type of Int + Maybe

-

inr () could be a type of () + ()

-

Here are the type rules for these injections:

-

The only destructor for the sum type is pattern matching,

or case:

-

case E of inl x -> E1 | inr x -> E2

-

There’s something weird about sum types.

-

They’re not unique types. Remember that inl true value we had above? See that it can be of type Bool + Int, or Bool + Float, or Bool + anything?

-

This is a complication for type checking. How do we get

around this?

-

We can have unique names for the injections based on the

type it’s referring to.

-

For example, we could have inlI for Bool + Int, and inlF for Bool + Float.

-

Keep in mind that sum isn’t exclusively binary; we

could have lots of types, like T1 + T2 + T3 + ... + Tn.

-

These special sums with uniquely named injections have a

name. They’re called variants!

-

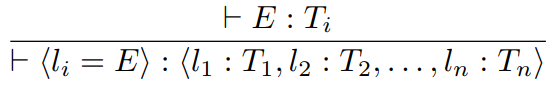

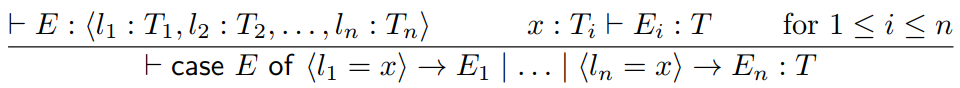

Sixth, we have variants.

-

In the same way that records are just tuples with names,

variants are just sums with names.

-

A variant is written as follows: <l1 : T1, l2 : T2 , ... , ln : Tn>

-

The constructor of a variant is the injection named with

labels: <li = E>

-

So, with our example of Bool + Int and Bool + Float, we could have:

-

E1 = <inlI : Bool, inrI : Int>

-

E2 = <inlF : Bool, inrF : Float>

-

So when we construct a type from one of these variants,

such as <inlF = true> or <inrI = 56>, there is no ambiguity over which sum those values are

from, because the labels tell us so.

-

The type rules look like this:

|

Type rule in weird maths language

|

Type rule in English

|

|

|

When we construct a value from this variant,

the value must have the type that the

label’s injection is assigned to.

|

|

|

When we do a pattern match, or a case statement, the value inside the case statement must be a variant.

Additionally, for each ‘case’ within

the case statement, the pattern match’s type

must match up with the variant’s type (and

the return type of the whole case statement must match up too,

obviously).

|

-

This isn’t completely perfect. For example, what if

we have:

-

E1 = <boolType : Bool, intType : Int>

-

E2 = <boolType : Bool, floatType : Float>

-

Then we try and use <boolType = true>, which variant does this belong to? E1 or E2?

-

Don’t worry; there’s no seventh type which

solves this! In Haskell, it uses the latest variant in which

this constructor is defined, so Haskell would pick E2, assuming that E1 was defined before E2.

-

You may be thinking “What’s the point of

variants? Will I ever use them in a programming language?

No. PLC worst module”

-

You’ve actually been using variants this whole time,

in Haskell, when you use the data keyword!

-

For example, enumerations are just variants with unit types:

-

Days = <Mon : (), Tues : (), Wed : (), Thu : (), Fri :

(), Sat : (), Sun : ()>

-

To get a ‘Monday’ instance: <Mon = ()>

-

It’s just that the unit types are abstracted away by

syntactic sugar, so you’re left with:

-

Days = <Mon, Tues, Wed, Thu, Fri, Sat, Sun>

-

To get a ‘Monday’ instance: <Mon>

-

data Days = Mon | Tues | Wed | Thu | Fri | Sat | Sun

-

You can also mix and match labels of unit type and labels

of non-unit type.

-

This is exactly what the Maybe structure does. Maybe is

just a variant!

-

Maybe = <Nothing : (), Just : a>

-

Maybe = <Nothing, Just : a>

-

data Maybe = Nothing | Just a

-

You want another example?

-

What if I told you that the syntax of lambda calculus can

be modelled in one big variant?

-

Lambda = <Value : Char, Abs : Char × Lambda, App :

Lambda × Lambda>

-

data Lambda = Value Char | Abs Char Lambda | App Lambda

Lambda

-

Where Char is used as a variable name.

-

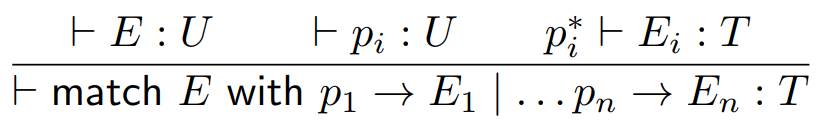

What if we wanted to use pattern matching in our toy language?

-

We can do so like this:

-

Where pi* just encompasses all the information about the pattern

matched object.

-

It gets a little complicated because now we’ll need a

type system for the language of patterns, but we won’t

worry about that.

-

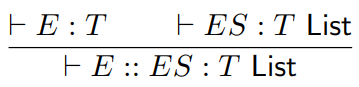

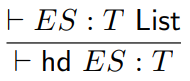

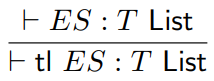

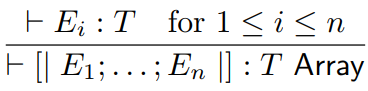

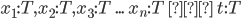

Seventh, we have lists and arrays.

-

Lists are easy, we can model them like we do in Haskell,

with the cons operator ::

-

We can also model the head and tail functions:

-

Arrays feel like they’d be the same, but

they’re not structural types. You can’t build

them up, they have no constructor and you can’t

pattern match on them.

-

That won’t stop us from modelling them, though! We

can treat arrays a bit like records, except the indexes are

labels:

-

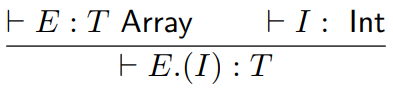

Lastly, we have functions.

-

Functions, like arrays, are not structural types. However,

we can still model their types.

-

We can just have T -> U, where T is the parameter type and U is the return type.

-

T or U can also be a function, allowing us to have

higher-order functions.

-

The type rule for functions is:

-

Where “fun(x : T) -> E” is the function, x is the parameter and E is the return value.

Subtyping

-

First of all, what is subtyping?

-

Subtyping: loosens our strict type checking and allows us to use

subset types.

-

That sounds weird, but if you’ve used any OOP

language, you’ve done subtyping before.

-

Before we go into detail, subtyping is a property of

polymorphism.

-

Polymorphism: literally means “many shapes”

-

There are three kinds of polymorphism:

-

Parametric: C++ templates, Java generics etc.

-

Subtype: Inheritance in OOP, duck typing

-

Ad Hoc: overloading functions and methods

-

Ad hoc is boring, so we won’t talk about that.

Parametric is interesting, but that’s not why

we’re here.

-

This topic is all about subtype polymorphism (big

surprise).

-

There’s two kinds of subtyping:

-

Nominal: explicitly calling a type a child type of some parent

type. Basically inheritance in OOP.

-

Structural: if a type has all the properties of a parent type, then

we can just call it a child type. Basically, that’s

duck typing. “If it looks like a duck, swims like a

duck, and quacks like a duck, then it probably is a

duck”. The wikipedia article shows a good example.

-

In the following examples, I will be using the types

“Dog” and “Animal”, where

“Dog” is a subtype of

“Animal”.

-

Every property of an Animal is also the property of a

Dog.

-

This property is called subsumption.

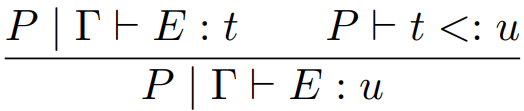

-

More formally, if T is a subtype of U then every value of

type T can also be considered as a value of type U.

-

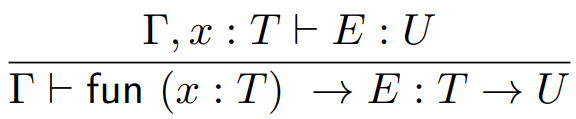

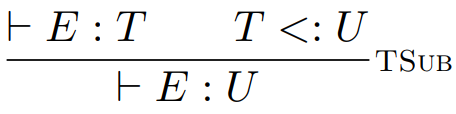

Here is the type rule for subsumption:

-

As you can see, we’ve defined a new subtyping

relation, <:

-

When you see T <: U, just think of it as T extends U, if that helps.

-

This is applied to both structural and nominal

subtyping.

-

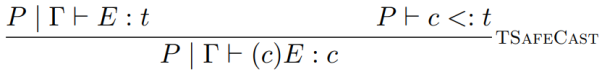

But how do we define this subtyping relation? This is where

the differences between structural and nominal show.

-

In nominal subtyping, even if two types have the exact same

properties, they are unique.

-

In Java, for example:

|

class Address {

String name;

String address;

}

|

class Email {

String name;

String address;

}

|

-

Both Address and Email are identical in properties, but

because they’ve been defined as two different types,

they’re unique. A bit like twins; genetically,

they’re the same, but they’re both their own

person.

-

So how do we define the subtyping relation with nominal

subtyping?

-

Just like in Java, we explicitly state child and parent

relations with extends.

-

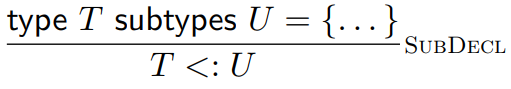

So we will do that with our type rule (except instead of extends, it’s subtypes):

-

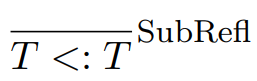

That’s not enough, we need to define reflexivity and

transitivity, too:

|

|

|

|

A Dog can be treated as a subtype of a

Dog

|

If a JackRussell is a Dog, and a Dog is an

Animal, then a JackRussell is an Animal

|

-

In Java, every type is a subtype of Object, as well, which

helps (except primitives).

-

There’s one small problem though: what if the parent

type is a triple and the child type is a pair?

-

Which two elements does the child inherit?

-

Java fixes this by only allowing classes to have

subtypes.

-

In structural subtyping, everything is implicit. It relies on the

structure to tell if a type is a child type.

-

Some examples will use JavaScript, so apologies if you

don’t know JS!

-

For primities, it’s easy:

-

short <: int

-

float <: double

-

Everything else is based on structure, for example a pair

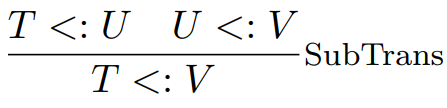

of Dogs is also a subtype for a pair of Animals.

-

Here’s a type rule for pairs:

-

For records, it gets a bit more complicated.

-

There are three properties of record subtyping that we can

define:

-

Depth subtyping: where the types in a record are subtypes of the

types in another record.

- For example:

-

R1 : { a : Dog, b: Cat } <: R2 : { a : Animal, b : Animal }

-

... so if you had a function f that expected a parameter of type R2, you could pass in a value of type R1 and it’ll work due to depth subtyping.

-

I wrote an example in TypeScript. It’s a bit long, so

if you know TypeScript, look at the link here for a more in-depth, practical example.

-

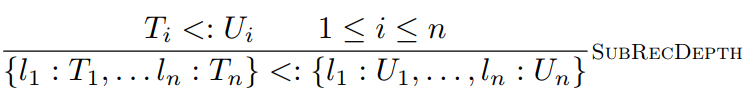

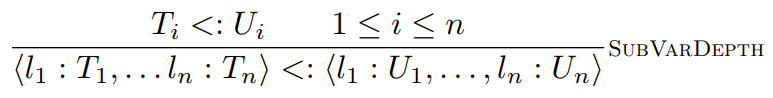

Here is a type rule for it:

-

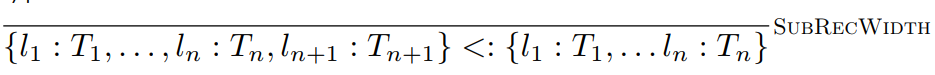

Width subtyping: where the child type has more properties than

the parent type.

-

JavaScript supports width subtyping.

-

Here’s two examples, one with records and one with

JS:

|

Records

|

JavaScript

|

|

Dog : {

colour : String,

age : Int,

breed : String

}

<:

Animal : {

colour : String,

age : Int

}

|

// This function expects an animal

function getAge(animal) {

return animal.age;

}

let dog = {

colour: “brown”,

age: 10,

breed: “Golden

Retriever”

};

getAge(dog); // This will work because of width

subtyping

|

-

Permuted fields: fields can be in any order

-

JavaScript also supports this.

-

Here are two examples again:

|

Records

|

JavaScript

|

|

Dog : {

colour : String,

age : Int,

breed : String

}

<:

Dog : {

breed : String,

colour : String,

age : Int

}

|

// This function expects an animal

function getAge(animal) {

return animal.age;

}

let animal1 = {

colour: “brown”,

age: 10

};

let animal2 = {

age: 4,

colour: “red”

};

// These will both work because of this

subtyping rule

getAge(animal1);

getAge(animal2);

|

-

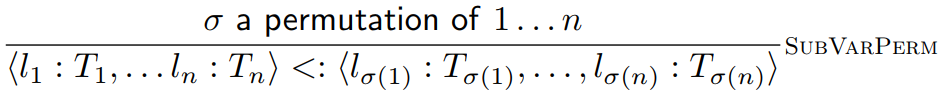

Here is the type rule for this:

-

Not all languages adopt these rules, for example Java

doesn’t adopt depth subtyping.

-

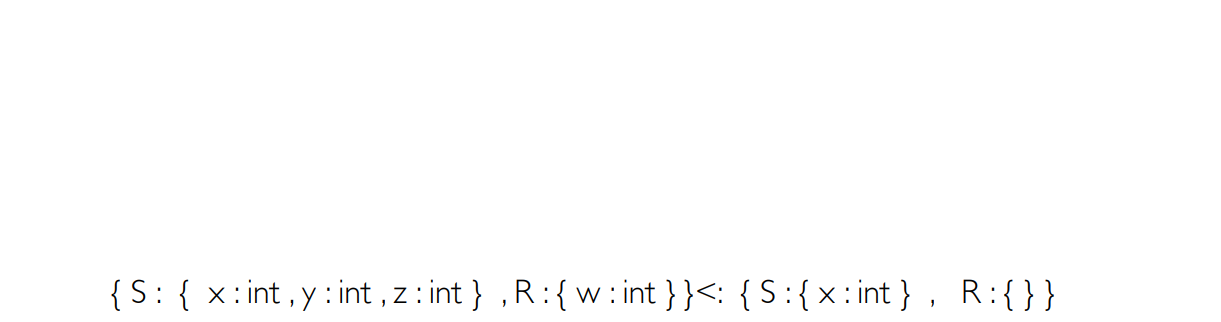

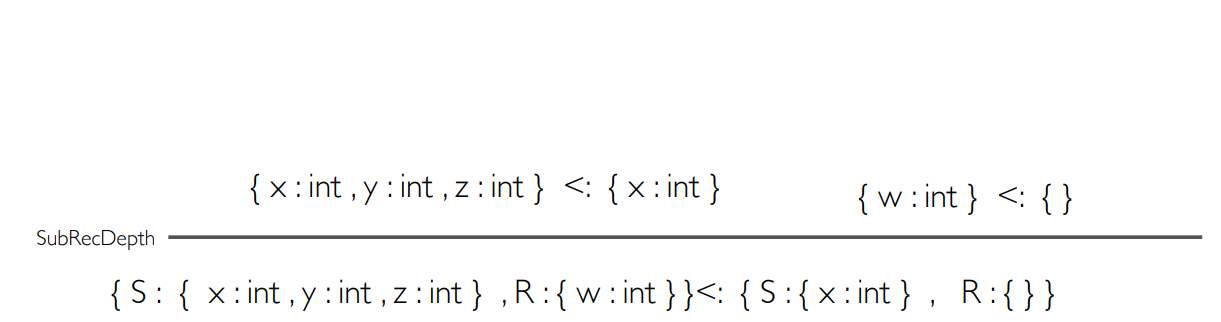

Want an example of type checking with record

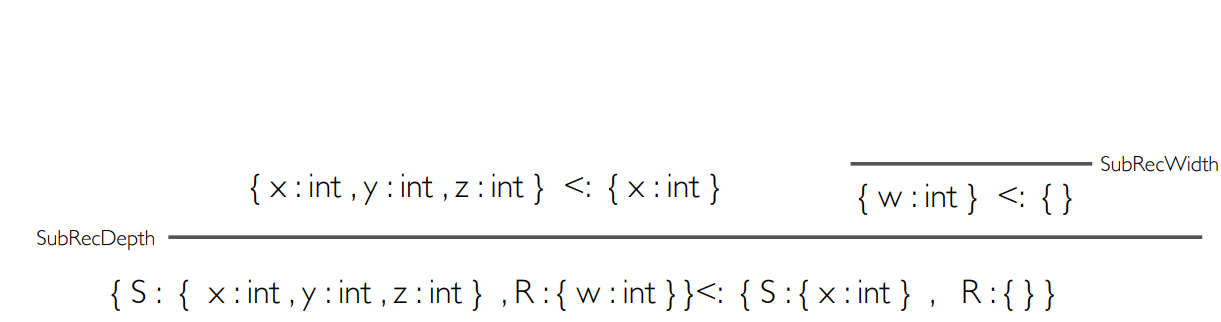

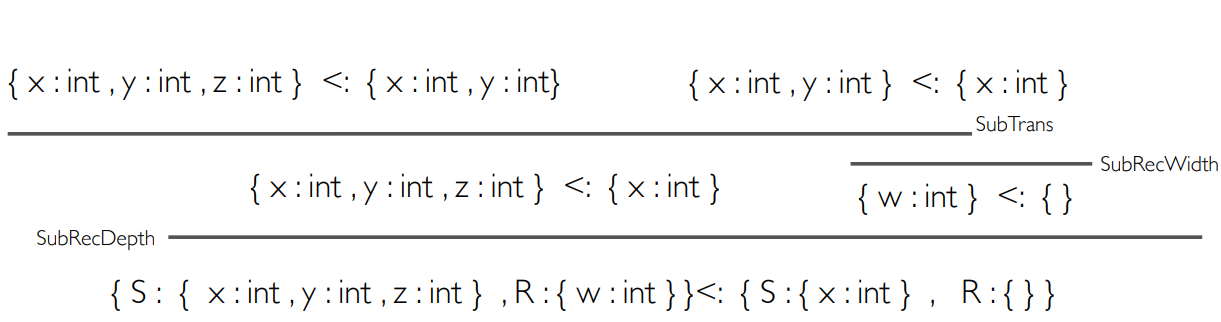

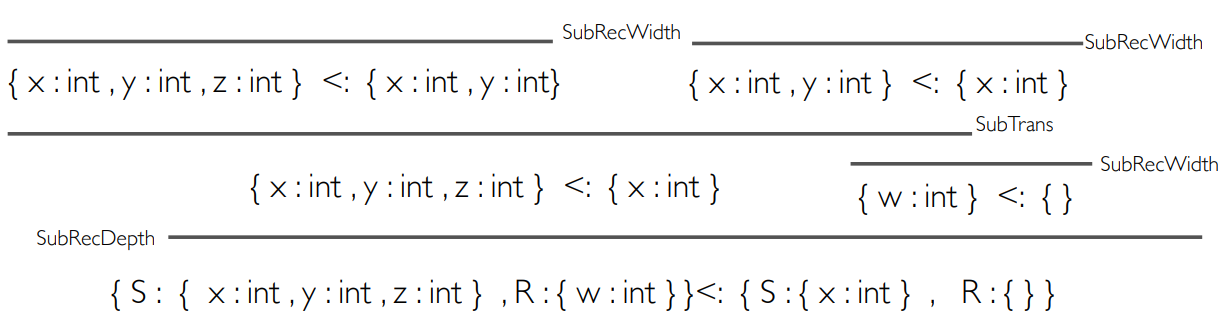

subtyping?

|

How do we know that this is type-safe? We use

the type rules above!

|

|

|

|

We’ll use the depth subtyping rule,

because the left S is a subtype of the right S

and the left R is a subtype of the right

R.

After that, we now have two terms to

simplify.

|

|

|

|

We’ll now use the width subtyping rule on

the right term, because the left record has one

extra property compared to the empty right

record.

This ends that branch, so now we can do the

left.

|

|

|

|

Here, we can use the transitivity type rule to

split up the terms.

You may be thinking “why not just use the

width subtyping rule?”

The width subtyping rule only supports one

extra property, so we need to use the

transitivity rule to split up the terms until

the records differ by one property. Don’t

worry though, it’s not a solid rule;

it’s just a formality.

|

|

|

|

Finally, we use the width subtyping rules to

finish the branches, since the records now

differ by one property.

|

|

|

-

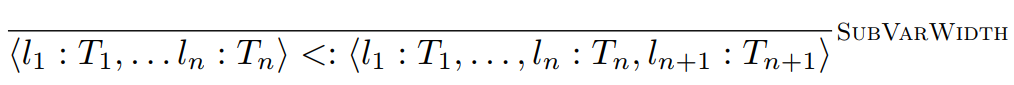

What about variants?

-

It’s pretty much the same, except the width subtyping

rule is swapped.

-

Instead of the left having one more property than the

right, it’s the right that has one more property than

the left!

-

Let’s just say we have our days of the week (variants

of the unit type):

-

Days : {Mon, Tue, Wed, Thu, Fri, Sat, Sun}

-

But we have another variant that adds an extra day:

JoJoDay

-

JDays : {Mon, Tue, Wed, Thu, Fri, Sat, Sun, JoJo}

-

If we have a value of type Days, it’s also a value of type JDays, because all the labels in Days exist in JDays.

-

If we have a value of type JDays, it’s not a value of Days, because the label JoJo doesn’t exist in Days.

- Therefore:

-

Days <: JDays

-

{Mon, Tue, Wed, Thu, Fri, Sat, Sun} <: {Mon, Tue, Wed,

Thu, Fri, Sat, Sun, JoJo}

-

Here is the type rule for that:

-

However, depth and permutations are the same:

-

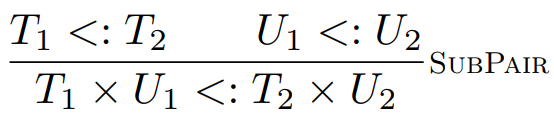

Do you remember when we defined subtyping for pairs?

- For example:

-

if T <: U and V <: V then T x V <: U x V

-

Do you notice how the order of T and U are preserved in the

pair?

-

With T <: U, it’s T on the left and U on the right. In T x V <: U x V, it’s still T on the left and U on the right.

-

This is called covariance, and the pair type constructor is covariant.

-

On the other hand, let’s define some type constructor Foo that takes in a type and creates a new type, like Foo Int, Foo Char etc.

-

Covariance: If Foo is defined such that if T <: U, then Foo U <: Foo T, then Foo would be contravariant.

-

It’s contravariant because in T <: U, it’s T on the left and U on the right, but in Foo U <: Foo T, it’s U on the left and T on the right. They’re swapped!

-

An example of a contravariant type constructor is a

function (in the argument type).

-

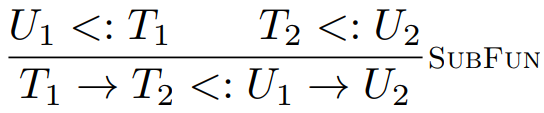

Here is a type rule for that:

-

As you can see, it’s covariant in the return type,

but contravariant in the argument type!

- Why?

-

Let’s give this some context:

-

Let’s say we have two types now: Dog extends Animal,

and Guppy extends Fish.

-

Let’s also say we have a function, f, that maps

Animals to Guppies.

-

We can pass in an Animal to our function f.

-

Because a Dog is an Animal, we can also pass in a Dog to

f.

-

The function f returns a Guppy.

-

Since a Guppy is a Fish, f can also return Fish.

-

Putting this together, f can map Animals to

Guppies...

-

... but it can also take in Dogs (which is a child

type)...

-

... and it can return Fish (which is a parent type).

-

Still doesn’t make much sense? Well, if you know

TypeScript, you’re in luck! Here is an implementation

of this example here.

-

Here is a Java example:

|

// note that Number is a super class of Integer

and Double

public static Number foo(Number x) {

return

x.intValue();

}

Number integer = foo(2.5);

|

-

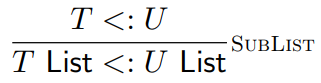

List types are covariant.

-

If you have a list of Dogs, you can cast it as a list of

Animals.

-

Here is a type rule for them:

-

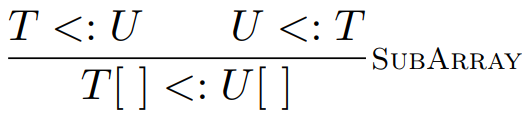

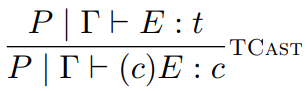

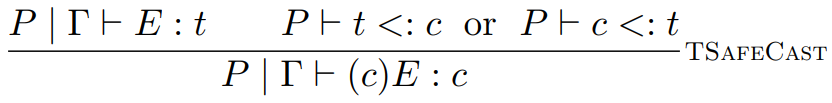

Arrays are weird.

-

Reading from an array is covariant, but writing to an array

is contravariant. Weird, huh?

- Why is this?

-

Let’s go back to our typing of Dog extends Animal

-

Let’s say you have an array of Dogs.

-

If you were to read an element from Dogs, you could cast it as an Animal, because a Dog is an Animal. It’s the same logic as to why lists are

covariant.

-

Now let’s try and describe writing to an array.

-

We can’t write an Animal to the Dog array, because an Animal could be anything: a Cat, a Bird etc.

-

So the only things we can write are Dogs or subtypes of Dogs.

-

That is, unless Animals were a subtype of Dogs. If Animal extends Dog, then we could put Animals into the Dog array. This sounds confusing and unintuitive but keep reading.

-

To write to an array, we need to swap the positions from Dog <: Animal to Animal <: Dog. Only then will writing to an array work, hence making it

contravariant.

-

So how do we have both reading from and writing to an

array?

-

We need to have Dog <: Animal and Animal <: Dog.

-

Obviously, in real-life, that relationship between dogs and

animals does not exist, but because arrays require this

subtype relationship, this makes arrays invariant.

-

However, arrays in Java are not invariant. They’re

covariant. Even though it’s more flexible, this makes

Java arrays not type-safe!

-

Click here to see an example of Java arrays not being

type-safe.

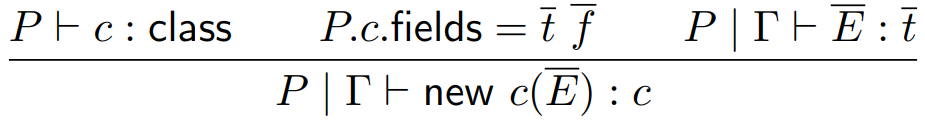

Objects

-

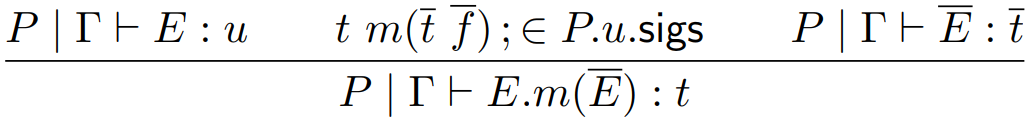

Don’t worry, there’s nothing new in this topic.

We’re going to apply what we’ve learned to

formally ensure Java Jr is well-typed!

-

First of all, we need to define Java Jr through a set of

declarations:

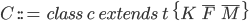

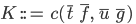

|

Declaration

|

Explanation

|

|

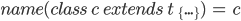

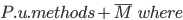

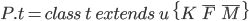

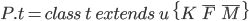

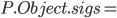

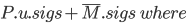

|

The main program, called  , contains a set of all the classes within the

program, , contains a set of all the classes within the

program,  . .

|

|

|

A class,  , needs to have a name , needs to have a name  , a parent class , a parent class  , a constructor , a constructor  , as well as a set of fields , as well as a set of fields  and a set of methods and a set of methods  . .

|

|

|

A constructor,  , needs to have the name of the class it

constructs, , needs to have the name of the class it

constructs,  , along with the inherited properties, , along with the inherited properties,  , and its own properties, , and its own properties,  , as parameters to the constructor. , as parameters to the constructor.

It must also start with the  method, along with the inherited properties as

the arguments, method, along with the inherited properties as

the arguments,  . Afterwards, the members must be assigned

their values as supplied by the parameters, . Afterwards, the members must be assigned

their values as supplied by the parameters,  . .

|

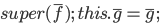

|

|

A field declaration,  , must have a type, , must have a type,  , and a name, , and a name,  . .

|

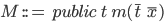

|

|

A method  must have a return type must have a return type  , a name , a name  , parameters , parameters  and a return expression and a return expression  . In Java Jr, that’s all a method can do.

Additionally, a method must return

something. . In Java Jr, that’s all a method can do.

Additionally, a method must return

something.

|

|

|

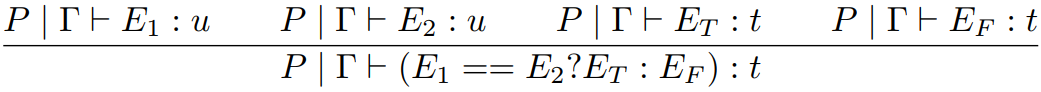

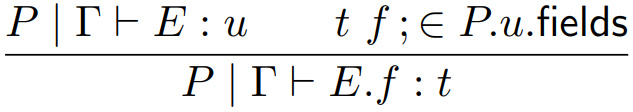

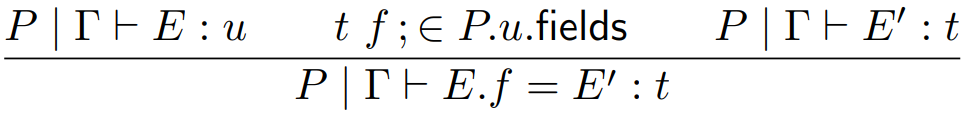

An expression  can be: can be:

-

A literal

-

A variable

-

A method call

-

A field reference

-

A field assignment

-

A class instantiation

-

A ternary expression (like an if statement,

but it returns something)

|

-

Java Jr is a subset of Java; you can compile Java Jr code

using javac and it’ll work.

-

Now we’ve defined the grammar for Java Jr,

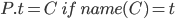

let’s give our language some context:

|

Rule

|

Explanation

|

|

|

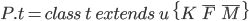

The  function takes in a class and returns the

name of the class. function takes in a class and returns the

name of the class.

|

|

|

We can only reference a class from the program  (remember, (remember,  contains a set of classes) if the field

name we use is the same name as the class

(pretty obvious). contains a set of classes) if the field

name we use is the same name as the class

(pretty obvious).

|

|

|

The  class has no methods (in Java it does,

but in Java Jr it doesn’t). class has no methods (in Java it does,

but in Java Jr it doesn’t).

|

|

|

When a class  inherits from class inherits from class  , the methods within , the methods within  are the methods defined in are the methods defined in  as well as the methods defined in as well as the methods defined in  . .

|

|

|

The  class has no fields. class has no fields.

|

|

|

When a class  inherits from class inherits from class  , the fields within , the fields within  are the fields defined in are the fields defined in  as well as the fields defined in as well as the fields defined in  . .

|

|

|

The  class has no method signatures (think of

them like how C declares functions). class has no method signatures (think of

them like how C declares functions).

|

|

|

When you try to reference the method signatures

of a class  , you’ll get all the signatures of the

methods of class , you’ll get all the signatures of the

methods of class  as well as the signatures of the parent

class as well as the signatures of the parent

class  . .

|

|

|

If you try to reference the signatures of a

method, you’ll just get the signature of

that method.

|

-

Phew! Well, now we’ve fully defined Java Jr.

-

If you still don’t fully understand how Java Jr

works, don’t worry. Just pretend we’re working

with a watered down version of Java (which is what Java Jr

is). Java Jr only exists for demonstrative purposes

anyway.

-

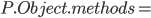

Now we can start implementing the typing relation and

subtyping relation for Java Jr!

-

First of all, let’s do the types.

-

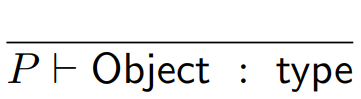

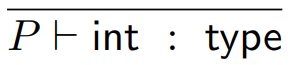

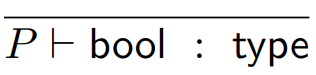

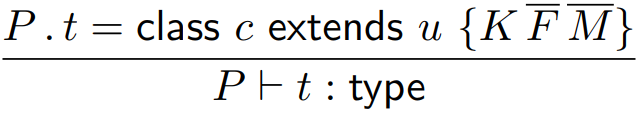

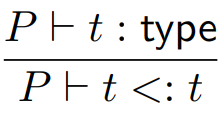

The first relations we’ll do are base cases:

|

Typing relation

|

Explanation

|

|

|

The Object class is a type.

|

|

|

The int primitive is a type.

|

|

|

The bool primitive is a type.

|

|

|

is a type, if it’s a class defined

within the program is a type, if it’s a class defined

within the program  . .

|

|

Subtyping relation

|

Explanation

|

|

|

The reflexive rule for types. Any type can be

seen as a subtype of itself.

|

|

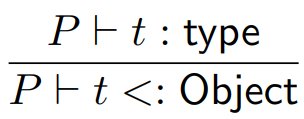

|

Every type is a subtype of the  class (in Java Jr, this includes

primitives, but in Java primitives are not

included). class (in Java Jr, this includes

primitives, but in Java primitives are not

included).

|

|

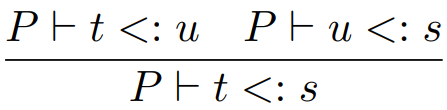

|

The transitive rule for types. If a JackRussell

is a Dog, and a Dog is an Animal, then

JackRussell is an Animal.

|

|

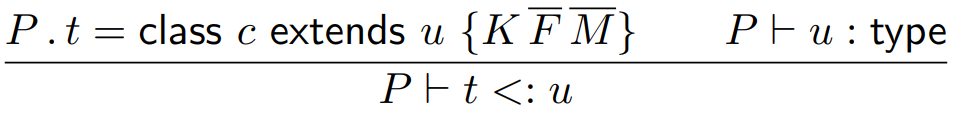

|

If a child class inherits from a parent, then

the child class is a subtype of the parent (yes,

this includes primitives as well, but not for

normal Java).

|

-

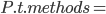

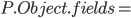

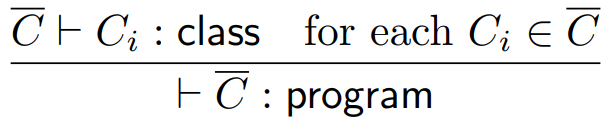

Now, let’s make sure all of the classes themselves

are well-typed:

-

This means that every class in the program must be

well-typed.

-

Here’s syntactic sugar for this:

-

Personally, I don’t like this syntax, because

it’s ambiguous as to what type

is, but it means the same thing as the top one.

is, but it means the same thing as the top one.

-

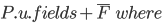

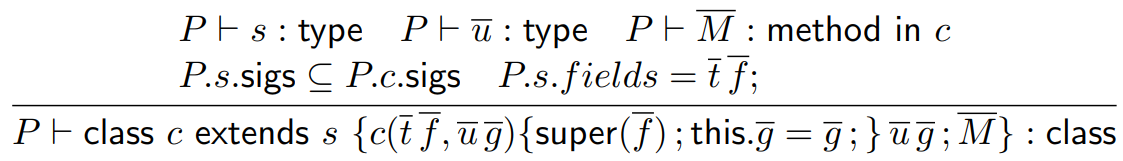

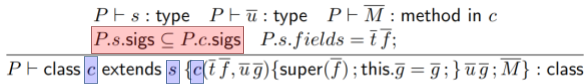

Time to do a type rule for class definitions!

-

This one’s a bit big, so let’s split it up and

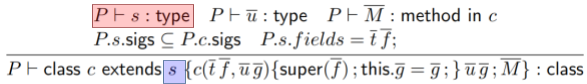

explain it piece by piece:

|

This bit states that the parent class must be

some valid type. Simple enough.

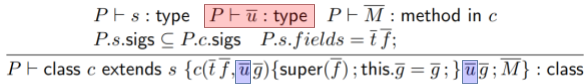

|

|

|

|

This bit states that the new fields that  introduces must also have valid

types. introduces must also have valid

types.

|

|

|

|

This bit states that all the methods in  must be valid. must be valid.

|

|

|

|

This bit states that all the signatures in the

parent class must also exist in the child class.

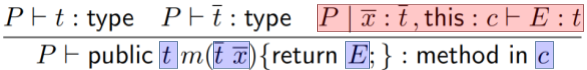

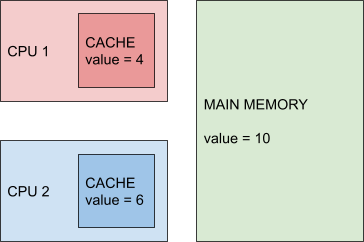

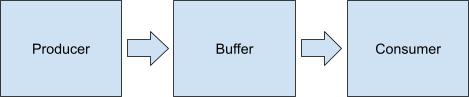

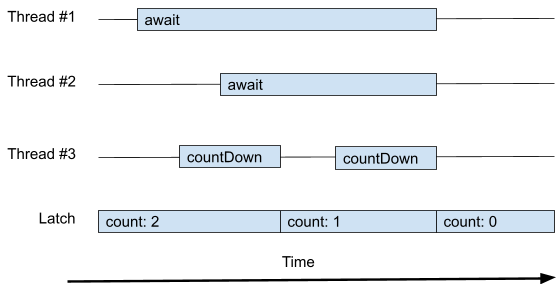

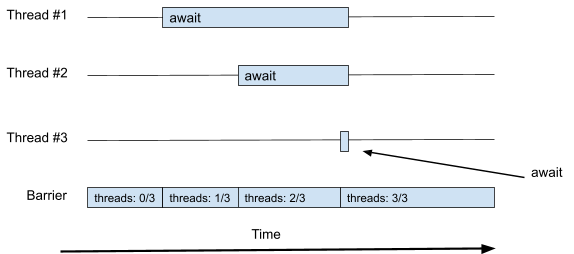

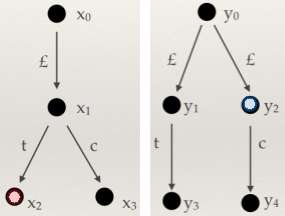

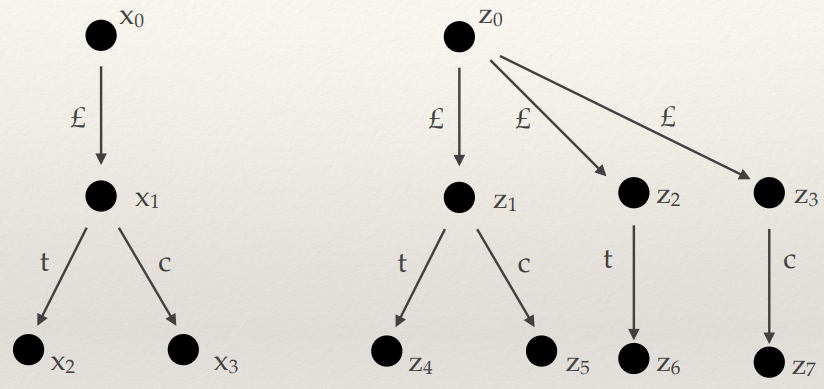

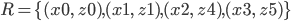

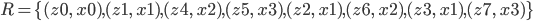

The child class doesn’t have to override