Intelligent Systems

Matthew Barnes, Mathias Ritter

Intro 5

Classical AI 6

Blind Search 6

Problem Types 6

Single-state Problem Formulation 8

State vs Nodes 10

Search Strategies 10

Searching using Tree Search 10

Repeated States 13

Bidirectional Search 14

Heuristic Search 14

Best-first Search 14

Greedy Best-first Search 14

A* Search 15

Admissible Heuristics 18

Consistent Heuristics 18

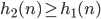

Dominance 18

Relaxed problems 18

History and Philosophy 18

Local Search 21

Hill-climbing Search 21

Simulated Annealing Search 23

Local Beam Search 24

Genetic Algorithms 25

Constraint satisfaction problems 26

Adversarial Games: Minimax 34

Alpha-beta Pruning 39

Planning 43

Recent Advances in AI 51

Classification 51

Decision Trees 51

Entropy 52

Conditional Entropy 54

Information Gain 54

Nearest Neighbour 57

Neural Networks 63

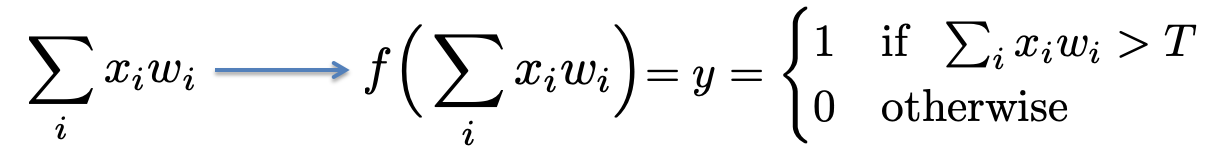

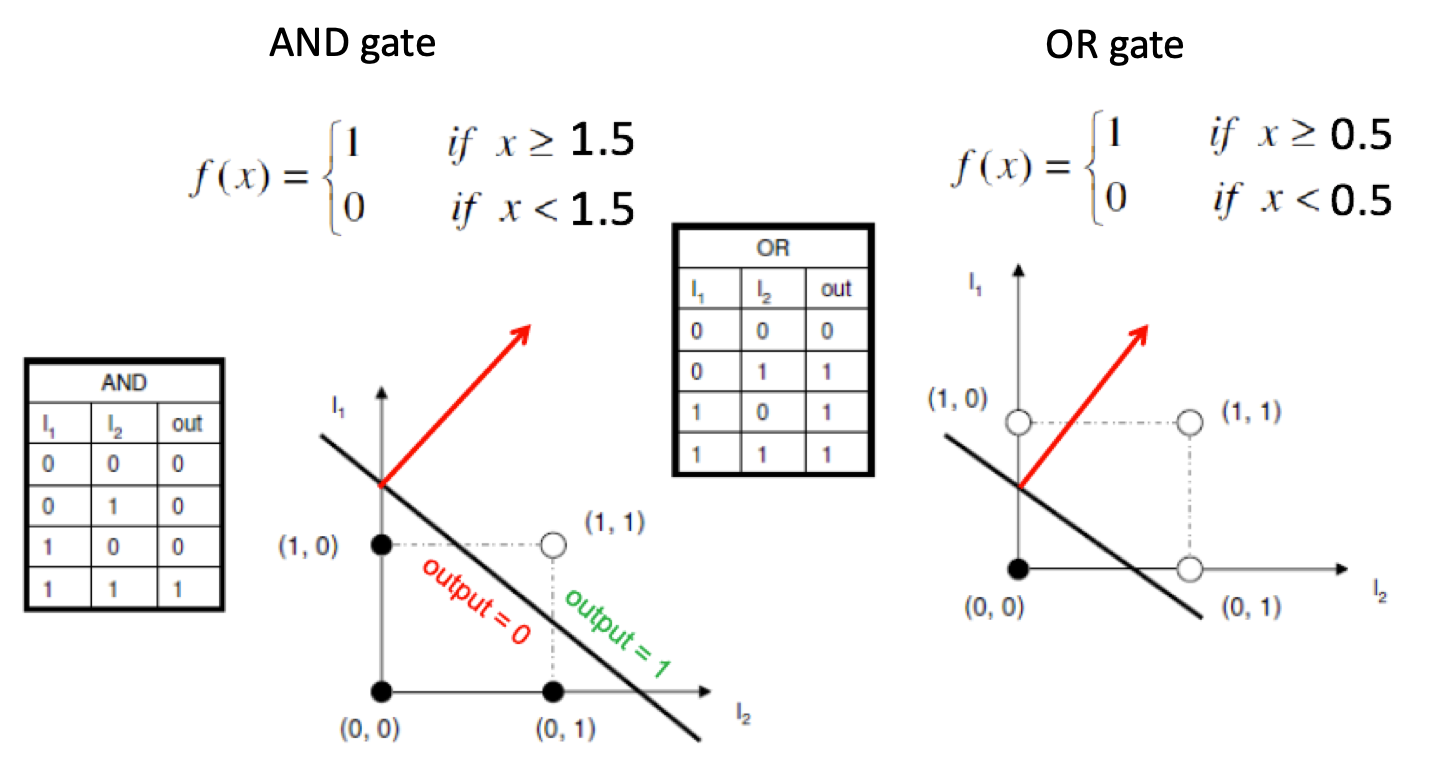

Perceptrons 63

Perceptron: Example 64

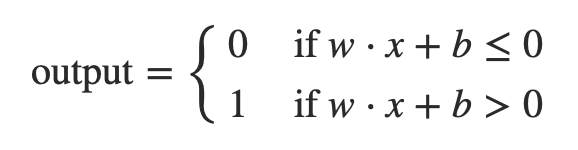

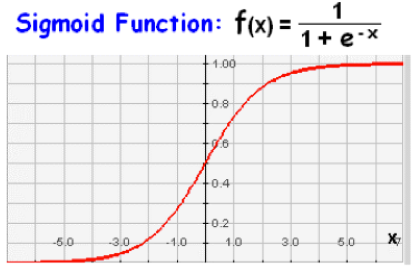

Perceptron: Rewriting the Activation Function 65

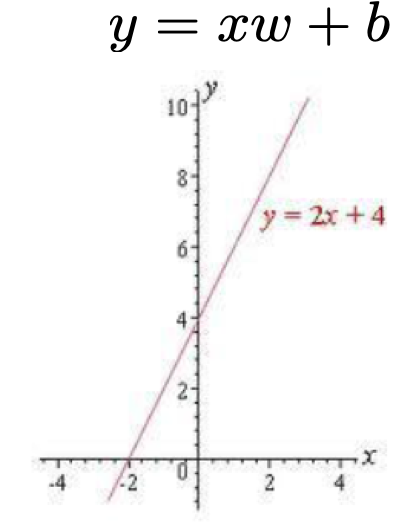

Perceptron: Visualisation of the Activation Function 65

Perceptron: Defining Weights and Bias 66

Perceptron: Expressiveness 67

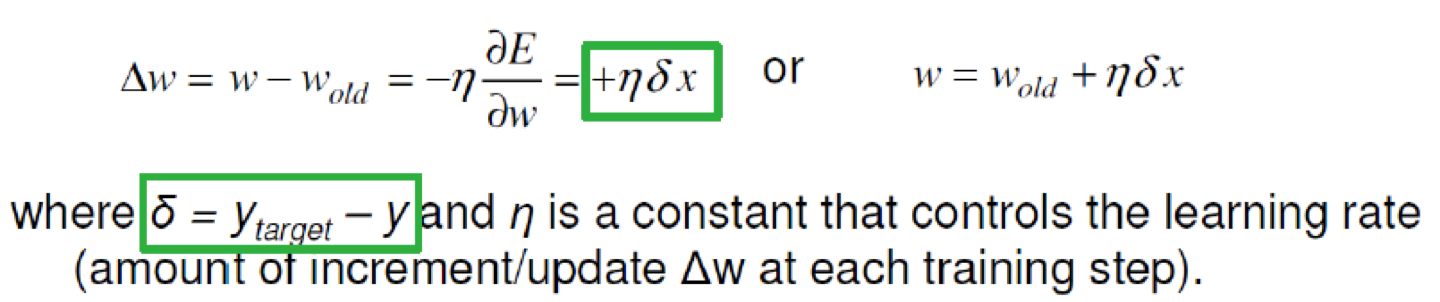

Perceptron: Training with the Delta-Rule (Widrow-Hoff

Rule) 68

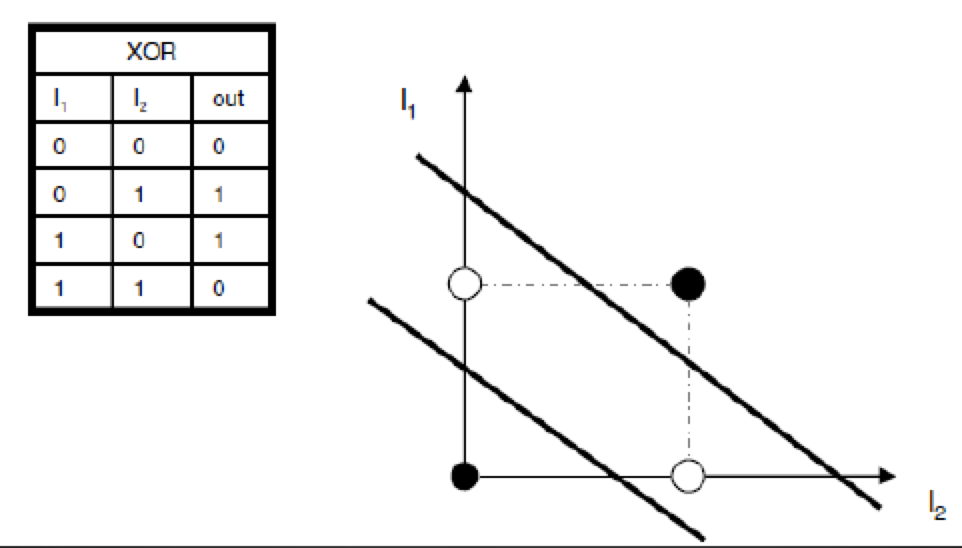

Perceptron: Limits 68

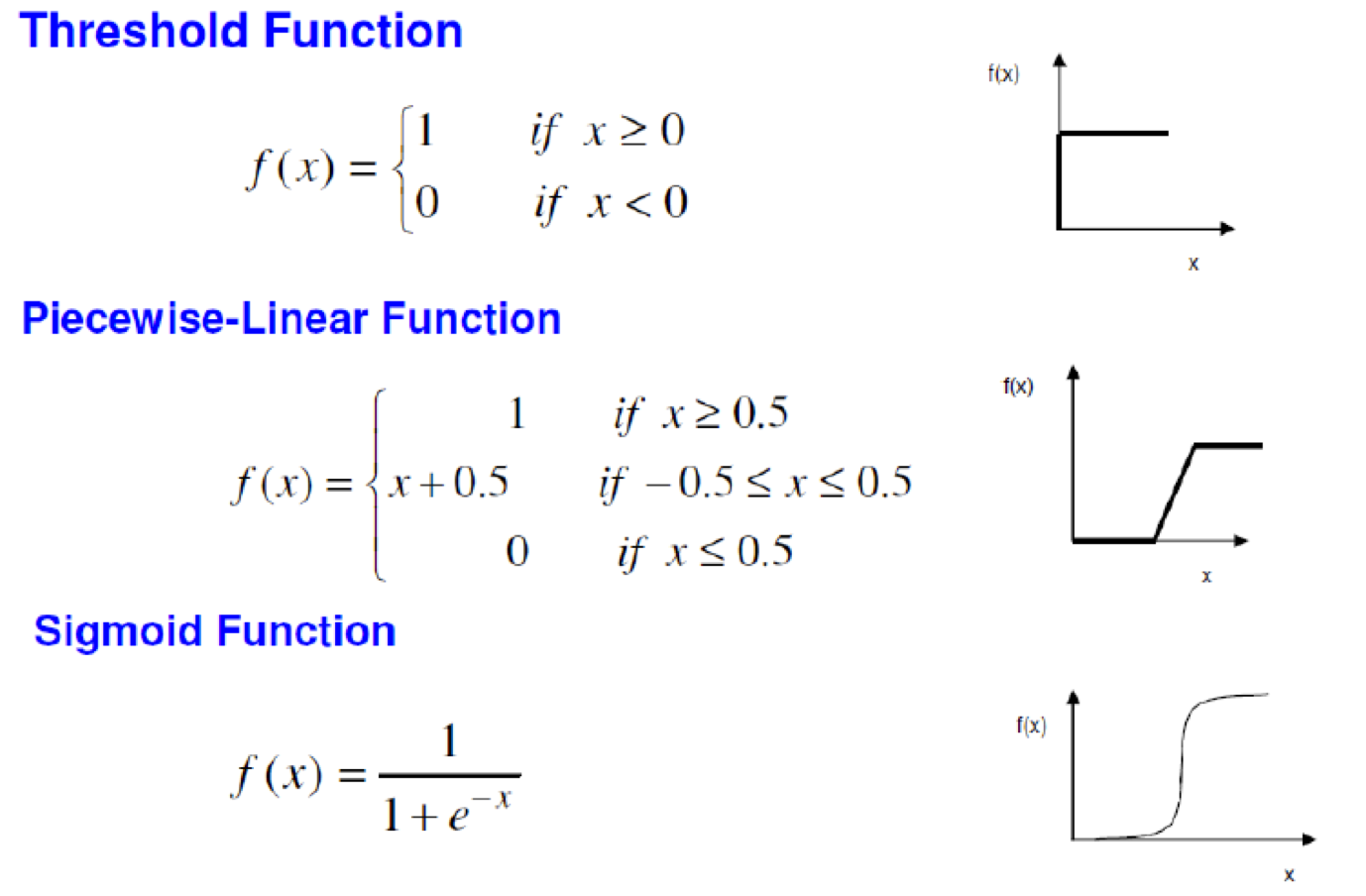

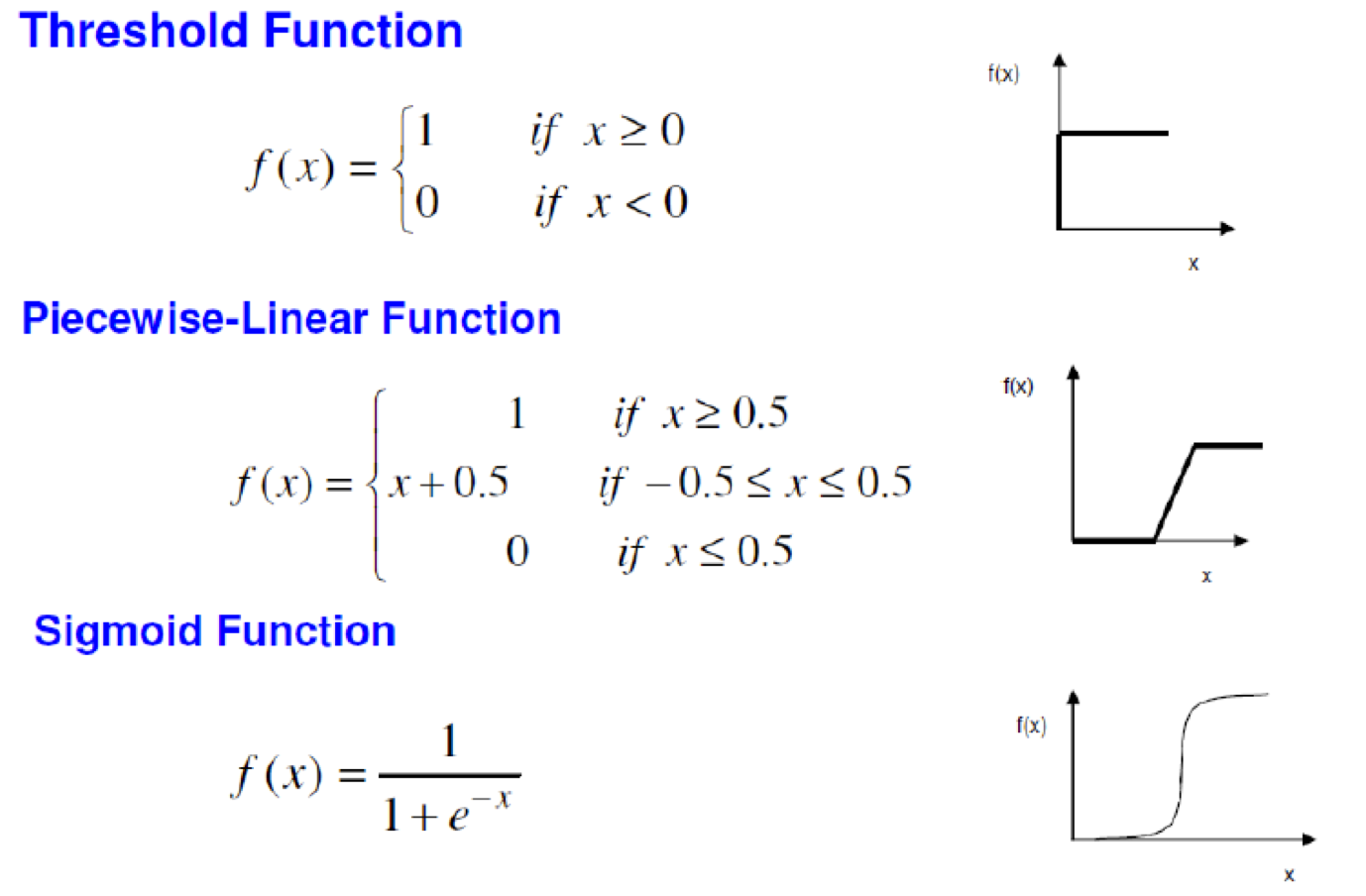

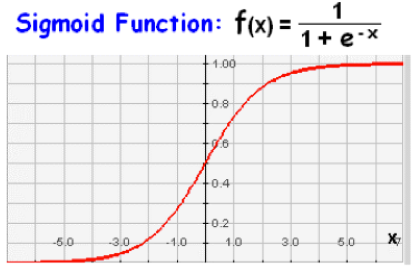

Types of neurons (activation functions) 69

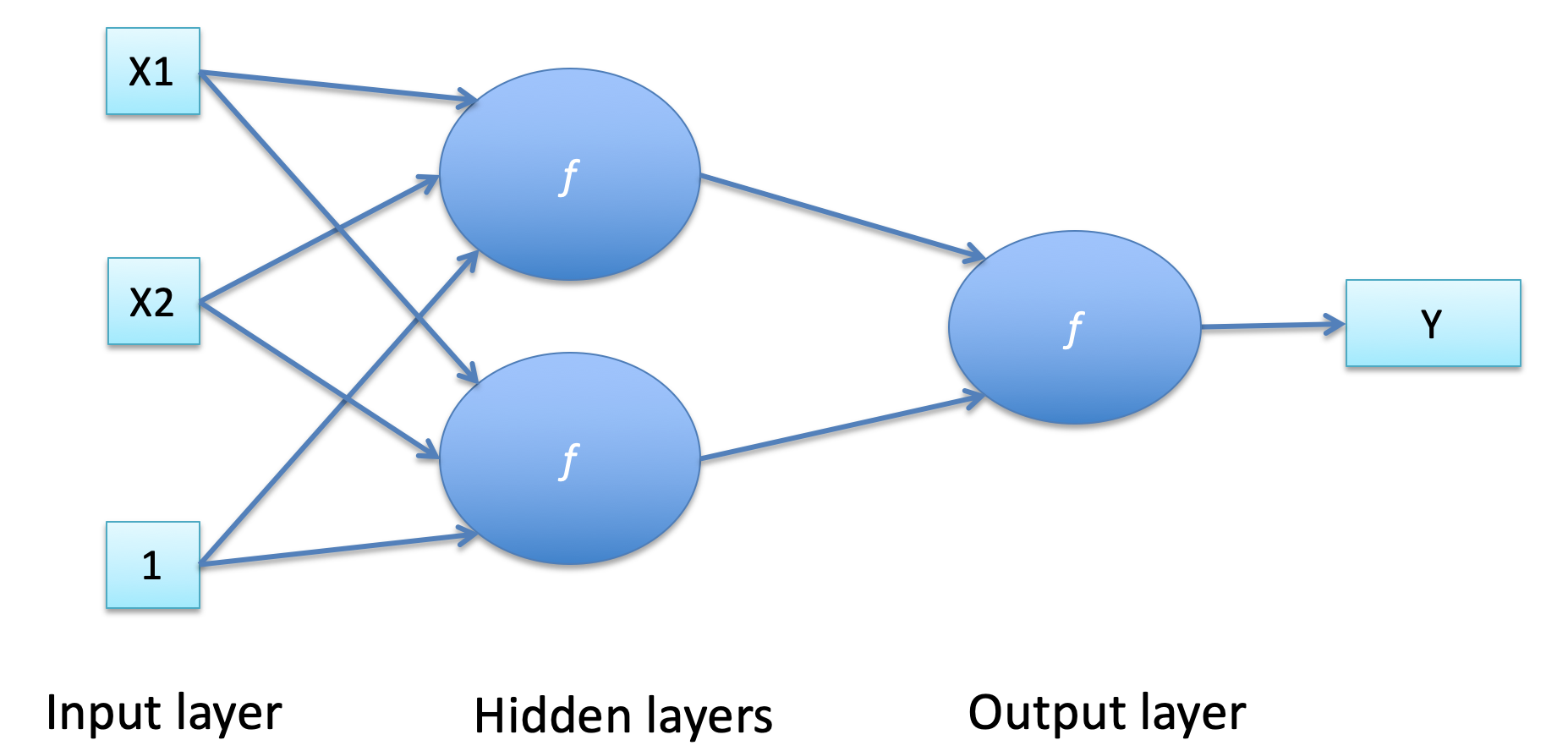

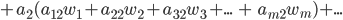

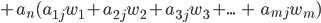

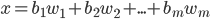

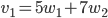

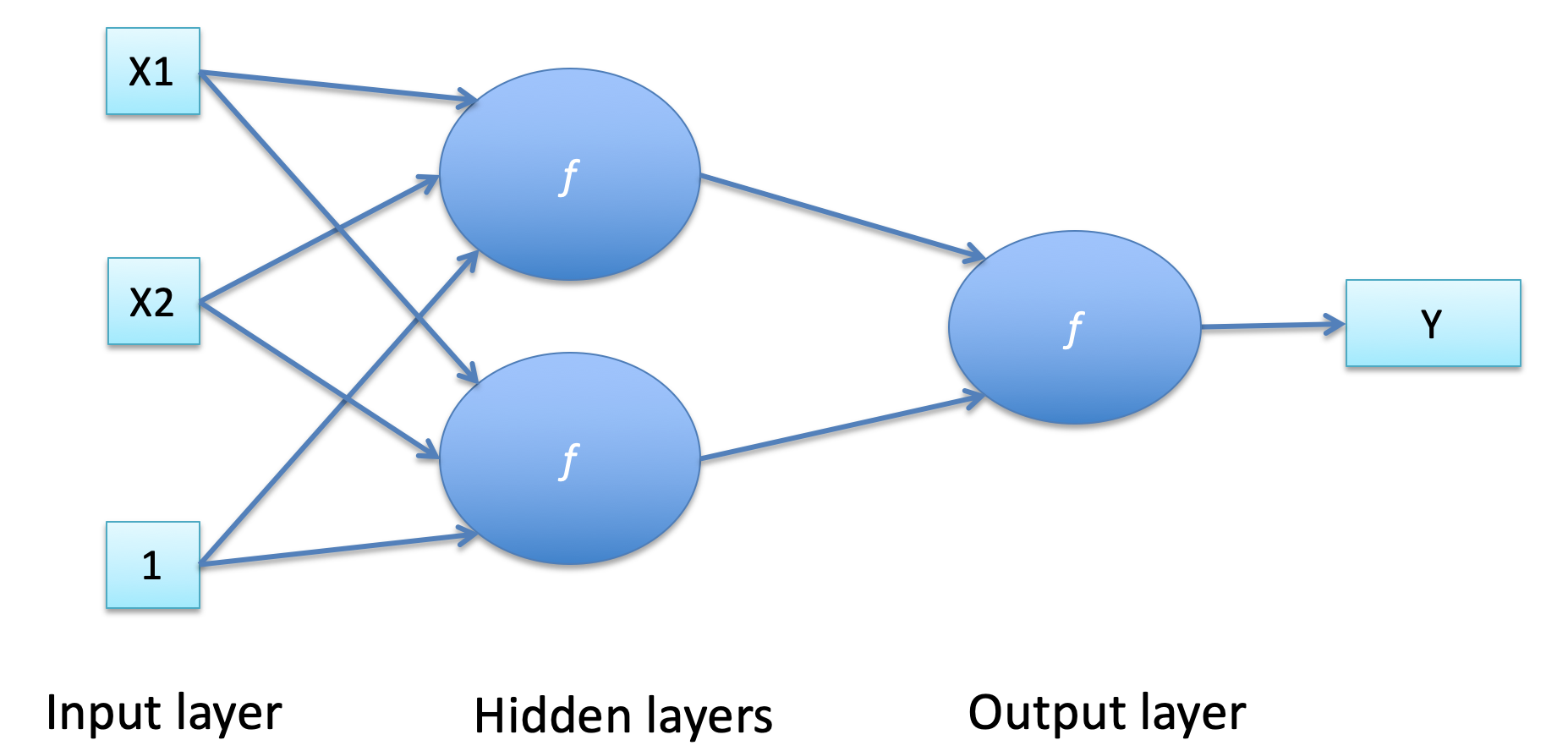

Multi-layered Neural Networks 70

Multi-layered Nets: Training 71

Further Issues of Neural Networks 71

Deep Learning 71

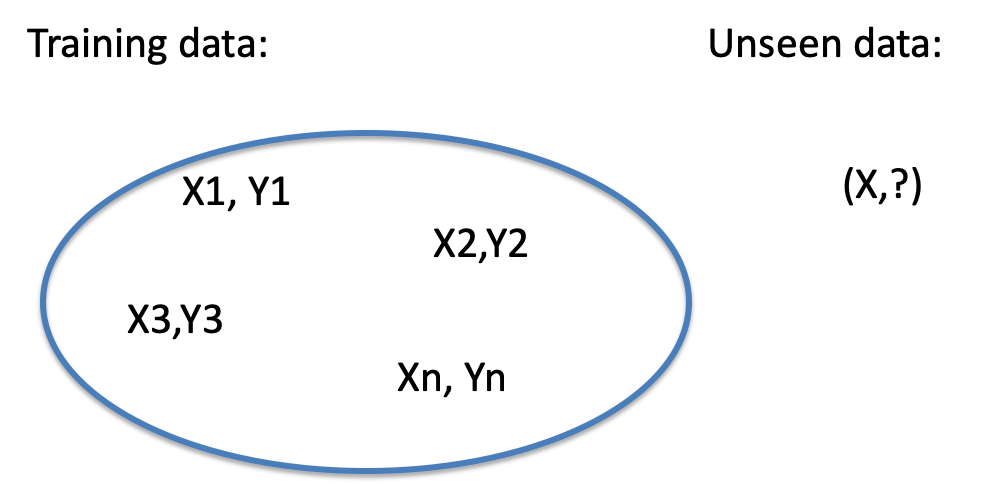

Supervised vs Unsupervised Learning 72

Offline vs. Online Learning 72

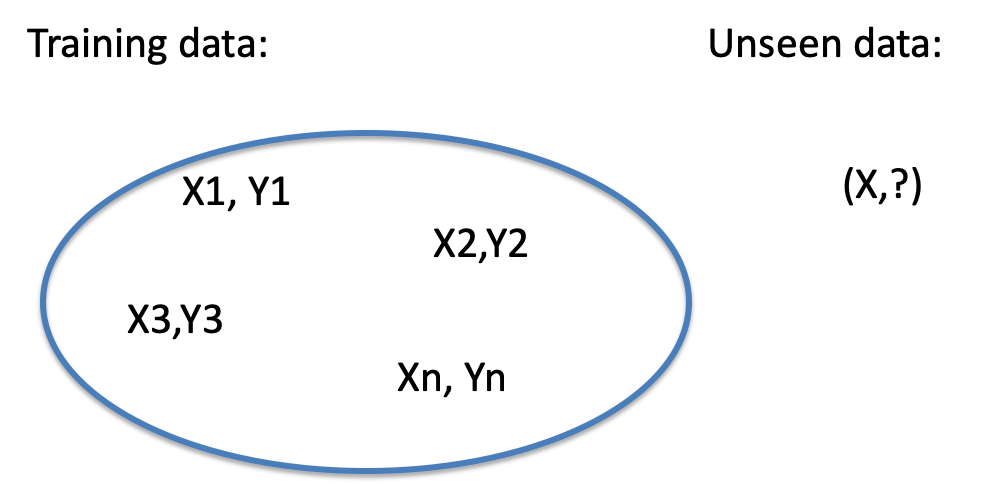

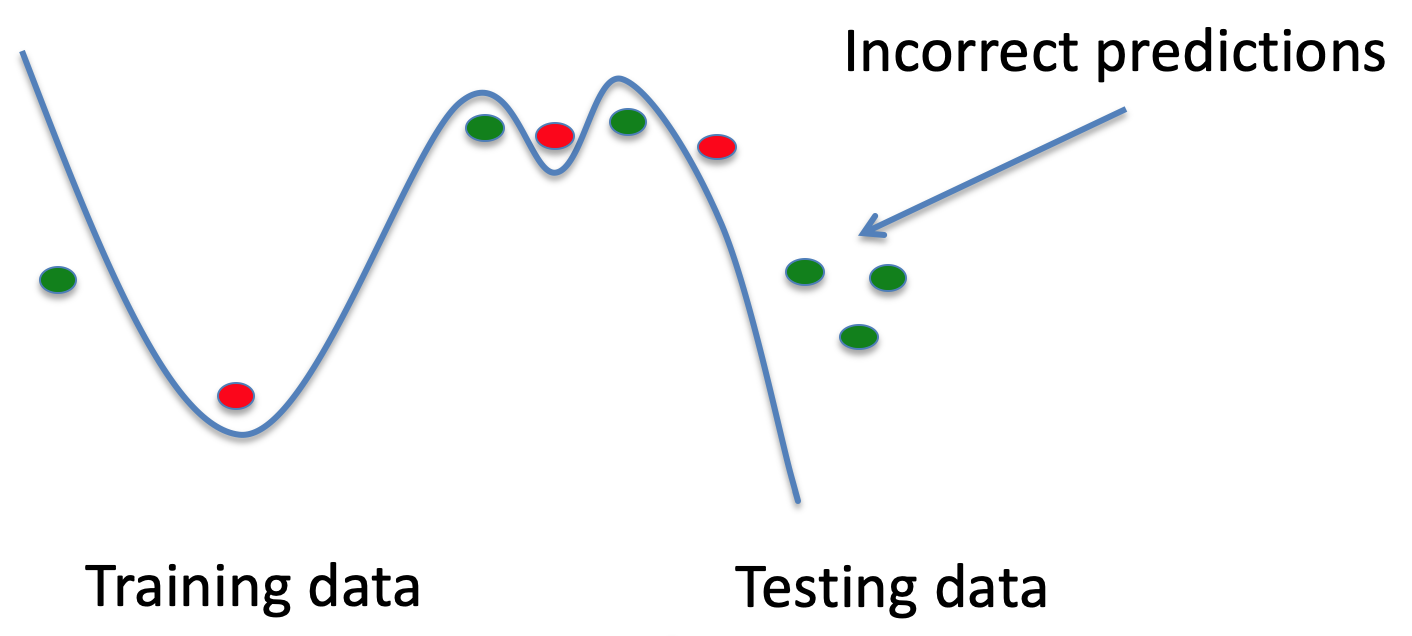

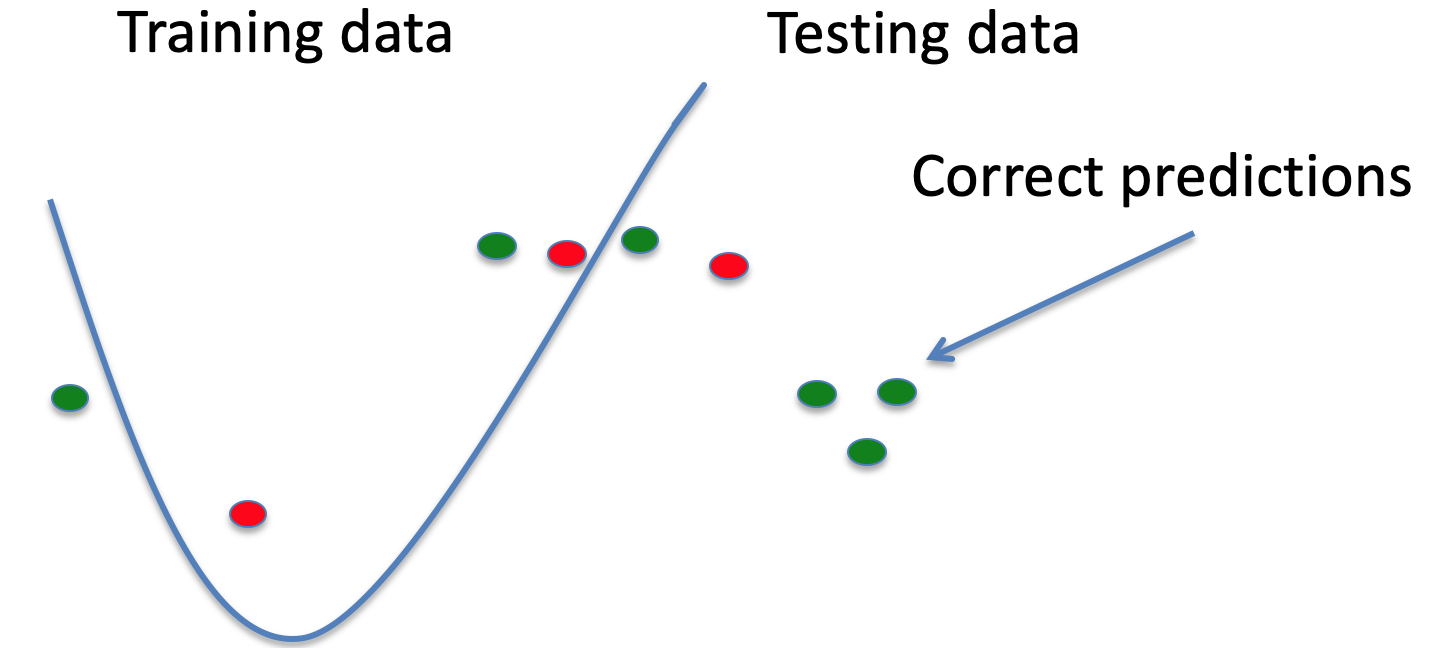

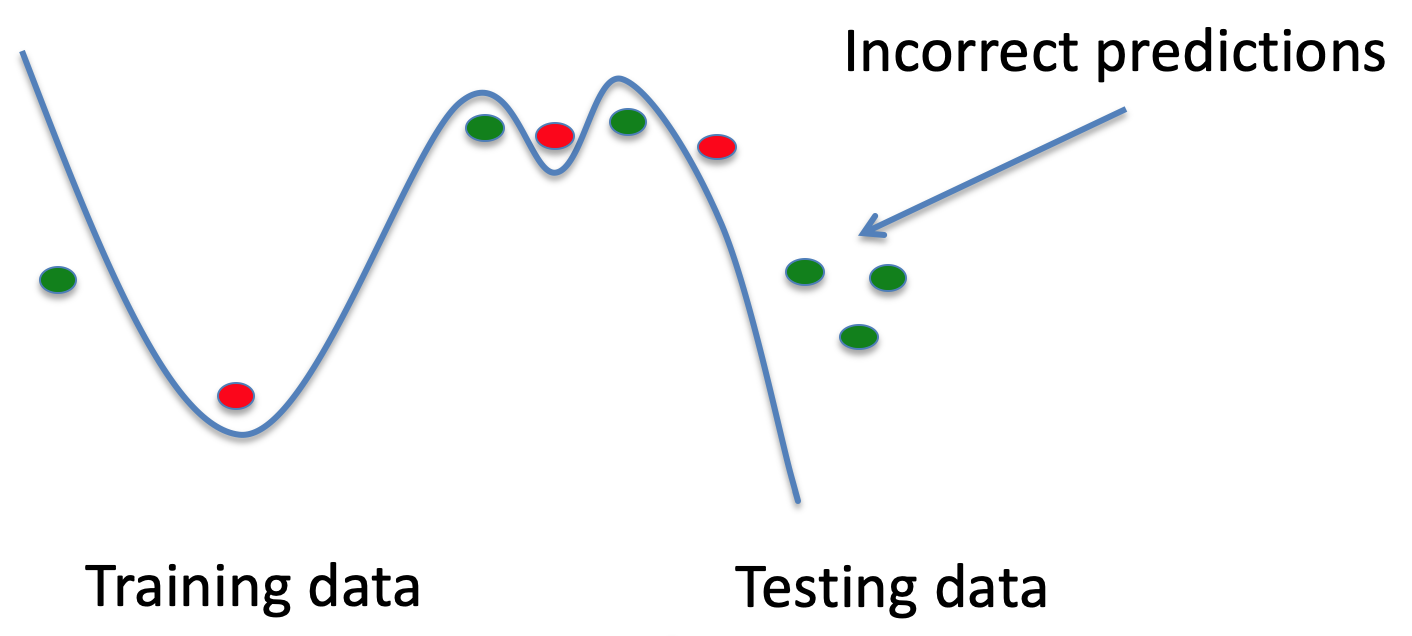

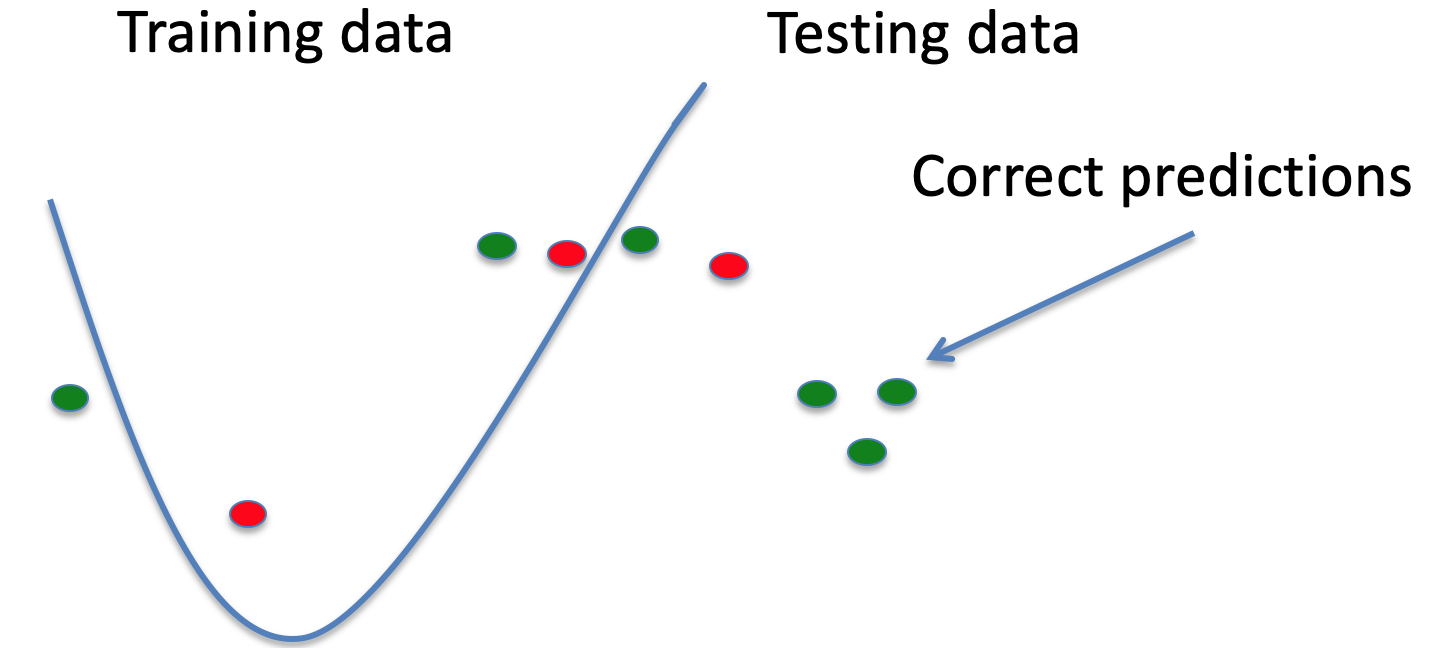

Generalisation & Overfitting 73

Training vs Testing 74

Reasoning 74

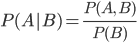

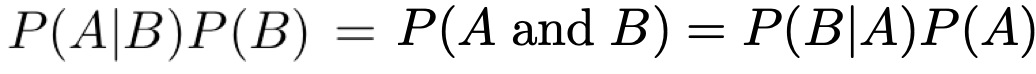

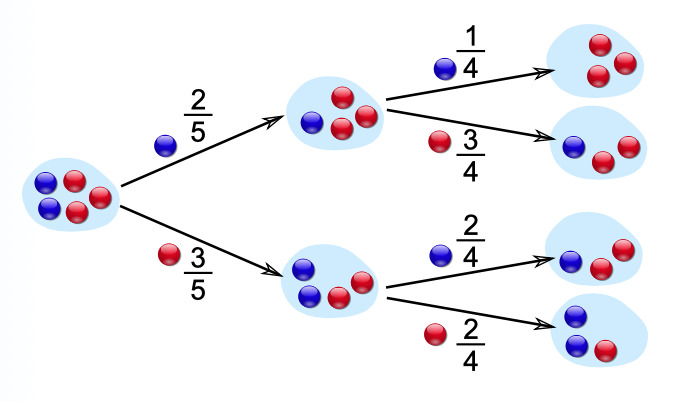

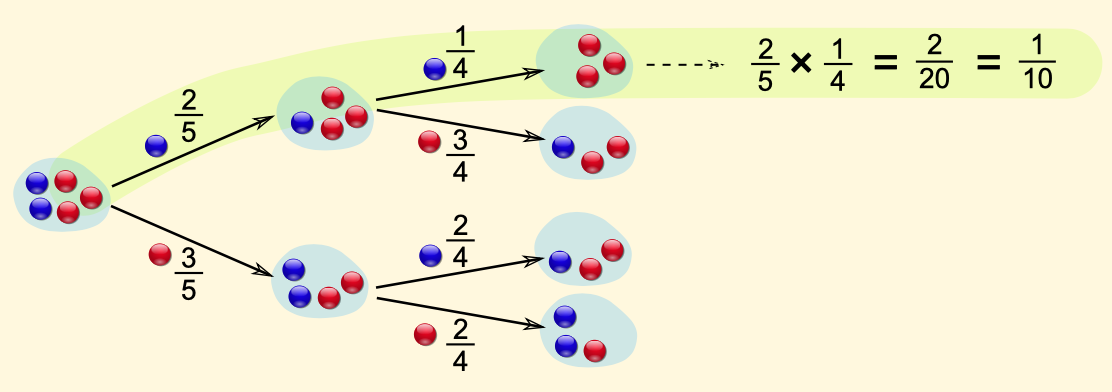

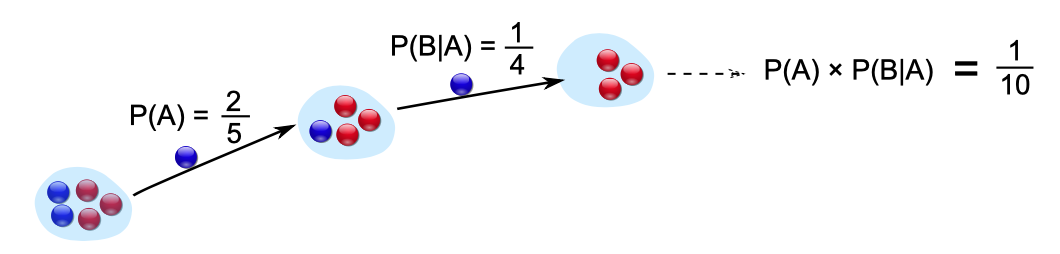

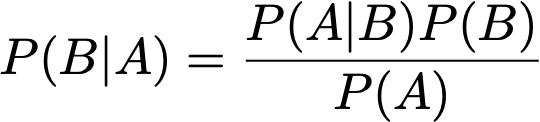

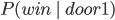

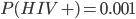

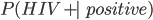

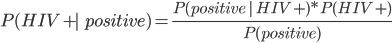

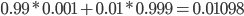

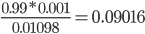

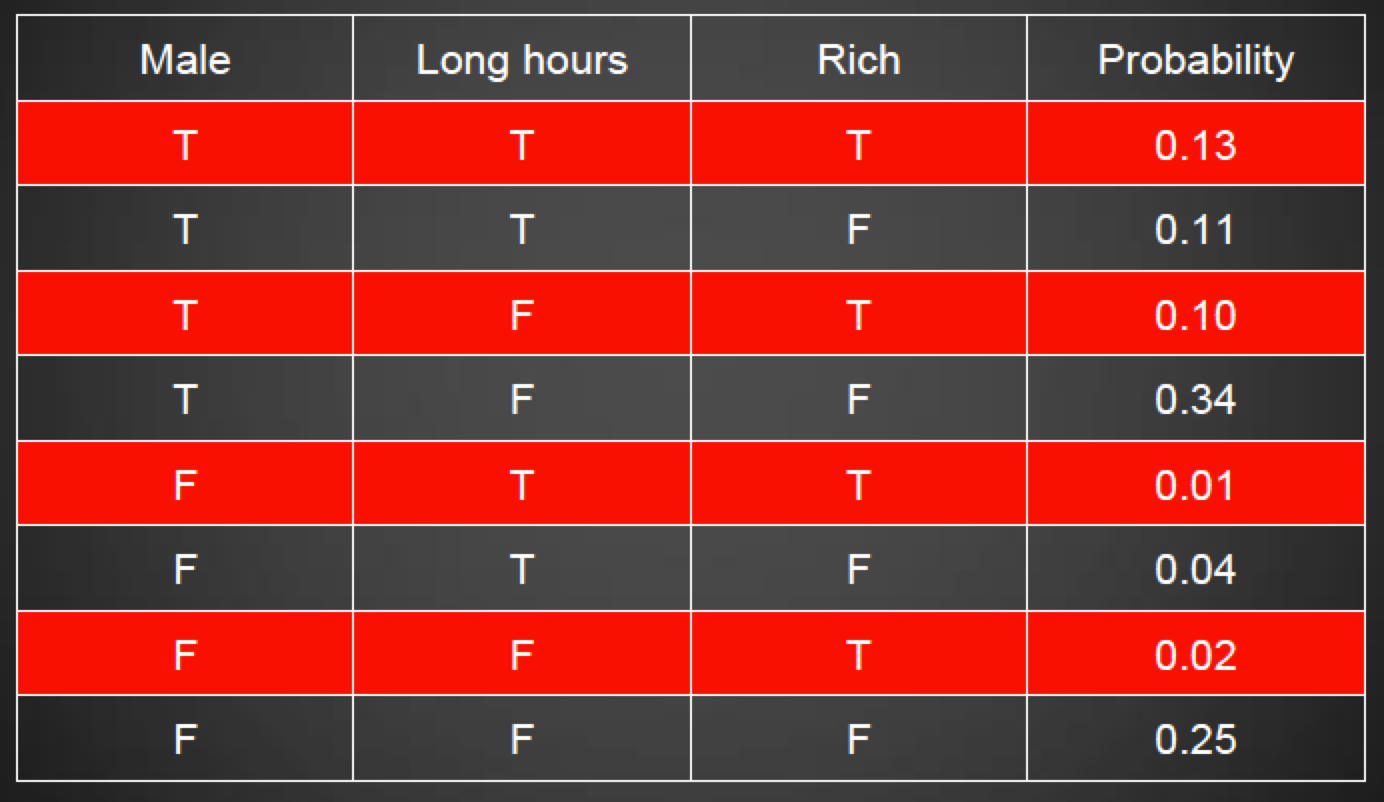

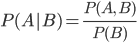

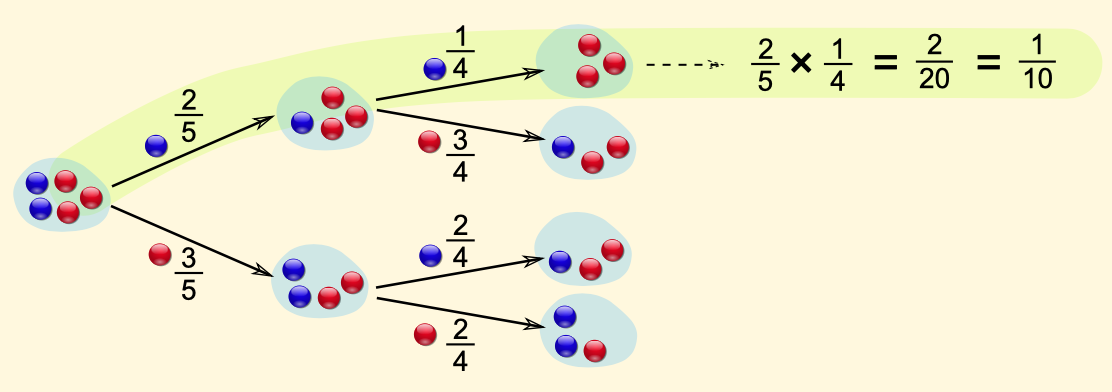

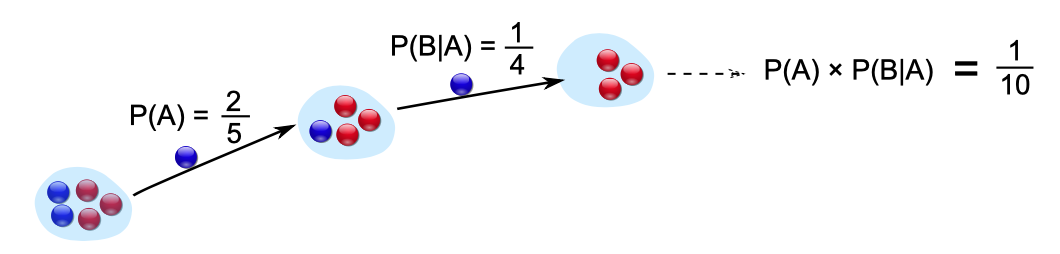

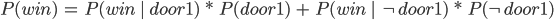

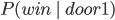

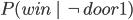

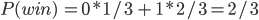

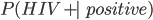

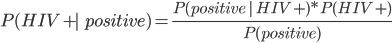

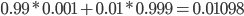

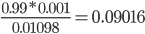

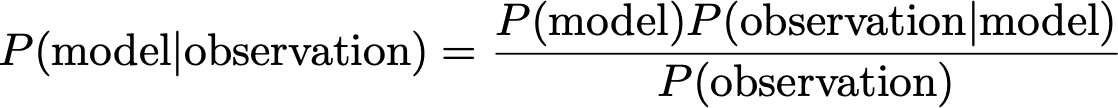

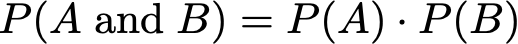

Bayes Theorem 74

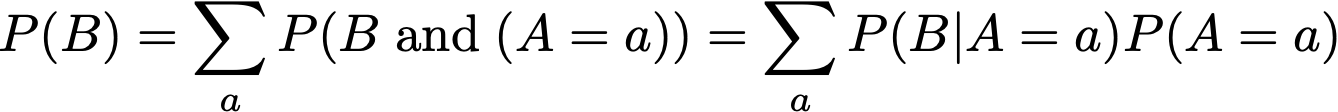

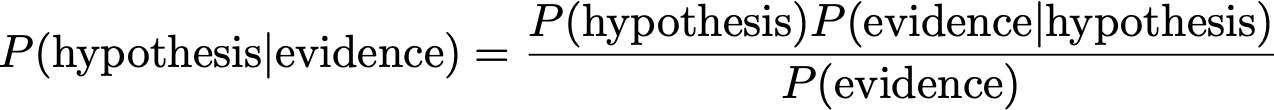

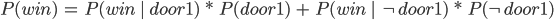

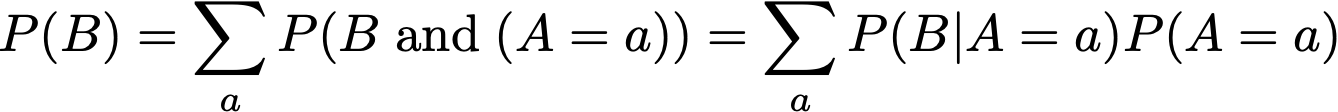

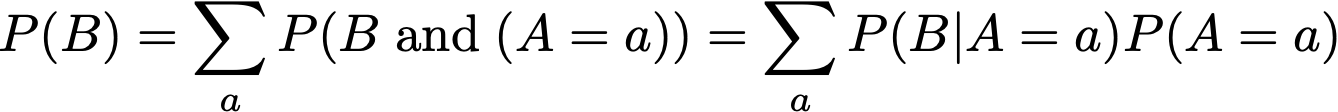

Total Probability 75

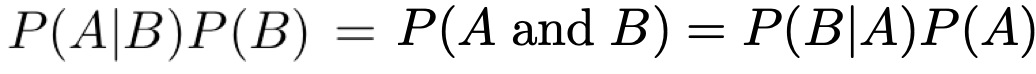

Conditional Probability 75

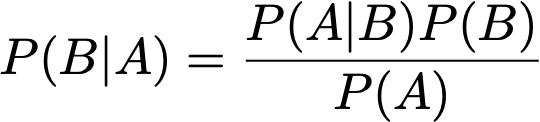

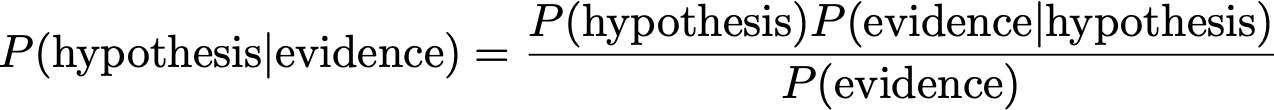

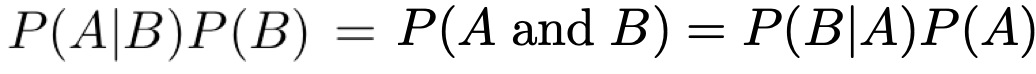

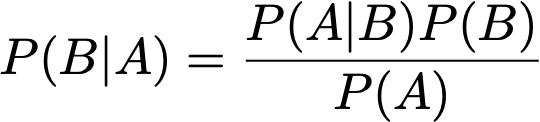

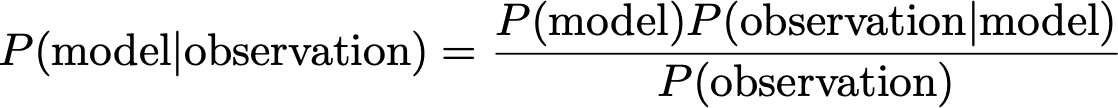

Bayes’ Rule 76

Example: Monty Hall Problem 76

Example: HIV test problem 77

Bayesian Belief Update 78

Complex Knowledge Representation 79

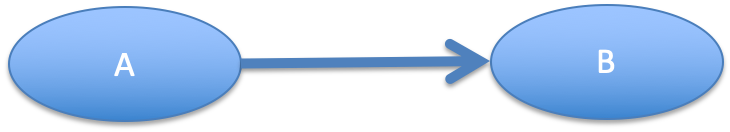

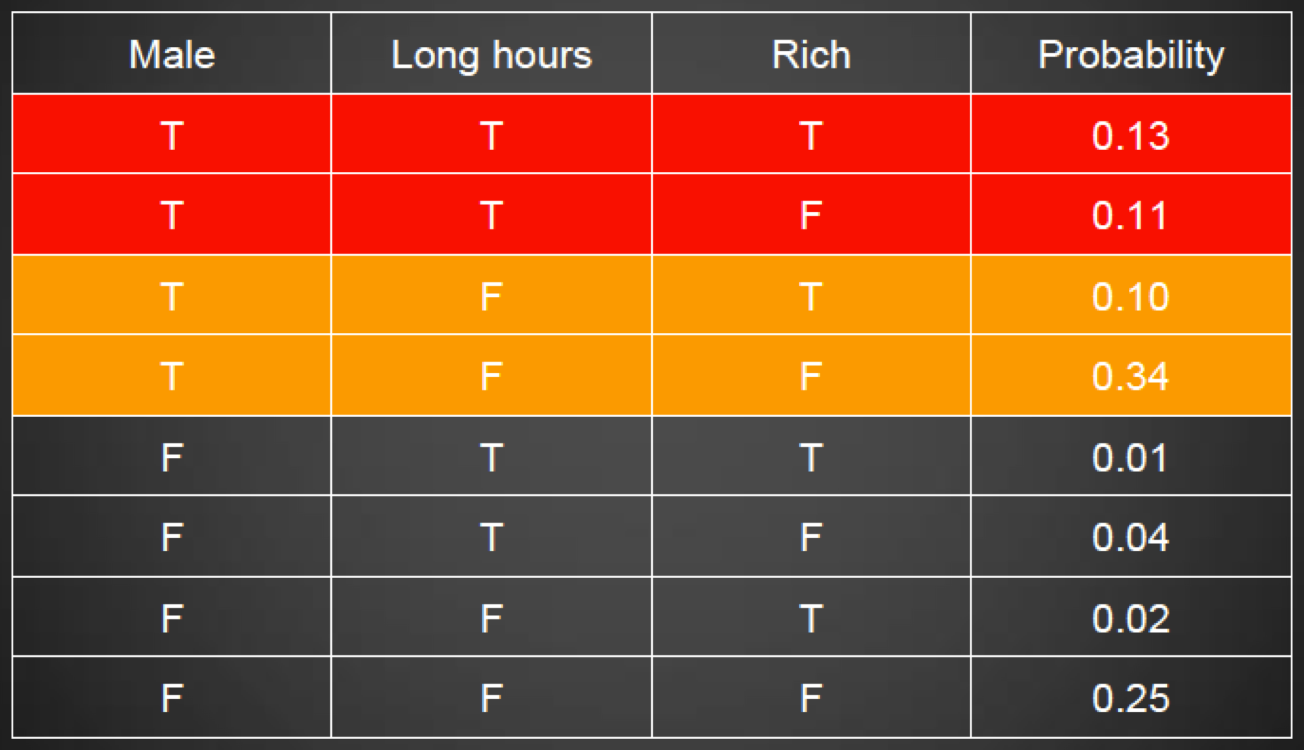

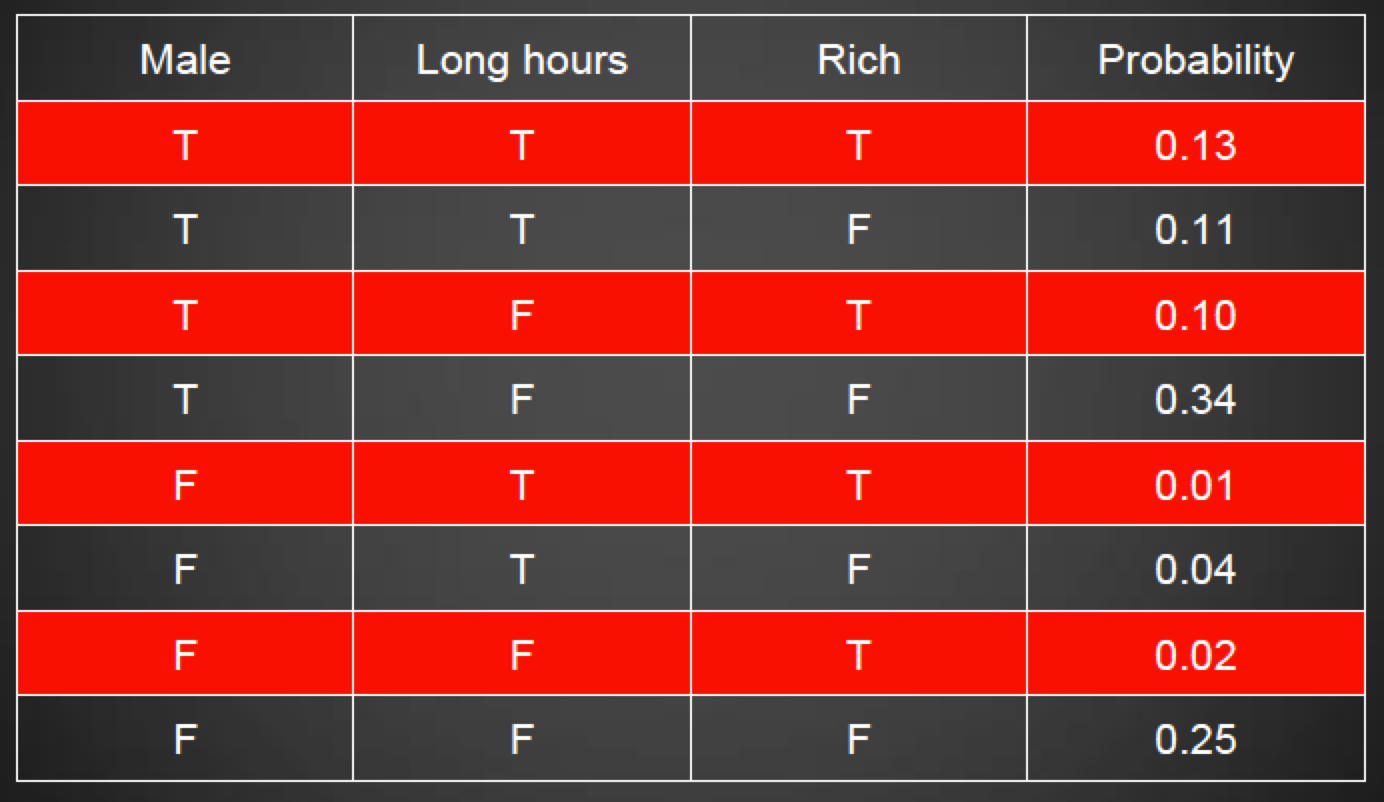

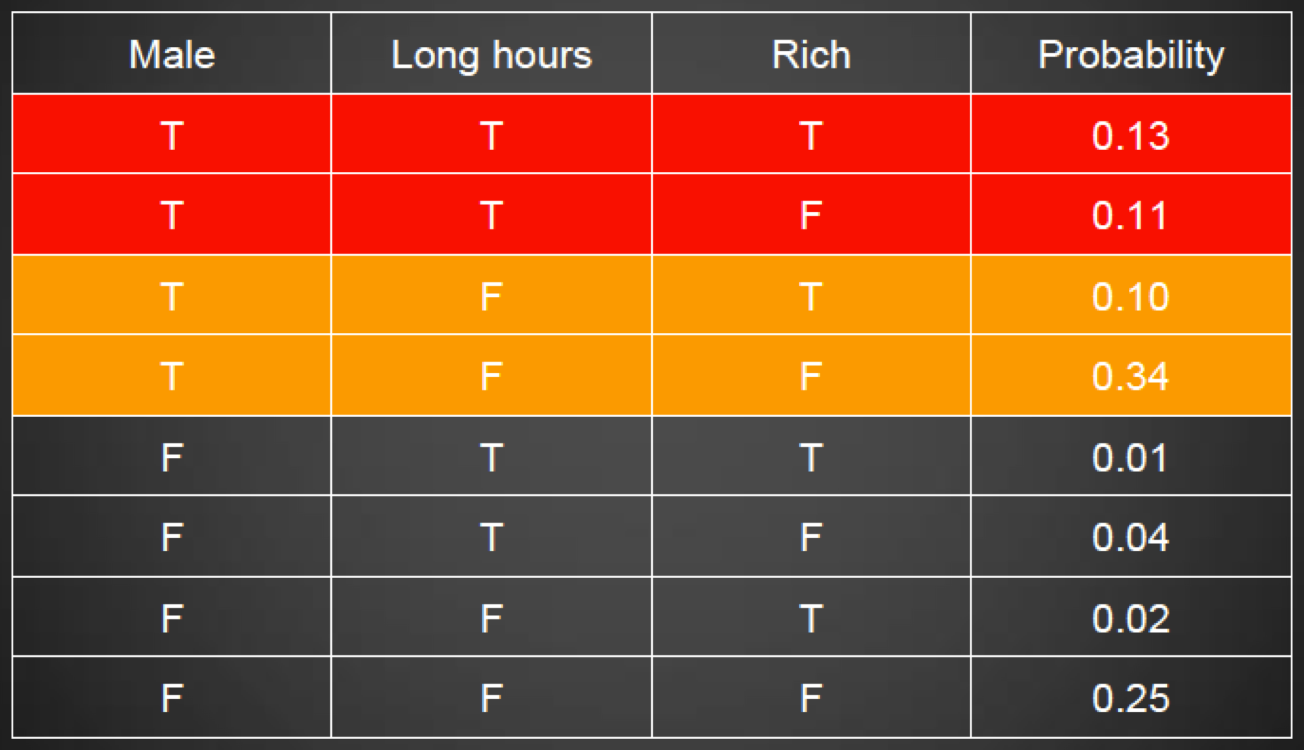

Joint Distribution 79

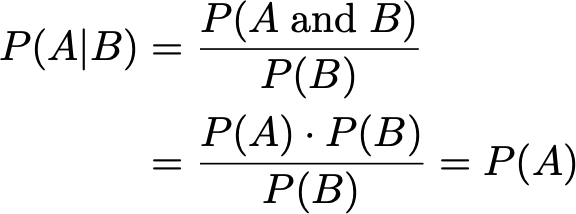

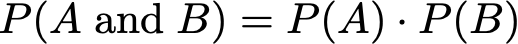

Independence 81

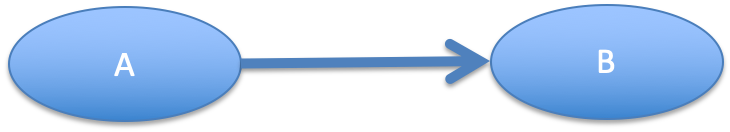

Bayesian Networks 81

Properties of Bayesian Networks 82

Pros and Cons of Bayesian Networks 83

Decision Making 83

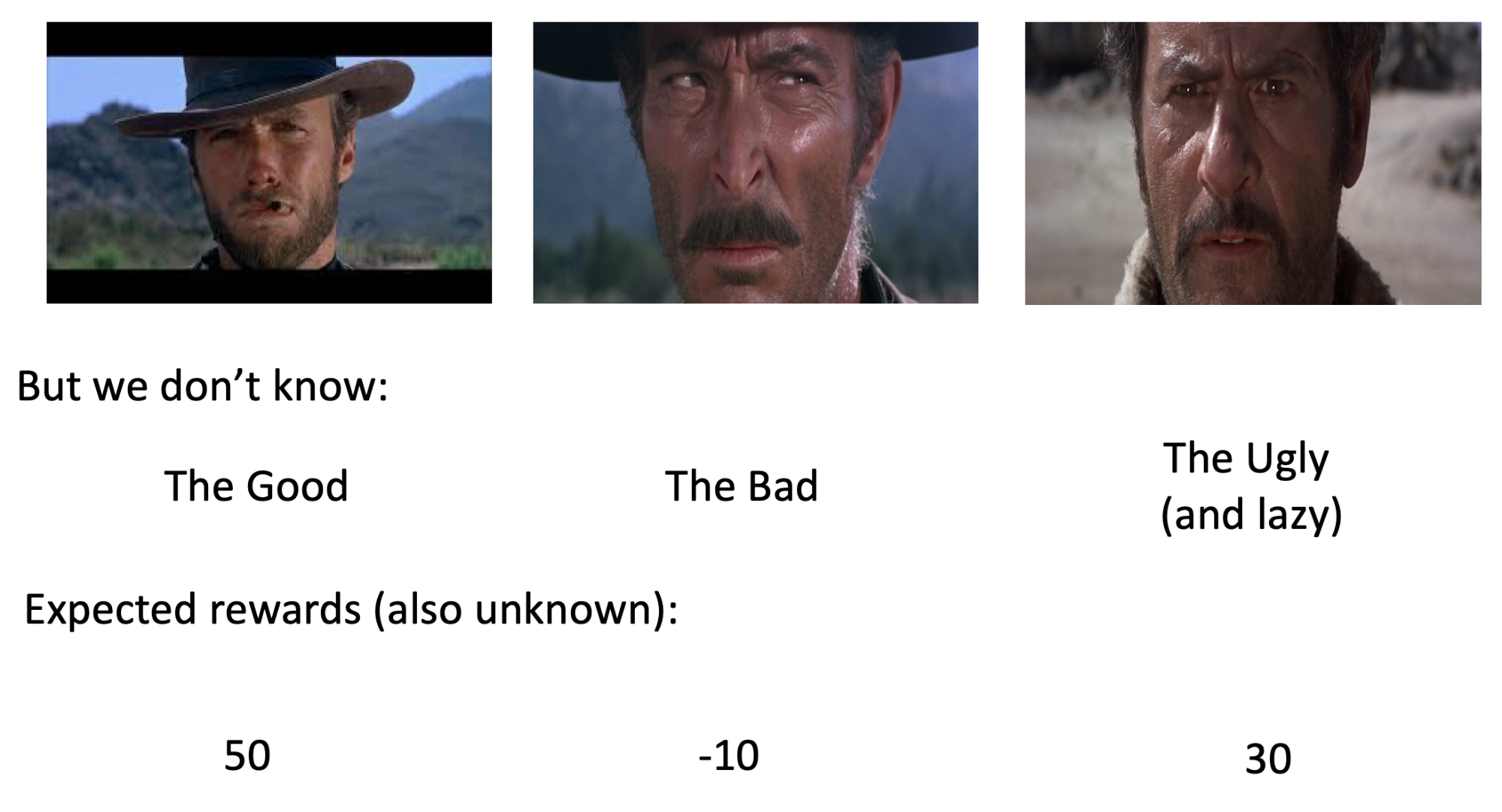

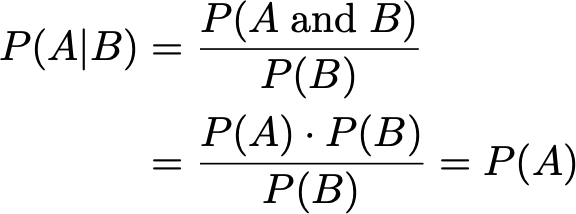

Bandit Theory 83

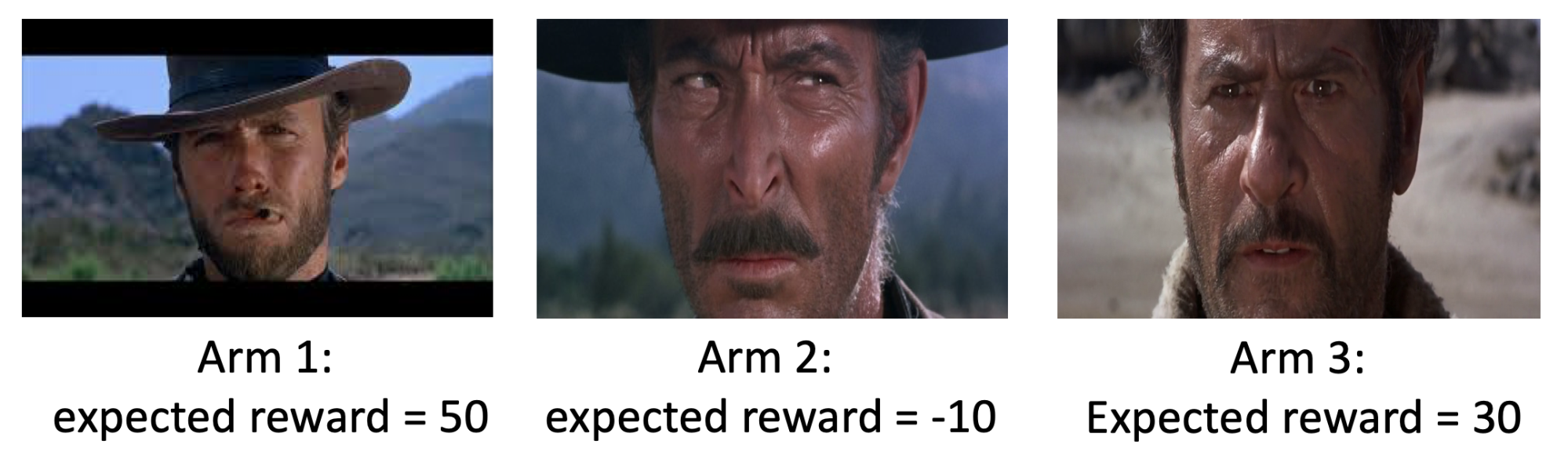

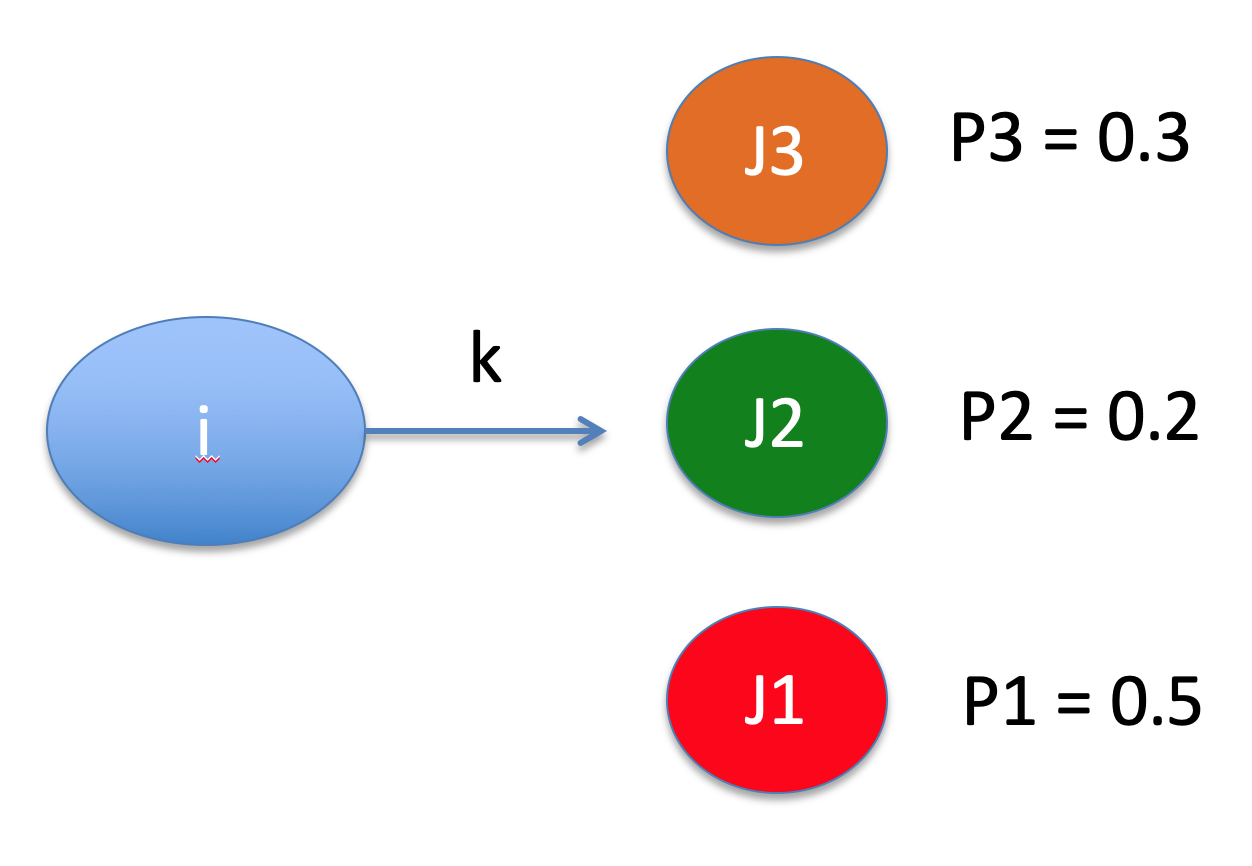

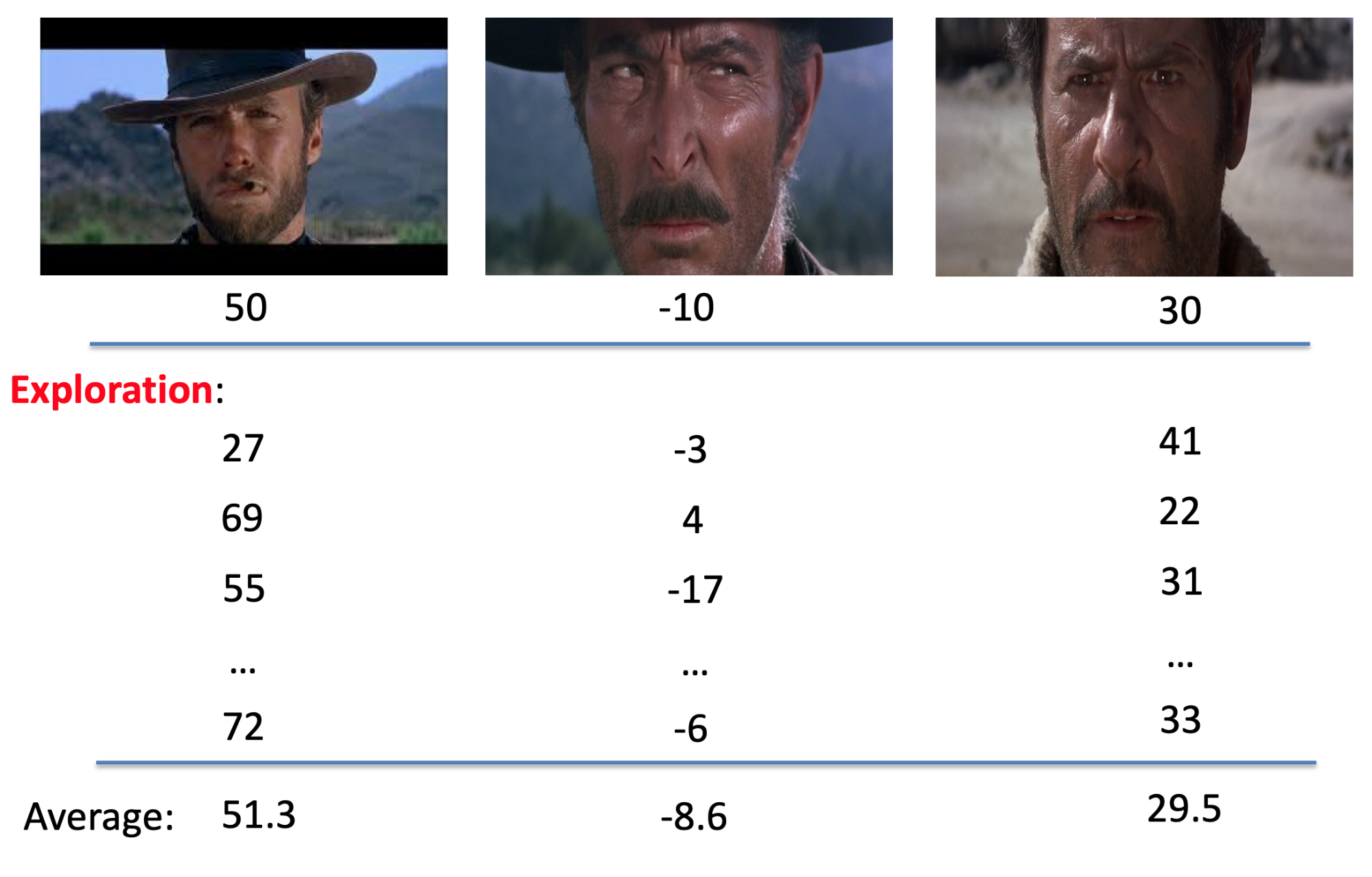

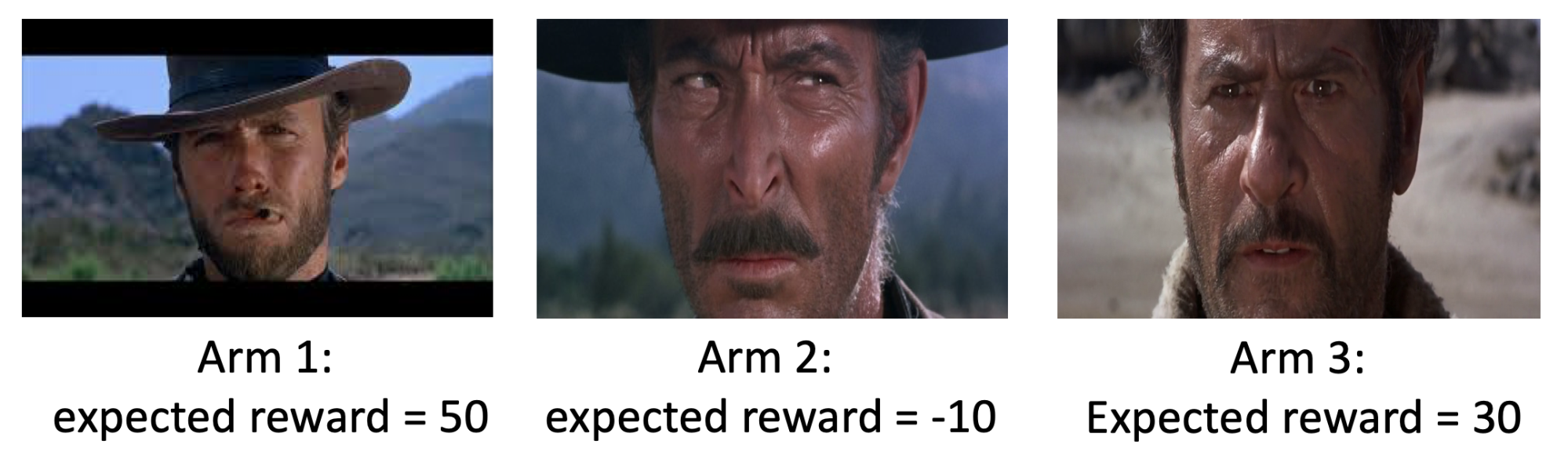

Multi-armed Bandit (MAB) Model 85

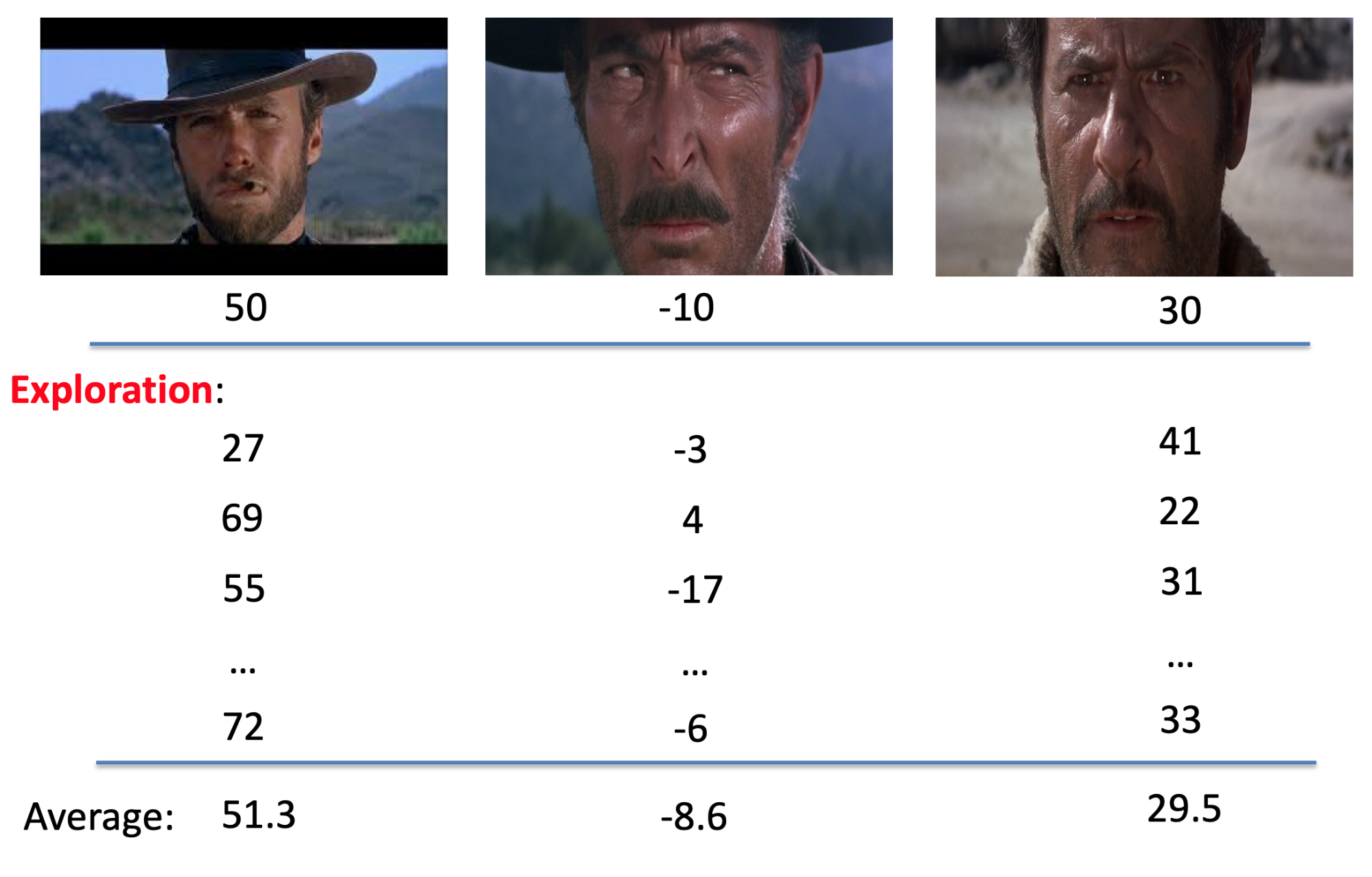

MAB: Epsilon-first Approach 85

MAB: Epsilon-greedy Approach 86

MAB: Epsilon-first vs. Epsilon-greedy 86

Other Algorithms 86

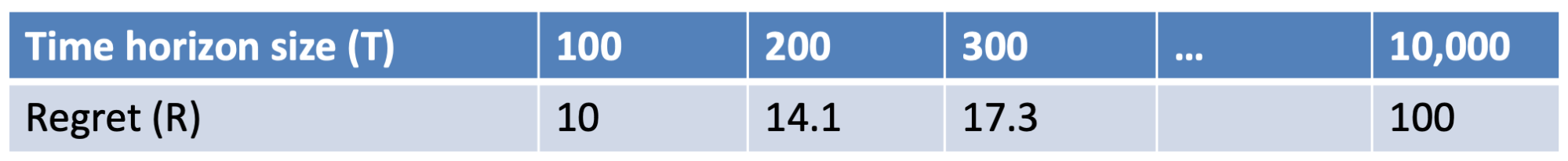

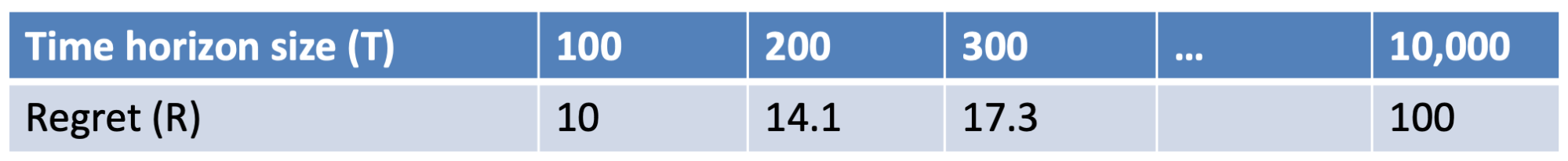

Performance of Bandit Algorithms and Regret 86

Extensions of the Multi-armed Bandit 88

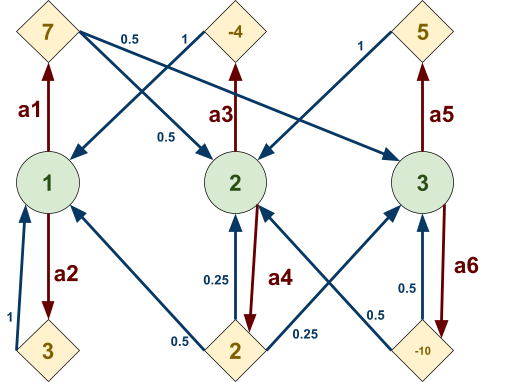

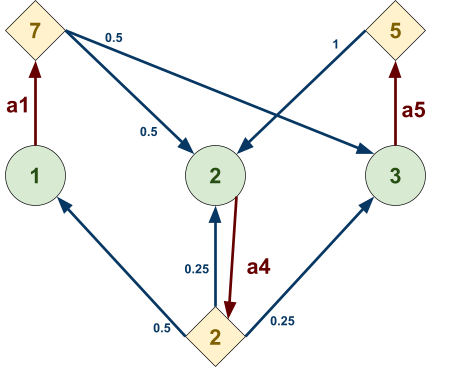

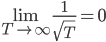

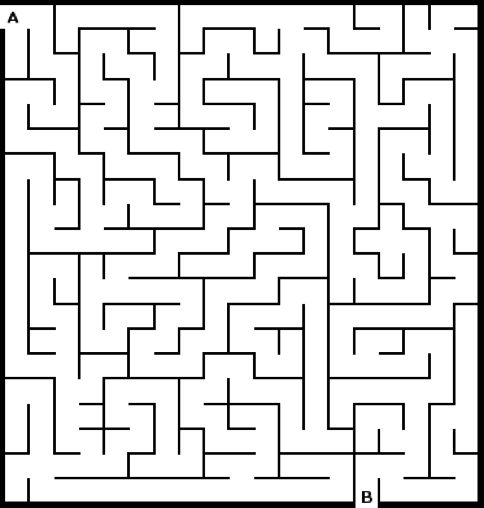

Reinforcement Learning & Markov Decision Process 88

Reinforcement Learning 90

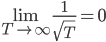

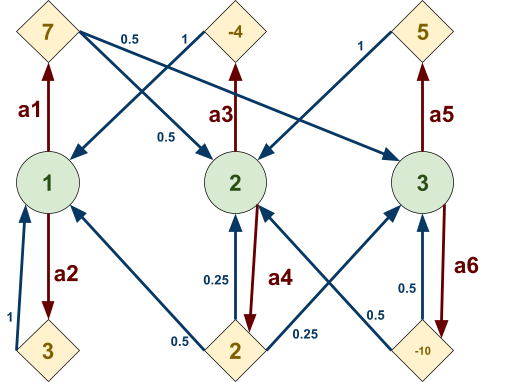

States, Actions and Rewards 91

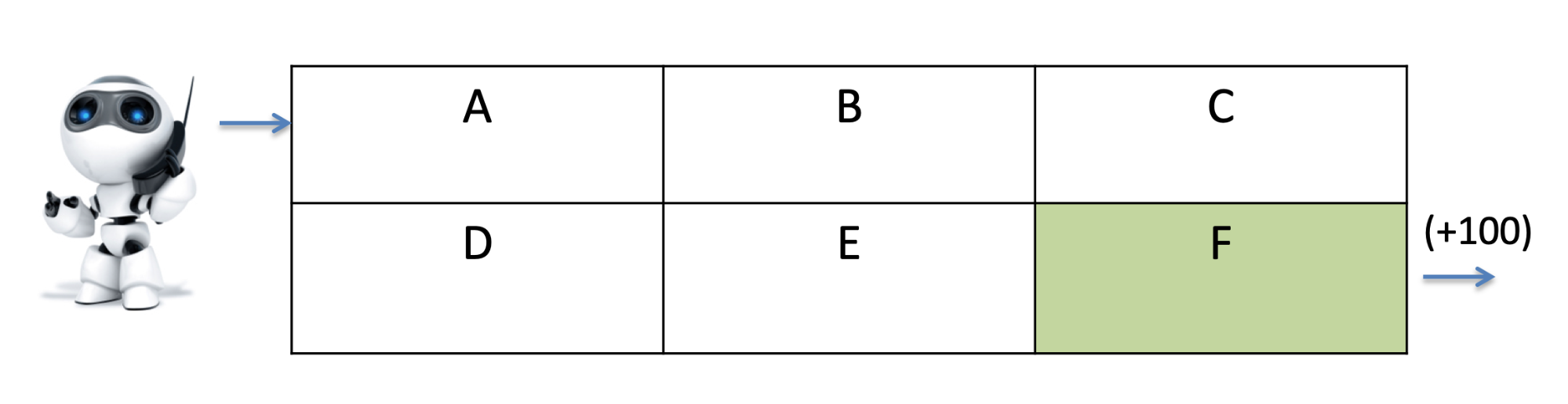

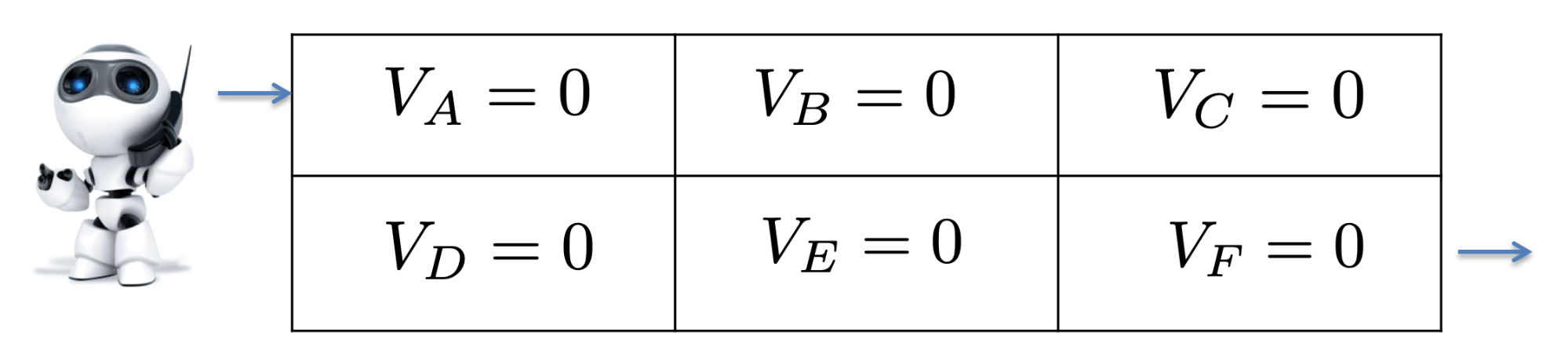

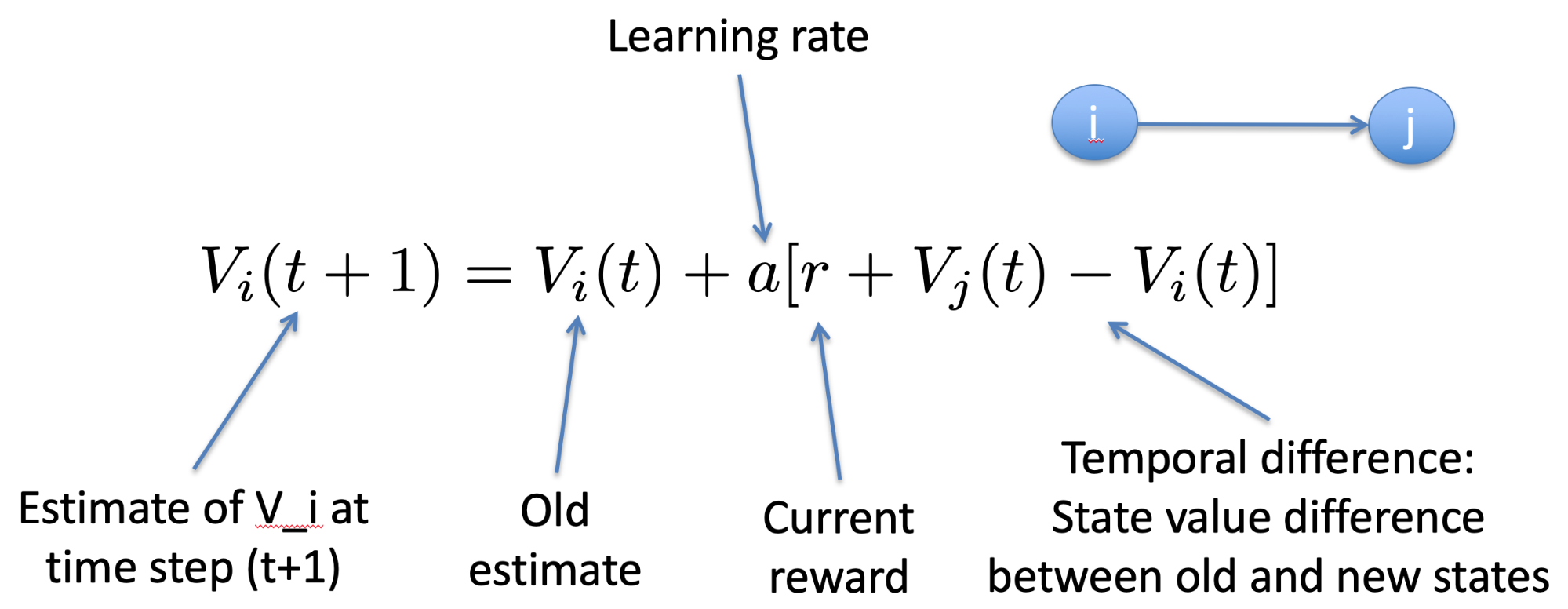

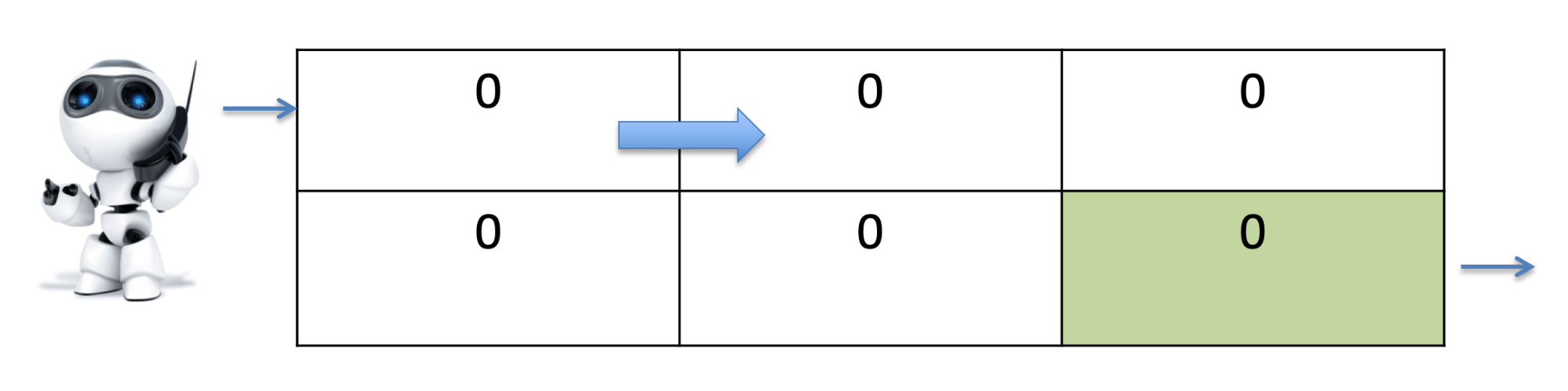

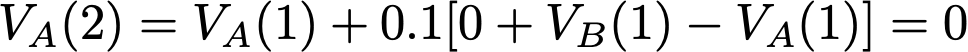

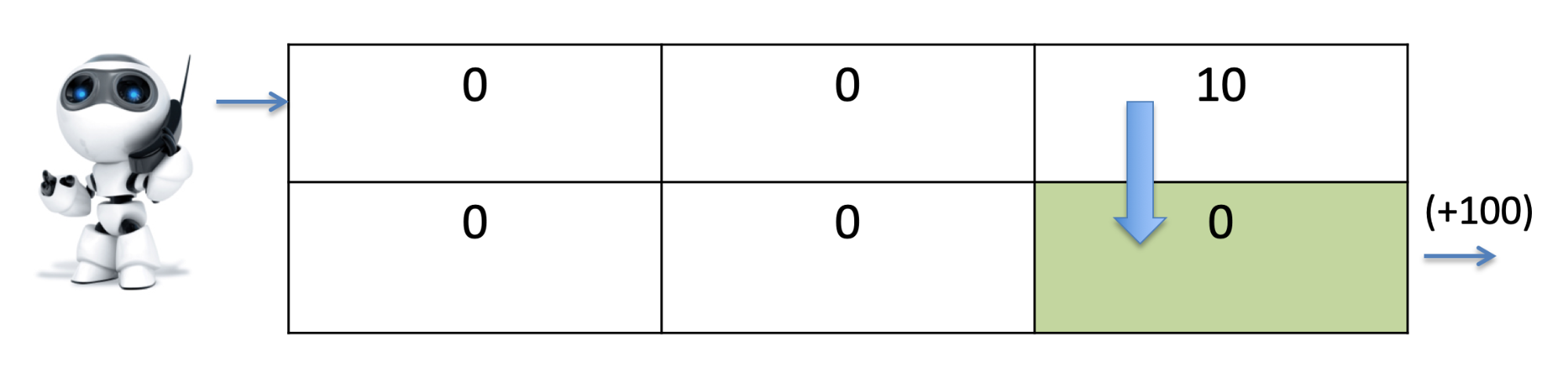

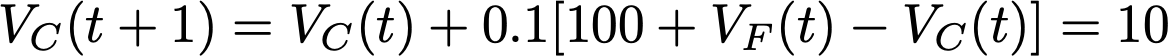

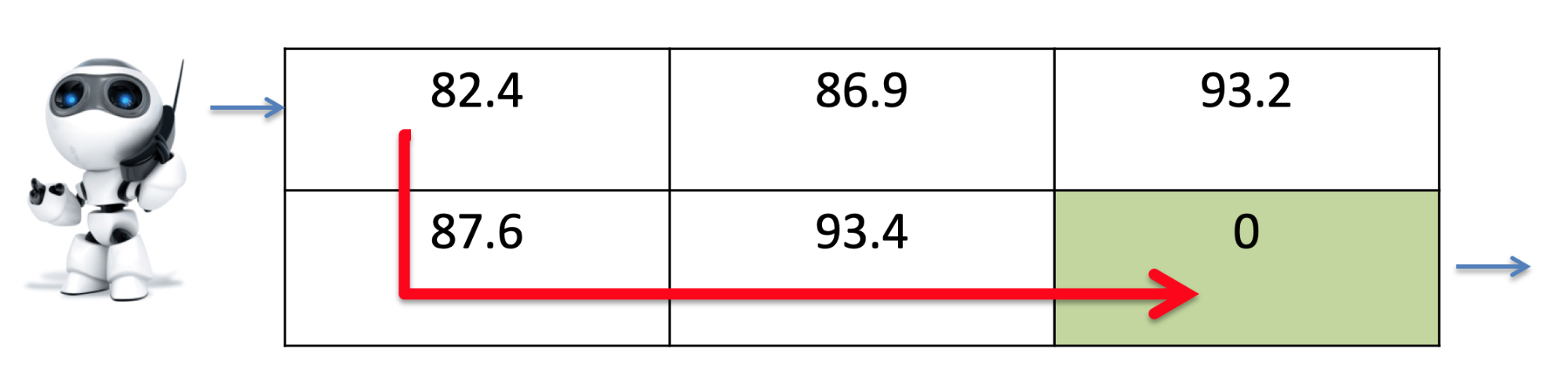

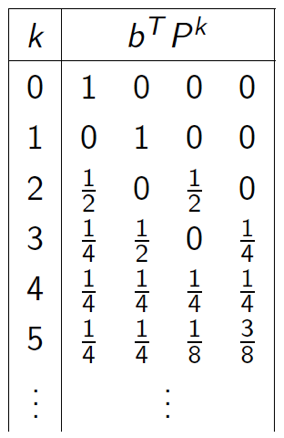

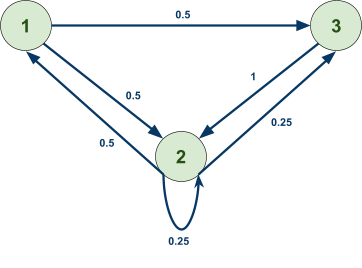

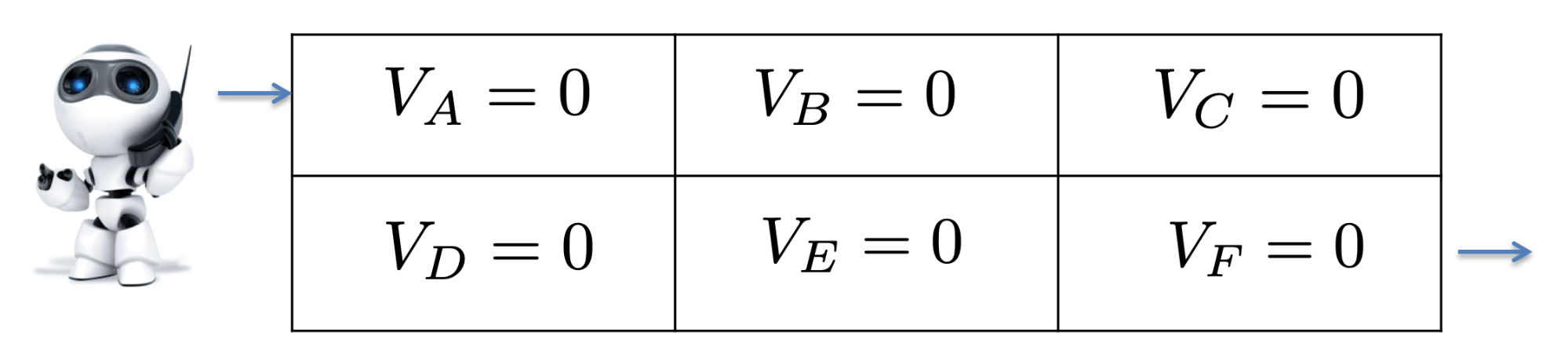

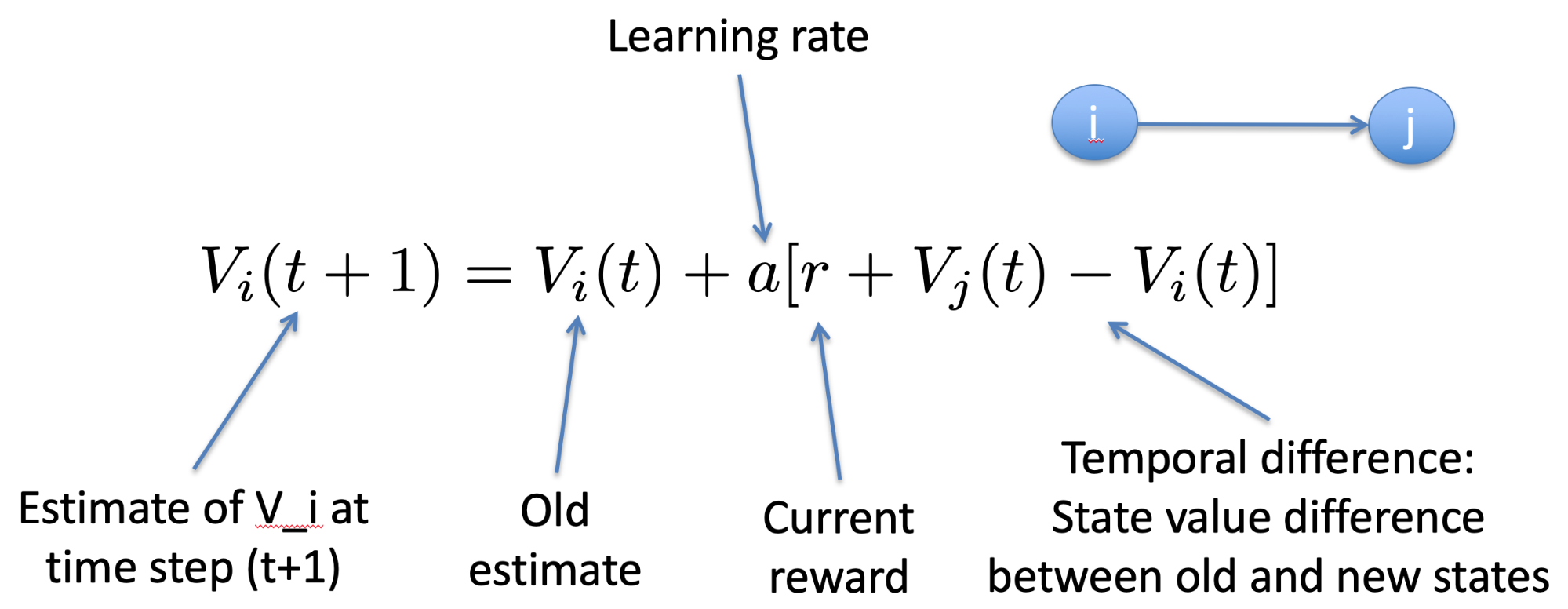

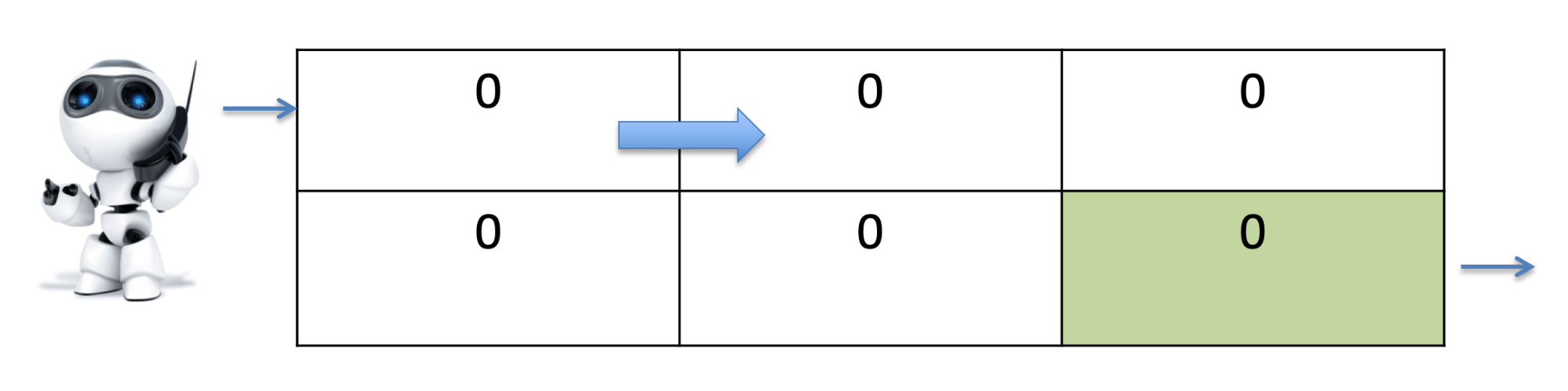

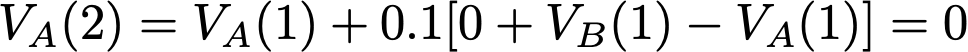

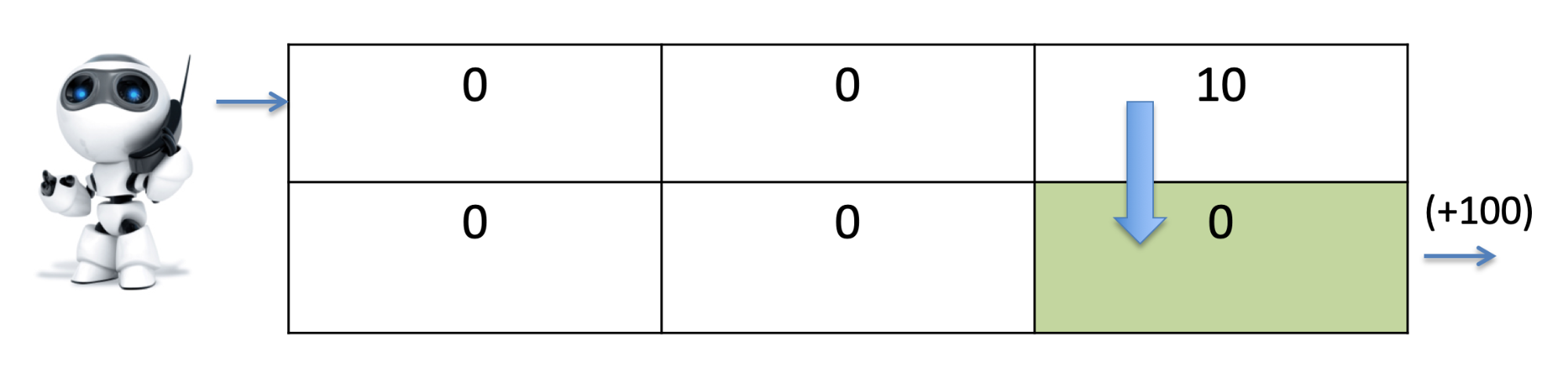

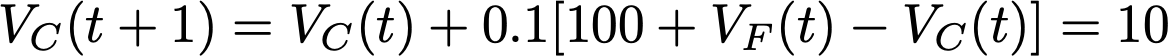

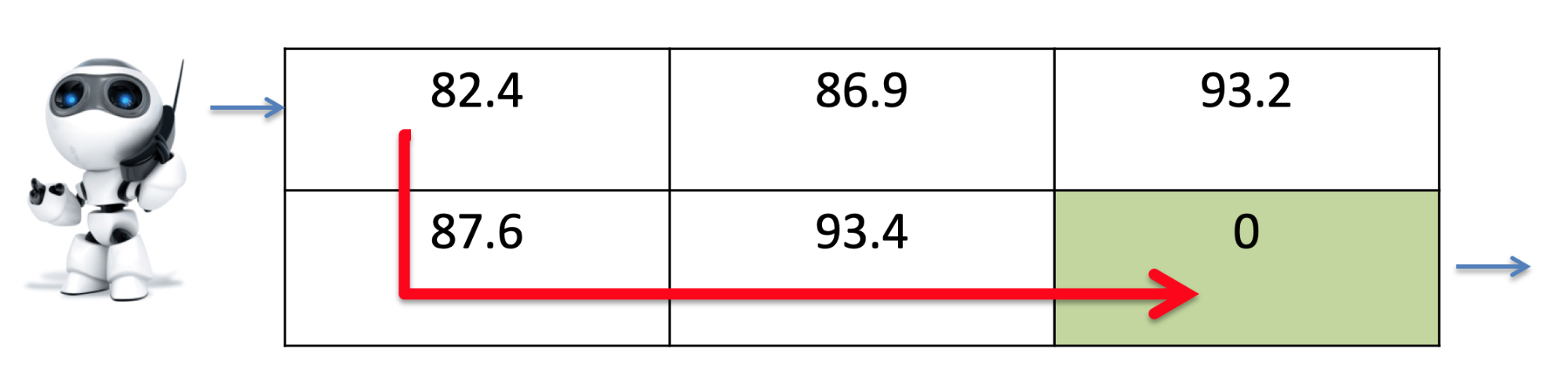

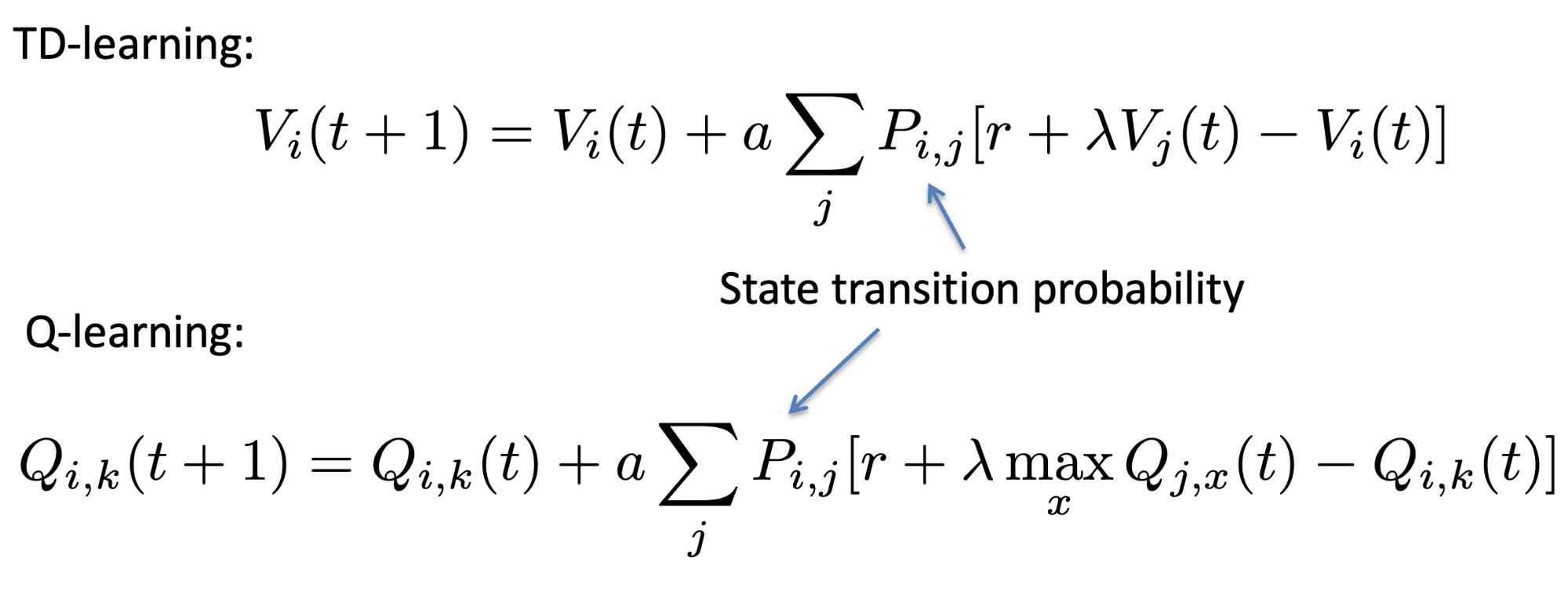

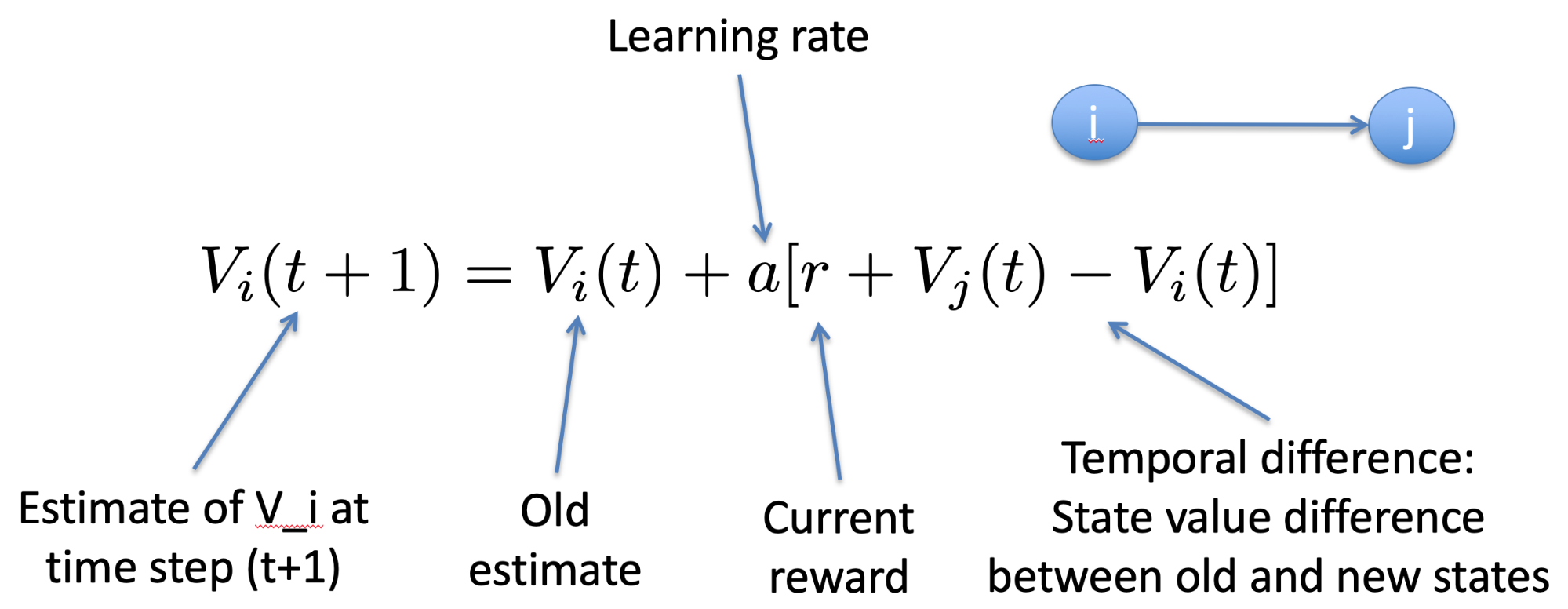

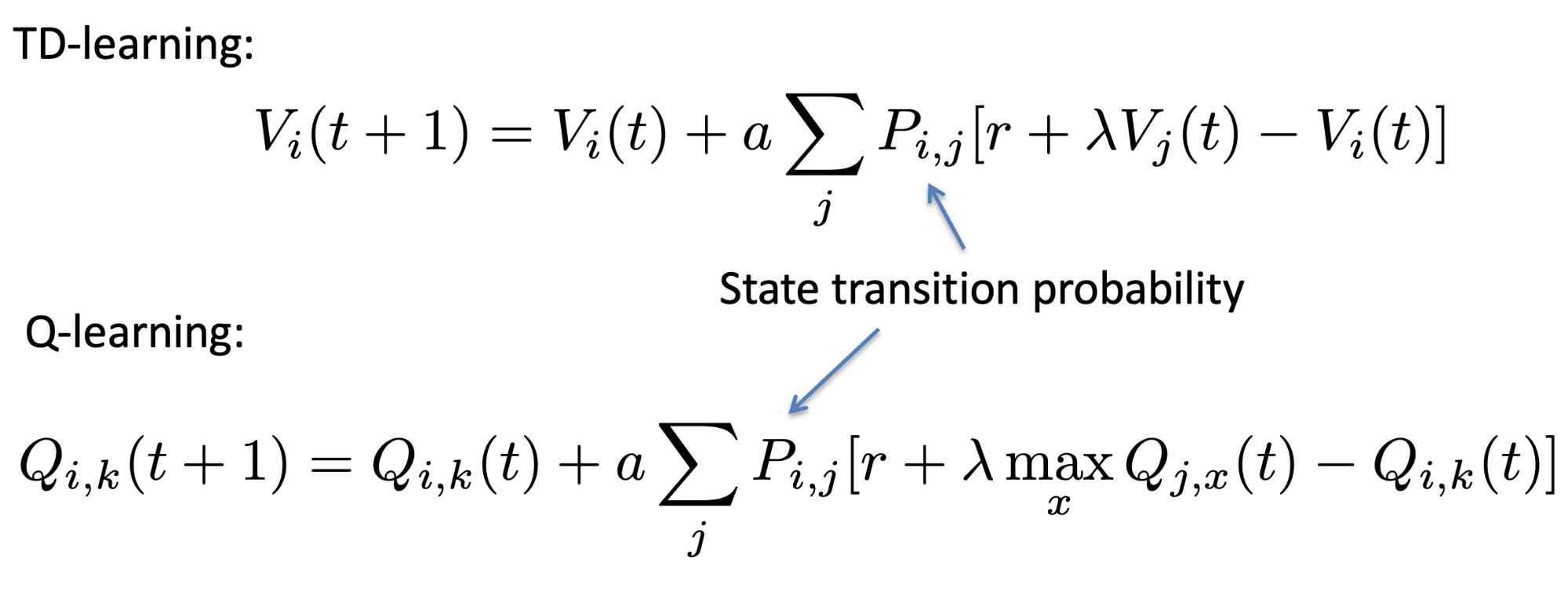

Temporal Difference Learning 91

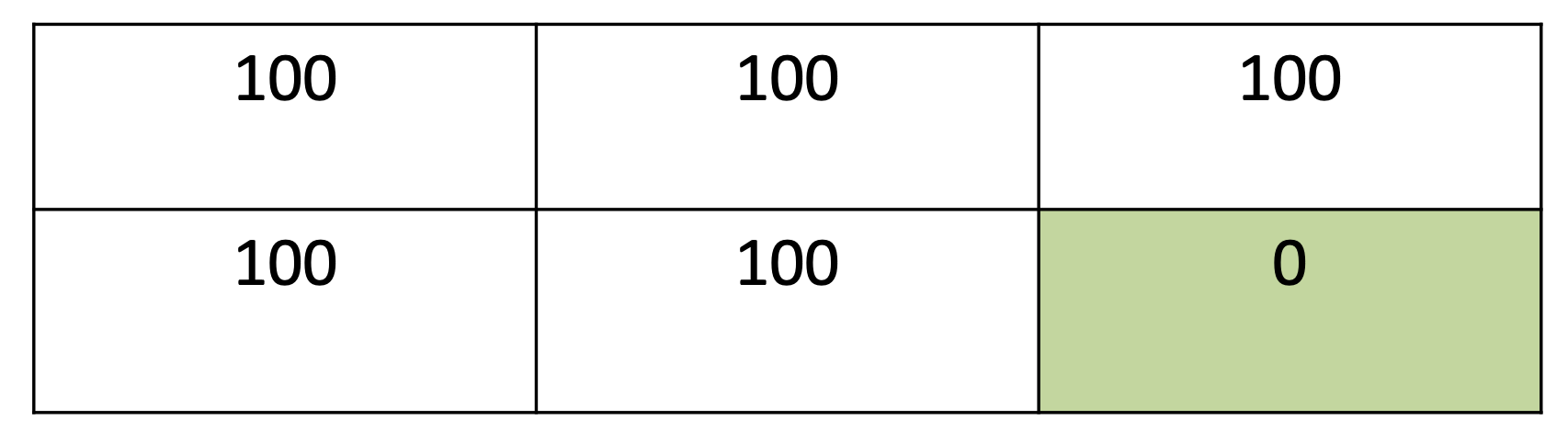

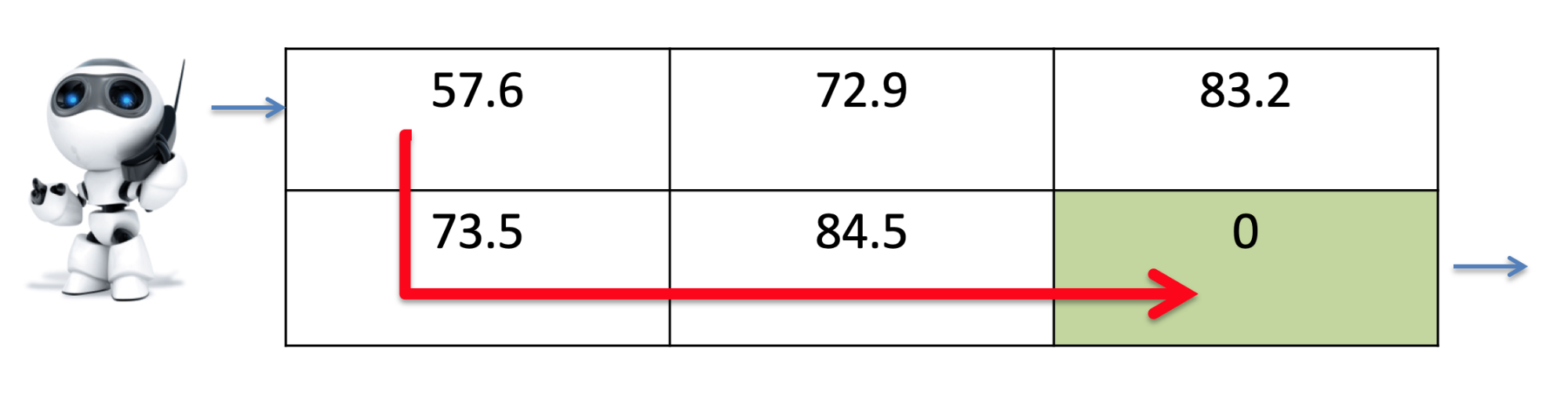

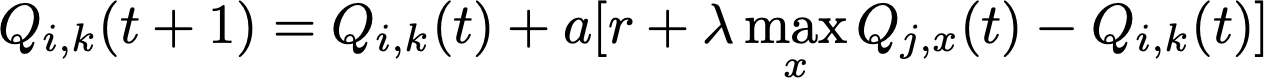

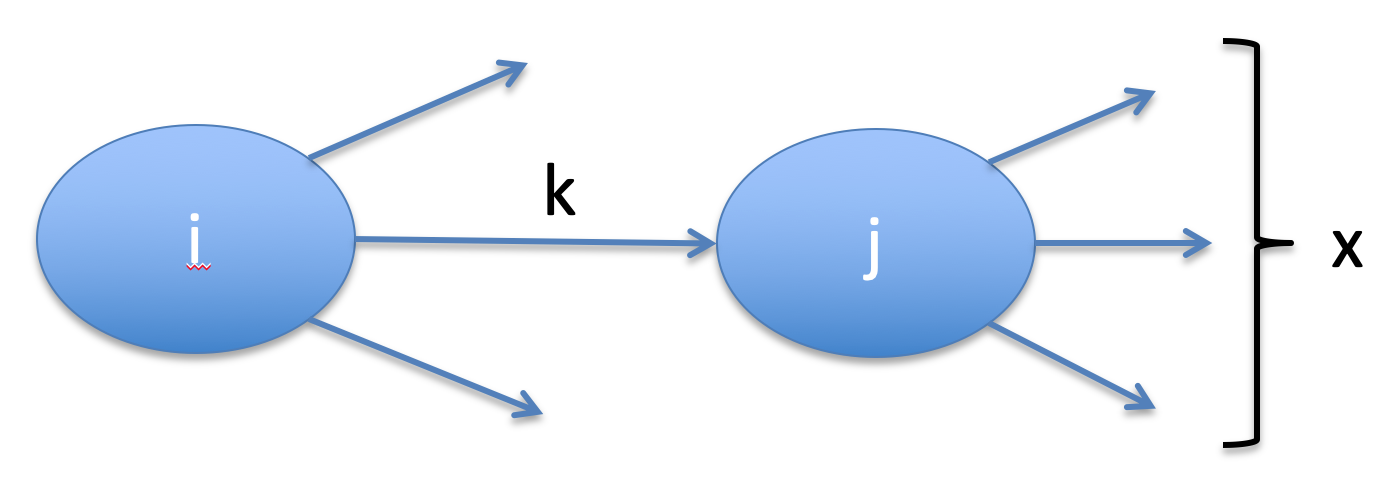

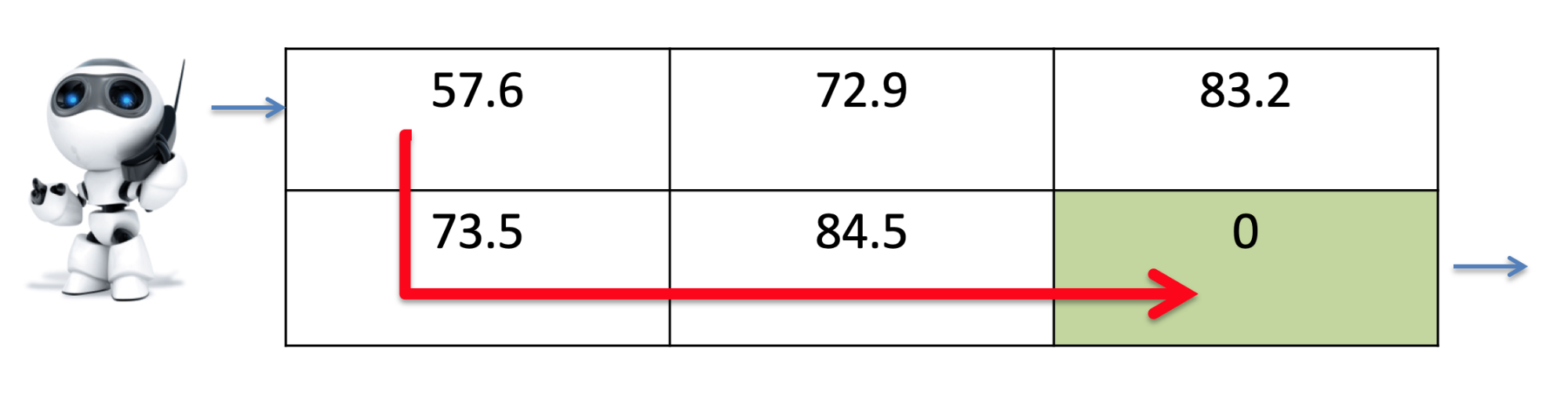

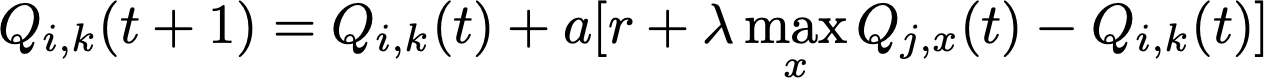

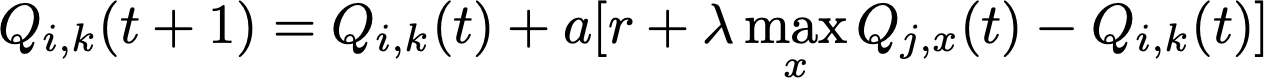

Q-Learning 94

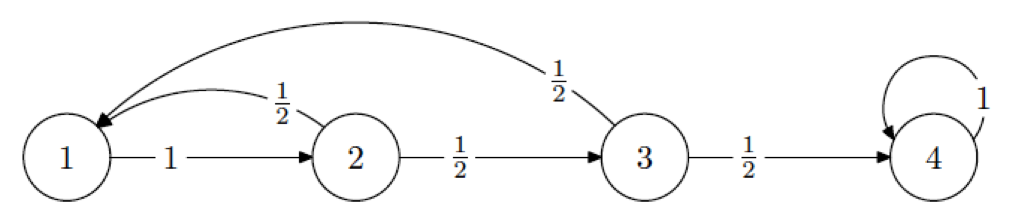

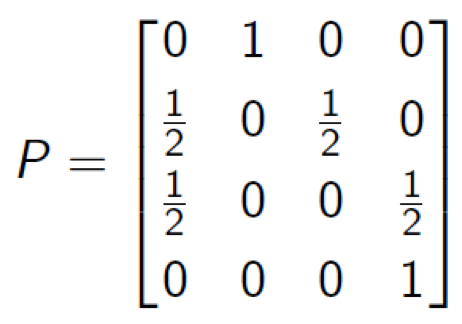

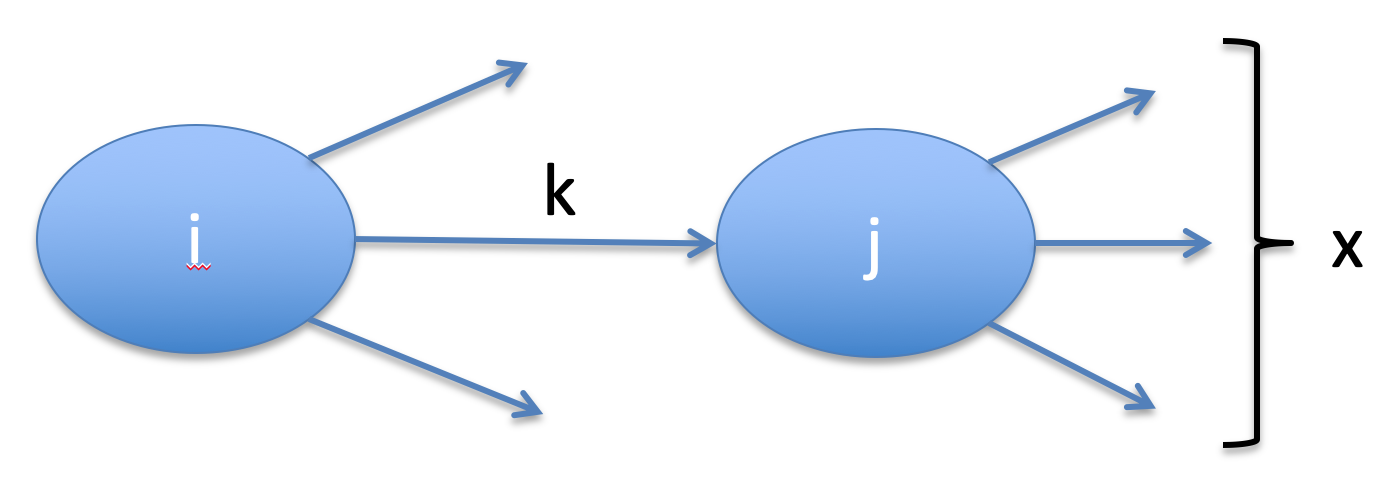

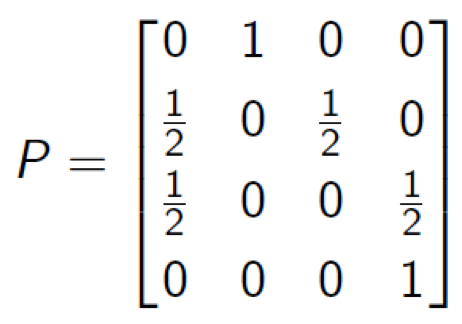

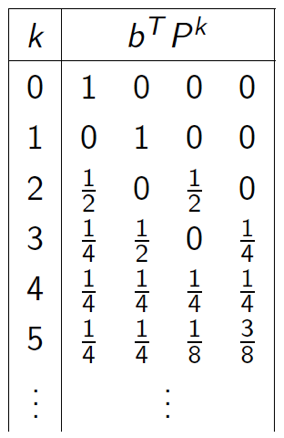

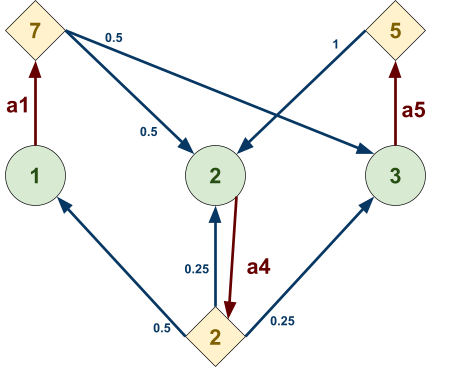

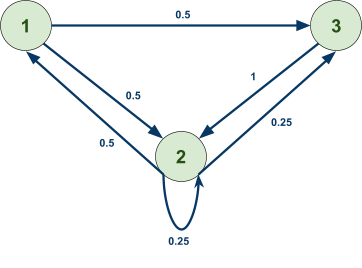

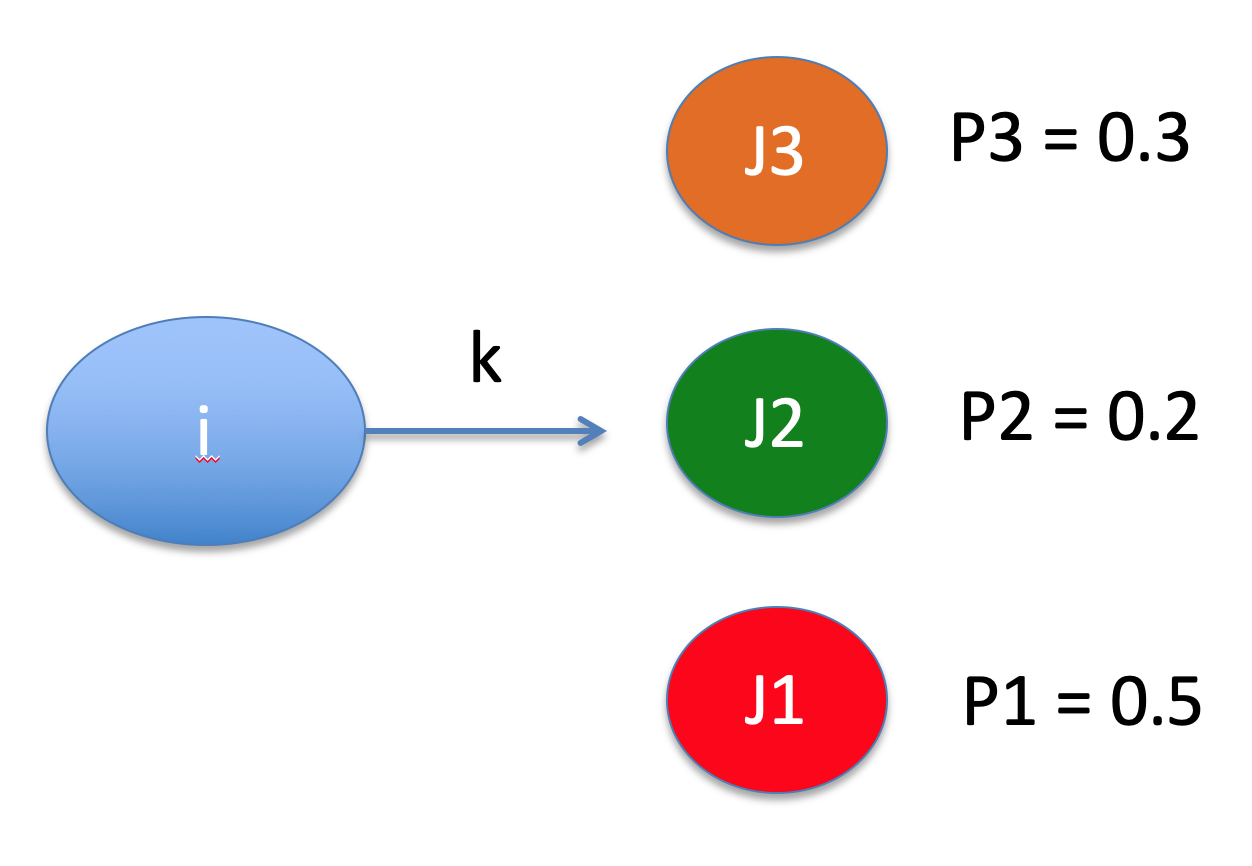

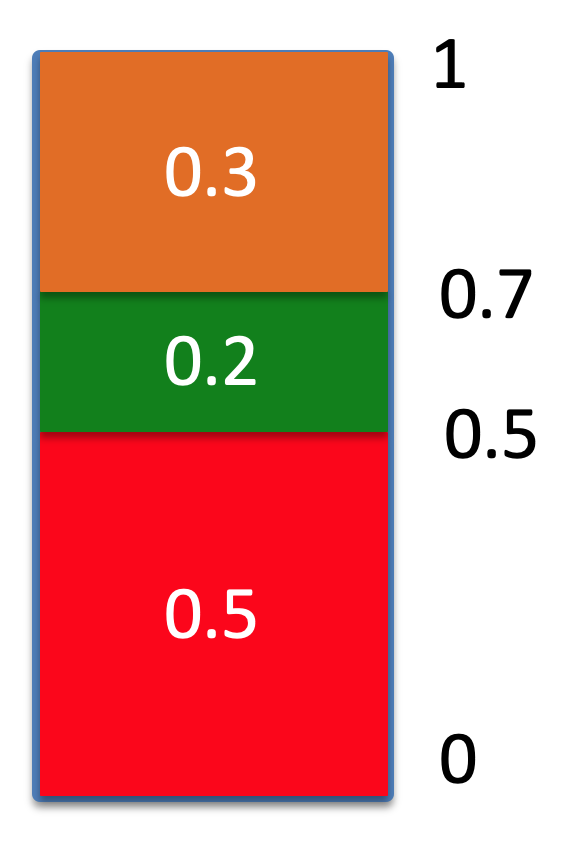

Markov Decision Processes (MDP) and Markov Chain 95

Monte Carlo Simulation (multi-armed bandits) 98

Extensions of Reinforcement Learning 100

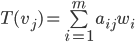

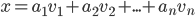

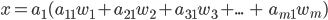

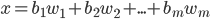

Mathematical techniques (not examinable) 101

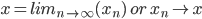

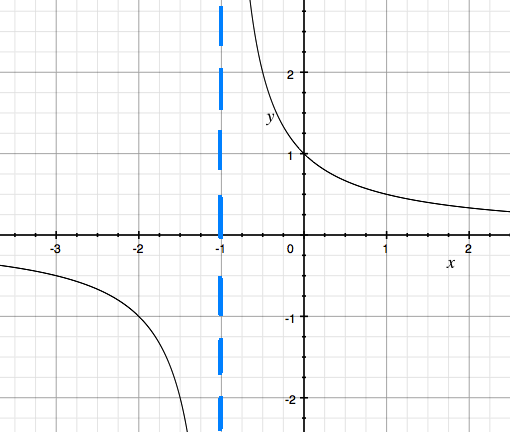

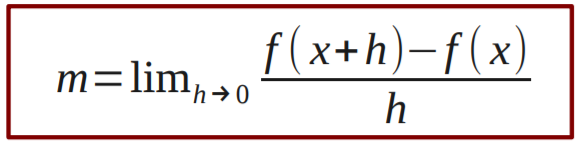

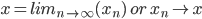

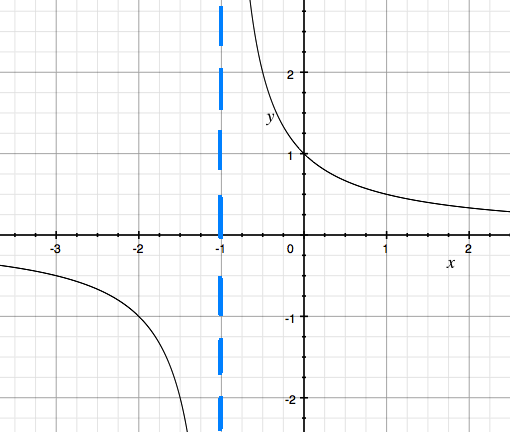

Functions, Limits, Derivatives 101

Continuity 101

Discontinuity 101

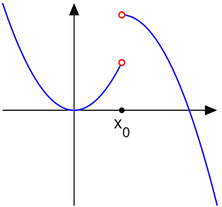

Derivatives 102

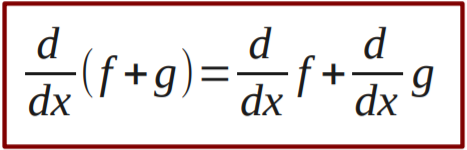

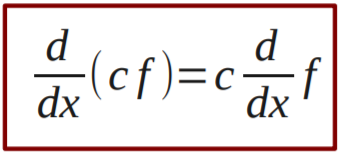

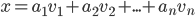

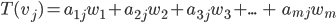

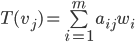

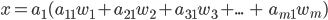

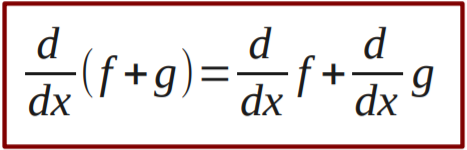

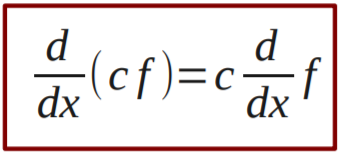

Linear combinations of functions 102

More derivative rules 102

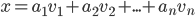

Vectors 103

What is a vector? 103

Norm 103

Scalar product 103

Linear independence 103

Basis 103

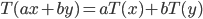

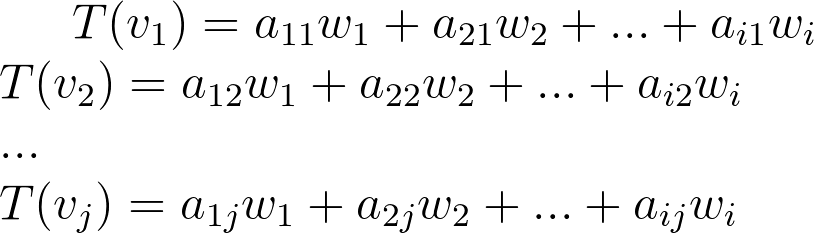

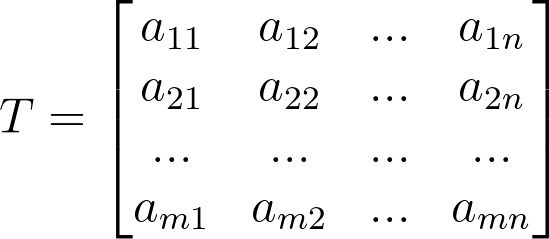

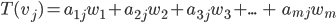

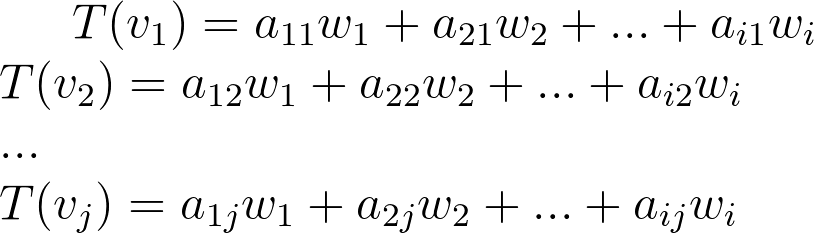

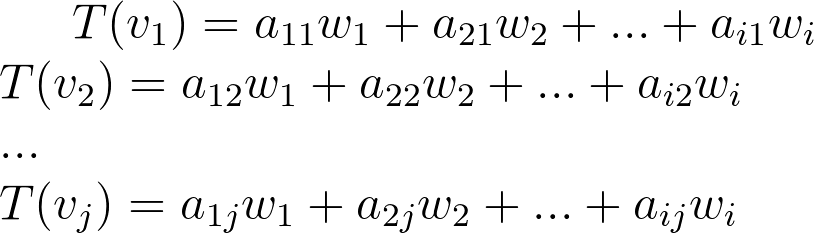

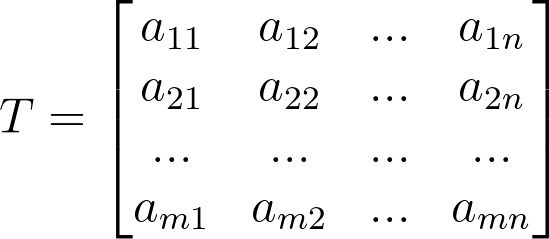

Linear maps 104

Matrices 104

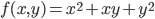

Scalar fields 105

Vector fields 105

Matrices 105

Partial derivatives 105

Gradient descent 105

Application, optimisation and regression problems 105

TL;DR 105

Classical AI 106

Blind Search 106

Heuristic Search 108

History and Philosophy 108

Local Search 109

Constraint satisfaction problems 110

Adversarial Games: Minimax 112

Alpha-beta Pruning 112

Planning 112

Recent Advances in AI 114

Classification 114

Decision trees 114

Nearest Neighbour 114

Neural Networks 115

Reasoning 116

Decision Making 117

Bandit Theory 117

Reinforcement Learning & Markov Decision Process 118

Intro

-

Current problems/challenges AI is facing:

- Ethics

-

AI might not work in the same way as the human brain and we

don’t know exactly how the human brain works

-

Specific AI is better/easier to create than a general

purpose AI

-

AI lacks life experience

-

AI might replace human jobs

-

AI might need a lot of computational power which requires a

lot of space

- Fear of AI

-

The main volume that covers the material is...

-

... of which you can download by clicking the link.

Classical AI

Blind Search

-

Blind search is a form of search which is applied in a

state space represented as a tree

-

There is always going to be a trade-off between speed,

memory usage and optimality

-

The optimality is defined by a cost function

Problem Types

-

There are four types of problems in classical AI:

|

Problem name

|

Properties

|

Example

|

|

Single-state problem

|

-

You always know what state it is in, at any

time during the problem.

|

The shortest path problem.

This is deterministic because when you input

the same graph, starting point and ending point,

you’ll always get the same shortest

path.

This is observable because you can always see

where you’re going.

|

|

Sensorless / multi-state problem

|

-

Deterministic

-

Non-observable

-

The initial state could be anything; this

requires a general solution that works for

all inputs.

|

Guessing someone’s random number.

This is deterministic because the solution will

always be the same number that your friend

picked (e.g. if they pick ‘6’, the

solution will always be ‘6’, it

can’t be different in any other

run).

Guessing numbers like 5 or 12 are only

solutions for 2 possibilities. Since you

don’t know the number to guess,

you’ll need a solution that satisfies

every number (like saying their number is

“x”).

|

|

Contingency problem

|

-

Nondeterministic

-

Partially observable (maybe)

-

Nondeterministic means that, even with the

same input, the action might perform

differently (this is due to things like race

conditions, random numbers etc.)

-

Partially observable means that you

can’t view the whole state; only parts

of it.

-

Basically, you don’t really know

enough until you do something. Once

you’ve performed an action, you see a

reaction, and you use that reaction (and

more actions) to eventually solve the

problem.

|

A piñata (even though it’s not a

computer science problem, it explains

nondeterministic and partially observable

well).

This is nondeterministic because you may not

hit the piñata. If you tried multiple

times, you’ll miss sometimes and hit the

other times. In a more technical sense, the

actions will randomly give the result of hit or

miss, therefore the solution to this problem is

‘n’ number of swings, where

‘n’ is a random number.

This is partially observable because you know

that the piñata is somewhere in-front of

you (unless everyone spins you around, then

it’s non-observable). You just don’t

know where exactly. You will find out some

information once you swing your bat, though. If

you hit it (solve the problem), you’ll

feel it hit your bat. If you miss, you’ll

know that the piñata is not where you

swung.

|

|

Exploration problem

|

-

You don’t even know what effect your

actions have, or how big the problem

is.

-

Therefore, you need to

‘explore’ the environment before

you try to solve the problem.

|

Robot exploration.

In this problem, a robot looks around using

actions and maps out the area it’s

in.

This has an unknown state space because the

robot has no idea where they are, but

they’re using their actions (such as

cameras, touch-sensitive peripherals etc.) to

find out and map out their environment.

|

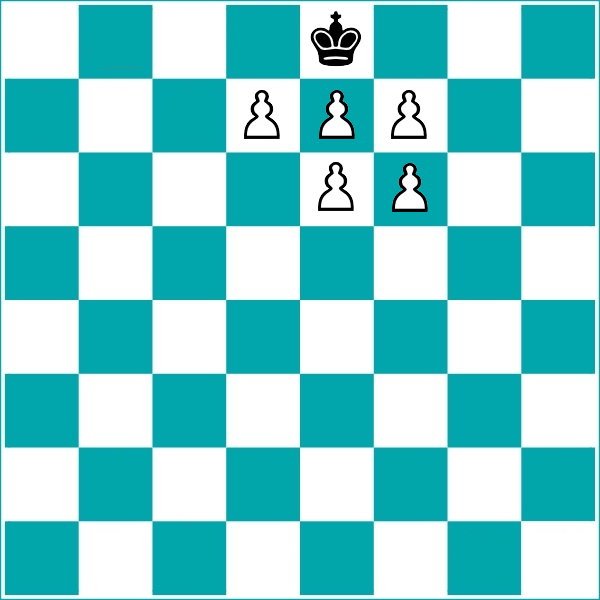

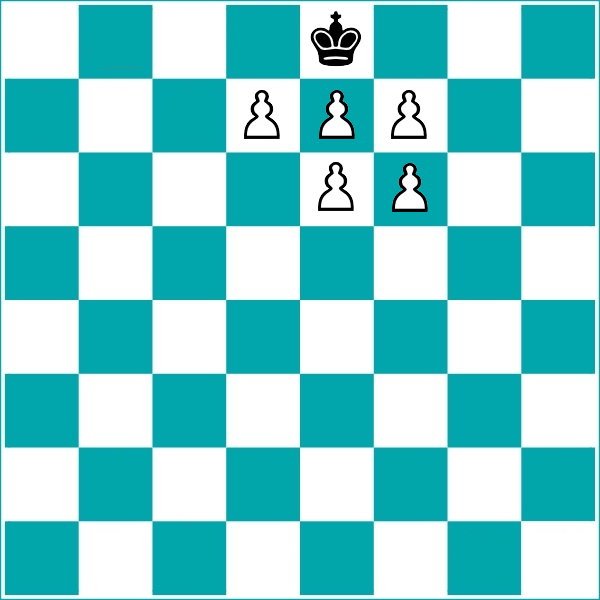

Single-state Problem Formulation

-

A problem (that has one state at a time) is defined by four

items:

|

Item name

|

Description

|

Example

|

|

Initial state

|

The state we start off at

|

When you start a chess game, the initial state

is always:

|

|

Actions or successor function

|

The transitions from one state to the

next

|

When you move a piece in chess, the state goes

from one to the other:

|

|

Goal test

|

The condition that, when true, means the

problem is done

Explicit: “board in state x”

Implicit: “check-mate”

|

When the opponent’s king is in

check-mate:

|

|

Path cost

|

A variable that should be reduced where

possible to get the optimal solution

|

There are multiple path costs that a chess

algorithm can adopt.

One could be ‘number of opponent

pieces’. This would make the algorithm

aggressive, since it would try to reduce the

number of opponent pieces as much as it

can.

Another could be ‘number of moves

taken’. This would make the algorithm more

strategic, achieving a checkmate in the least

amount of moves.

|

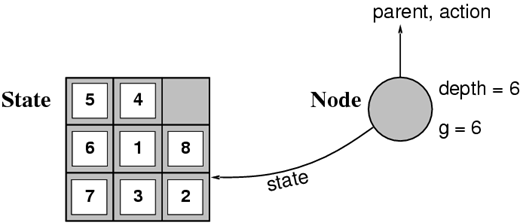

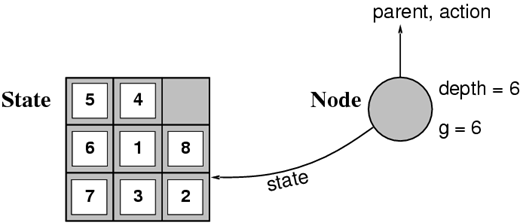

State vs Nodes

-

A state is a representation of a physical

configuration.

-

A node is a data structure constituting part of a search

tree used to find the solution.

-

A state is a property of a node.

-

A node has the properties “state”,

“parent”, “action”,

“depth” and maybe “cost”.

Search Strategies

-

Strategies are evaluated with the following factors:

-

completeness - does it always find a solution if one exists?

-

time complexity - how the number of nodes generated (and time taken) grows

as the depth of the solution increases

-

space complexity - how the maximum no. of nodes in memory (space

required) grows as the depth of the solution increases

-

optimality - does it always find a least-cost solution?

-

Time and space complexity are measured in terms of:

-

b: how many children each node can have in the search tree

(maximum branching factor)

-

d: depth of the least-cost (optimal) solution

-

m: maximum depth of the state space (could be

infinite)

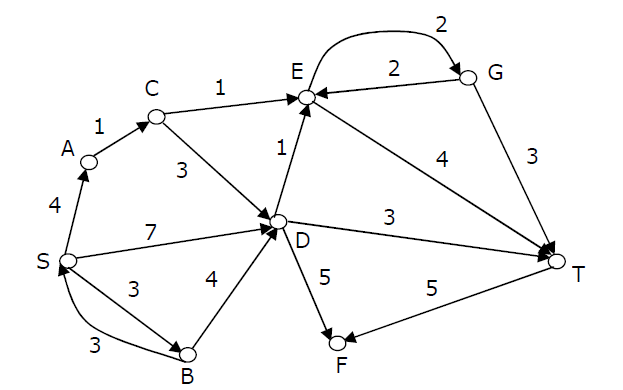

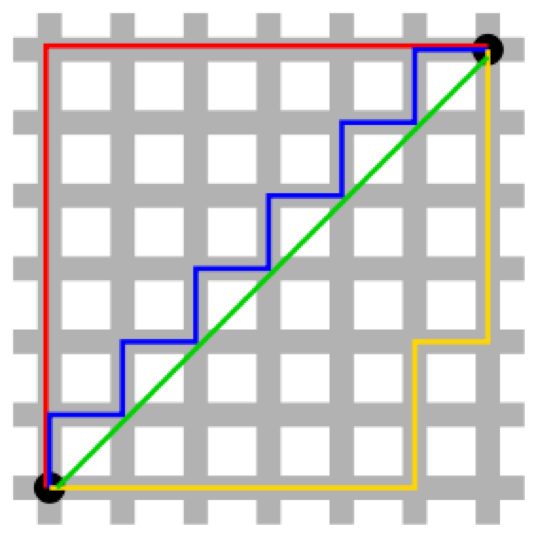

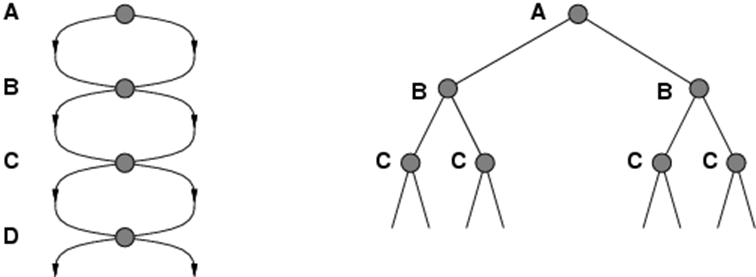

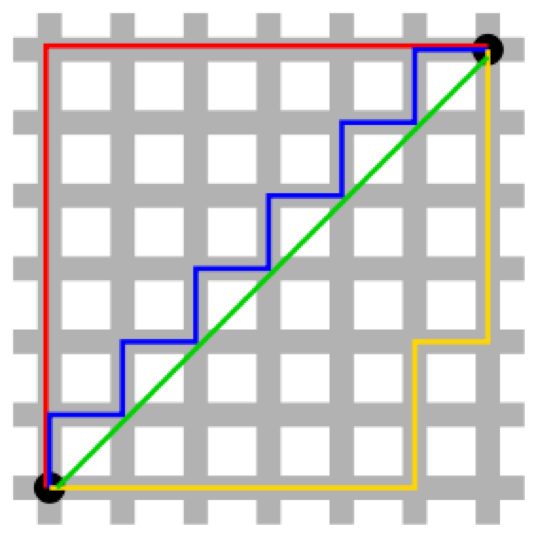

Searching using Tree Search

-

To find the solution to a problem, you need to find a path

from the initial state to the state where the goal test

returns true.

-

This is most commonly achieved using a type of tree

search.

-

There are four kinds of tree search algorithms used for

this.

|

Tree search

|

Description

|

Example / Animation

|

|

Breadth-first search

|

In breadth-first search, all of the nodes at

the current depth level are searched before

moving onto the next depth level.

This is complete (assuming the branching factor

isn’t infinite), even with infinite depth

or loops.

Only problem is, the space complexity is

terrible.

However, breadth-first search is optimal (finds

the least-cost solution) if the step cost is

constant. This is true because it finds the

shallowest goal node.

|

Complete?

|

Yes, if b (branching factor) is

finite

|

|

Time?

|

O(bd+1)

|

|

Space?

|

O(bd+1)

|

|

Optimal?

|

Yes, if step cost is

constant

|

|

|

|

Depth-first search

|

In depth-first search, it starts at the root

and goes all the way down to the far-left leaf,

then backtracks and expands shallower

nodes.

The space complexity is great (it’s

linear) because branches can be released from

memory if no solution was found in them.

However, it is not complete if the tree has

loops since the search is going to get stuck in

the loop and will never be able to exit it, or

if the depth is infinite as it’ll just

keep going down one branch without

stopping.

Depth-first search is not optimal because it

searches down the furthest branch and could miss

shallower goal nodes.

|

Complete?

|

No, if depth is infinite (or

has loops) it’ll go on

infinitely

|

|

Time?

|

O(bm)

|

|

Space?

|

O(bm)

|

|

Optimal?

|

No, because deeper solution

might be found first

|

|

|

|

Depth-limited search

|

It’s the same as depth-first search, but

there’s a depth limit (n), and anything

below that depth limit doesn’t

exist.

|

Complete?

|

No, because the solution might

be deeper than the limit

|

|

Time?

|

O(bn)

|

|

Space?

|

O(bn)

|

|

Optimal?

|

No, because deeper solution

might be found first

|

|

Limit: 2

|

|

Iterative deepening search

|

This is an applied version of depth-limited

search, where we start from depth limit 0 and

increment the depth limit until we find our

goal.

We do this to combine depth-first

search’s space efficiency with

breadth-first search’s completeness.

Although we get those benefits, IDS is a little

bit slower than depth-first.

|

Complete?

|

Yes

|

|

Time?

|

O(bd)

|

|

Space?

|

O(bd)

|

|

Optimal?

|

Yes, if step cost is

constant

|

|

Goal: Node 7

|

Repeated States

-

Remember that when searching a graph, you need to be able to spot repeated states. If not, your

implementation could go down unnecessary paths, or even go

through infinite loops!

Bidirectional Search

-

A bidirectional search does two searches, one at the

initial state and one at the goal state, and they keep going

until they meet in the middle.

-

The reason for this is because it’s quicker, bd/2 + bd/2 is much less than bd

-

At each iteration, the node is checked if it has been

discovered by both searches. If it has, a solution has been

found.

-

However, this is only efficient if the predecessor of a

node can be easily computed

Heuristic Search

Best-first Search

-

We have a function f(n) where, for each node

‘n’, it returns its

“desirability” - or in other words it returns how good that state

is.

-

Then, we just go from one node to the next, looking for the

most desirable one.

-

This also works for the reverse, using a “cost”

function.

-

There are two special cases for this:

-

Greedy best-first search

- A* search

Greedy Best-first Search

-

It’s just like best-first search, except our function

is a heuristic, so f(n) = h(n)

-

For example, h(n) could just be the linear straight-line

distance between node n and the goal.

|

Complete?

|

No – can get stuck in loops like depth

first if heuristic is bad

|

|

Time?

|

O(bm), but a good heuristic can give dramatic

improvement

|

|

Space?

|

O(bm) -- bad, keeps all nodes in memory

|

|

Optimal?

|

No

|

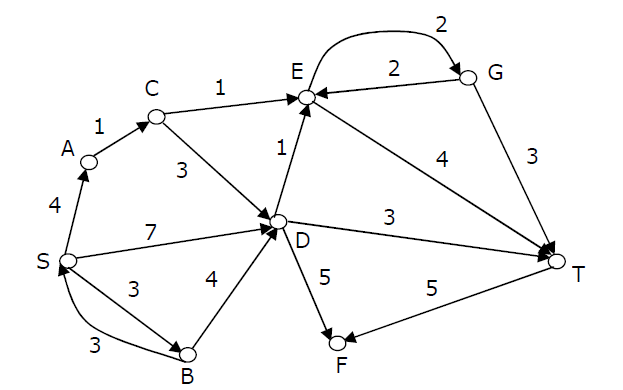

-

The animation above uses greedy best-first search on a

graph.

-

The heuristic function, h(n), is just the straight-line

distance from node ‘n’ to the goal.

-

Note how it’ll always pick the heuristic of the

smallest cost.

-

Notice how only the heuristic is visible. The actual cost

of travelling through the paths are abstracted.

-

Sometimes, this is OK. But what if the paths at the bottom

had really big costs, like 546, 234, 783 etc. and the paths

at the top had really low costs like 6, 3, 2 etc.? This

solution would be really bad, and the heuristic

wouldn’t be suitable.

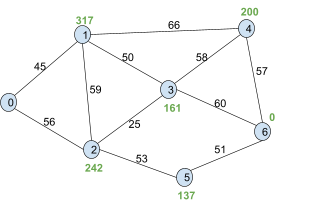

A* Search

-

Like greedy best-first search, but with an extra

function.

-

f(n) = g(n) + h(n) where:

-

g(n) is the cost to get up to that node n.

-

h(n) is the heuristic cost of n to the goal node.

-

f(n) is the estimated cost of taking the path from the

start to n and then to the goal node.

|

Step #

|

Description

|

Illustration

|

|

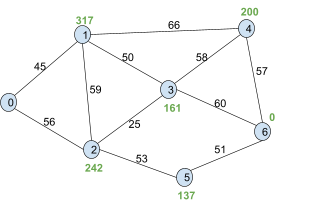

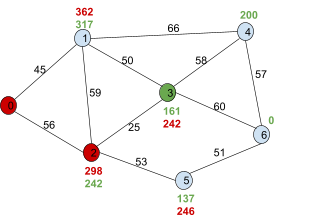

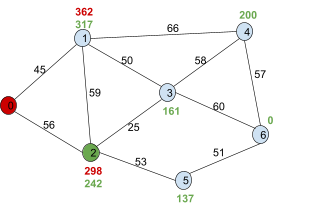

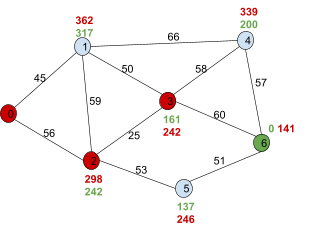

1

|

This is the graph we’ll use A* on.

We’ll go from node 0 to 6.

The black numbers are the weights of the paths, the green numbers are the heuristic h(n) and the red numbers will be f(n), which is the sum of the weight up to that

node and it’s heuristic.

The blue nodes are unvisited (open), the red nodes are visited (closed) and the green node will be the current node we’re

on.

|

|

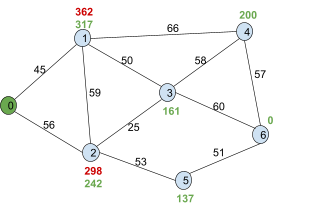

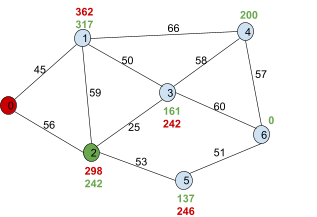

|

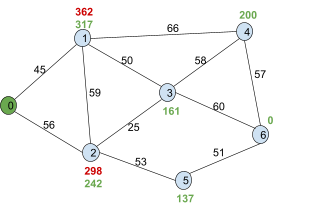

2

|

First, we look at the adjacent nodes. We need

to calculate their f(n)!

For node 1, we add g(1) and h(1), which is 45 +

317, which is 362.

For node 2, we add g(2) and h(2), which is 56 +

242, which is 298.

|

|

|

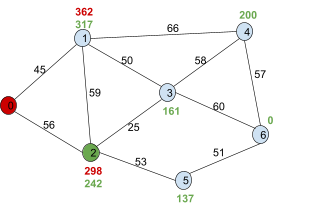

3

|

We need to pick a node with the least

f(n).

Right now, it’s 2, so we pick 2.

|

|

|

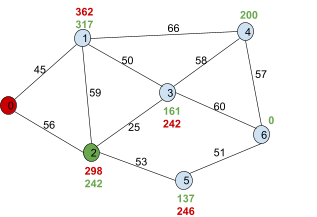

4

|

Now we need to find the f(n) of the adjacent

nodes of 2!

Firstly, 3. g(n) is 56 + 25, because it’s the cost of going from the

starting node up to that node. h(n) is 161, so

adding them together gets 242.

Second, 5. Again, it’s g(n) + h(n), which

is 246.

|

|

|

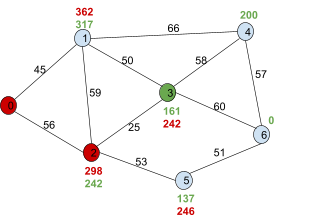

5

|

Now we need to pick the node of the lowest

f(n)!

This would be node ‘3’, so we go

with 3.

|

|

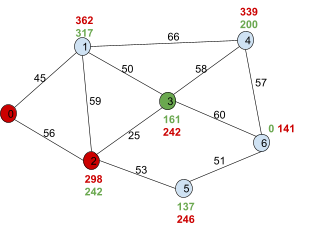

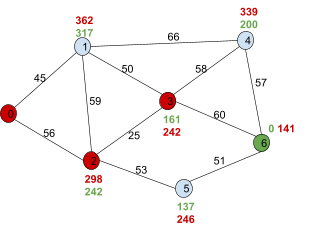

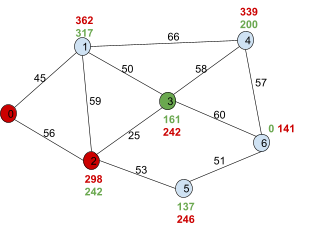

|

6

|

We calculate f(n) for 4 and 6, which are 339

and 141, respectively.

|

|

|

7

|

The next smallest unvisited f(n) is the goal

node, 6. Once we’ve discovered 6, we can

map out a path from the goal 6 to the start

0.

The shortest path is 0, 2, 3, 6.

|

|

-

If C* is the optimal path, then A* will never search

through a node n where f(n) > C*, but it will search

through all nodes where f(n) < C*.

-

A* is optimally efficient, meaning that no other algorithm

is guaranteed to expand fewer nodes than A* (unless there

are any tie-breaking nodes).

|

Complete?

|

Yes (unless there are infinitely many nodes

with f ≤ f(G) )

|

|

Time?

|

Exponential (in length of optimal

solution)

|

|

Space?

|

Keeps all (expanded) nodes in memory

|

|

Optimal?

|

Yes, if the heuristic is admissible

|

Admissible Heuristics

-

A heuristic that is admissible, or

“optimistic”, is a heuristic that always guesses

better than reality.

-

If h(n) is a heuristic function from n to the goal node,

and h*(n) is the optimal solution from n to the goal node,

then if h(n) ≤ h*(n), then h(n) is admissible.

-

An admissible heuristic never overestimates the true cost

of the solution.

Consistent Heuristics

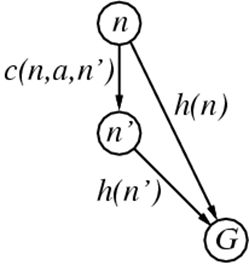

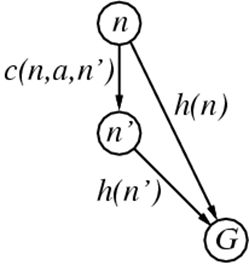

-

A consistent heuristic is a heuristic that’s always

shorter than any detour.

-

For example, let’s take this graph:

-

To go from n to G, we could take the path h(n). However, we

could also take a detour and do c(n, a, n’) to go to

n’, then do h(n’) to get to G.

-

If h is a consistent heuristic, then h(n) will always be

shorter than c(n,a,n’) + h(n’).

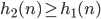

Dominance

-

If h2(n) >= h1(n) for all nodes of n, then h2 dominates h1.

-

If they’re both admissible, then h2 is better for

search, because h2 is closer than h1 to the true

value.

Relaxed problems

-

A relaxed problem is a mutation of an existing problem by

making it easier by placing fewer restrictions on the

actions.

-

You can use the optimal solution to a relaxed problem as an

admissible heuristic for the original problem.

History and Philosophy

-

Turing and Bletchley Park

-

During WWII, Alan Turing worked on code-breaking at

Bletchley Park.

-

Used heuristic search to translate Nazi messages in real

time

-

With others, e.g., Jack Good and Don Michie, he speculated

on machine intelligence, learning…

-

Much of this remained secret until after the war.

-

The military has retained a strong interest in AI ever

since…

-

1943: McCulloch & Pitts model of artificial boolean

neurons.

-

First steps toward connectionist computation and

learning

-

1950: Turing’s “Computing Machinery and

Intelligence” is published.

-

First complete vision of AI.

-

1951: Marvin Minsky builds the first neural network

computer

-

The Dartmouth Workshop brings together 10 top minds on

automata theory, neural nets and the study of

intelligence.

-

Conjecture: “every aspect of learning or any other

feature of intelligence can in principle be so precisely

described that a machine can be made to simulate

it”

-

Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur

Samuel, John McCarthy, Marvin Minsky, etc.

-

Allen Newell and Herb Simon’s Logic Theorist

-

For the next 20 years the field was dominated by these

participants.

-

Great Expectations (1952 - 1969)

-

Newell & Simon imitated human problem-solving

-

Had success with checkers.

-

John McCarthy (1958-) invented Lisp (2nd high-level

lang.)

-

Marvin Minsky introduced “microworlds”

-

Collapse in AI research

-

Progress was slower than expected.

-

Some systems lacked scalability.

-

Combinatorial explosion in search.

-

Fundamental limitations on techniques and

representations.

-

Minsky and Papert (1969) Perceptrons.

-

AI Revival (1969 - 1970s)

-

Exploiting encoded domain knowledge

-

DENDRAL (Buchanan et al. 1969)

-

First successful knowledge-intensive system (organic

chemistry/mass spec data).

-

MYCIN diagnosed blood infections (Feigenbaum et al.)

-

Introduction of uncertainty in reasoning.

-

Increase in knowledge representation research.

-

Logic, frames, scripts, semantic nets, etc., …

-

Marr’s (1980) posthumous Vision advances vision,

neuroscience and cog. sci. after he dies young

-

AI engages with cognitive philosophy:

-

Dennett’s (1981) Brainstorms and (1985) Mind’s

I (with Hofstadter)

-

Fodor’s (1983) Modularity of Mind

-

Chuchland’s (1984) Matter & Consciousness

-

Clark’s (1989) Microcognition

-

Port & van Gelder’s (1995) It’s About

Time

-

Connectionist Revival (1986 -)

-

Parallel distributed processing (Rumelhart & McClelland

‘86)

-

Multi-level perceptrons and backpropagation learning

-

Language, reasoning, perception, control + a little

mystery

-

Robust behaviour, graceful degradation

-

No representations? Sub-symbolic AI…

-

90s: Elman pioneers layered recurrent nets

-

90s: Fully recurrent networks and robot control (e.g.,

Beer)

-

Ultimately… “neural” networks as

data-mining, statistics…

-

Rodney Brooks and other roboticists challenge the

formalist, “representational” orthodoxy

-

Elephant don’t play chess, Brooks

-

Why not the whole iguana?, Dennett

-

Nevermind the blocksworld, Cliff

-

Situated, Embedded, Embodied cognition

-

Inspired by simple insects, rather than chess and

logic

-

Anti-representationalist, anti-reasoning,

anti-generality

-

Evolutionary robotics, artificial life, “the new

cybernetics”

-

Intelligent Agents (1995 -)

-

Combined whole organism perspective with a rational

utility-maximising framework borrowed from economics.

-

A response to nouvelle AI?

-

An empty label?

-

A hybrid? A bolt-hole for formalists? A

revolution?

-

“How does an agent act/behave embedded in real

environments with continuous sensory inputs”

-

Data, Data, Everywhere (2000 -)

-

Massive amounts of raw power and raw data fuel advances in

machine learning:

-

Eigenfaces

-

Corpus linguistics

-

Kernel methods

-

Computational learning theories

-

Offline vs. Online AI?

-

Pattern Recognition in a Bucket?

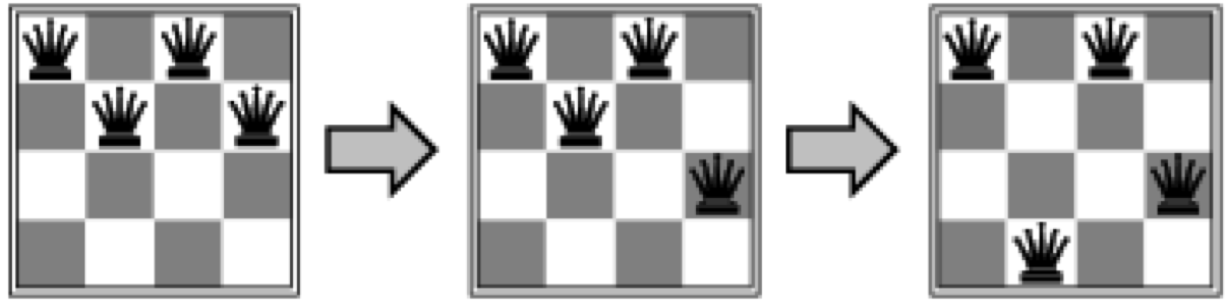

Local Search

-

So far we have been looking at searches for an optimal

path

-

However, sometimes only the goal state matters

-

It is irrelevant how you get to this state because the goal

state itself is the solution

-

For these kind of problems the state space is the set of

complete configurations

-

We will look for a configuration that satisfies certain

constraints

-

For example, in the n queens problem the constraint is that

no two queens can be on the same row, column or

diagonal

-

To find a solution we use local search algorithms

-

We have a single current state which we try to

improve

-

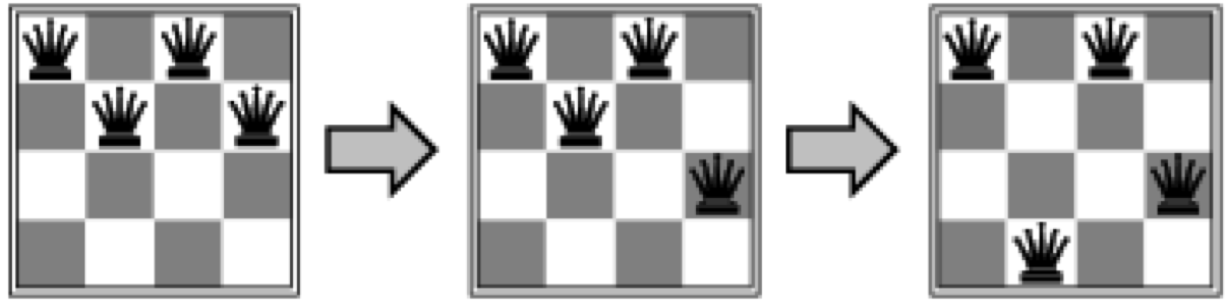

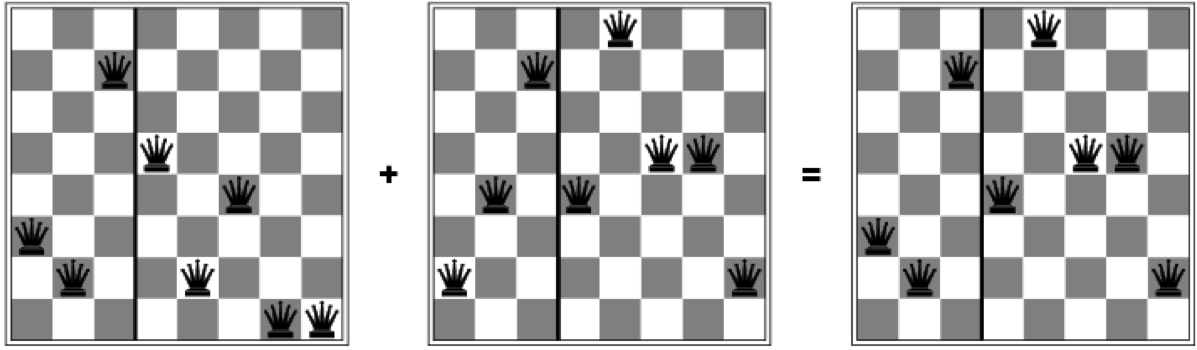

For instance, for the n queens problem we could go through

states (configurations) like this:

-

Let’s look at some algorithms

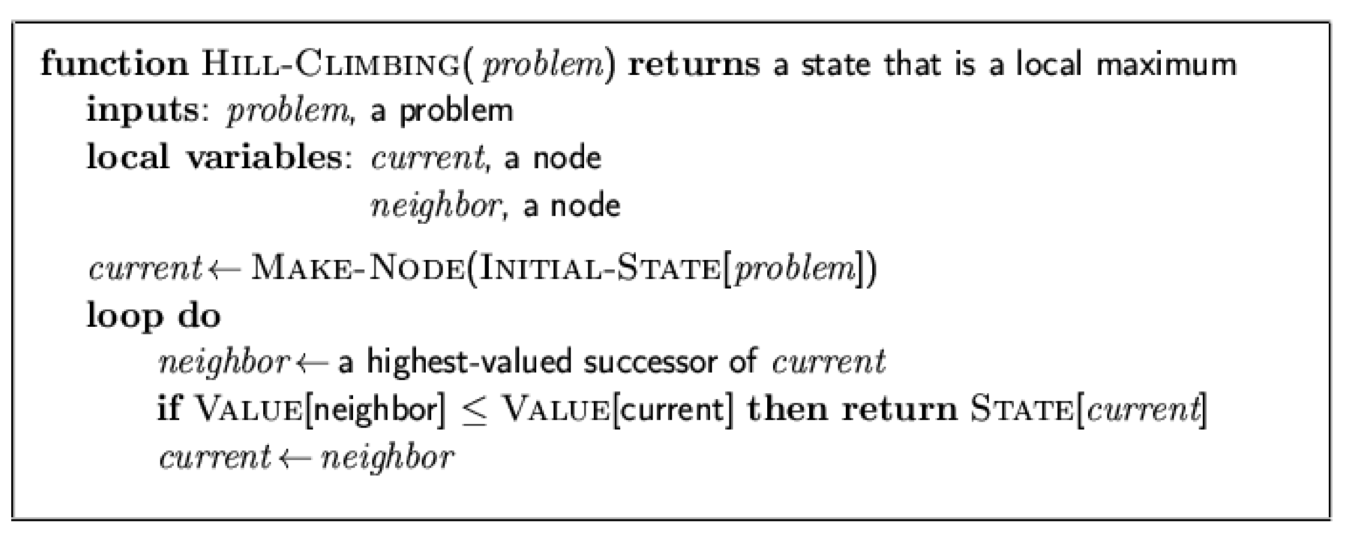

Hill-climbing Search

-

Hill-climbing search is one of those algorithms that tries

to find the solution of a problem by gradually improving an

initial state

-

This requires that we have an objective function that

determines how good each state is, i.e. that computes the

fitness of each state

-

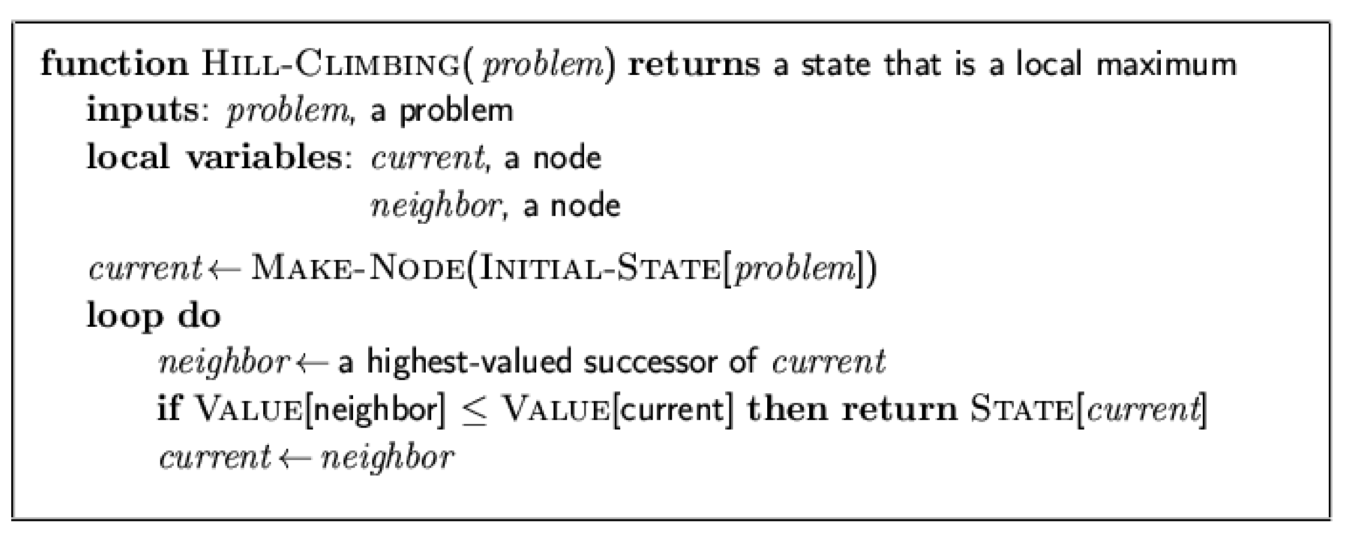

The algorithm works as follows

-

We will start at some (possibly random) initial state and

let this state be the current state

-

Then we will check the neighbour of the current state

-

The neighbour state is the highest valued successor of the

current state, determined by the objective function

-

We are not going to check any states which are further away

than our neighbour

-

If the value of this neighbour state is higher than the

current state we will assign the neighbour state to the

current state and go back to the last step

-

If the value of the neighbour state is lower than the

current state we will stop and return the current state as

the solution

-

This is because there is no better state within our

neighbours that we can reach

-

If you would like to see some pseudo code, here it

is:

-

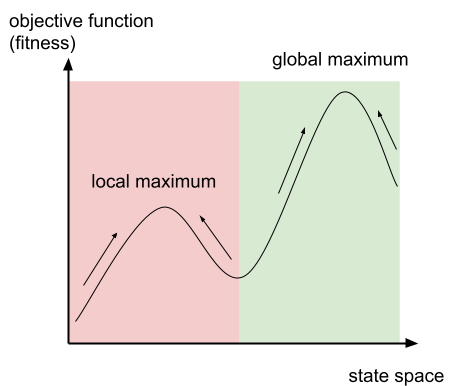

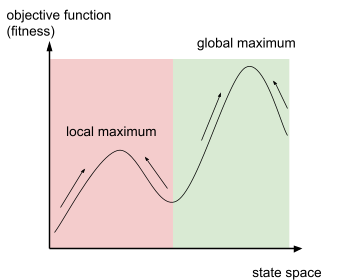

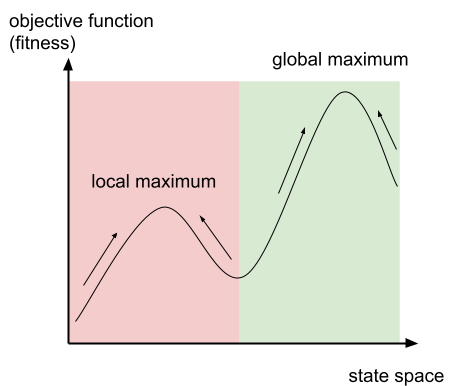

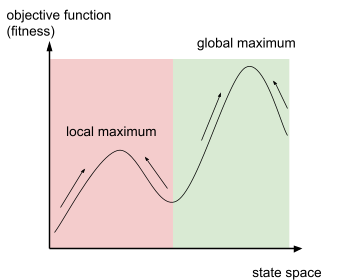

The main problem of this algorithm is that it can get stuck

at a local maximum if you use certain initial start

states

-

This is because of the way the algorithm works: It improves

intermediary solutions but stops as soon as it has reached a peak

-

However, sometimes you have to go through some worse

intermediary solutions in order to find the global

maximum

-

I will illustrate this as a graph

-

The arrows illustrate in what direction the algorithm is

going to improve the current solution

-

If you start in the red area, you will end up at the local maximum

-

However, if you start in the green area, you will end up at

the global maximum

-

To show how big this problem is, take the 8-queens

problem

-

For random initialisation of the start state, hill climbing

gets stuck 86% of the time!

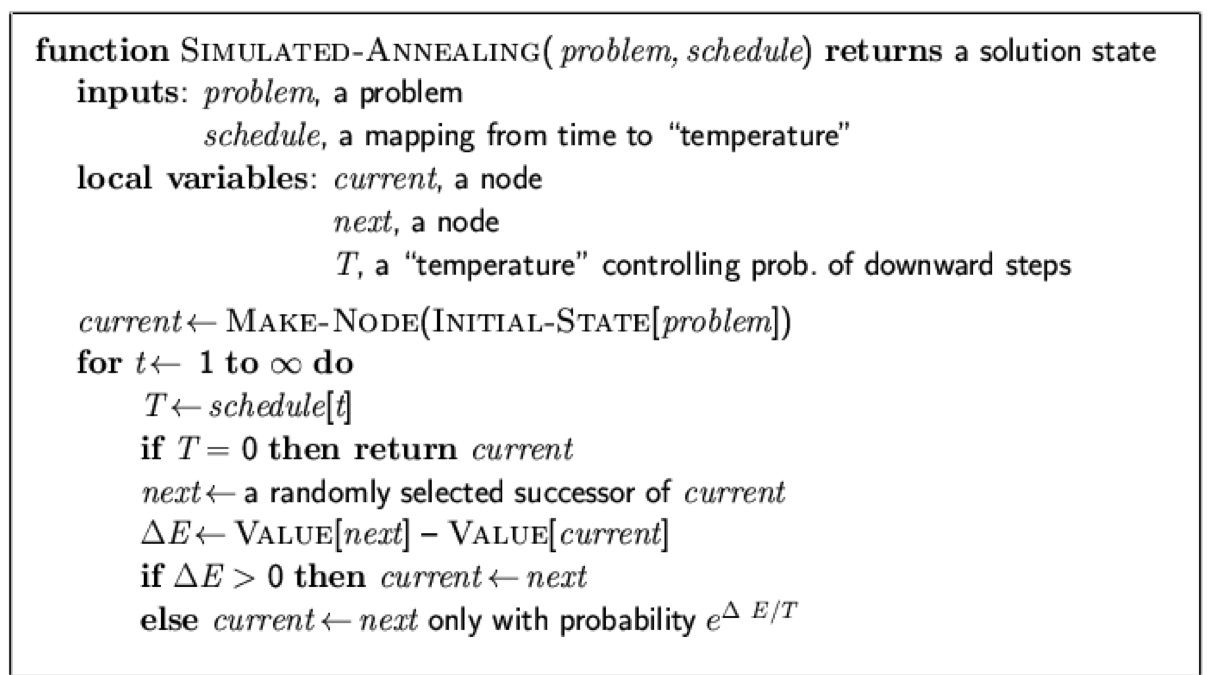

Simulated Annealing Search

-

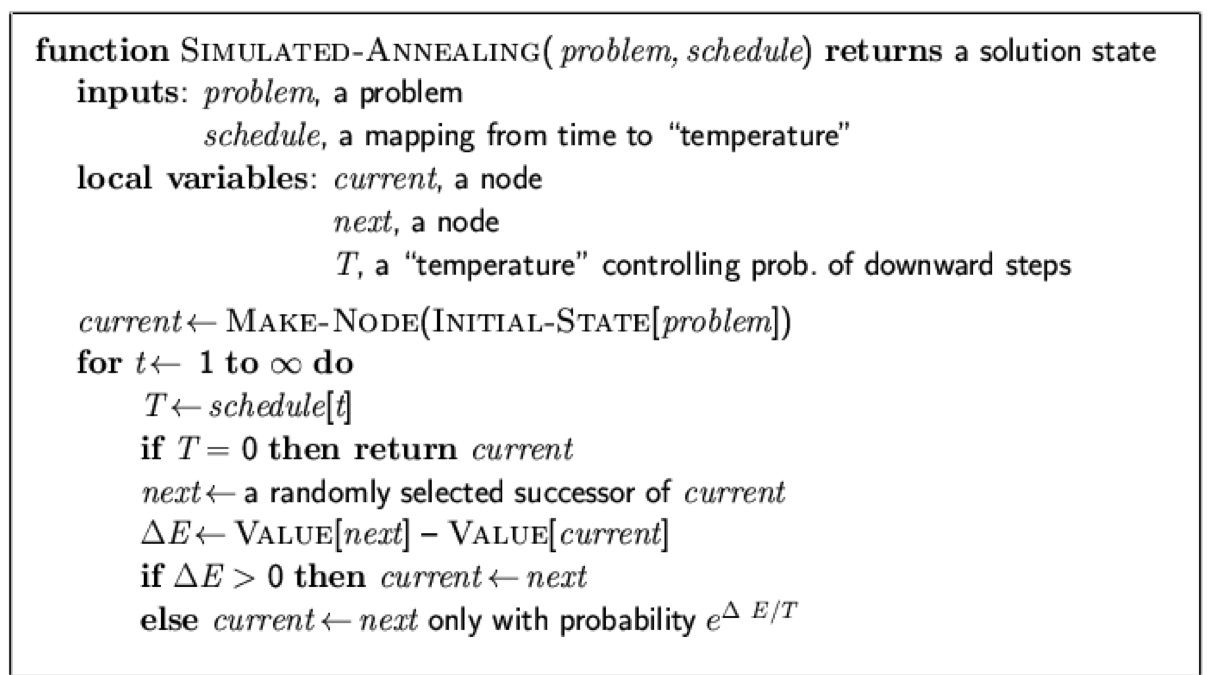

Simulated annealing is an algorithm that tries to solve the

main problem of hill climbing

-

The idea is to sometimes allow “bad” moves (in

contrast to hill climbing) but gradually decrease the

likelihood of making such a “bad” move

-

In the optimal case we will use the “bad” moves

to escape from local maxima

-

The probability of taking a bad move is controlled via a so

called “temperature”

-

At the start the temperature will be high, meaning that bad

moves are likely to happen

-

Over time we are going to reduce the temperature / cool

down, meaning that bad moves are less likely to happen

-

The algorithm works as follows

-

We will start at some (possibly random) initial state and

let this state be the current state

-

We will check if the temperature is 0

-

If it is 0 then we will return the current state as the

solution

-

We will randomly select a successor of the current node as

the next node

-

We will check if the next node is better or worse than the

current node

-

If it is better, we will select it, i.e. assign it to the

current node

-

If it is worse, we will only select it with a probability

denoted by the temperature

-

We will go back to the second step

-

If the temperature decreases slowly enough, then simulated

annealing search will almost certainly find a global

optimum

-

The time complexity is exponential in the problem size for

all non-zero temperatures

-

Some use cases are VLSI layout, airline scheduling,

training neural networks

Local Beam Search

-

The idea of local beam search is to keep track of k states

rather than just one

-

K is called the beam width

-

The algorithm works as follows

-

We start with k randomly generated states and let them be

the current states

-

If any of those current states is the goal state then

return this state

-

Otherwise, generate all their successors let the k best children

be the current states

-

Go back to step 2

-

This is like breadth-first search with the difference that

by keeping only the k best children

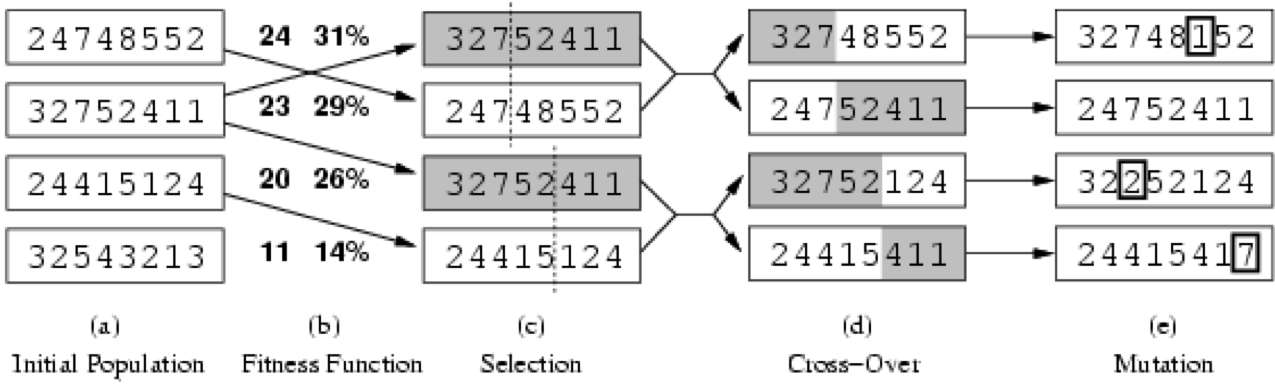

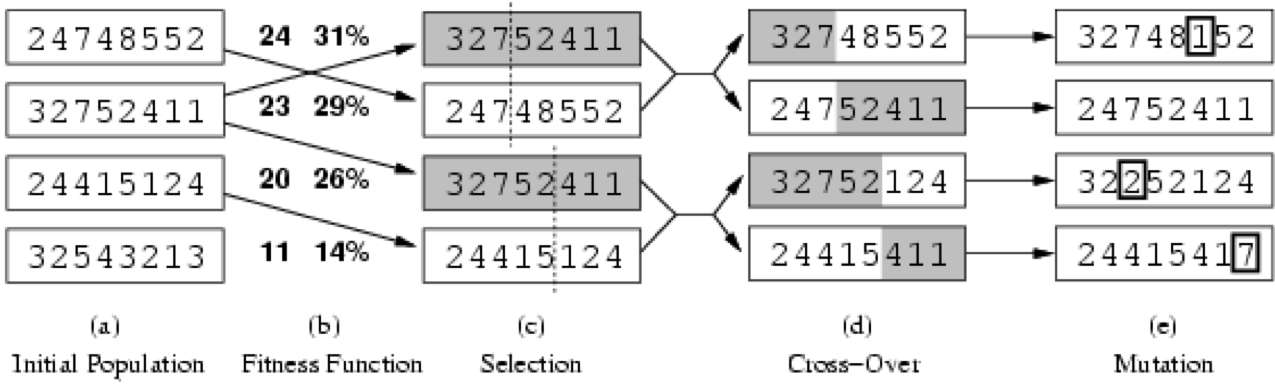

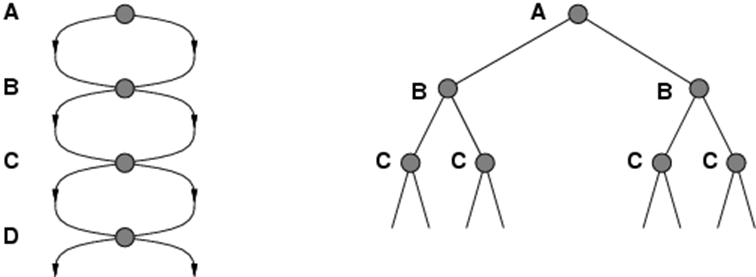

Genetic Algorithms

-

At the beginning, we start with k randomly generated

states

-

These are called the population

-

A state is represented (encoded) as a string over a finite

alphabet

-

Often it is a string of 0s and 1s

-

We need an objective function (fitness function) to

“rate” states

-

A higher value means that the state is better

-

A successor state is generated by combining two parent

states

-

Methods for generation are selection, crossover and

mutation

-

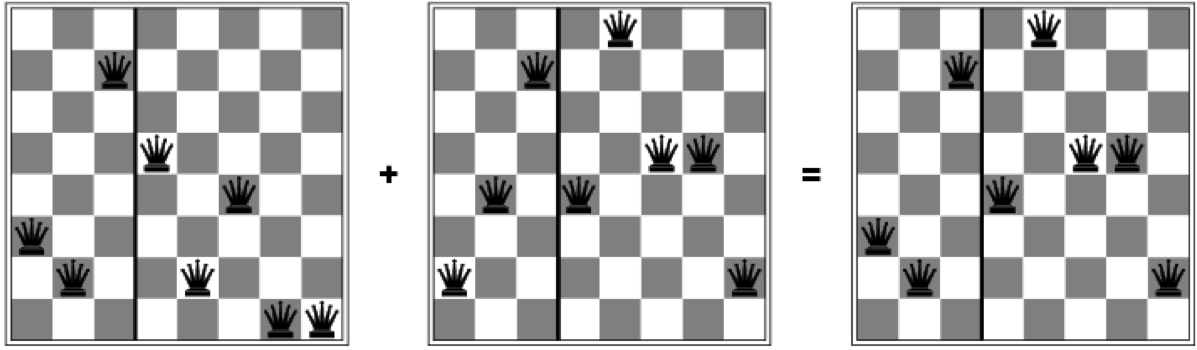

Let’s look at an example for the 8-queens

problem:

-

The numbers indicate the positions of the queens

-

The nth number = the nth row

-

The number itself notates the column

-

The fitness function is the number of non-attacking pairs

of queens

-

The minimum is 0, the maximum is 8 x 7/2 = 28

-

The percentage next to this value indicates how good the

solution is compared to other solution of the

population

-

For the first one: 24/(24+23+20+11) = 31%

-

For the second one: 23/(24+23+20+11) = 29%

- etc

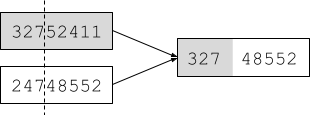

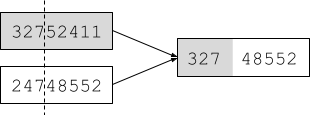

-

Let’s look at the following selection and cross-over

step graphically:

Constraint satisfaction problems

-

In a standard search problem, the state is viewed as a

black box

-

A state can be any data structure that supports

-

a successor function

-

an objective function (fitness function)

-

and a goal test

-

In CSP (constraint satisfaction problems) we will define

the data structure of the state

-

In particular, a state is defined by variables

with values from the domain

with values from the domain

-

In the goal test we will use a set of constraints

-

The constraints specify the allowable values for the

variables

-

Some examples are the graph colouring problem and the

n-queens problem

-

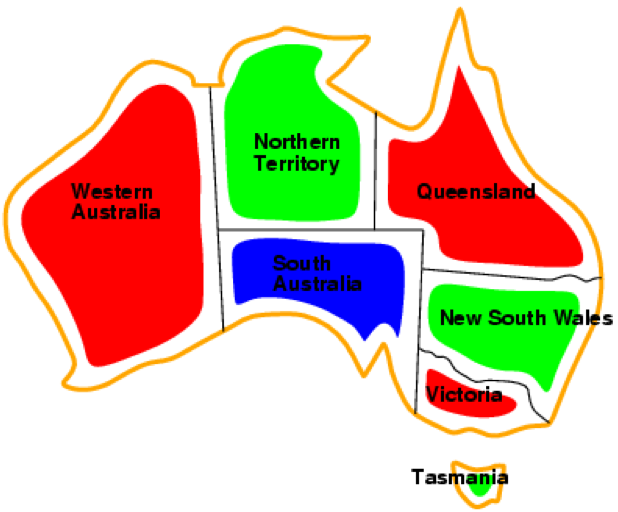

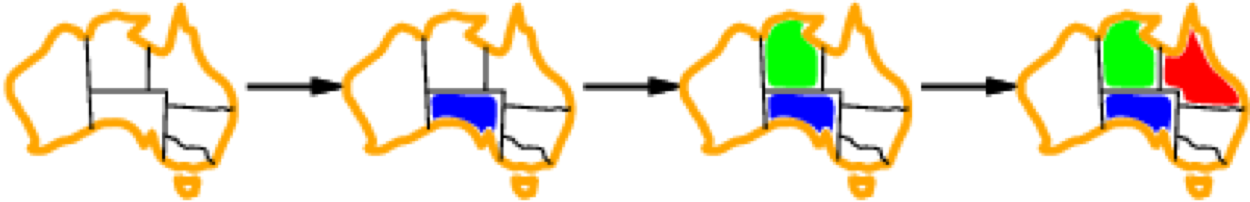

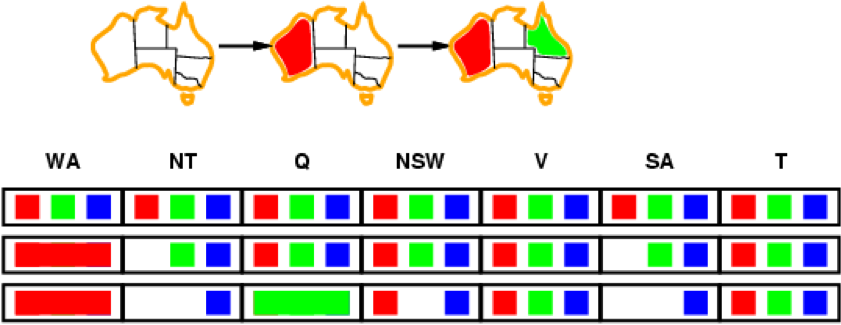

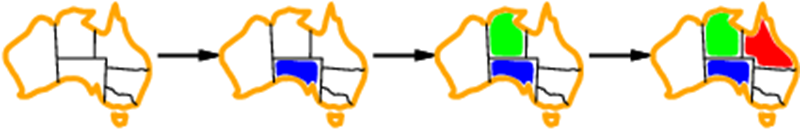

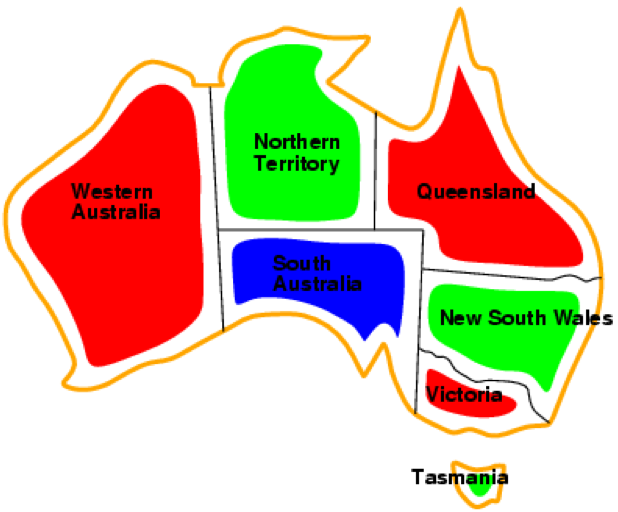

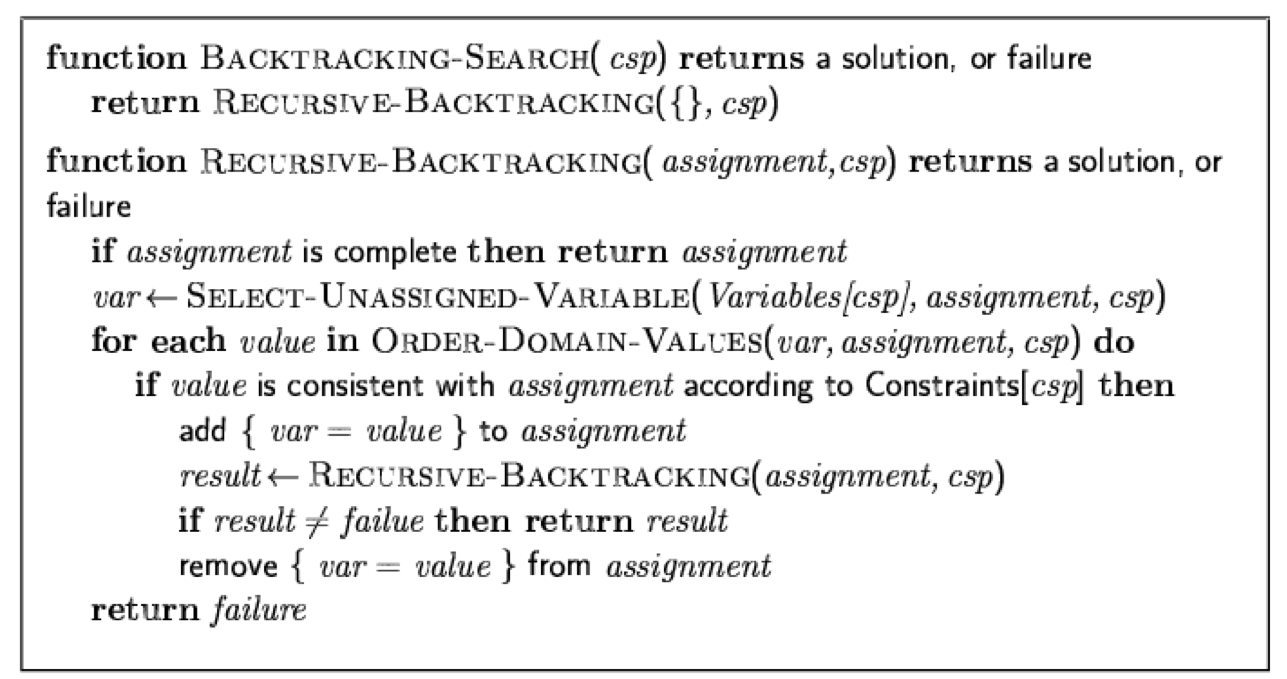

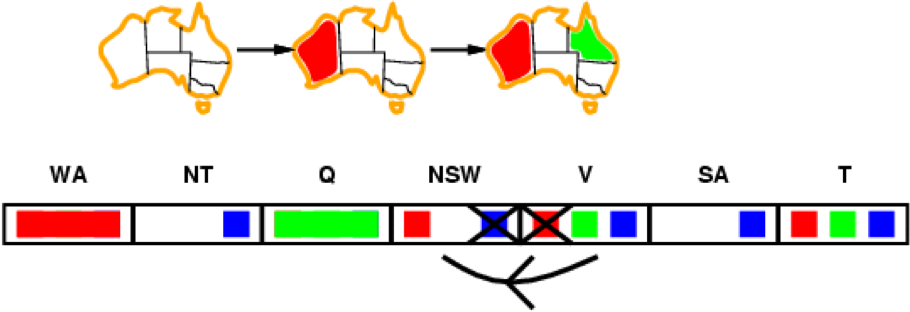

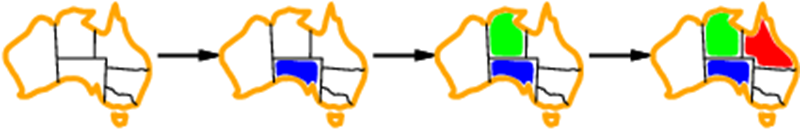

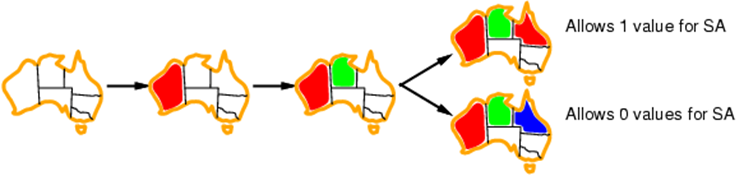

Now we will look at the example of map colouring

-

In map-colouring we try to colour regions with three

different colours such that adjacent regions have a

different colour

-

For this map, the problem is defined as follows:

-

Variables are the regions: WA, NT, Q, NSW, V, SA, T

-

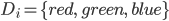

Domains are the three colours:

-

Constraints: adjacent regions must have different

colours

-

For example, WA and NT cannot have the same colour

-

Solutions are complete and consistent assignments

-

Complete: Every variable is assigned a value

-

Consistent: No constraint is violated

-

This is an example solution for the problem:

-

Clearly, every region has a colour and no two adjacent

regions have the same colour, so this solution is complete and consistent

-

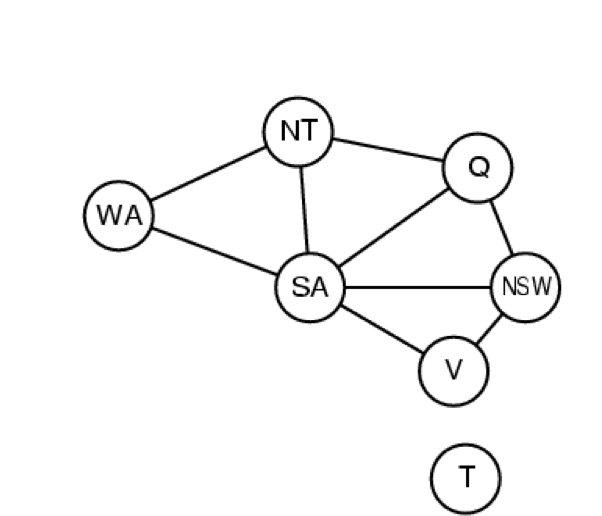

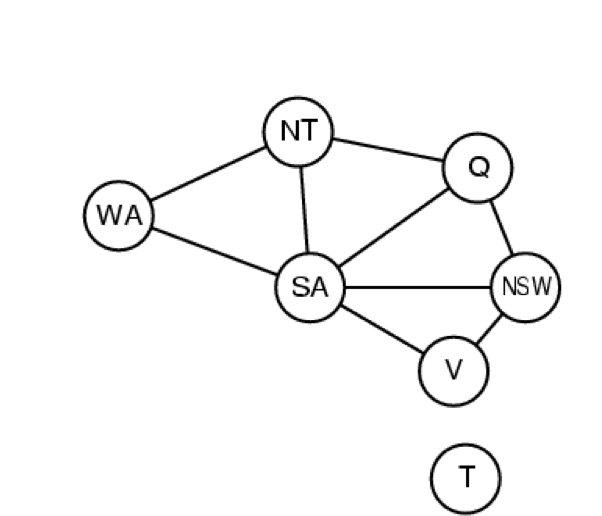

We can also generalise this problem as a constraint

graph

-

The nodes are the variables and the edges are the

constraint

-

No two connected nodes can have the same assignment

-

In addition, the resulting graph is a binary CSP because

each constraint relates two variables, i.e. each edge

connects two nodes

-

CSPs come in different variants

-

If the CSP has discrete variables

-

We have

variables and the domain size is

variables and the domain size is  , therefore there exist

, therefore there exist  complete assignments

complete assignments

-

Examples are boolean CSPs, including boolean satisfiability

(SAT, kind regards from theory of computing) which is NP-complete

-

and the domain is infinite

-

Examples for infinite domains are integers and

strings

-

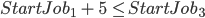

An example for such a CSP is job scheduling where the

variables are the start/end days for each job

-

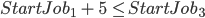

The constraints could be defined like this:

-

If the CSP has continuous variables

-

An example is start/end times for Hubble Space Telescope

observations

-

The constraints are linear constraints solvable in

polynomial time by linear programming

-

Constraints come in different variants as well

-

Unary constraints involve a single variable

-

For example, a certain variable cannot be equal to some

value

-

E.g. SA

green

green

-

Binary constraints involve pairs of variables

-

For example, two variables must not be equal

-

E.g. SA

WA

WA

-

This is the type of constraints we used in our

map-colouring example

-

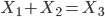

Higher-order constraints involve 3 or more variables

-

For example, the addition of two variables must be equal to

a third variable

-

E.g.

-

Some more real-world CSPs are

-

Assignment problems, e.g. who teaches what class

-

Timetabling problems, e.g. which class is offered when and

where

-

Transportation scheduling

-

Factory scheduling

-

Note that many real-world problems involve real-valued

(continuous) variables

-

Let’s start to develop an algorithm for CSPs

-

We are going to look at a straightforward approach and will

then fix/improve it

-

States are defined by the values assigned so far

-

The initial state is the empty assignment

-

The successor function assigns a value to an unassigned

variable such that there is no conflict

-

This function will fail if there are no legal

assignments

-

The goal test is that the current assignment is complete,

i.e. all variables have been assigned a value

-

This is the same for all CSPs

-

Every solution appears at depth n with n variables

-

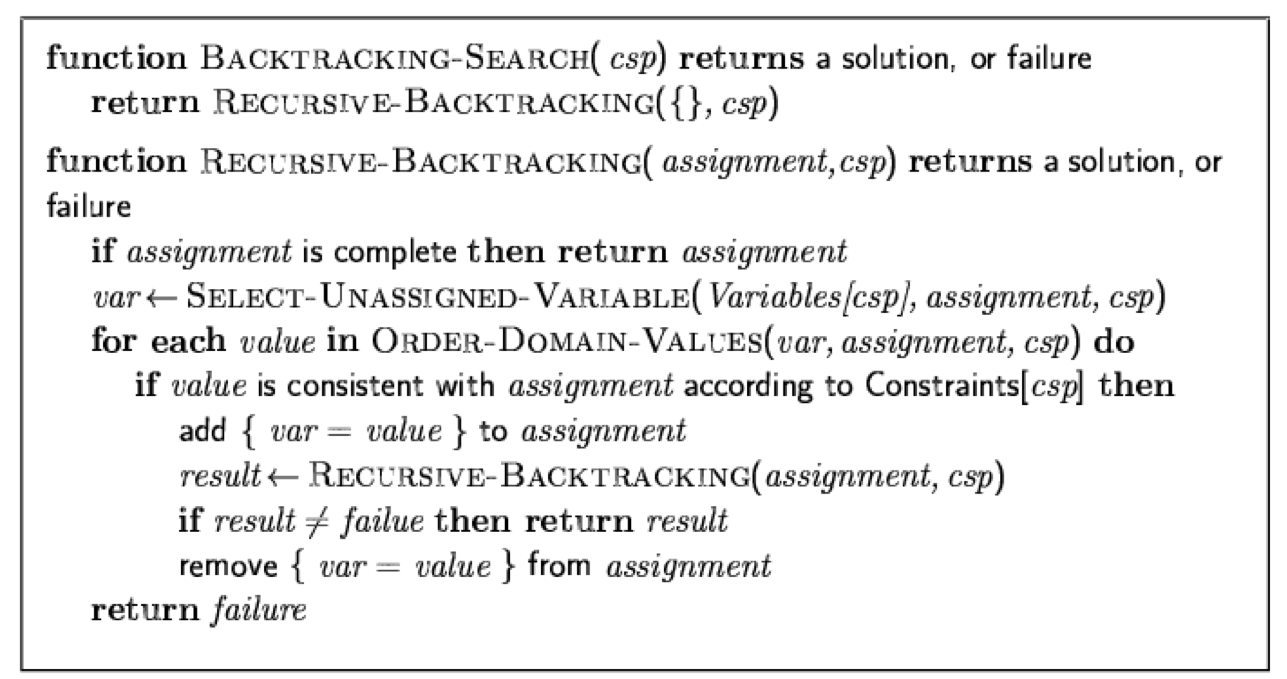

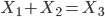

In our first approach we will use backtracking search to

find the solution

-

This is conceptually very similar to depth-first search as

you will see

-

We will use recursion to implement backtracking

search

-

Our inputs are the current variable assignment and the

csp

-

The function will either return a solution or a

failure

-

If the assignment is complete then return the assignment

(solution)

-

This is a base case - we have found the solution!

-

Select any unassigned variable

-

It doesn’t matter which one you select first in order

to find a solution

-

However, it will matter for performance reasons as we are

going to explore later

-

For each value of all values in our domain

-

If the selected variable would be assigned this value: Is

it consistent with the assignment according to constraints,

i.e. does it not violate any constraints?

-

Add it to our assignments

-

Recursively call this function with the new assignments

set, and store whatever it returns as a result

-

If it didn’t return a failure (so it returned a

solution), then return the result

-

If it returned a failure, then remove this particular

assignment from our assignments set

-

This is the backtracking part of the algorithm

-

It means that we have gone down a path that didn’t

lead to a solution, therefore we are going to undo what we

have tried

-

Return a failure since we have already checked all values

for the unassigned variable

-

This is another base case - there doesn’t exist any

solution

-

The main difference between backtracking and DFS is how

they expand nodes

-

DFS expands all successors of a node at the same time, and

puts them on the fringe

-

Backtracking only expands one successor of a node and, if

necessary, will return to this node later to expand further

nodes, if available

-

Backtracking is the basic uninformed algorithm for

CSPs

-

Let’s improve this algorithm

-

Which variable should be assigned next?

-

In what order should its values be tried?

-

Can we detect inevitable failure early?

-

Improving means reducing the amount of search by pruning

the search tree

-

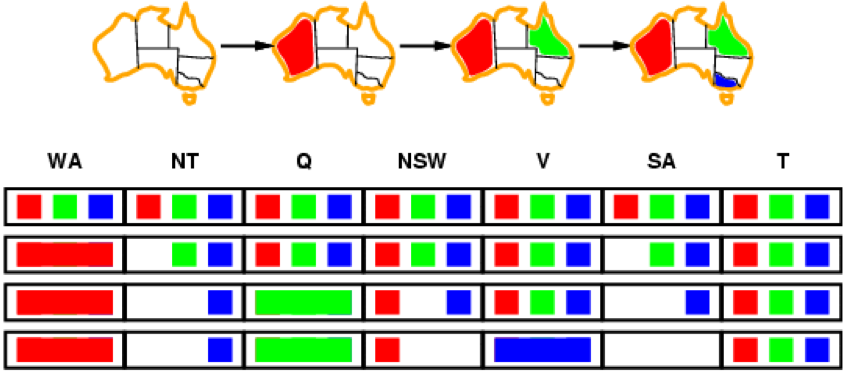

Let’s start with: Which variable should be assigned

next?

-

Improvement: Choosing the most constrained variable

-

It means choosing the variable with the fewest legal values

first

-

This is called a minimum remaining values (MRV)

heuristic

-

Because these are the variables that are most likely to

prune the search tree

-

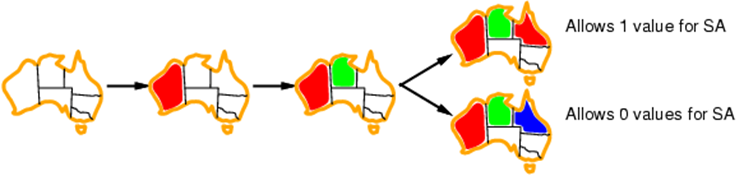

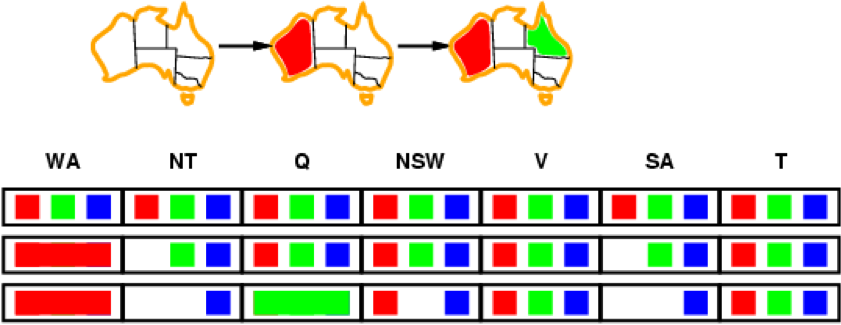

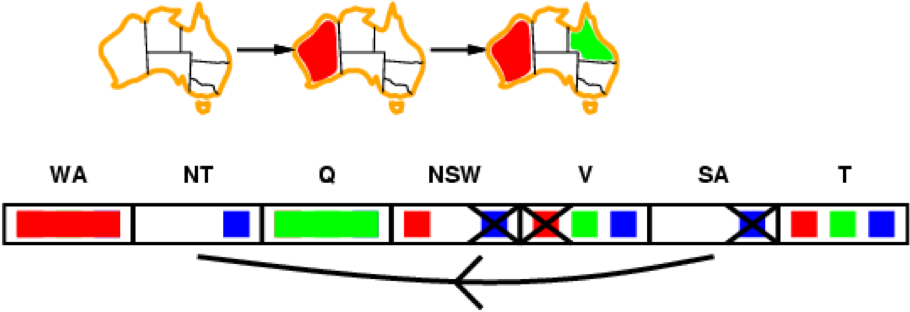

In this example, after we coloured WA red, we want to

continue with either NT or SA because these are the most

constrained territories:

-

Further improvement: Choosing the most constraining variable

-

This is a useful tie-breaker among the most constrained

variables

-

It means choosing the variable with the most constraints on

remaining variables first

-

Similar reason: Because the variable involved in the most

constraints is most likely to prune the search tree

-

In this example, after we coloured SA blue and NT green, we want to continue with Q because it further

constrains the value of NSW

-

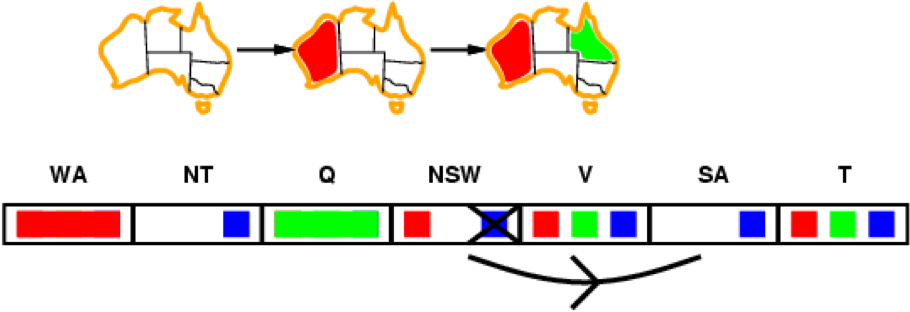

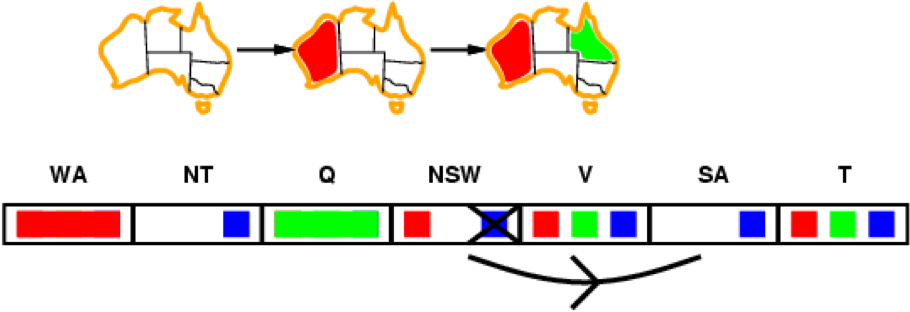

Now think about: In what order should its values be

tried?

-

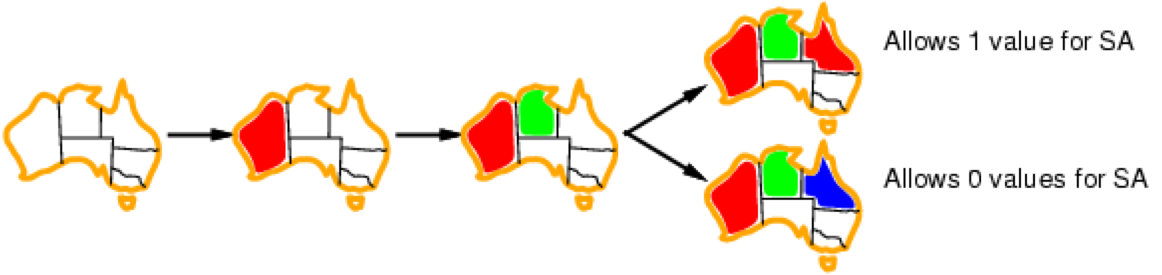

Improvement: Least constraining values

-

Given a variable, assign the least constraining value to

it

-

That is the value that rules out the fewest values in the

neighbouring variables

-

We want to introduce as few restrictions as possible for

our neighbours

-

In this example, we are going to choose red for Q in order

to allow blue for SA

-

With these improvements so far, the 1000-queens problem is

already feasible

-

Now think about: Can we detect inevitable failure

early?

-

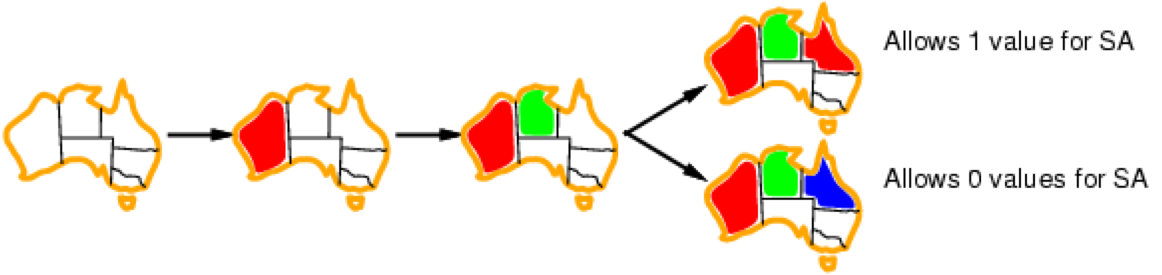

Improvement: Forward checking

-

We are going to keep track of remaining legal values for

all unassigned variables

-

Terminate search as soon as any variable has no legal

values

-

For example, we will terminate the search after 3

assignments have been made because there are no options

remaining for SA

-

However, we do not have early detection for all kind of

failures

-

For example, NT and SA cannot both be blue here:

-

We would eventually find this out via search, but there is

a faster way

-

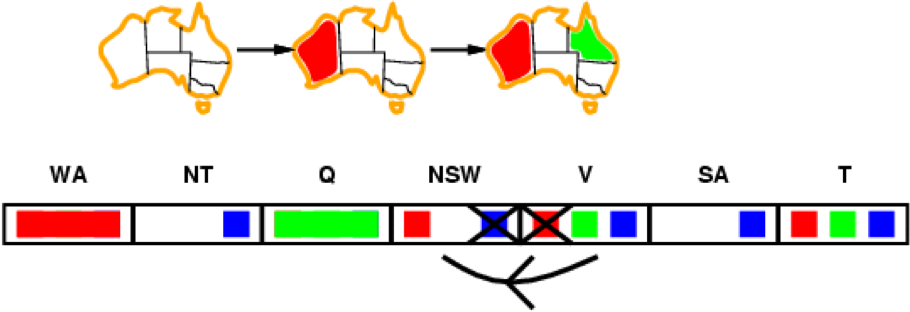

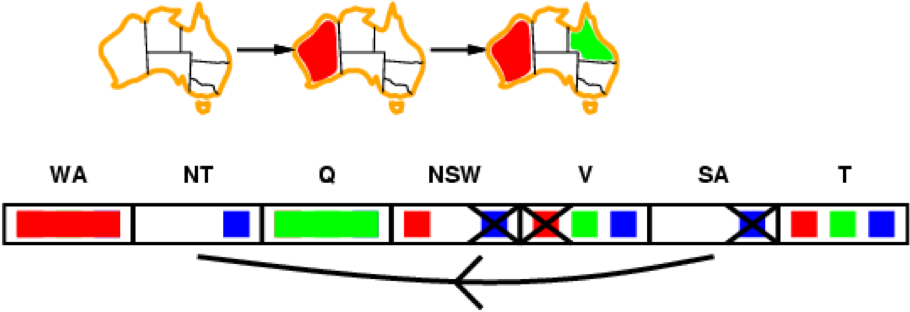

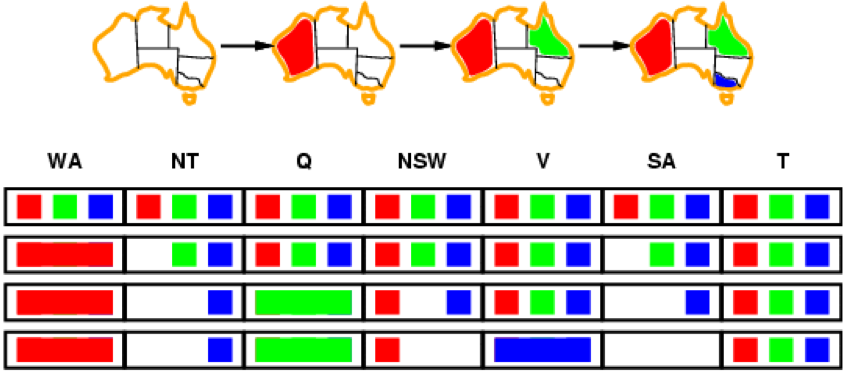

Improvement (of forward checking): Arc consistency

-

We will check the consistency of connected nodes

-

Two connected nodes X → Y are consistent if and only

if for every value x of X there exists some allowed y

-

We will check all neighbours of X every time X loses a

value

-

In our map example, we have arcs between all neighbouring

regions, e.g. between NSW and SA

-

When NSW loses a value, we have to check all neighbours,

which is SA only:

-

If NSW is going to be red, then blue is a valid option for

SA

-

However, if NSW is going to be blue, we have no valid

option for SA

-

Therefore, we can rule out the blue option for NSW

-

Because NSW has just lost a value, we now need to check all

neighbours of NSW

-

V is such a neighbour we need to check

-

We can cross out the red option for V, otherwise there

wouldn’t be a valid option for NSW left

-

SA is the next neighbour we have to check

-

We can now see that we have a problem here: SA and NT both

have only blue remaining, which violates our

constraint

-

Therefore, we can already give up and detect the

failure

-

This is way earlier than if we just used forward

checking

-

A different approach to CSPs is to use local search

-

Hill climbing and simulated annealing typically work with

“complete” states, i.e. states where all

variables are assigned but some constraints are

violated

-

Therefore we have to allow constraint violations for

intermediary states until we reach the goal state

-

We will continuously select a conflicted variable and

change its assignment

-

We will select the variable to change randomly

-

We will select the value for this variable using the

min-conflicts heuristic

-

Choose the value that violates the fewest constraints

-

E.g. hill climb with the fitness function being equal to

the total number of violated constraints

-

For example, in this 4-queens problem we randomly select a

conflicting queen and move it in it’s column such that

we reduce the conflicts the most

-

The number of conflicts is evaluated by our fitness

function h

-

While we are not at a solution

-

Choose a random conflicted queen

-

Pick a row for this queen that has minimum conflicts

-

This strategy works surprisingly well on the n-queens

problem

-

It is almost independent of problem size due to the dense

solution space

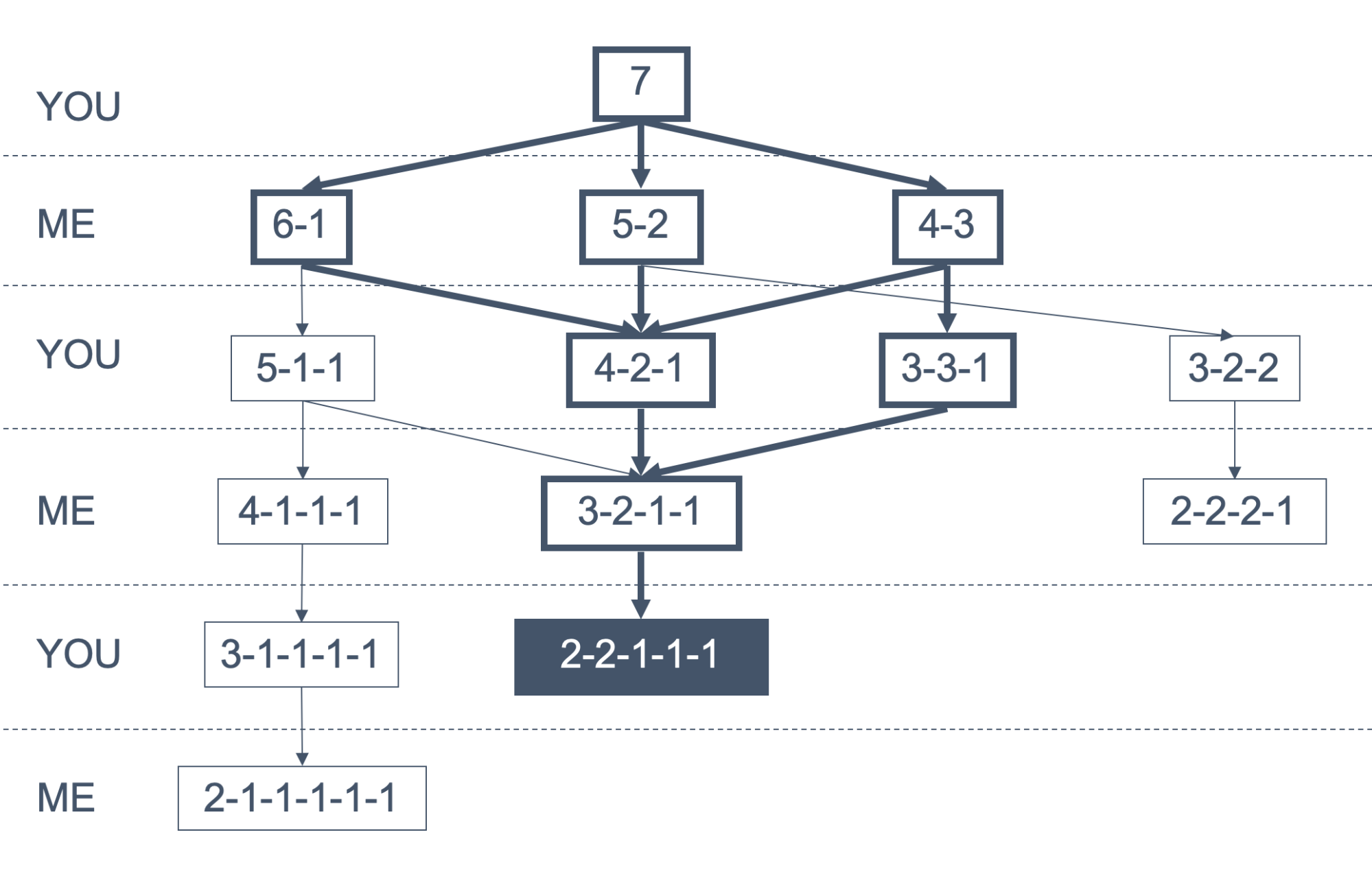

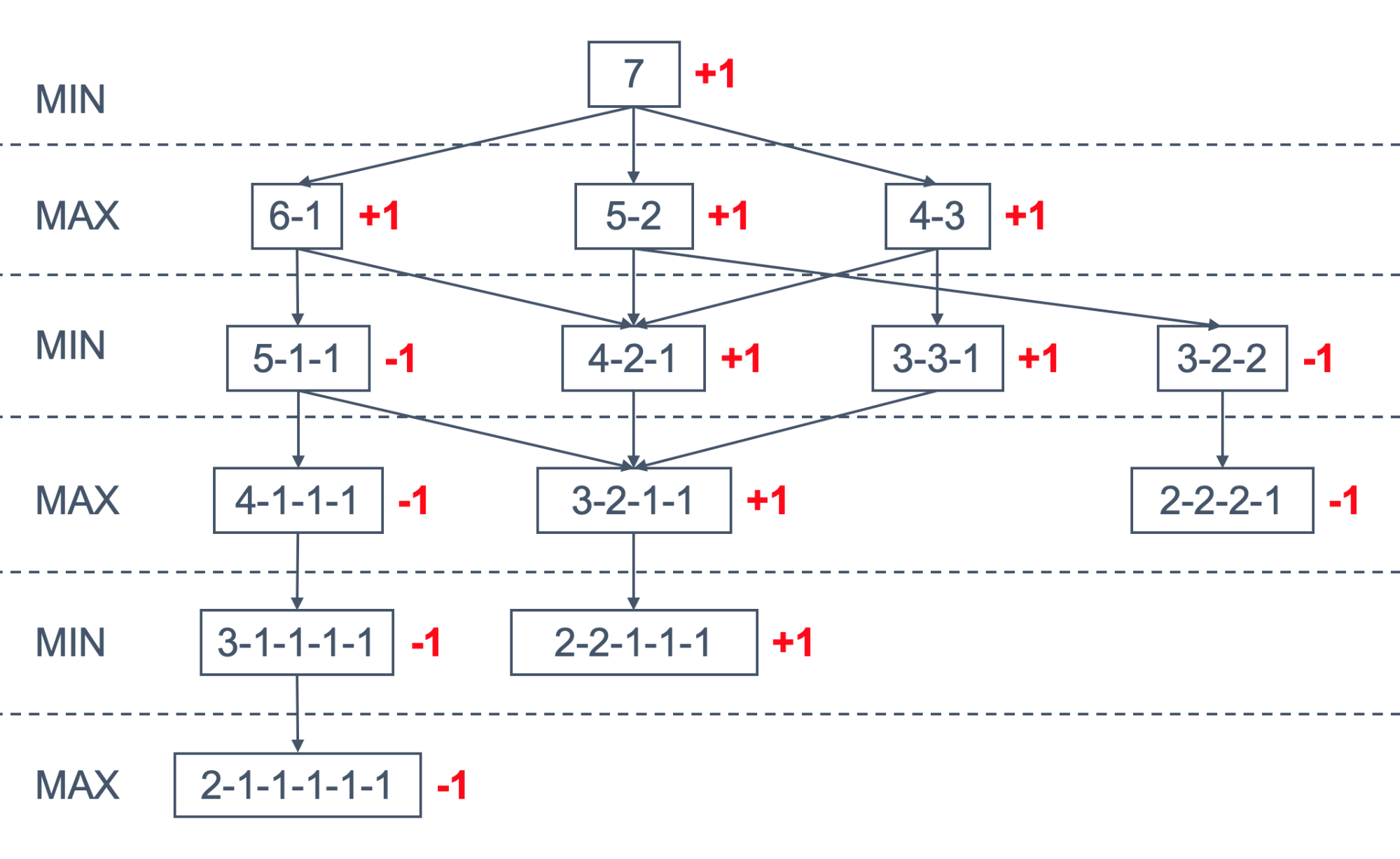

Adversarial Games: Minimax

-

So far, we have concentrated on unopposed search

problems

-

Now we will look at some games with opponents

-

Game playing is a traditional focus for AI

-

We must take account of the opponent

-

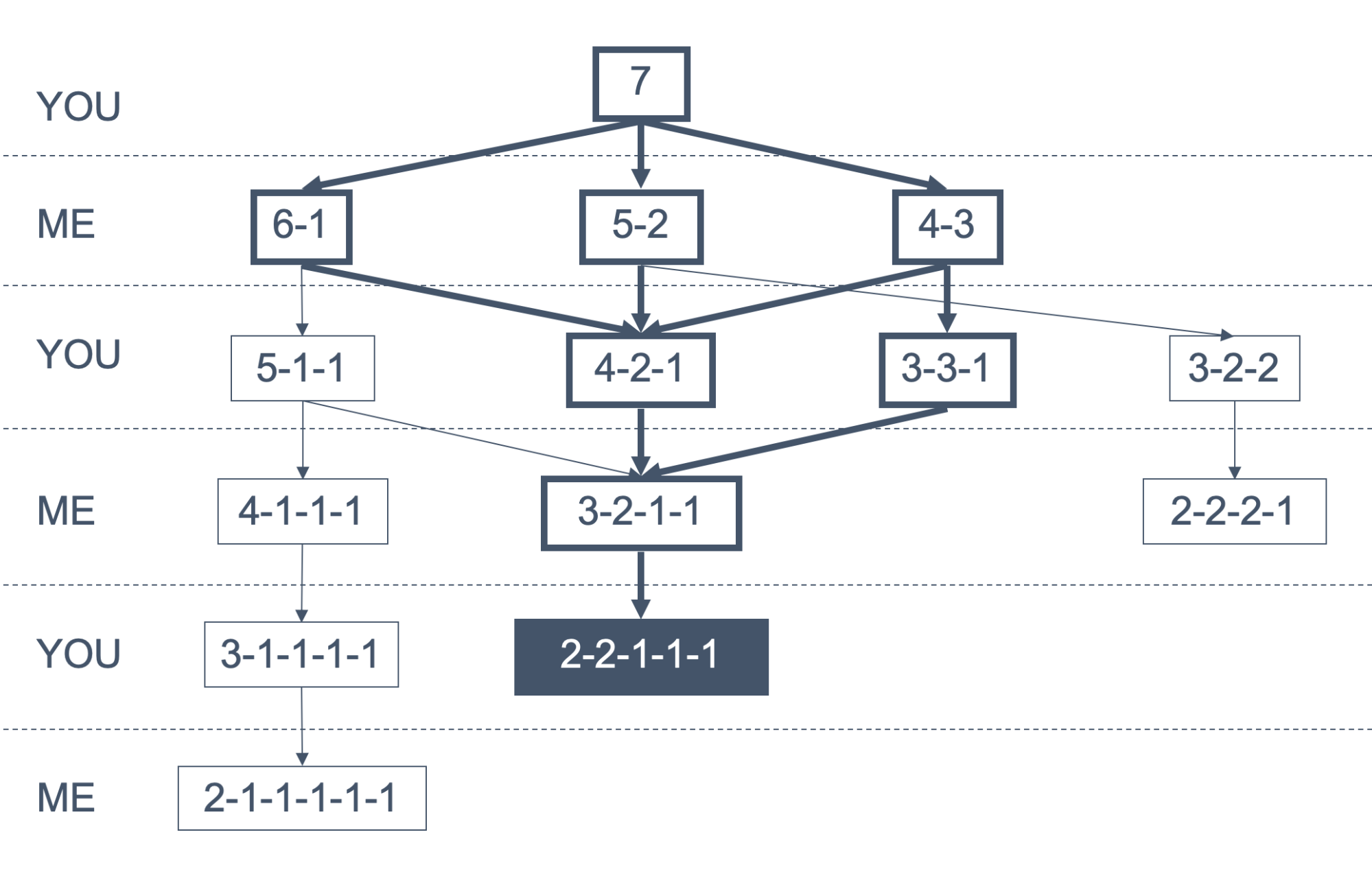

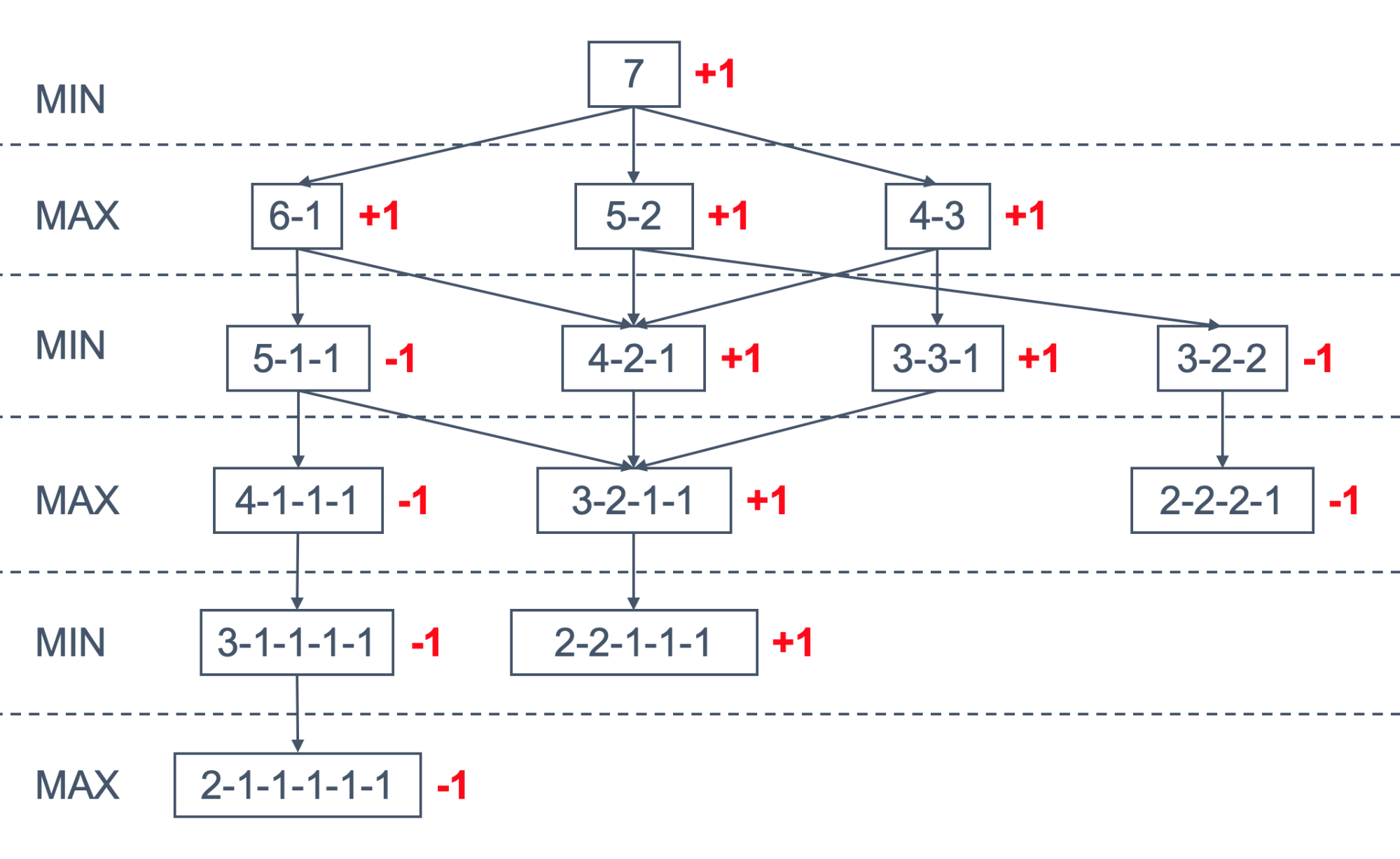

Let’s take a look at the game of nim

-

We start with a pile of seven matches

-

Each player takes it in turn to take a pile of matches and

split into two differently-sized piles of matches

-

The last player who is able to make a move is the winner

-

This is a very small and simple game because there are few

legal states and few legal moves from each state

-

The game doesn’t last long (only a few turns)

-

Player who plays second has an unfair advantage

-

We can construct a tree representing all the states of the game

-

Our strategy is to always stay on the bold path, and to win

by reaching the state with the grey background

-

In general we will use game tree search to win

-

Initial state is the initial board state

-

Goal states are all the winning positions

-

There is one action for each legal move

-

The expand function generates all legal moves

-

The evaluation function assigns a score (fitness) to each

board state

-

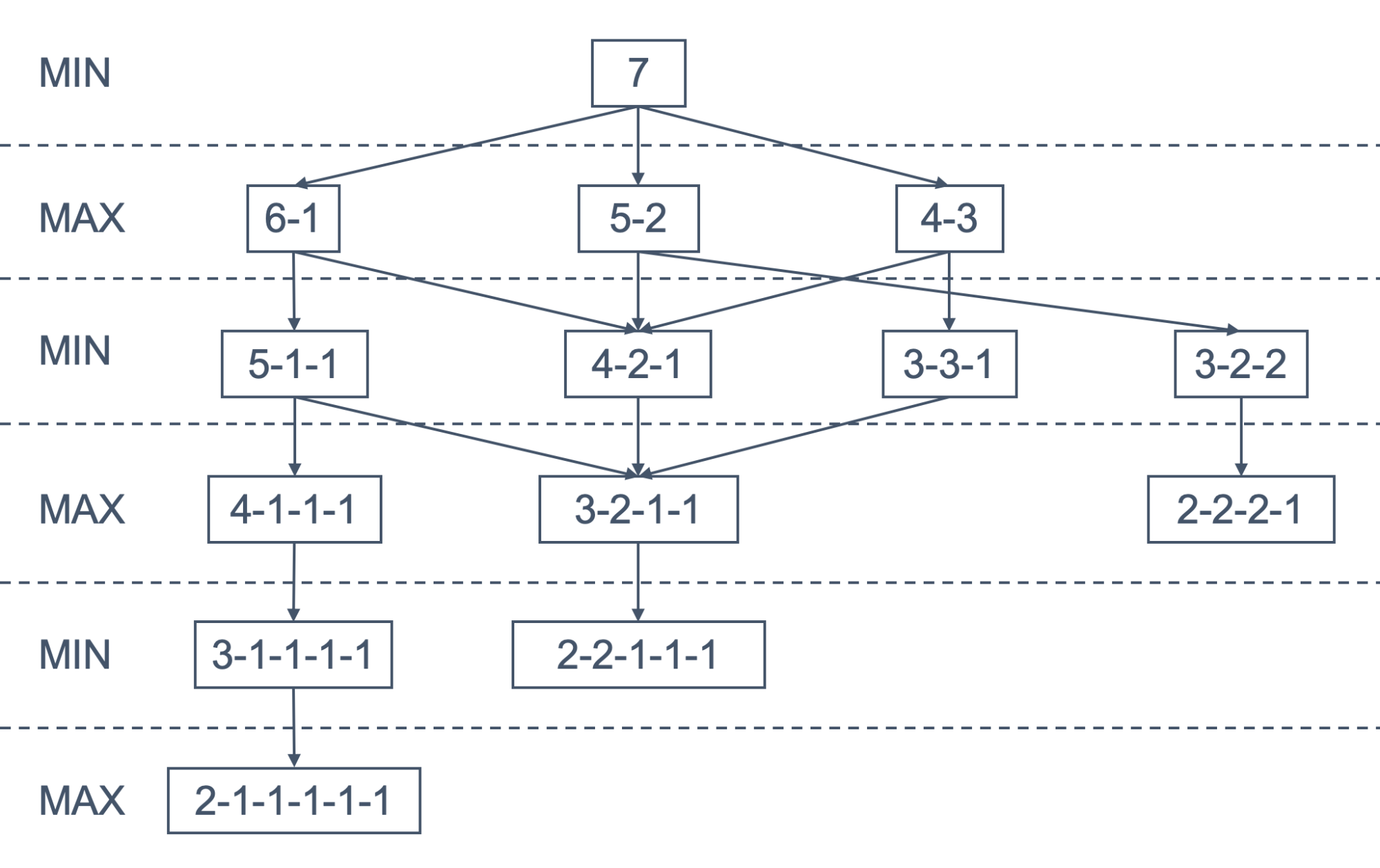

Minimax search is form of game tree search

-

MAX is traditionally the computer player

-

The computer picks the moves that maximise his winning

chances

-

They pick moves that minimise the computer’s winning

chances

-

We can divide the game tree into MIN and MAX nodes

-

In Minimax search we are going to search to a given ply

(depth-limited search)

-

Reason is that the game tree usually is too large to

completely search it

-

We will evaluate the heuristic for leaf nodes and then

propagate the values towards the root of the tree

-

MAX nodes take the maximum of their child values because we

will make the best move we can

-

MIN nodes take the minimum of their child values because we

assume that our adversary makes the move that is worst for

us

-

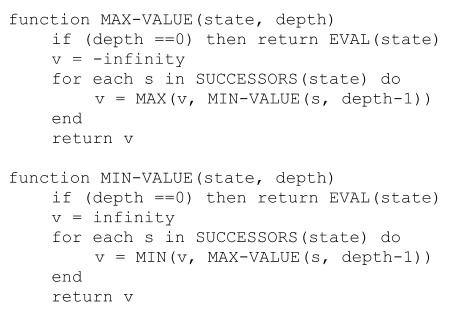

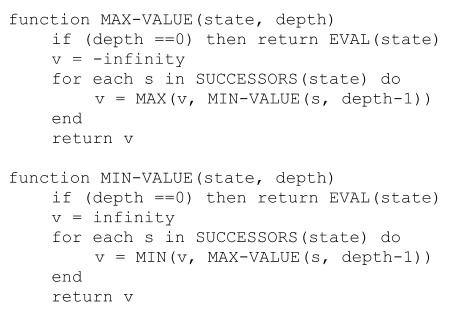

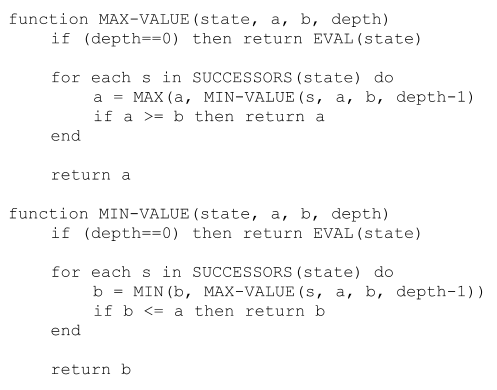

We can write an recursive algorithm for minimax search by

defining functions for max and for min value

-

Here is an animated example

-

If we want to use this strategy for Nim we are going to use

an evaluation function that is

-

+1 for a winning move for MAX

-

-1 for a winning move for MIN

-

We have assigned the values this way by only looking at the

leaf nodes and then back-propagating the values

-

Since the root is +1 we can see that the computer (who is

second) always wins the game

-

Let’s take a look at some properties of minimax

search

|

Complete?

|

Yes (if the tree is finite)

|

|

Time?

|

O(bm)

|

|

Space?

|

O(bm) (depth-first exploration)

|

|

Optimal?

|

Yes (against an optimal opponent)

|

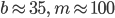

-

For chess,

for “reasonable” games

for “reasonable” games

-

Exaction solution completely infeasible

-

Evaluation functions are typically a linear function in

which coefficients are used to weight game features

-

It is unlikely that there is a perfect, computable

evaluation function for most games

-

Games with uncertainty (backgammon, etc) add notations of

expectation

|

Game

|

Evaluation Function

|

|

Noughts and Crosses

(or Tic-Tac-Toe for the foreigners)

|

The number of potential nought lines minus the

number of potential cross lines

|

|

Chess

|

c1 * material + c2 * pawn structure + c3 * king

safety + c4 * mobility …

Where c1 to c4 are some constants

(weights)

Each of those sub-functions (material etc.) are

other linear functions

|

-

Do you remember that I mentioned “search until a

given ply” earlier?

-

We cannot exhaustively search most game trees because they

are huge

-

Therefore, we can only search to some given ply depth

-

However, significant events may exist just beyond that part

of the tree we have searched

-

This is called the horizon effect

-

The further we look ahead the better our evaluation of a

position

-

If we are searching the game tree to a depth of n ply, what

happens if our opponent is looking n+1 moves ahead?

-

It might be the case that we are fucked have a problem

-

In order to counteract the horizon effect we can use the

following two techniques

|

quiescent search

|

-

Quiescent search is varying the depth limit

of the search

-

For noisy (interesting) states, the tree is

searched deeper

-

For quiet (less interesting) states, the

tree is not searched this far

-

We will use a heuristic function to

distinguish between noisy and quiet

states

|

|

singular extensions

|

-

Explore a move in greater depth if

-

One move is significantly better than the

others

-

There is only one legal move

|

-

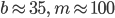

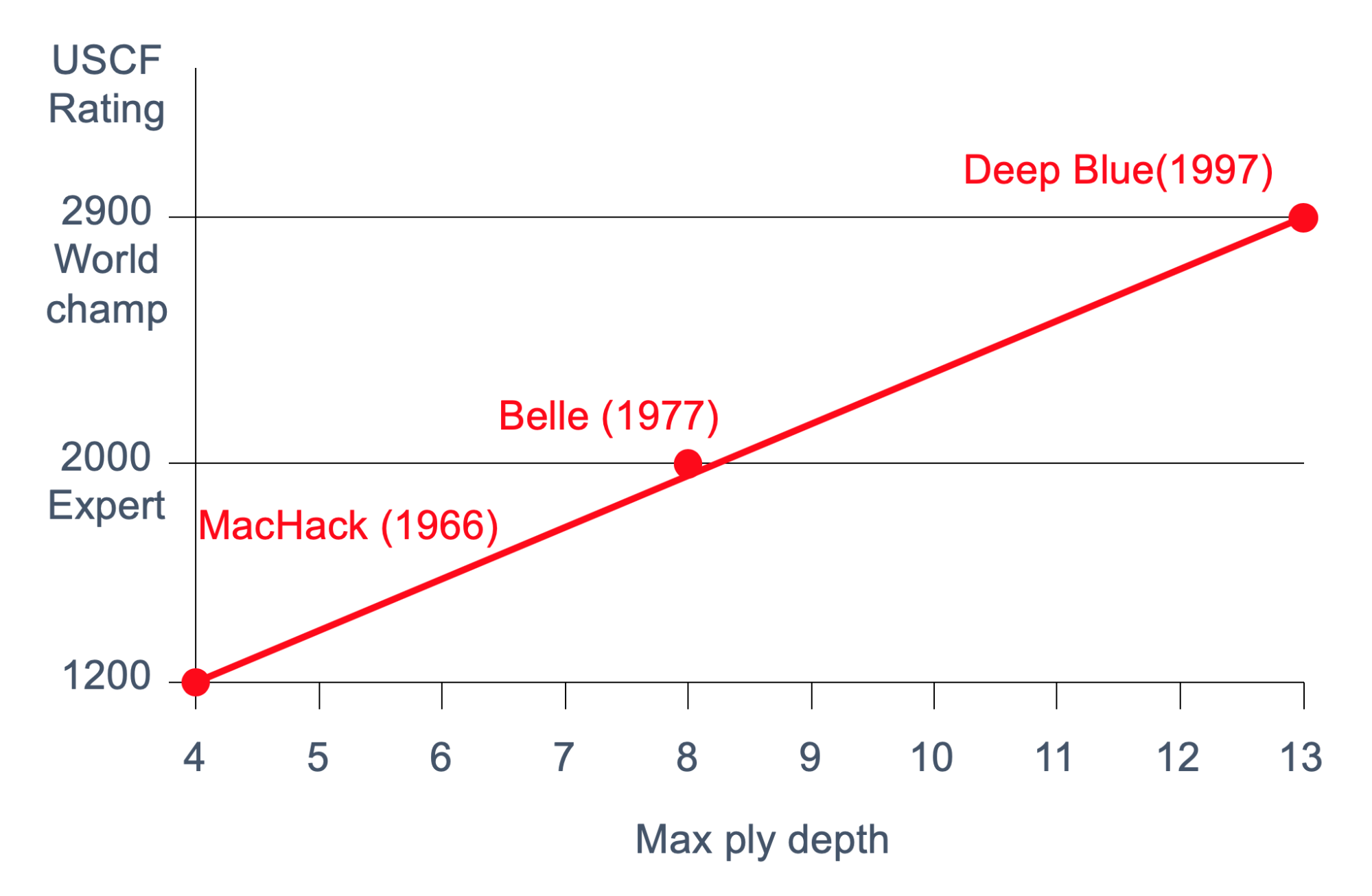

The following graphic illustrates the importance of ply

depth in chess

-

The branching factor of a game is the number of actions

which can be chosen

-

Nim (our first example) has a very low branching factor,

but many games do not

-

Chess: about 36

-

Go: about 200

-

This significantly affects the complexity of decision

making with increasing tree depth

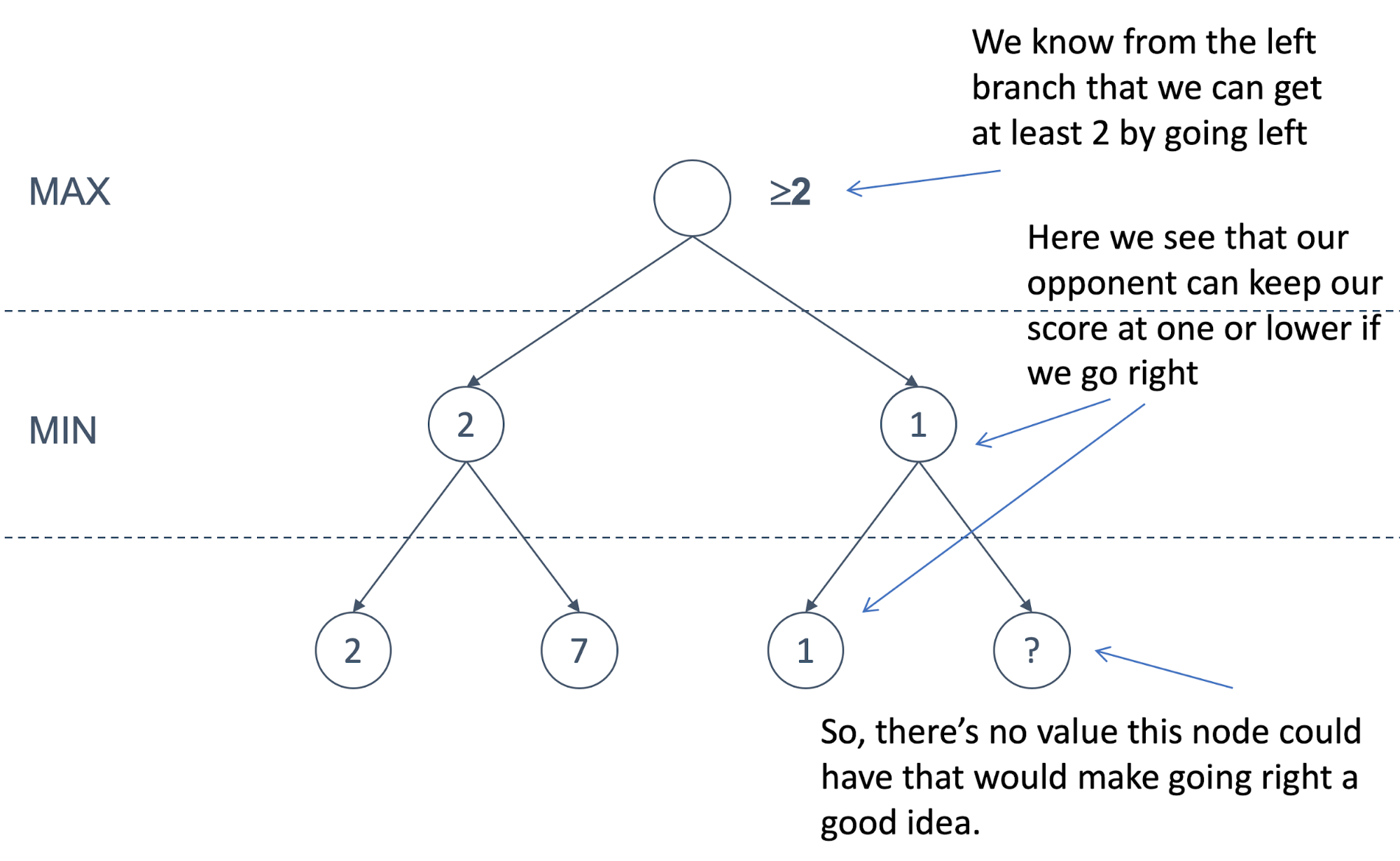

Alpha-beta Pruning

-

We are now going beyond minimax

-

We have strong and weak methods to improve minimax

-

Strong methods take account of the game itself

-

E.g. board symmetry of tic tac toe

-

Weak methods can apply to any game

-

One of those is called alpha-beta pruning

-

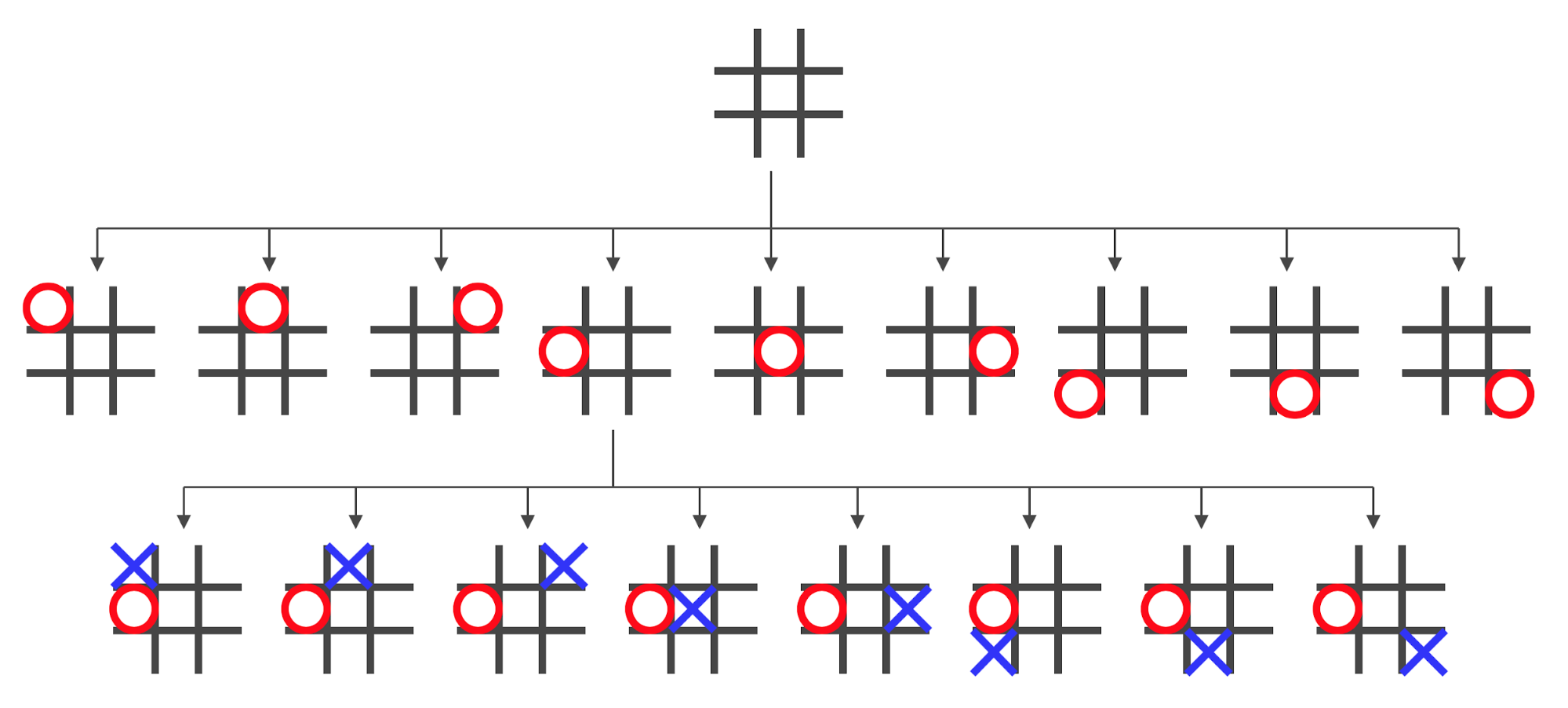

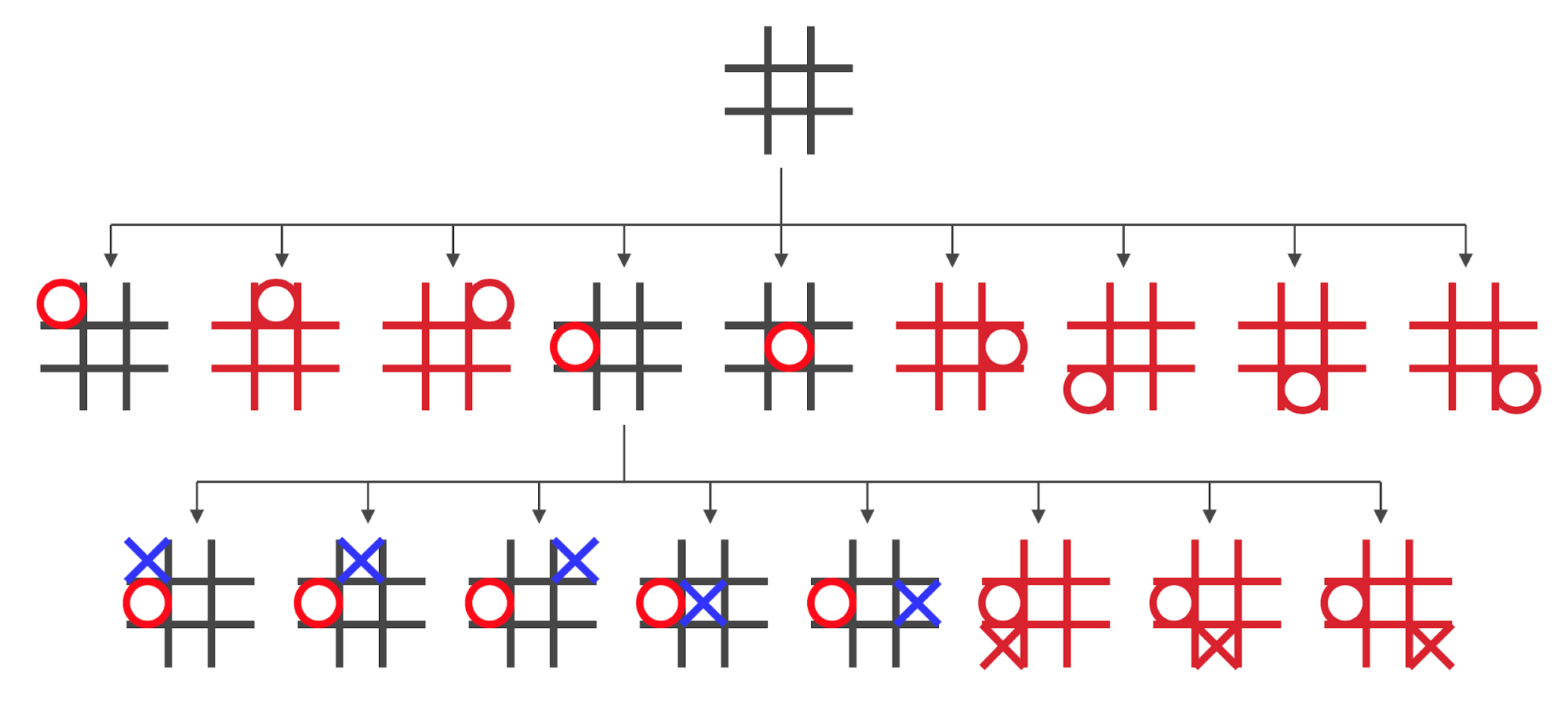

Before we are going to explore alpha-beta pruning we are

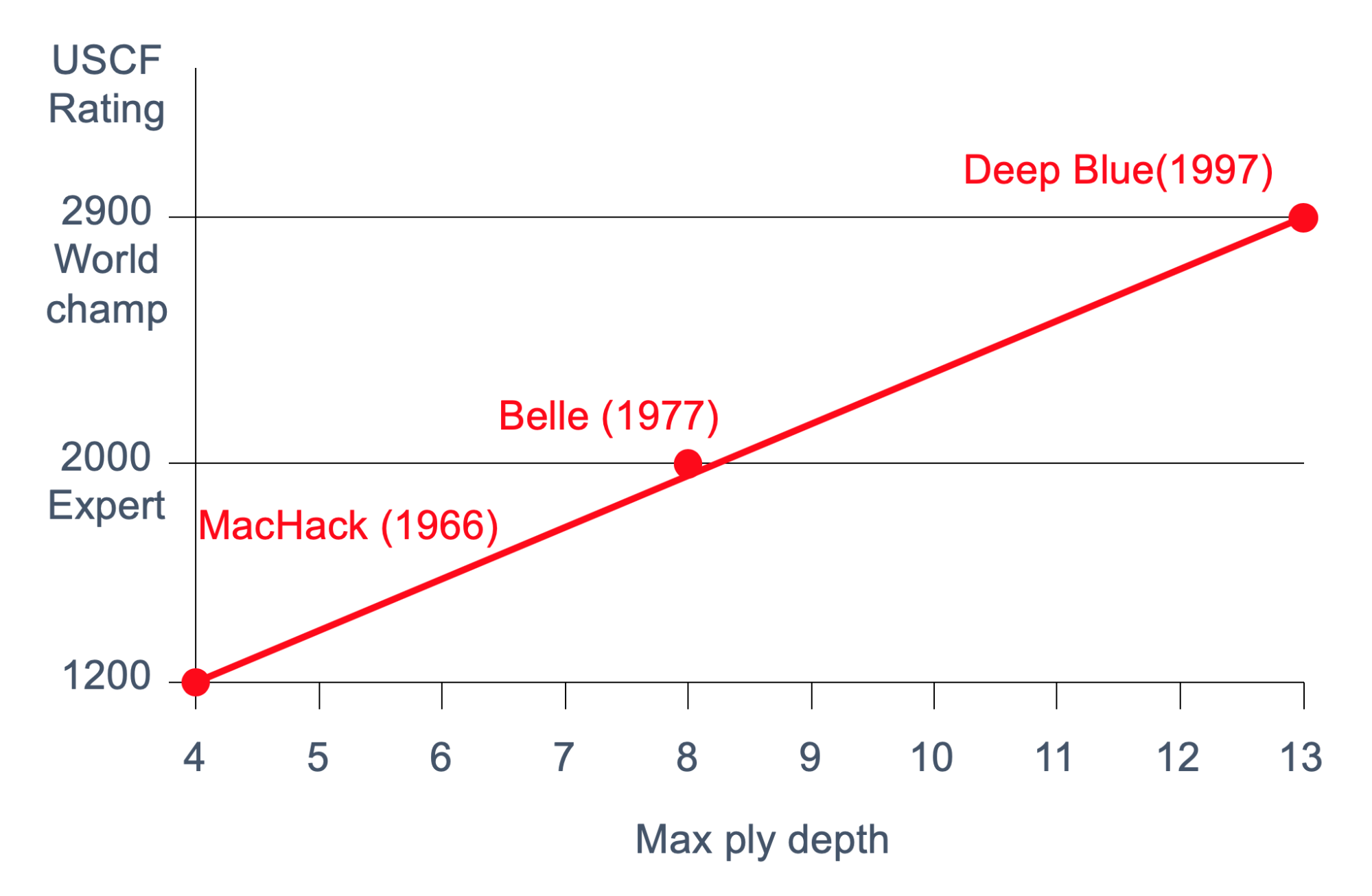

going to take a look at board symmetry of tic tac toe

-

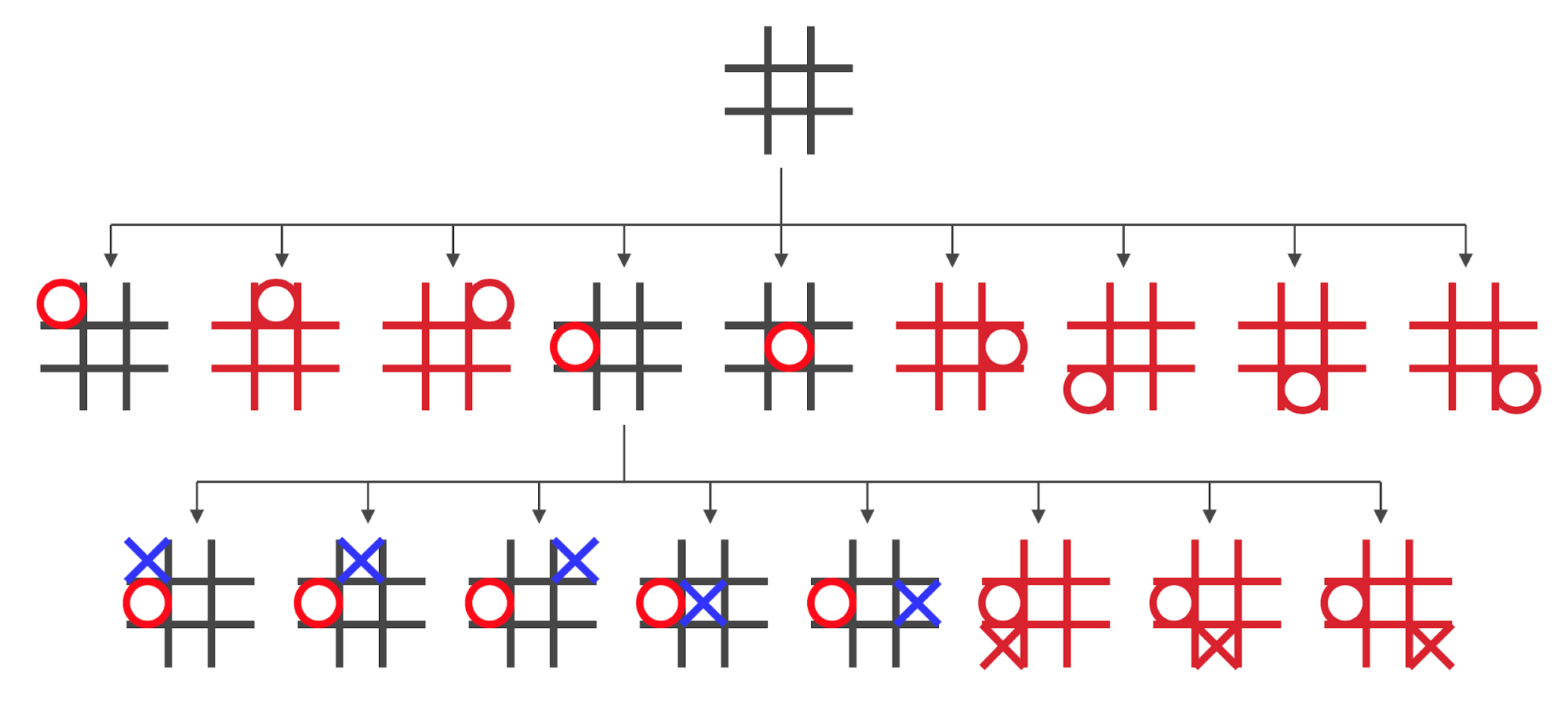

A part of the full game tree looks like this:

-

However, some of those states are redundant: we can achieve

them easily by rotating the game board

-

The redundant states are marked red:

-

By removing the redundant states we can prune the search

tree a lot

-

However, not every game allows for pruning this way,

therefore we need a more general method to reduce the amount

of search…

-

So now we will dive into alpha-beta pruning

-

Minimax explores the entire tree to a given ply depth

-

It evaluates the leaves

-

It propagates the values of the leaves back up the

tree

-

We have seen this procedure in the last chapter

-

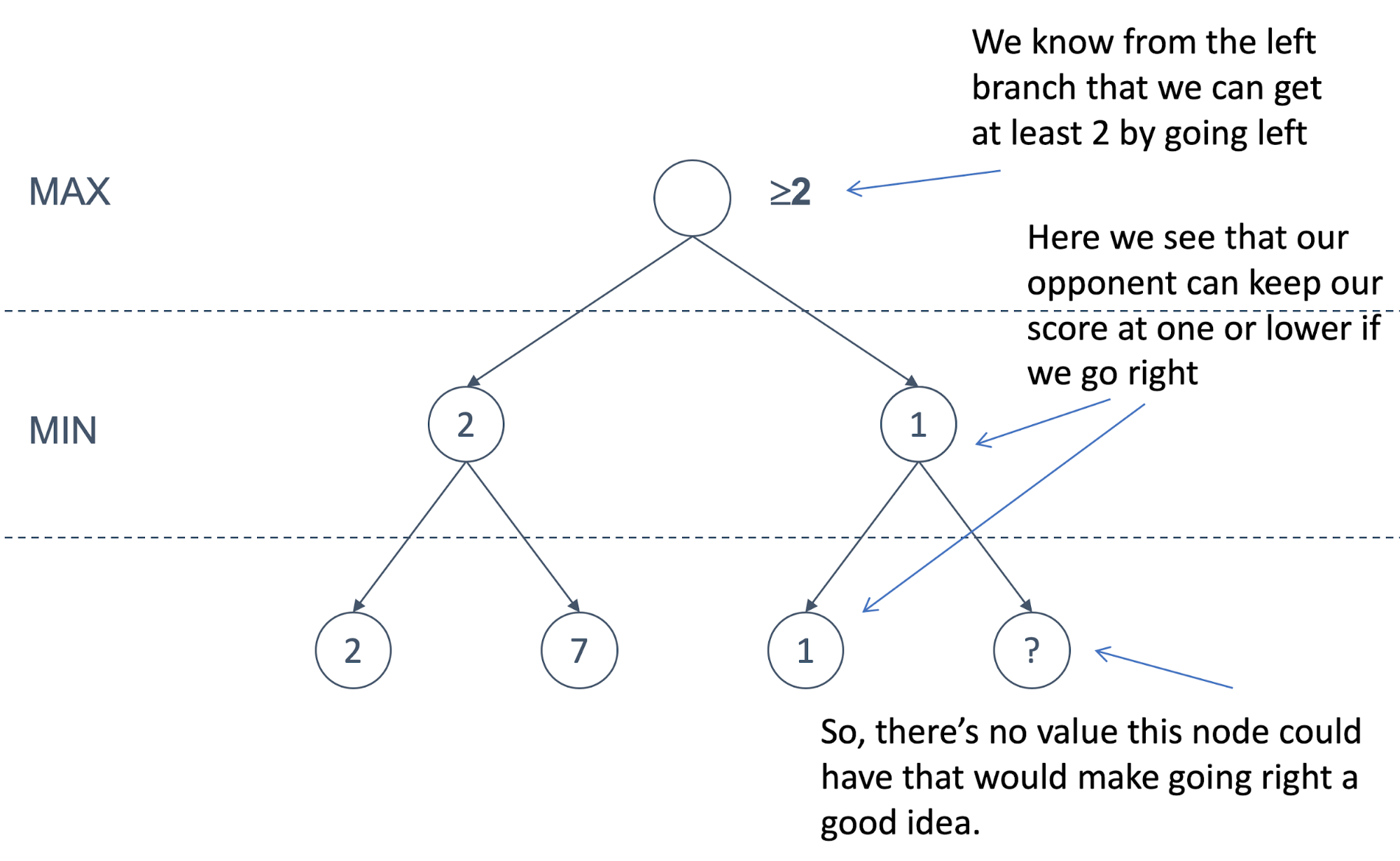

Alpha-beta pruning performs DFS but allows us to

prune/disregard certain branches of the tree

-

Let’s define alpha and beta

-

Alpha represents the lower bound on the node value. It is

the worst we can do

-

It is associated with MAX nodes

-

Since it is the worst, it never decreases

-

Beta represents the upper bound on the node value. It is

the best we can do

-

It is associated with MIN nodes

-

Since it is the best, it never increases

-

If the best we can do on the current branch is less than or

equal to the worst we can do elsewhere, there is no point

continuing on this branch

-

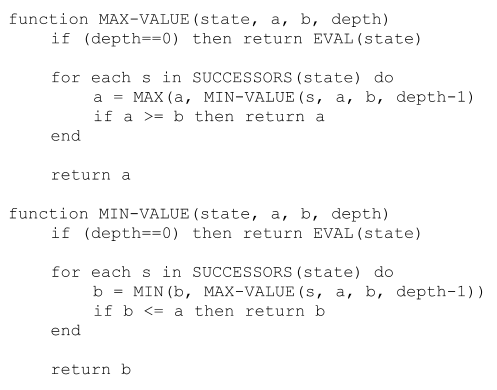

Now we can define recursive functions for calculating alpha

and beta values

-

If you compare this with the minimax search functions we

defined earlier, you can see that these functions are very

similar to them

-

The difference is that when we check the successors we

return immediately if our alpha/beta values allow us to do

so

-

For the max-value function we return alpha if alpha

beta because beta used to be the best we can do so

far, but we have just found a better value.

beta because beta used to be the best we can do so

far, but we have just found a better value.

-

For the min-value function we return beta if beta

alpha because alpha used to be the worst we can do so

far, but we have just found a value that is even

worse.

alpha because alpha used to be the worst we can do so

far, but we have just found a value that is even

worse.

-

Let’s look at an example tree

-

Animation pls

-

Alpha-beta is guaranteed to give the same values as

minimax

-

The only difference is that we can reduce the amount of

search a lot

-

If the tree is ordered, the time complexity is

-

Minimax is

-

This means that we can search twice as deep for the same

effort

-

However, perfect ordering of the tree is not possible

-

If it was we wouldn’t need alpha-beta in the first

place

Planning

-

First define what a plan/planning is

-

A plan is a sequence of actions to perform tasks and

achieve objectives

-

Planning means generating and searching over possible

plans

-

The classical planning environment is fully observable,

deterministic, finite, static and discrete

-

Planning assists humans in practical applications such

as

-

Design and manufacturing

-

Military operations

- Games

-

Space exploration

-

What are the problems/difficulties of planning in the real

world?

-

In the real world we have a huge planning environment

-

We need to think about which parts of this environment are

relevant for our planning problem

-

We need to find good heuristic functions so that we can

reduce the amount of search

-

We need to think about how to decompose the problem

-

In order to define plans, we need a planning language

-

What is a good planning language?

-

It should be expressive enough to describe a wide variety

of problems

-

It should be restrictive enough to allow efficient

algorithms to operate on it

-

The planning algorithm should be able to take advantage of

the logical structure of the problem

-

Examples of languages are

-

The STRIPS(Stanford Research Institute Problem Solver)

model

-

ADL (Action Description Language)

-

What general language features do we want?

-

We have to represent states

-

We do this by decomposing the world in logical conditions

and represent a state as a conjunction of positive

literals

-

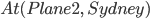

For instance, to represent that one plane is at Melbourne

and the other plane is at Sydney we can write

-

We will assume that everything that is not part of the

model does not exist, i.e. every other predicate not

included is false

-

For instance, the predicate “plane 3 is at

Sydney” is false because it is not in the model

-

This is called closed world assumption

-

We have to represent goals

-

The goal is a partially specified state

-

If the state contains all the literals of the goal then the

goal is satisfied

-

For example, the goal could be defined as

-

We do not care about where plane 1 is, and this goal would

be satisfied with the state given above

-

We have to represent actions

-

Actions consist of a precondition and an effect

-

If the precondition is true, we can use the action to

achieve the effect

-

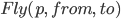

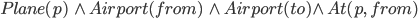

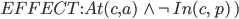

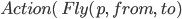

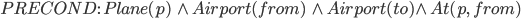

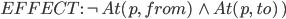

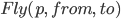

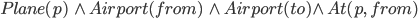

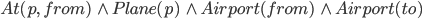

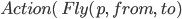

Let’s define an example action Fly:

Action:

Precond:

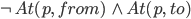

Effect:

-

The precondition says “p must be a plane and from must be an airport and to must be an airport and p must be at from”

-

As you can see we also have to do something like type

checking in the precondition because the definition of the

action does not specify any types

-

The effect is then “p will not be at from and p will be at to”

-

How do actions affect states?

-

An action can be executed in any state that satisfies its

precondition

-

There needs to exist a substitution for all variables of

the precondition

-

For example for the action fly we need to substitute p,

from and to

-

When we execute the action it will change some part of the

state

-

We will add any positive literal in the effect of the

action to the new state

-

We will remove any negative literal in the effect of the

action from the new state

-

We will not change any literal that is not in the effect,

i.e. every literal not in the effect remains unchanged

-

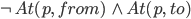

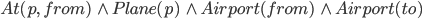

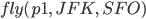

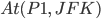

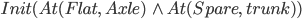

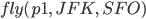

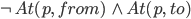

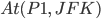

For example, given the following state:

-

We can execute fly because the state satisfies the

precondition for p = p1, from = JFK and to = SFO:

-

Let’s execute

-

The effect is

-

And therefore the resulting state is

-

Note that we added

and we removed

and we removed  due to the effect of the action

due to the effect of the action

-

Let’s look at some more examples

|

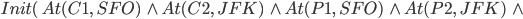

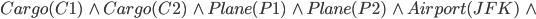

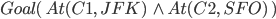

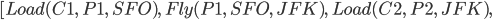

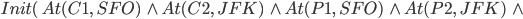

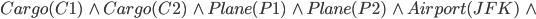

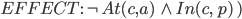

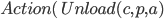

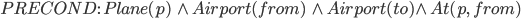

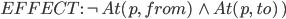

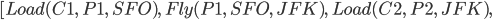

Air cargo transport

|

|

Initial State

|

|

|

Goal State

|

|

|

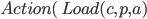

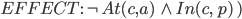

Actions

|

|

|

|

|

|

|

Example plan

|

|

|

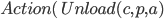

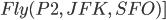

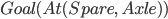

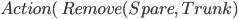

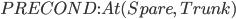

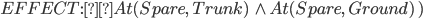

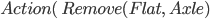

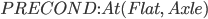

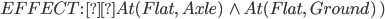

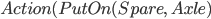

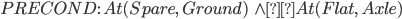

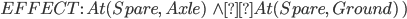

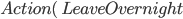

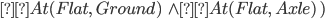

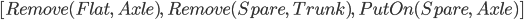

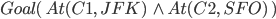

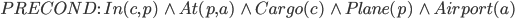

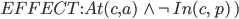

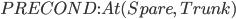

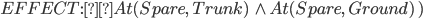

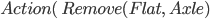

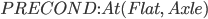

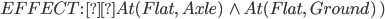

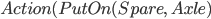

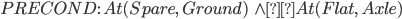

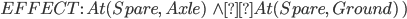

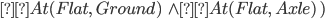

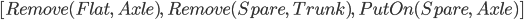

Spare tire problem

|

|

Initial State

|

|

|

Goal State

|

|

|

Actions

|

|

|

|

|

|

|

|

|

|

|

|

Example plan

|

|

-

How can we design an algorithm that comes up with a

plan?

-

There are two main approaches: forward and backward

search

-

Progression planners do forward state-space search

-

They consider the effect of all possible actions in a given

state

-

They search from the initial state to the goal state

-

Regression planners do backward state-space search

-

To achieve a goal, what must have been true in the previous

state?

-

They search from the goal state to the initial state

-

Progression algorithm is nothing more than any graph search

algorithm that is complete, e.g. A*

-

Regression algorithm

-

How do we determine predecessors of actions?

-

We have to find actions which will have an effect that

satisfies the pre-conditions of the current action

-

Actions must not undo desired literals

-

The main advantage is that only relevant actions are

considered

-

Therefore, the branching factor is often much lower than

forward search

-

Heuristics for progression and regression algorithms

-

Neither progression nor regression are very efficient without a good heuristic

-

The heuristic could be the number of actions needed to

achieve the goal

-

The exact solution is NP hard, therefore we should try to

find a good estimate

-

There are two approaches to find admissible

heuristic:

-

The optimal solution to the relaxed problem, e.g. where we

remove all preconditions from actions

-

The sub-goal independence assumption: The cost of solving a

conjunction of subgoals is approximated by the sum of the

costs of solving the sub-problems independently.

Partial-Order Planning (POP)

-

Progression and regression planning are totally ordered

plan search forms

-

They cannot take advantage of problem decomposition

-

Decisions must be made on how to sequence actions on all

the subproblems

-

We can improve this by specifying some actions which can be

done in parallel

-

For these actions it doesn’t matter which one comes

first

-

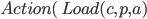

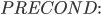

Let’s look at an example to understand this

concept

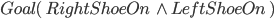

|

Shoe example

|

|

Initial State

|

|

|

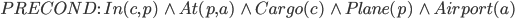

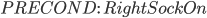

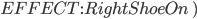

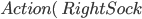

Goal State

|

|

|

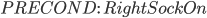

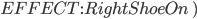

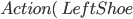

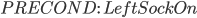

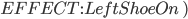

Actions

|

|

|

|

|

|

|

|

-

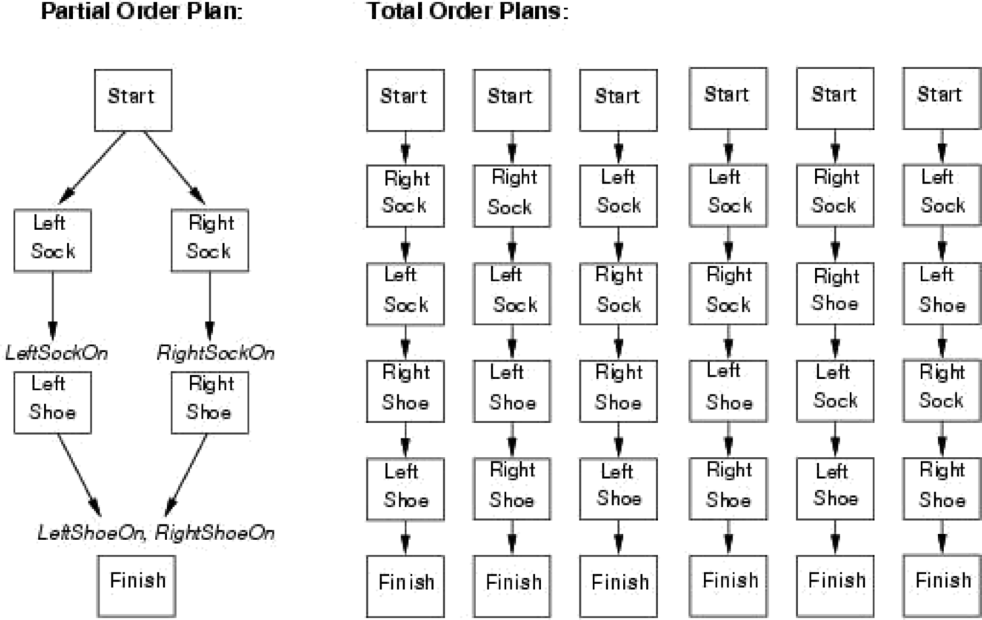

We want our planner to combine two action sequences

-

LeftSock and LeftShoe

-

RightSock and RightShoe

-

Therefore our planner can create a partially ordered

plan

-

A partially ordered plan contains actions which can be done

in parallel, without fixing which action comes first

-

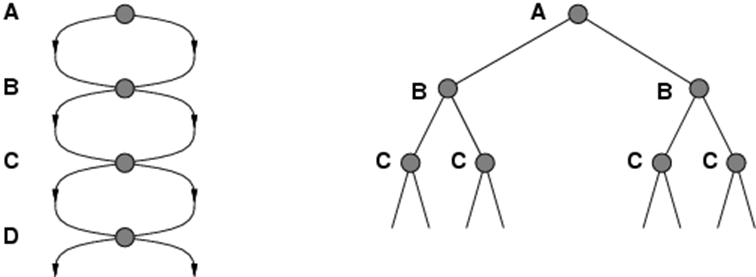

Here is a comparison between a PO (partially ordered) and a

TO (totally ordered) plan:

-

Let’s look at POP states in a search tree

-

Each state in the tree is a mostly unfinished plan

-

At the root of the tree we have an empty plan containing

only start and finish actions

-

Each plan has 4 components

-

A set of actions (steps of the plan)

-

A set of ordering constraints

-

A set of causal links written as

or A → p → B

or A → p → B

-

A set of open preconditions

-

If the precondition is not achieved by any action in the

plan

-

For our shoe example, a final plan would look like

this:

-

Actions = {Rightsock, Rightshoe, Leftsock, Leftshoe, Start,

Finish}

-

Those are all the actions that are available plus a Start

and Finish action

-

Orderings = {Rightsock < Rightshoe; Leftsock <

Leftshoe}

-

Read as “Rightsock has to come before

Rightshoe”

-

Links = {Rightsock → Rightsockon → Rightshoe,

Leftsock → Leftsockon → Leftshoe, Rightshoe →

Rightshoeon → Finish, …}

-

Read as “Rightsock achieves Rightsockon for

Rightshoe”

-

When is a plan a solution, i.e. when is a plan final?

-

A consistent plan with no open preconditions is a

solution

-

A plan is consistent when there are no cycles in the

ordering constraints and no conflicts with the links

-

A POP is executed by repeatedly choosing any of the

possible next actions

-

Next, we are going to look at search in POP space

-

We will start with the initial plan that only

contains

-

Actions Start and Finish

-

No causal links

-

All preconditions in Finish are open

-

Picks one open precondition p on an action B

-

Generates a successor plan for every possible consistent

way of choosing action A that achieves p

-

More on how to generate a successor plan a bit later

-

We need to test if we have reached the goal

-

Why should we search in POP space rather than FOP

(fully-ordered) space?

-

The number of fully-ordered plans can be exponentially more

than the number of partially ordered plans

-

Look at the sock example above, there is one POP but 8

FOPs

-

Therefore, searching in space of POPs is much more

efficient because the search space is much smaller

-

Also, when the plan is executed, there is more flexibility

because there is no strict order of all actions like in a

FOP

-

How do we generate a successor plan (a successor node in

the search tree)

-

We have to add the causal link A → p → B

-

We have to add the ordering constraint A < B

-

If A is new we also add Start < A and A <

Finish

-

Try to resolve any conflicts between the new causal

link and all existing actions.

-

If A is new also try to resolve any conflicts between A and

all existing causal links

-

Here is a summary how to process POP

- Operators

-

Add link from existing plan to open precondition

-

Add a step to fulfill an open condition

-

Order one step with regards to another to remove possible

conflicts

-

Gradually move from incomplete/vague plans to

complete/correct plans

-

Backtrack if an open condition is unachievable or if a

conflict is irresolvable

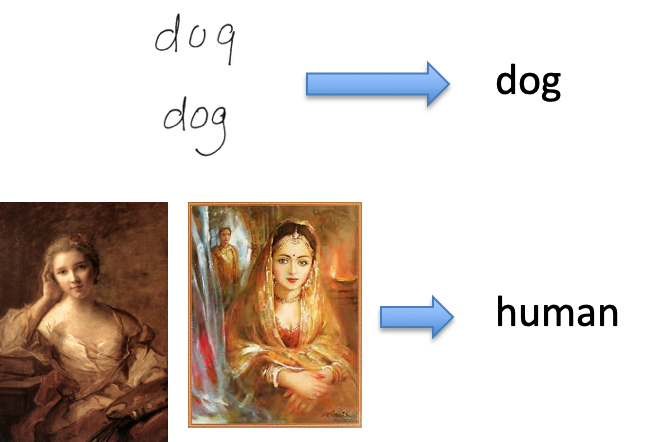

Recent Advances in AI

Classification

-

We want to recognise the type of situation we are in right

now:

-

Credit card transaction: Is it a fraud or not?

-

Autonomous weapons: Should it shoot or not?

-

There are two main approaches, top-down and bottom-up

-

Top-down takes inspiration from higher abstract

levels

-

Bottom-up takes inspiration from biology like neural

networks

Decision Trees

-

A decision problem is a problem that can be represented as

a yes-or-no question.

-

How do we humans get an answer to such a decision problem,

like checking whether a person shown in a picture is a

professor or not?

-

We check certain attributes of the person shown in the

picture

-

For example clothes, beard, glasses, etc.

-

We check them in a certain order

-

We keep checking them until we are certain that we can make

a decision

-

Decision trees work exactly like that

-

The main idea is to check some attributes in some specific

order until the algorithm can make a yes/no decision

-

A decision tree takes a series of inputs defining a

situation, and outputs a binary

decision/classification

-

A decision tree spells out an order for checking the

properties (attributes) of the situation until we have

enough information to make a decision

-

It is a top-down classification algorithm as we start at

the root of the tree and work down towards reaching a

leaf

-

We use the observable attributes to predict the

answer/outcome

-

In which ordering do we check the attributes?

-

This is an important question as we want to check as least

attributes as possible to make a decision

-

We can achieve this by first checking those attributes from

which we learn the most

-

We want to gradually reduce our uncertainty until we can

make a decision

-

We want to do this as quick as possible

-

So we should choose the attribute first that provides the

highest information gain

-

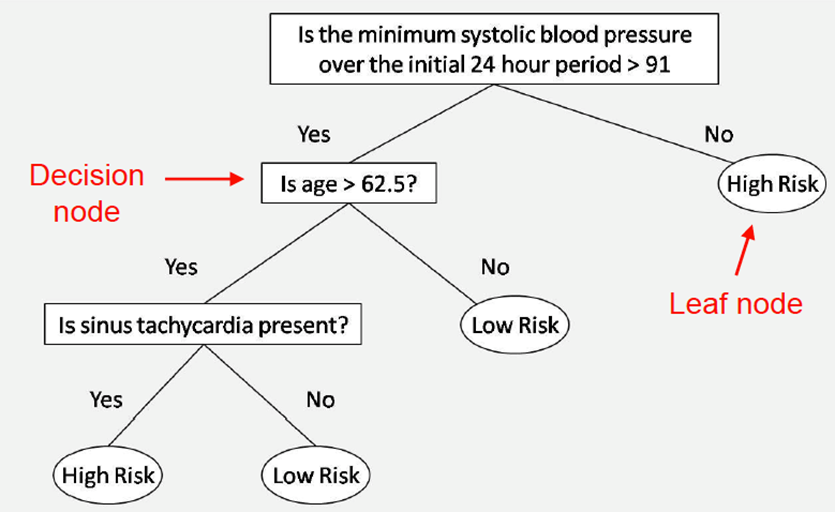

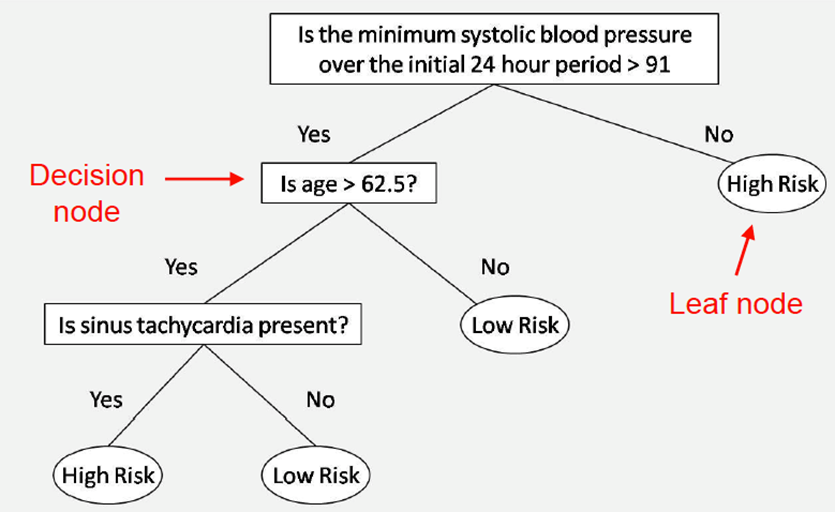

This example decision tree is about a high or low risk of

getting an STD:

-

So why are the questions ordered in this particular

way?

-

For example, in the above example, why don’t we ask

about sinus tachycardia before asking about systolic blood

pressure?

-

The answer is because we learn a lot more about our input

by asking that question, so we ask it first.

-

This question provides the highest information gain

-

Alright, that’s pretty easy. But what if we have a

problem with 1000 attributes? Are we going to read each one

and pick out an order? We’ll be there all day!

-

Instead, we will define how to calculate the information

gain, which we will use to define the order of

attributes.

-

But first, we need to define the entropy and the

conditional entropy.

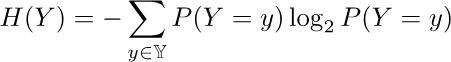

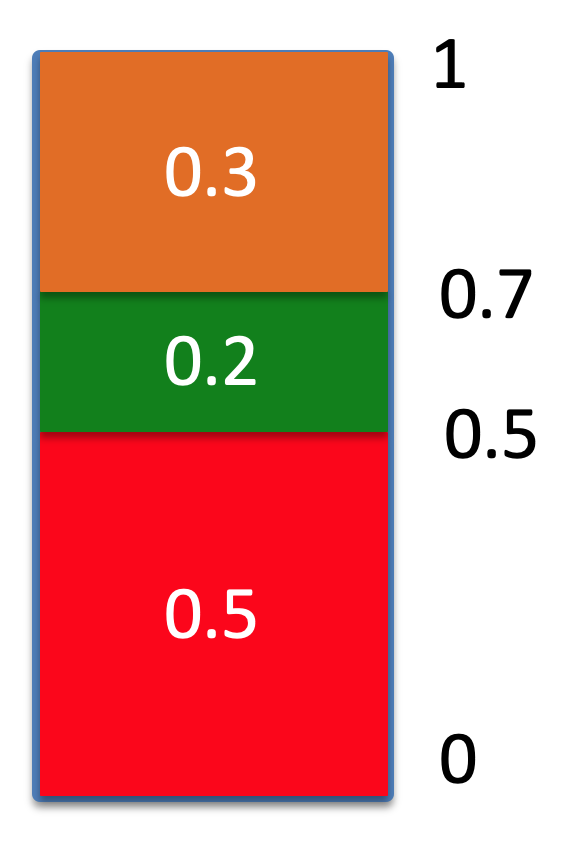

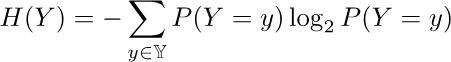

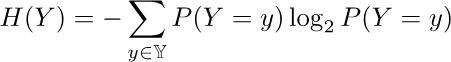

Entropy

-

Entropy is a measure of how much uncertainty there exists

in a system.

-

The equation goes like this:

-

Where we input a set of probabilities that represent our

problem X into H like

and you get the entropy in “bits”.

and you get the entropy in “bits”.

-

A bit is simply a unit for entropy; the higher it is, the

more uncertain is the event. When we are certain, the

entropy is 0.

-

For example, if we have the following table for the weather

with respective probabilities:

|

City

|

Good

|

OK

|

Terrible

|

|

Birmingham

|

0.33

|

0.33

|

0.33

|

|

Southampton

|

0.3

|

0.6

|

0.1

|

|

Glasgow

|

0

|

0

|

1

|

-

Let’s calculate the entropy for all of the three

cities

|

Birmingham

|

P

|

log2P

|

P*log2P

|

|

Good

|

0.33

|

-1.58

|

0.53

|

|

OK

|

0.33

|

-1.58

|

0.53

|

|

Terrible

|

0.33

|

-1.58

|

0.53

|

|

|

|

SUM =

|

1.58 (bits)

|

|

Southampton

|

P

|

log2P

|

P*log2P

|

|

Good

|

0.3

|

-1.74

|

0.52

|

|

OK

|

0.6

|

-0.74

|

0.44

|

|

Terrible

|

0.1

|

-3.32

|

0.33

|

|

|

|

SUM =

|

1.29 (bits)

|

|

Glasgow

|

P

|

log2P

|

P*log2P

|

|

Good

|

0

|

-infinity

|

0

|

|

OK

|

0

|

-infinity

|

0

|

|

Terrible

|

1

|

0

|

0

|

|

|

|

SUM =

|

0 (bits)

|

-

What do these entropies tell us now?

-

Well, as defined before, the entropy denotes the

uncertainty in a system

-

If we look at the probabilities of the weather in

Birmingham, they are 0.33 for each type of weather.

-

That means if we were to predict the future weather based

on this data, we cannot be certain at all, i.e. we are very

uncertain about our prediction

-

This is reflected in the calculated entropy, which is

1.58.

-

High entropy means a lot of uncertainty, and this is the

highest of the 3 cities.

-

Now look at the probabilities of the weather in

Southampton. This time the probabilities are not distributed

equally

-

This means that if we were to predict the future weather

based on this data, we can surely be more certain compared

to Birmingham. However, we will still have some uncertainty

in our prediction

-

The entropy is 1.29, so the second highest.

-

Finally look at the probabilities of the weather in

Glasgow. The probability for terrible weather is one.

-

This means that if we were to predict the future weather

based on this data, we can be absolutely certain that the

weather is going to be terrible.

-

The entropy is 0, meaning we have no uncertainty in our

weather forecast.

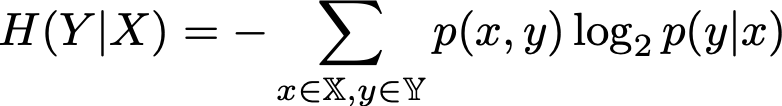

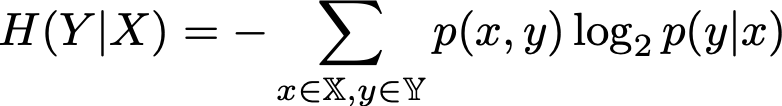

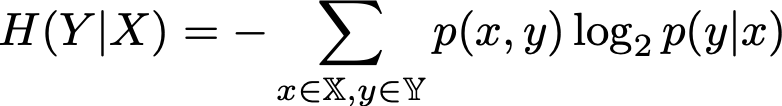

Conditional Entropy

-

We have seen that the entropy denotes the amount of

uncertainty in a system.

-

Another measure which we will need later is the conditional

entropy.

-

The conditional entropy denotes a possible new level of

uncertainty, given that some other event is true.

-

It is defined as follows:

-

p(x, y) is the joint probability of two events, i.e. the

probability that both events are true

-

p(y | x) is the conditional probability, i.e. the

probability of y given that x is true

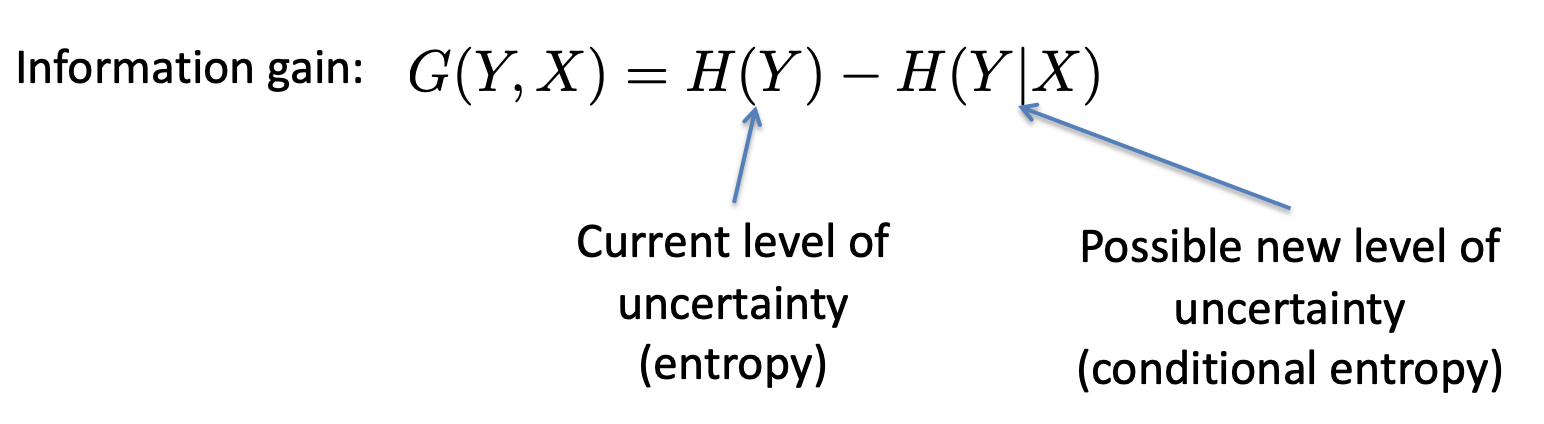

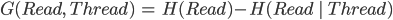

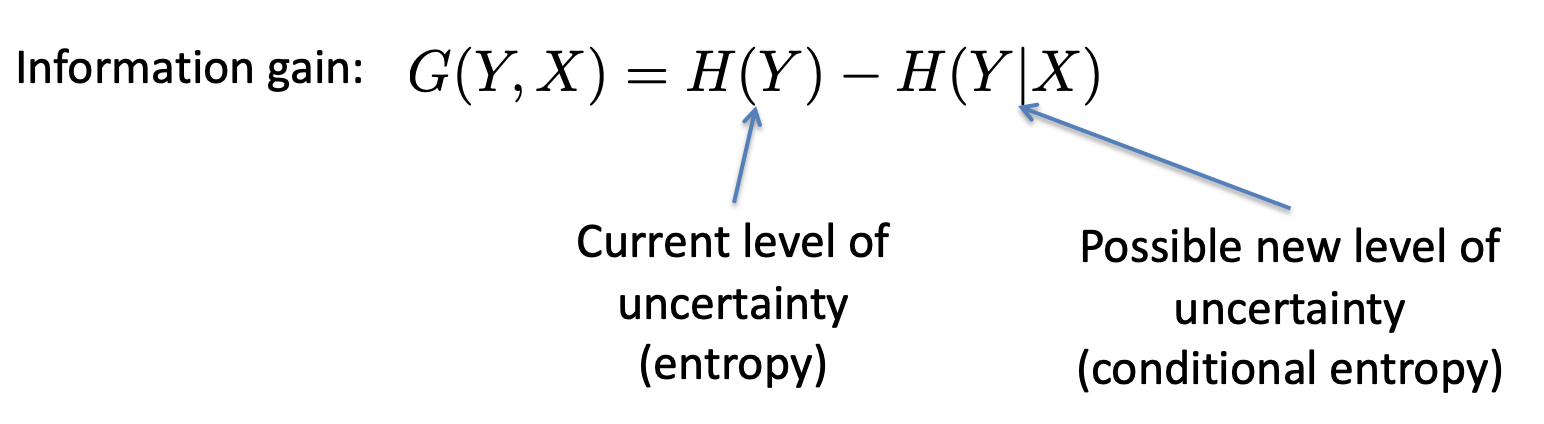

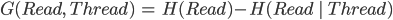

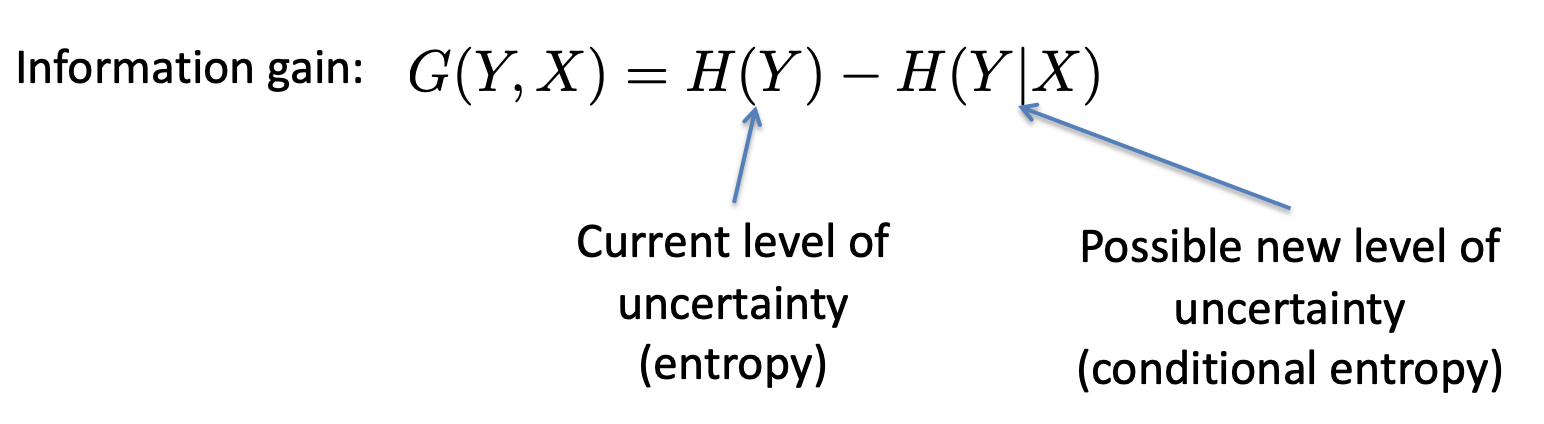

Information Gain

-

Now that we have defined the entropy and the conditional

entropy, we can finally define the information gain.

-

This will denote how much information we gain for some

prediction when we query a specific attribute.

-

It is defined as follows:

-

Y is the event that we want to predict and X is the

attribute

-

Another way to think of information gain is this:

let’s say you’re playing 20 questions and you

know the object is a type of fish.

-

If you ask some dumbass question like “Can it live in

water?”, you won’t learn anything new, because

they’ll almost always answer with

“yes”.

-

If you ask a really good question, like “Does it live

in fresh water?”, you’ll learn a lot from the

answer, so it has information gain.

-

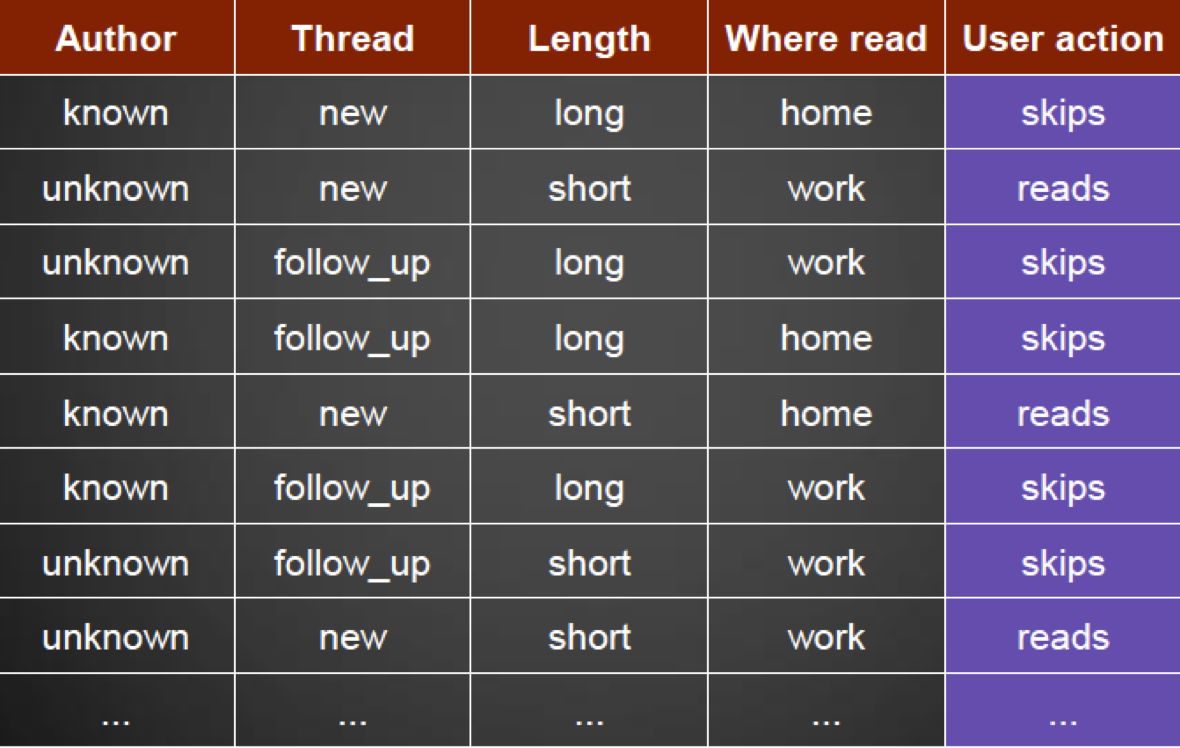

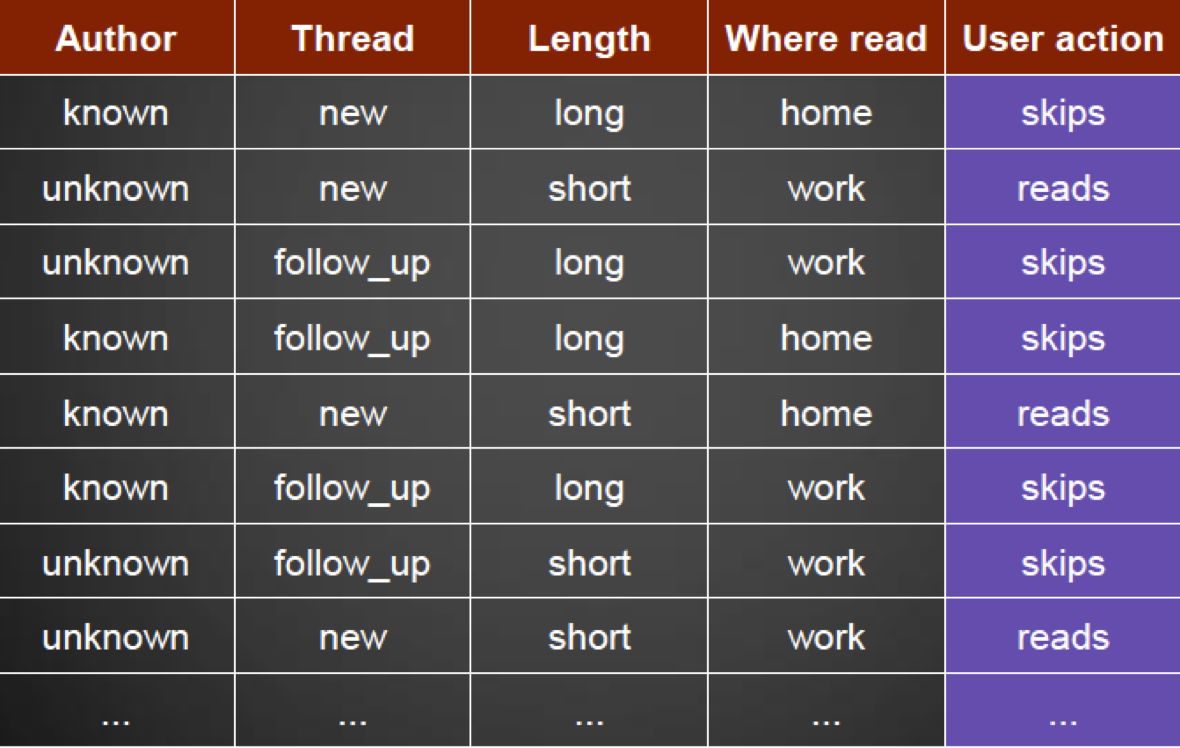

Let’s look at one example

-

We want to predict whether a user will read an email

-

We have recorded the following data in the past:

-

Now we want to find out what the information gain would be

if we chose the attribute “Thread”

-

For the Thread attribute, we have recorded the following

read/skips behaviour

|

|

Reads

|

Skips

|

Row total

|

|

new

|

7 (70%)

|

3 (30%)

|

10

|

|

follow_up

|

2 (25%)

|

6 (75%)

|

8

|

|

|

|

SUM =

|

18

|

-

This table gives the probability that an email is going to

be read, or is going to be skipped, given that we know the

email is new or is a reply to another email.

-

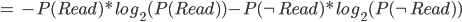

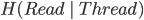

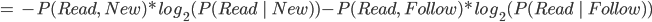

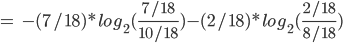

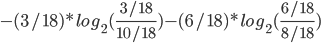

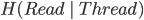

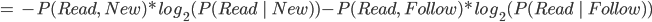

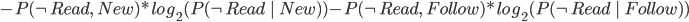

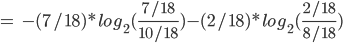

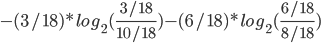

To calculate the information gain for the event read of the attribute Thread, we need to calculate the following:

G(Read, Thread)

= H(Read) - H(Read | Thread)

-

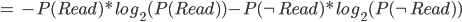

First we need to calculate H(Read), the entropy of

Read

-

We have 18 emails in total, in 9 cases they are read and in 9

cases they are skipped

-

Therefore the probability for read and skip is 0.5

-

We have to consider exactly these two cases

-

Let’s calculate:

-

We also need to calculate H(Read | Thread), the conditional

entropy of read given that we know the thread.

-

We have to consider 4 cases because read/thread can be

true/false.

-

We will need the conditional probability, so remember the

following rule:

-

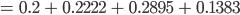

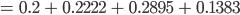

Let’s calculate:

-

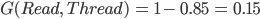

Back to calculating G(Read, Thread)

-

So our information gain is 0.15

-

The higher this value is, the better

-

It cannot be below 0. 0 means we gain no information at

all.

-

After we have calculated the information gain for all

attributes (Length, Thread, Author) we will eventually come

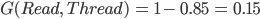

up with this decision tree:

-

This means that Length has the highest information gain,

followed by Thread and Author

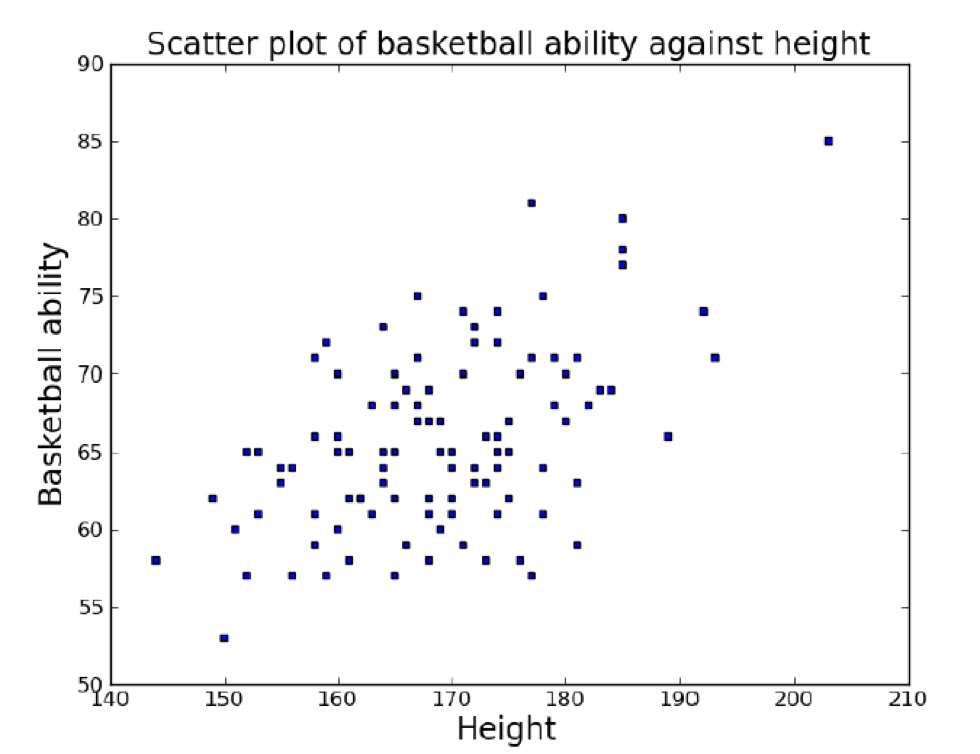

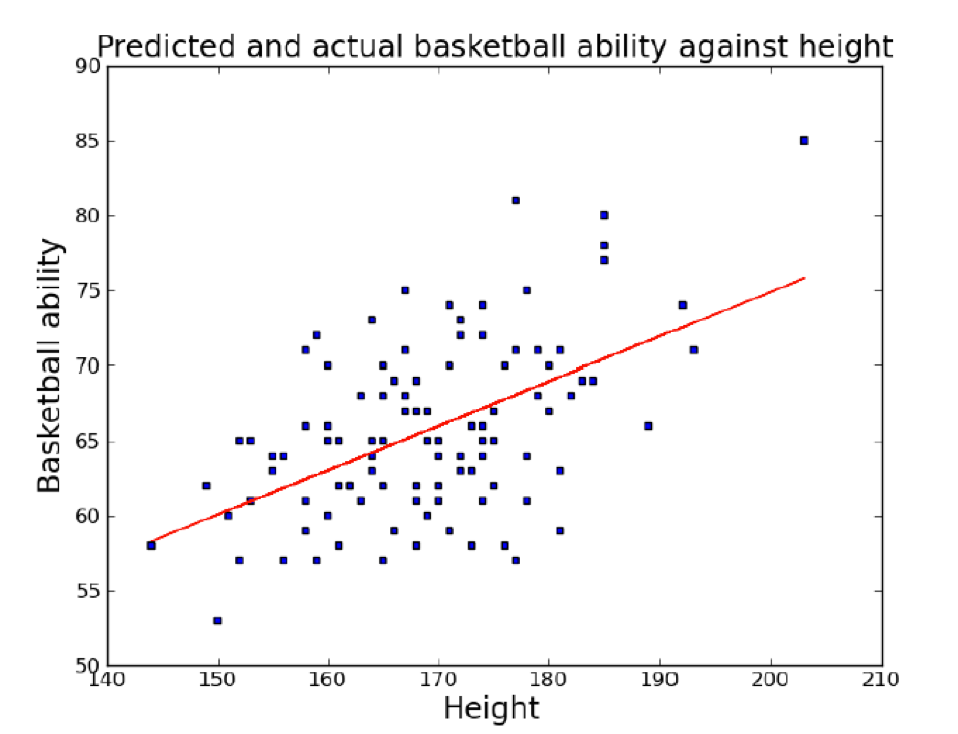

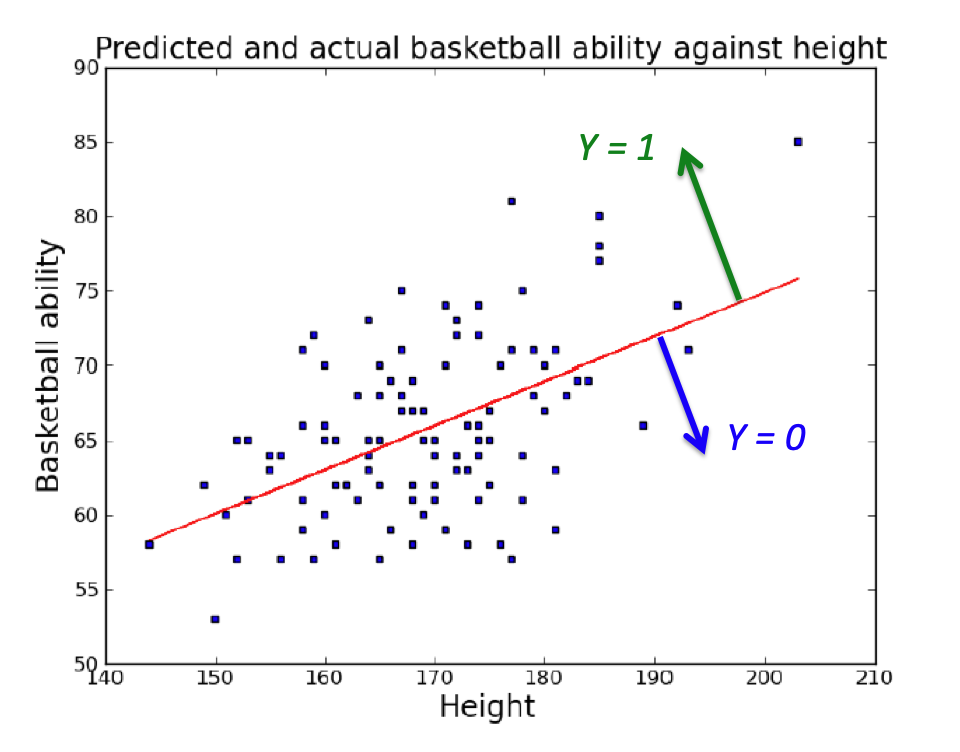

Nearest Neighbour

-

Let’s take a look at a different way of

classification.

-

Humans usually categorise based on how similar a new object

is compared to other known things, e.g. dog, cat, desk,

…

-

So we want to find an algorithm which does the same

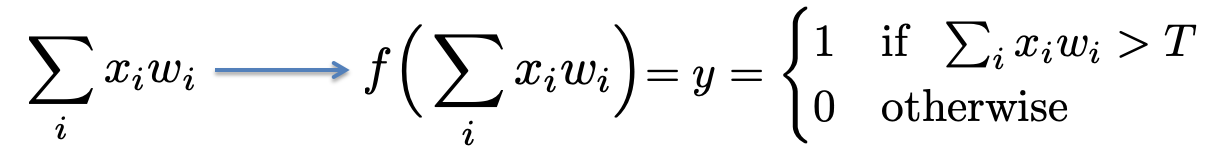

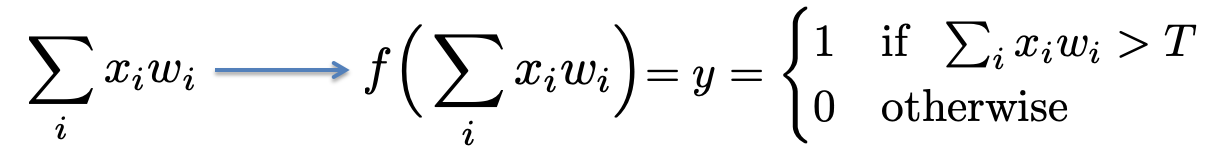

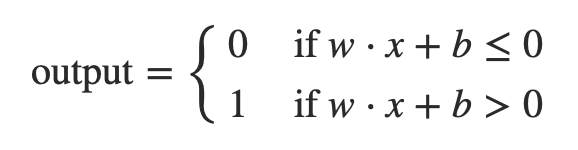

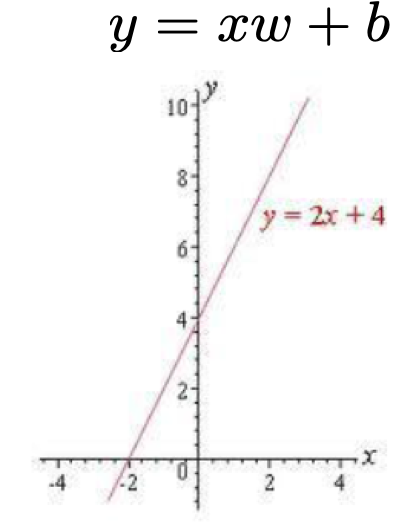

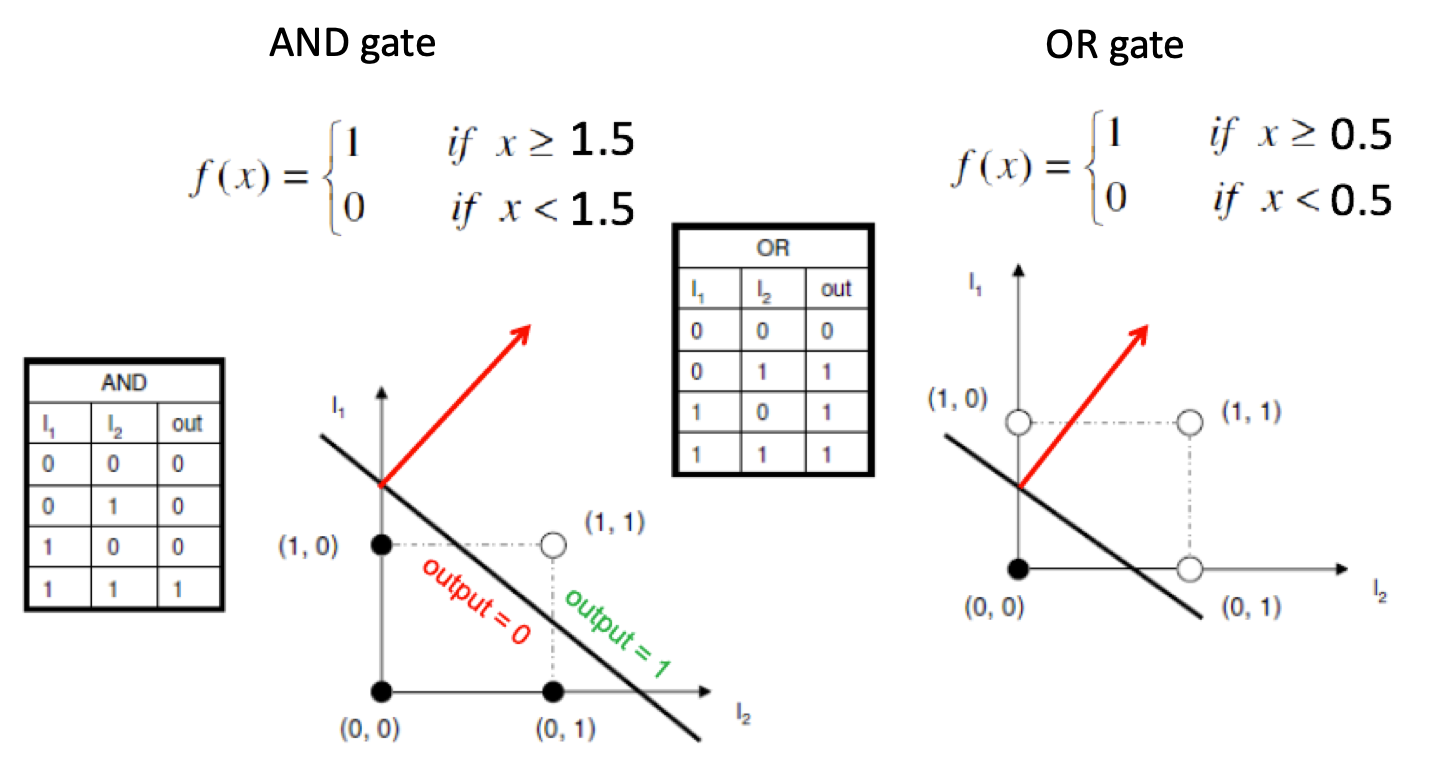

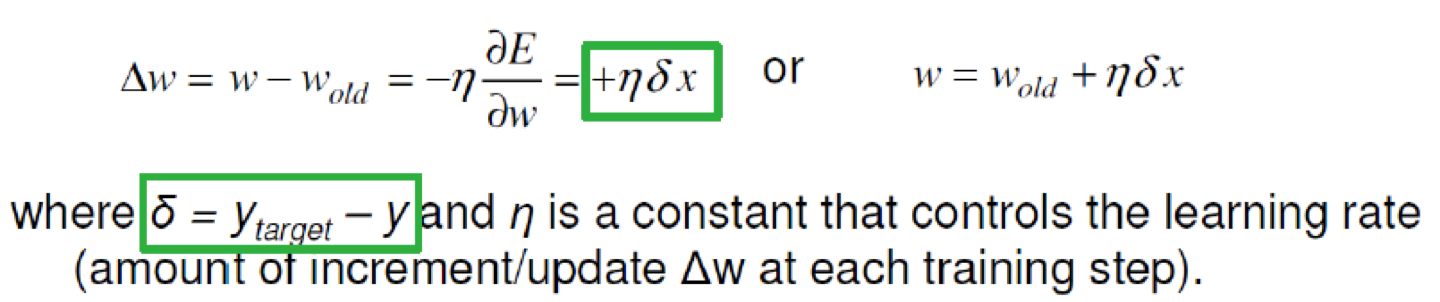

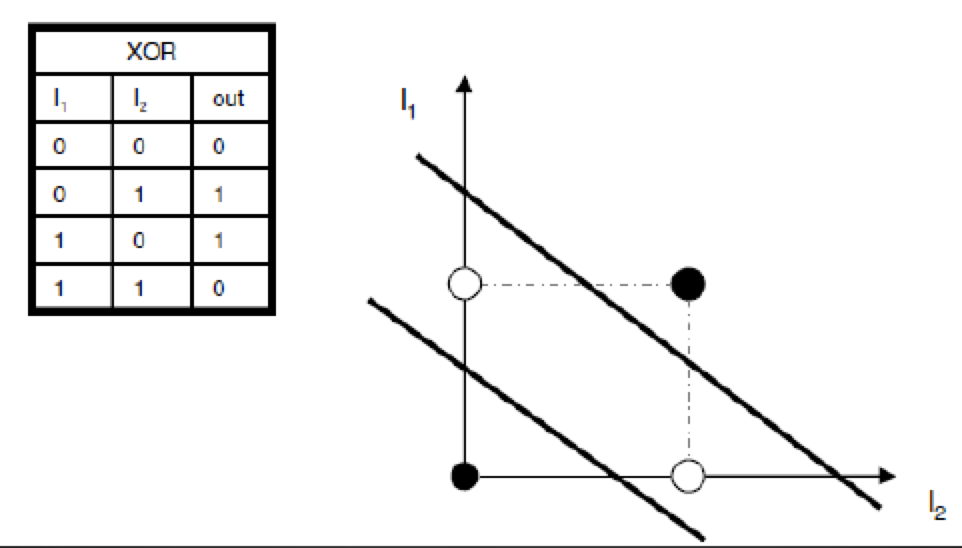

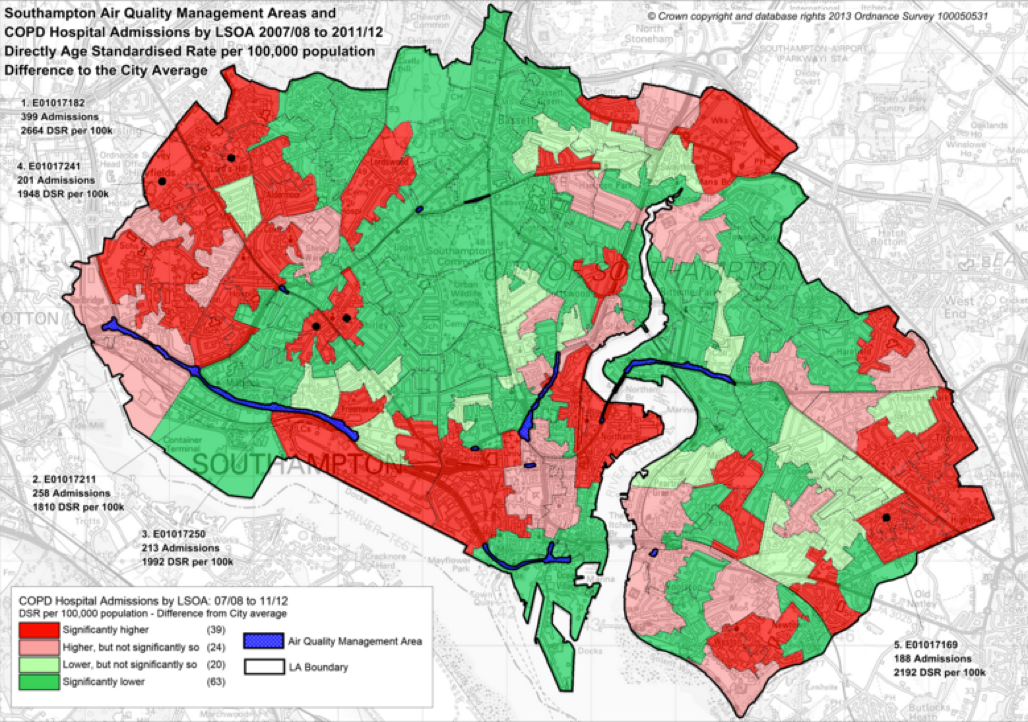

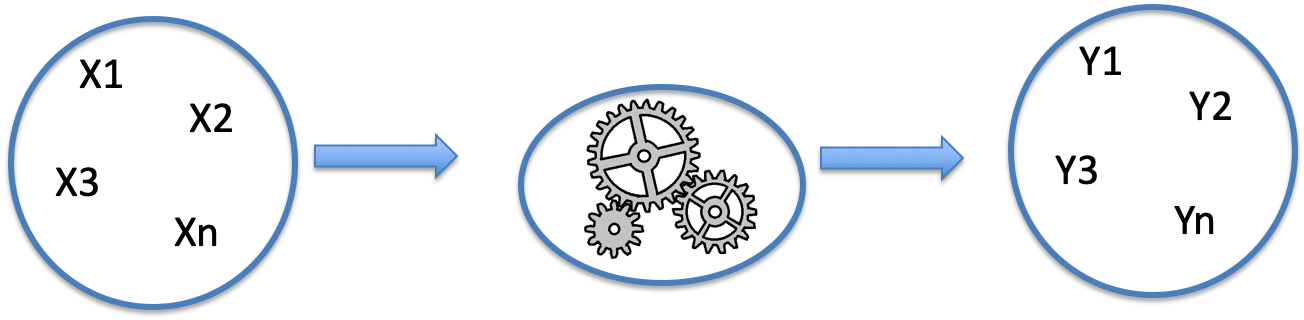

-