Intelligent Agents

Matthew Barnes

Overview 3

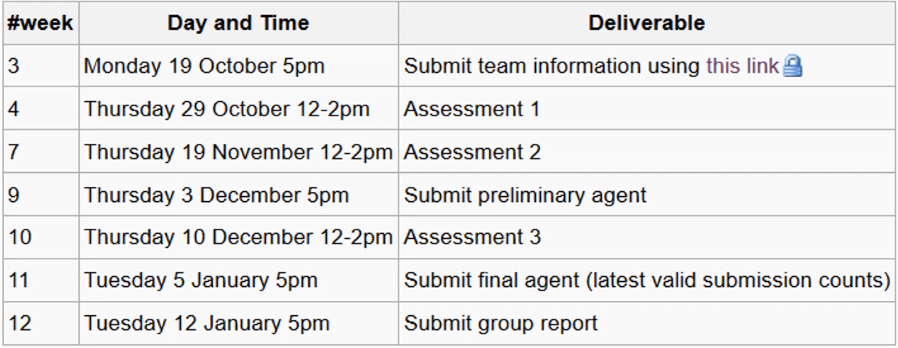

Introduction to Intelligent Agents 3

Agents 4

Intelligent Agents 5

Multi-Agent System (MAS) 6

Agent-Based Negotiation 8

Part 1 8

Ultimatum Game 11

Alternating Offers 11

Monotonic Concession Protocol 12

Divide and Choose 14

Part 2 14

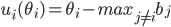

Utility functions 15

Utility space 15

Fairness 19

Part 3 21

Basic negotiation strategies 21

Uncertainty about the opponent 23

Preference uncertainty 25

Utility Theory 25

Decision under certainty 26

Decision under uncertainty 28

Pure Strategy Games 32

Strategic-form Games 33

Strictly dominated strategies 34

Weakly dominated strategies 35

Pure Nash Equilibria 36

Mixed Strategy Games 39

Mixed Nash Equilibria 42

Computing Mixed Nash Equilibria 43

Best Response Method 43

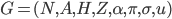

Indifference Principle 45

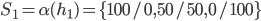

Extensive-Form Games 48

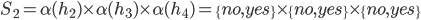

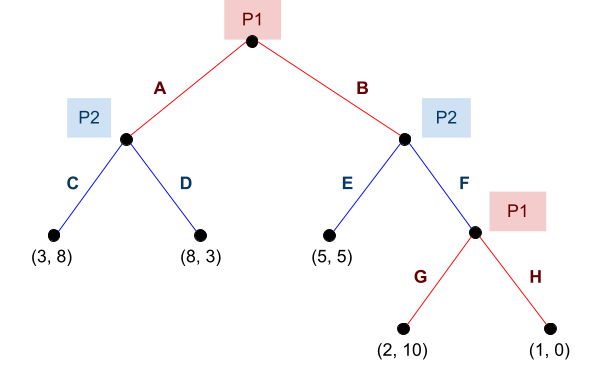

Strategic-Form Transformation 51

Equilibria 53

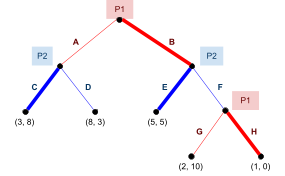

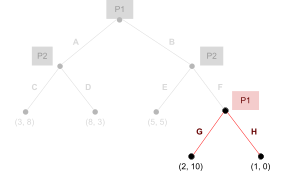

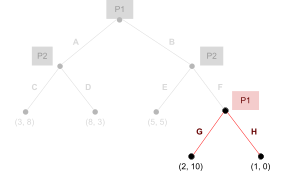

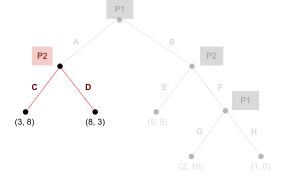

Backward Induction 56

Linear Programming 59

Computing Nash Equilibrium with LP 59

Structure of linear programs 62

Transforming linear programs 63

Beyond linear programming 65

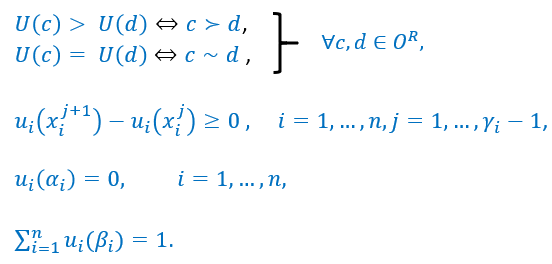

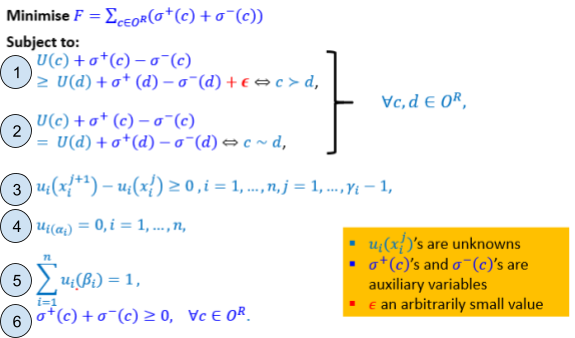

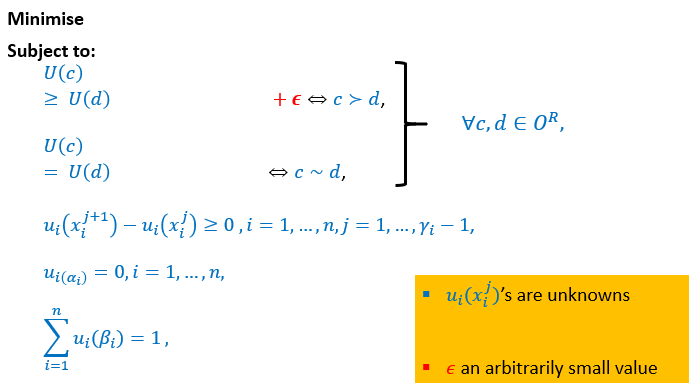

Preference Elicitation 65

Preference ordering 65

Preference uncertainty 66

Multi Criteria Ranking using Multi-Attribute Additive

Functions 67

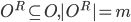

Ordinal Regression via Linear Programming (UTA) 68

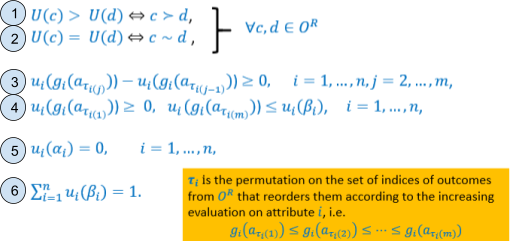

UTAGMS - Robust Ordinal Regression 73

Auctions 78

Types of auctions 80

Analysing Auctions 80

First-price auction expected utility 81

Vickrey Auction: Discrete bids expected utility 83

Vickrey Auction: Continuous bids expected utility 83

Strategy-proofness 84

Bayes-Nash Framework 85

Revenue Equivalence 86

Trading Agents 86

Voting 88

The Social Choice Problem 89

Plurality Vote 90

Copeland Method 91

Borda Count 91

Properties of voting procedures 92

Social Welfare Functions 93

Social Choice Functions 94

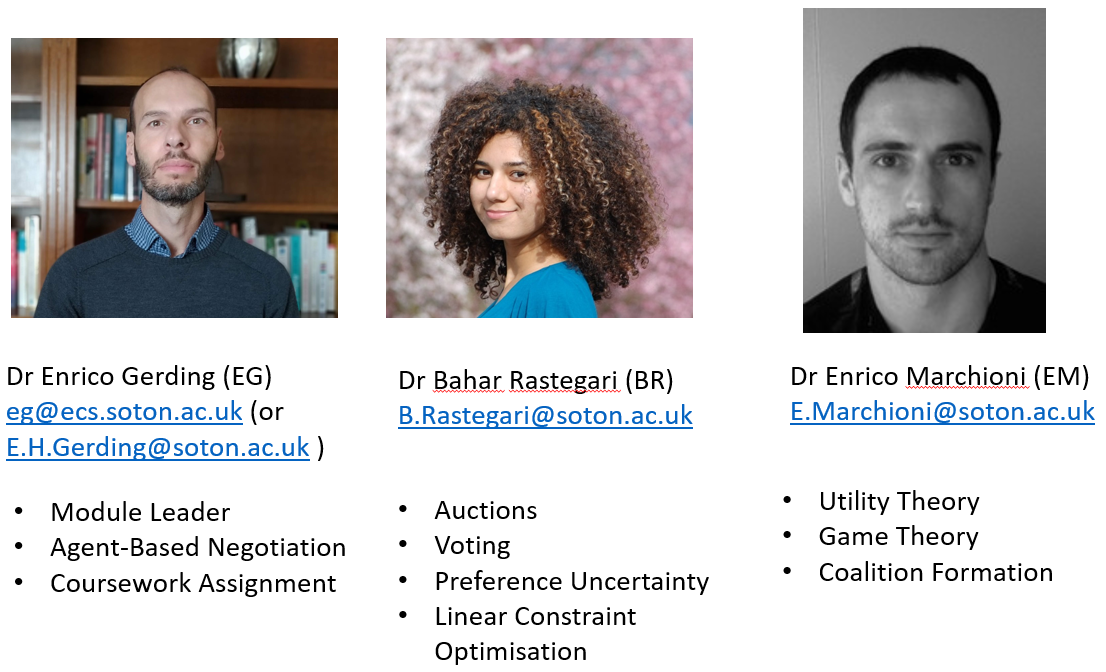

Overview

Introduction to Intelligent Agents

-

Five ongoing trends have marked the history of

computing:

-

Computing costs less

-

We can introduce processing power into places and devices

that would’ve otherwise been uneconomic

-

Edge computing: data is processed at the edge of the network (real-time

“instant data” generated by sensors or

users)

-

Computers are networked into distributed systems

-

They can talk to each other and help each other

-

Computers are smart (sort of)

-

They can complete really complex tasks, like making a move

in chess, or deciding if a picture has a cat in it or

not.

-

We are passing down more and more tasks to computers.

-

We’re giving control to them, even for

safety-critical tasks, such as aircrafts and self-driving

cars.

-

Computers are meant to augment human intelligence.

-

They’re meant to do stuff for us.

-

That means they need input from us, such as decision

preferences.

- So what?

-

Well, each of these trends imply that:

-

Computers need to act independently

-

Computers need to represent our best interest

-

Computers need to cooperate, reach agreements or even compete.

-

Together, they’ve led to the emergence of a new

field:

-

Wow! Lots of agents working together in a system!

-

... what’s an agent?

Agents

“A computer system capable of autonomous action in some

environment, in order to achieve its delegated

goals”

-

In layman’s terms, an agent is a computer system that lives in some kind of changing

environment, and it performs automatic actions to try and

achieve a goal defined by us: the human overlords.

-

The key points are:

-

Autonomy: capable of independent action without need for constant

intervention (can do stuff by itself)

-

Delegation: acts on behalf of its user or owner (represents our best interests)

-

We think of an agent as being in a close-coupled, continual

interaction with its environment:

-

sense → decide → act → sense → decide → ...

-

Perceives the environment through sensors (cameras, TCP/UDP socket, keyboard etc.)

-

Acts on the environment through actuators (variables, web pages, databases, robotic arms etc.)

-

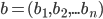

Here’s a simple example of an agent: a

thermostat.

-

Delegated goal: maintain room temperature

-

Perception: heat sensor

-

Action: switch heat on / off if room temp. is too cold /

hot

-

Input: the heat threshold at which the heat should be turned on

at

A pretty plain-looking thermostat. It doesn’t even have

RGB.

-

This one’s a bit boring because the decision is easy:

it’s just checking the room temperature against a

given threshold.

-

How do we define an agent that’s a bit...

smarter?

Intelligent Agents

-

An intelligent agent has three behavioural properties:

-

The agent maintains an ongoing interaction with its

environment, and responds to change that occurs in it.

-

Basically, the agent needs to be able to respond to

change.

-

The agent needs to generate and attempt to achieve goals

and not be driven solely by reacting to events; it needs to

take the initiative.

-

In other words, the agent needs to actually do stuff by

itself to achieve its goals and not just react to things

that change.

-

This also includes recognising opportunities, and taking

them.

-

The agent needs the ability to interact with other agents

and (usually) humans via cooperation, coordination and negotiation.

-

Simply, the agent needs to be able to talk to other

agents.

-

There are other properties, such as:

-

Tries to maximise its performance measure with all possible

actions.

-

Agent improves performance over time

-

Intelligent Agent Challenges:

-

How to represent goals and user preferences

-

How to optimise the decision making

-

How to learn and adapt and improve over time

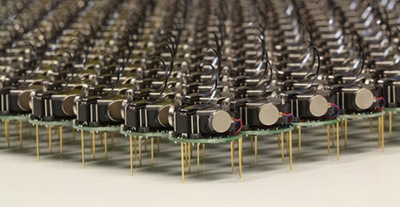

Multi-Agent System (MAS)

-

A multi-agent system (MAS) is one that consists of a number of agents, which

interact.

A multi-agent system of smol robots.

-

Generally, agents act on behalf of users.

-

They each have different goals and motivations with each

other.

-

Despite that, they all work together to achieve a common

goal, like humans (or not; depends on your group assignment)

-

There are some problems that arises with a MAS:

-

If it’s every-agent-for-themselves, how do they

cooperate?

-

How can conflicts be resolved, and how can they

(nevertheless) reach agreement?

-

How can autonomous agents coordinate their activities so as

to cooperatively achieve goals?

-

Agents owned by the same person / organisation

-

Can be controlled and made to coordinate

-

More robust than “centralised” system

-

No incentive needed, as all agents are controlled by the

same thing

-

Each agent works on behalf of someone else

-

Each agent is different

-

“Every agent for themselves”

-

Still need to cooperate, but needs mechanisms to resolve

conflicts

-

Link with other disciplines:

- Robotics

-

Complex Systems Science

- Economics

- Philosophy

- Game Theory

- Logic

-

Social Sciences

-

Infusing well-founded methodologies into the field

-

There are many different views as to what the field is

about

You could even teach a bunch of robot agents to play football

with each other.

-

Algorithms to solve hard decision problems; e.g.

planning

-

Algorithms to interpret complex data; e.g. Machine Learning

and Machine Vision (I know that’s not classical AI but whatever)

-

Knowledge representation and reasoning

-

Agents combine techniques:

-

Robotics: vision + planning

-

Software assistants: machine learning + scheduling

-

E-Commerce ‘bots’: search + machine learning

-

There are applications of agents:

-

As a paradigm for software engineering

-

As a tool for understanding human societies

Agent-Based Negotiation

Part 1

-

When agents work with each other, there can be

conflict.

-

Conflict is when there is a clash between agents’ different

preferences or aims.

-

This can happen with people, too:

-

Who’s going to do the washing up?

-

Where do we go out for dinner?

-

Conflicts happen in multi-agent systems when:

-

Agents are self-interested (maximise their own benefit)

-

Agents represent different stakeholders (different sets of interests / aims)

-

A buyer wants to pay as little as possible

-

The seller wants to sell as much as possible

-

This is a conflict; the buyer wants to reduce the price, and the seller wants

to increase it.

-

Conflict resolution is possible when there is a mutual benefit to reach an

agreement.

-

In other words, it’s better to reach an agreement

than to call the whole thing off.

-

There’s different types of conflict resolution:

- Auctions

- Voting

- Negotiation

-

These different types are suited for different

situations.

-

Auctions are used to allocate scarce resources or tasks, such

as:

-

Items to buyers

-

Advertising space to advertisers

-

Cloud computing

-

Tasks to robots

-

Stocks and shares

To enable auctioning within agents, you must

3D-print a small gavel for them to use.

-

Auctions are characterised by:

-

Clearly defined protocol (rules or mechanism) e.g. English auction, Dutch auction,

Vickrey auction

-

Typically requires trusted third party (e.g. auctioneer)

-

Involves continuous resource such as money

-

Exploits competition between agents (works well with lots of agents)

-

Voting is used for group based decisions, called social choices.

- Examples:

-

Where to go out to dinner

-

Where to build new bridge / services / housing

-

Which is the best JoJo part

Voting: a core component of a civilised society,

according to Lord of the Flies.

-

Voting is characterised by:

-

A single decision from a (typically finite) number of

options

-

Each agent can have different preferences for each option,

which is given by a preference order (a.k.a ordinal utility function)

-

Clearly defined protocol

-

Negotiation (a.k.a Bargaining) is governed by a protocol which defines the

“rules of encounter” between agents,

including:

-

Type of communication allowed

-

Type of proposals that are allowed

-

Who can make what proposal at what time

-

Basically, in a negotiation, different kinds of proposals

are made at certain times.

-

Usually, proposals are made until an agreement is reached

or the agents are too stubborn and quit.

-

The way it works depends on the kind of negotiation

protocol we’re using.

An illustration of a negotiation;

not to be confused with TCP/IP.

-

Negotiations are more flexible compared to other

approaches:

-

You can exchange offers / proposals, but you can also

exchange other information such as arguments (reasons why)

-

Allows for less structured protocols

-

Often bilateral (between two agents) but can also support multi-party negotiation (more than two agents)

-

Enables more complex types of agreements (e.g. multi-issue negotiation)

-

Often decentralised (can involve a mediator)

-

In case you haven’t noticed, this topic is called

Agent-Based Negotiation.

-

Therefore, we’ll focus on negotiation

protocols.

-

Negotiations are characterised by:

-

Single-issue negotiation (distributive bargaining): e.g. haggling prices. Competitive setting,

short-term

-

Multi-issue negotiation (integrative bargaining): include more issues, allows for mutual benefit and

cooperation, long-term

-

The preference over possible agreements.

-

Basically, how “picky” an agent is given an

offer.

-

Specified using utility functions.

-

Agent negotiation strategies

-

How the agent behaves.

-

Defined by the actions taken by the agent at each possible

decision point, given information available

-

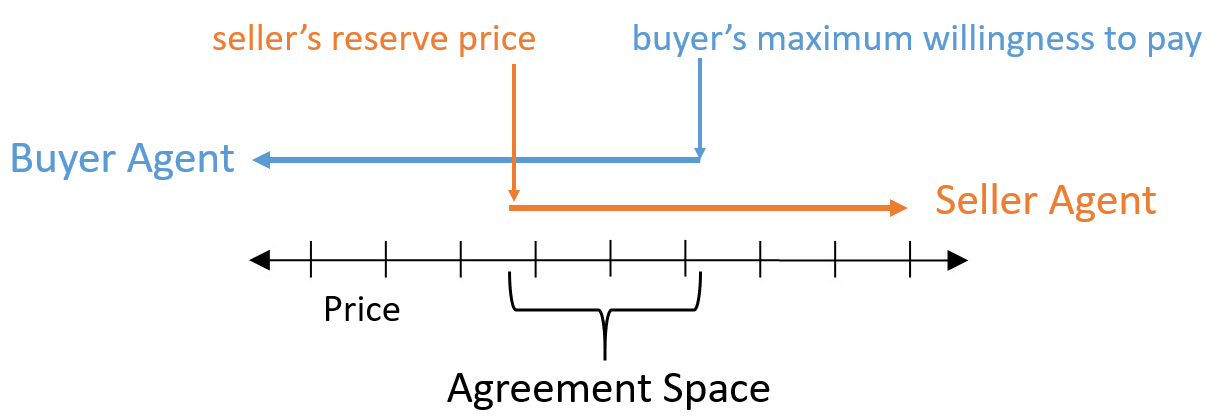

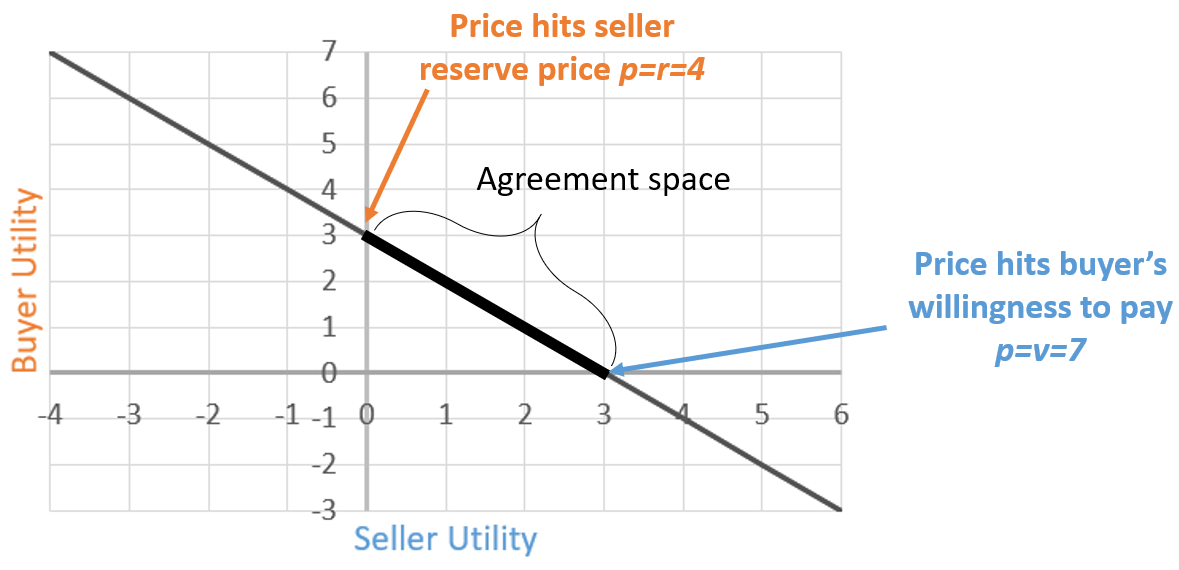

Before we move on to the negotiation protocols, it’s

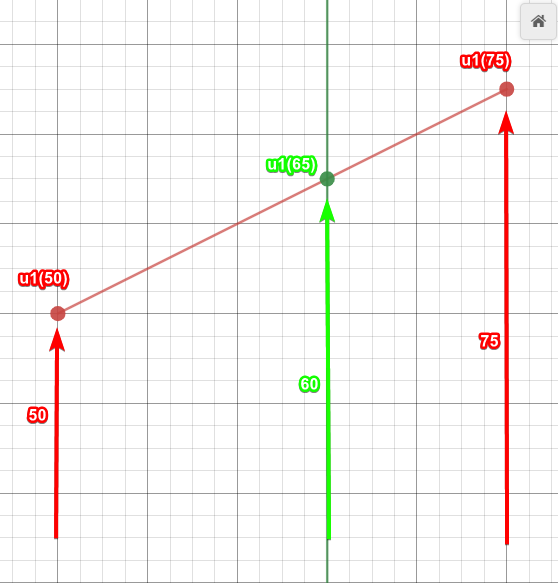

worth modelling a single-issue negotiation:

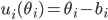

-

This graph represents a buyer/seller negotiation, and the

bottom axis represents the argued price.

-

The blue arrow is the range of prices the buyer is willing

to purchase at, and the orange arrow is the range of prices

the seller is willing to sell their product for.

-

The range of prices at which the buyer and seller arrows

intersect is called the agreement space.

-

That’s the range of prices that both agents are

willing to settle for.

-

The whole point of a negotiation is to scope out the

agreement space, but the agents also want to make offers

that maximise their aims, e.g. the buyer would want a deal

on the far left of the agreement space.

Ultimatum Game

-

Let’s say you have a cake and need to split it

between two agents.

-

In the Ultimatum Game:

-

Agent 1 suggests a division of the cake

-

Agent 2 can choose to accept or reject the cake

-

If agent 2 rejects, nobody gets the cake

-

This is also known at the take it or leave it game.

Agent 1 is not a very reasonable agent.

-

If agent 1 splits the cake in half, it seems fair.

-

But what if agent 1 leaves agent 2 with a small slither of

cake?

-

Either agent 2 has to accept the tiny slither, or get

nothing.

-

Does that seem fair?

-

This is called the first-mover advantage.

-

It means whoever makes the first offer has a significant

advantage in the negotiation.

-

The Ultimatum Game assumes that the agreement space is

already known between the agents (the seller’s reserve and buyer’s willingness

to pay are shared knowledge).

-

There’s also no ability to explore the negotiation

space, which isn’t so important in this example, but

is important when there’s multiple issues.

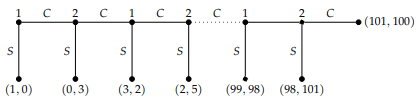

Alternating Offers

-

The alternating offers protocol consists of a number of rounds, in which offers

are exchanged in an alternating fashion.

-

Agent 1 starts off with an offer.

-

Agent 2 can accept or reject. If agent 2 rejects, agent 2 can make a

counter-offer.

-

Agent 1 can accept or reject the counter-offer. If agent 1 rejects, agent 1 can

make a counter-counter-offer.

-

This keeps on looping until an agreement is made.

-

The loop can also end after a deadline of a set number of rounds.

How can they negotiate how much cake to eat

if they don’t have hands to eat with?

-

The vendor suggests ¥1,000 for five kebabs.

-

Joseph makes a counter-offer of ¥250.

-

The vendor makes a counter-counter-offer for

¥700.

-

They converge with the offers ¥300, then ¥600, then

¥350, then ¥550, then ¥400, then ¥450, until

eventually they reach a consensus with ¥425.

-

This is better, but is there any reason for an agent to

concede (change their previous offer)?

-

The vendor could’ve just kept saying ¥1,000, no

matter what Joseph said, and created an Ultimatum Game

situation.

-

True, if the vendor kept prices high, Joseph could’ve

just walked away.

-

But in the context of agents, a rational agent will always

accept in an ultimatum game.

-

There needs to be some way to incentivise a

concession...

Monotonic Concession Protocol

-

In the Monotonic Concession Protocol, negotiations proceed in rounds as well, except agents

simultaneously propose offers at the same time (without seeing the other offer).

-

If an agent makes an offer that the other agent rates at

least as high as their own offer, an agreement can be made (if both offers are agreeable, one is picked at

random).

-

If not, another round begins.

-

In the next round, at least one of the agents needs to

concede (change their offer).

-

If neither agent concedes, the negotiation ends without a

deal.

-

What was that? You want an example? The slides are too

incompetent to provide a proper explanation?

|

Buyer

|

Seller

|

Description

|

|

£5

|

£30

|

The buyer wants to buy something, and the

seller is selling it at a haggled price.

The buyer makes an offer for £5, and the

seller makes an offer for £30. These are

shown simultaneously.

The buyer thinks their offer of £5 is way

better for them than the seller’s deal of

£30.

The seller thinks their offer of £30 is

way better for them than the buyer’s deal

of £5.

Therefore, another round begins.

|

|

£10

|

£20

|

Both agents concede and make new offers. The

prices converge.

The buyer still thinks their offer is better

off for them than the seller’s offer, and

vice versa.

Another round!

|

|

£10

🐐

|

£20

|

The seller does not concede and sticks with the

same offer they had before. They’re

certain that their product is worth £20 at

the least.

The buyer is still making an offer of

£10, but is also throwing in their best

goat into the deal.

The seller thinks. Yesterday, at the market,

the cheapest goat went for about £12. That

means the buyer is basically paying £22,

with the value of the goat included.

That’s even more than the seller is

offering!

Right now, the seller is rating the

buyer’s offer as greater than or equal to the rating of the seller’s own

offer. That means an agreement can be

made.

The seller walks away with £10 and a new

goat, and the buyer walks away with whatever it

was the seller was selling (use your imagination).

|

Divide and Choose

-

The Divide and Choose protocol is pretty self-explanatory:

-

Agent 1 divides a continuous resource into partitions.

-

Agent 2 chooses one of the splitted partitions.

-

So in the Ultimatum Game example, even if agent 1

partitioned a slither of cake for agent 2, agent 2 could

just pick the larger partition, leaving agent 1 with the

slither.

Mmmm, cake 🍰

-

Because the other agent is gonna pick the best slice, agent

1 would want to slice the cake equally, to maximise how much

they’ll get.

-

Therefore every agent will get an equal partition in divide

and choose.

-

This protocol only works for resource allocation problems, where some continuous resource needs to be divided

between multiple agents (like cake).

-

It also works for non-homogenous resources, like if parts

of the cake are more attractive (like the candy corn on top of that cake picture

above), or in the case of land division.

-

There’s equivalent protocols that handle more than 2

agents, but it gets complicated.

-

All in all, the desirable properties of a negotiation

are:

-

Individual rationality: A deal should be better than no deal for all agents

-

Pareto efficiency: There should be “no money” (or cake) left on

the table

-

The agreement should be “fair”

Part 2

-

In this part, we’re going to define these concepts

more formally.

-

So I hope you’re fluent in maths syntax.

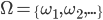

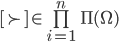

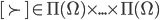

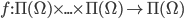

Utility functions

-

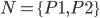

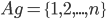

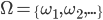

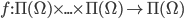

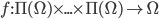

First of all, we’ll define the utility function.

-

It is used to model an agent’s preference given an

offer.

-

In other words, this function tells us how much an agent

likes an offer.

-

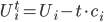

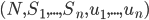

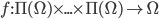

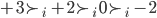

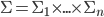

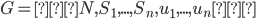

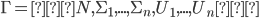

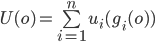

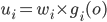

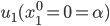

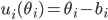

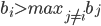

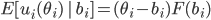

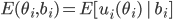

The utility function is defined as:

-

where

where  is the set of all possible offers

is the set of all possible offers

-

This function returns how much the agent likes that

offer.

-

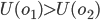

There are two kinds of utility functions:

-

Ordinal preferences: a preference order is defined, but there is no numerical

utility

-

In other words, we can say

and declare that

and declare that  is more preferable than

is more preferable than  , but that’s it.

, but that’s it.

-

Cardinal preferences: each outcome has a numerical utility value

-

For example, we could say that

and

and

-

We can always infer ordinal preferences from cardinal

ones

-

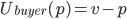

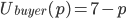

A buyer and seller negotiate.

-

The set of possible outcomes

are the price, denoted by

are the price, denoted by  , or a disagreement.

, or a disagreement.

-

The cardinal utility functions are given by:

-

is the minimum price the seller is willing to sell

for (reserve)

is the minimum price the seller is willing to sell

for (reserve)

-

is the maximum price the buyer is willing to buy for (value)

is the maximum price the buyer is willing to buy for (value)

-

Here, the buyer will prefer to pay less, and the seller

will prefer to pay more.

-

When

goes up,

goes up,  will go up and

will go up and  will go down, and vice versa if

will go down, and vice versa if  goes down.

goes down.

-

If the price goes below the minimum selling price

or above the willing buying price

or above the willing buying price  , the seller / buyer will prefer a disagreement, as

, the seller / buyer will prefer a disagreement, as  or

or  will dip below zero.

will dip below zero.

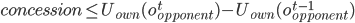

Utility space

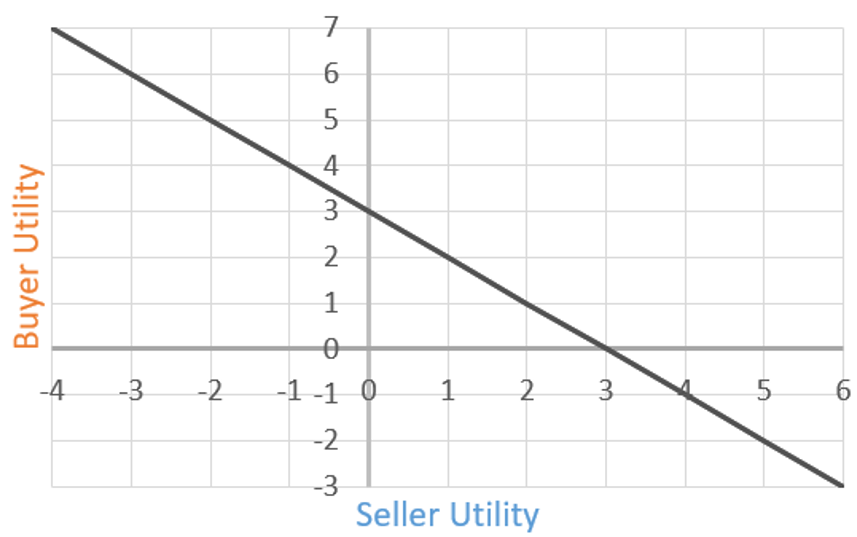

-

We can visualise this using a utility space.

-

A utility space is a graph that maps one utility function

on one axis, and another utility function on the

other.

-

It allows you to easily see the agreement space given a

negotiation.

-

If that makes no sense, don’t fear: here’s an

example.

-

Given the buyer / seller example above, let’s say

and

and  .

.

-

We can map the seller utility as the x axis and the buyer

utility as the y axis.

-

Then, we can plot points for all seller / buyer utility

values, for all values of

.

.

-

This will form a line:

-

For every point on that line, there is a value of

that, when plugged in to the utility functions of the

seller and buyer, results in the values of the x and y

coordinate (a.k.a the utility values of that point on the line).

that, when plugged in to the utility functions of the

seller and buyer, results in the values of the x and y

coordinate (a.k.a the utility values of that point on the line).

-

For example, say we picked the point (1, 2) on the line.

That means there is a value of

where the seller’s utility is 1, and the

buyer’s utility is 2 (spoilers: it’s 5).

where the seller’s utility is 1, and the

buyer’s utility is 2 (spoilers: it’s 5).

-

What can we do with this graph?

-

Well, each agent will accept a deal if their utility value

is over zero (because if it’s under zero, they’d rather not

make a deal).

-

That means both agents will accept prices if both their

utility values are over zero.

-

We can find that range by looking at the graph:

-

Beyond the point (0,3), the price exceeds how much the

seller is willing to sell for.

-

Beyond the point (3,0), the price subceeds how much the

buyer is willing to buy for.

-

That means the line segment between those two points is the

sweet spot, or the “agreement space”, at which

both agents will be happy.

-

That also means any value of

within that line segment will make those two agents

happy.

within that line segment will make those two agents

happy.

-

The agreement space is

-

We can’t negotiate forever.

-

We probably have better things to do than spending all

night arguing.

-

There’s different ways to model time pressure:

-

You must finish the negotiation after a set number of

rounds.

-

Imposed by the protocol (Ultimatum game can only be 1 round

long)

-

Can also be determined by individual constraints

-

There’s always a chance that the negotiation will end

in any round.

-

With monotonic concession, if agents don’t concede,

everything ends anyway.

-

Costs are applied to the utility functions that grow each

round.

-

Here’s two ways you can model bargaining costs:

-

Like the name says, a fixed cost is applied to the utility

function each round.

-

The cost grows linearly as rounds progress.

-

More formally, let

denote the costs for agent

denote the costs for agent  , and

, and  is time or bargaining round, then the utility at time

is time or bargaining round, then the utility at time  is given by:

is given by:

-

After each round, the utility function is shrinked by

multiplying it by a number between 0 and 1.

-

An analogy for this is a melting ice cake; the longer you

wait, the more the cake shrinks.

-

More formally, let

be the discount factor of agent

be the discount factor of agent  , then the utility is given by:

, then the utility is given by:

Is it worth putting icing on an ice cake?

-

This all assumes that we have only one issue: one cake to

split, one price to negotiate over etc.

-

But what if we have many issues to take into account, like

time, quality of service, delivery time etc.?

-

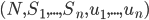

In that case, we can make our offers into vectors, so it

would be

for each issue

for each issue  .

.

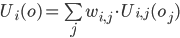

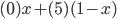

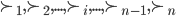

-

We can use a weighted additive utility function to calculate a utility value given an offer vector:

-

That sum looks scary, but don’t worry; it’s

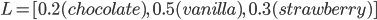

just a sum of the utility values of all the issues in the

offer vector, but some of them have weights applied to them,

so one issue could be more important than another to an

agent.

-

Let’s see an example.

-

Let’s say we have two cakes: one strawberry and one,

uh... chilli flavoured.

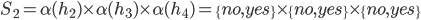

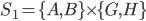

I just wanted to be different.

-

These two cakes represent our two

“issues”.

-

Offer

represents the share that agent 1 receives for cake

represents the share that agent 1 receives for cake  .

.

-

This means agent 2 will get

.

.

-

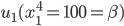

Agent 1 prefers the strawberry cake, and agent 2 prefers

the chilli cake.

-

The weights will be 7 for a preferred cake and 3 for the

other cake.

-

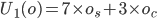

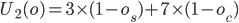

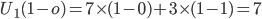

This results in the utility functions:

-

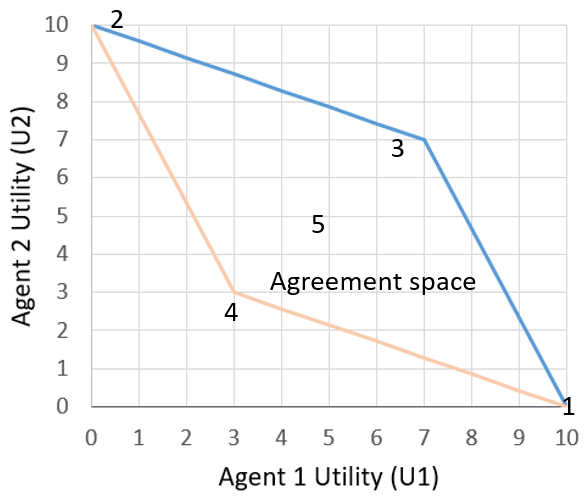

What are we waiting for? Let’s see the utility space

for this!

-

The slides and online lecture go into a bit more detail,

but basically:

-

Point (7,7) is when both agents get all of their preferred

cake

-

Point (5,5) is when both agents get half of both

cakes

-

Point (3,3) is when both agents get all of their

non-preferred cake

-

Point (10,0) is when agent 1 gets both cakes

-

Point (0,10) is when agent 2 gets both cakes

-

Within this kite shape are all the possible agreements for

this negotiation.

-

It’s kind of clear that point (7,7) sounds like the

best offer, but hear me out: there’s more to it.

-

Did you notice that the top right half of the kite

perimeter is blue?

-

All the offers within that blue segment are all Pareto efficient.

-

That means that there’s no further improvement to

that offer without making one of the other agents worse

off.

-

The Pareto efficient frontier is the set of all Pareto efficient agreements; in

other words, the blue segment.

-

Basically, there’s no reason not to accept on a

Pareto efficient offer.

-

If you have an offer that is not Pareto efficient, there

must be some way to make that offer better for

everyone.

-

There’s even more properties we desire from an

offer.

-

Here’s three of the main ones:

-

Should be individually rational (

>

>  )

)

-

Should be Pareto efficient

-

Should be fair

-

Wait, “fair”?

- What does fair mean?

Fairness

-

Fairness is an extra condition for an agreement that makes it more

desirable than the others.

-

Here’s four of them:

-

Utilitarian social welfare: maximise the sum of utilities

-

Pick the offer that maximises the sum of all the utility

values.

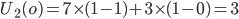

- Formally:

-

Egalitarian social welfare: maximise the minimum utility

-

Pick the offer that maximises the smallest of the utility

values.

- Formally:

-

Nash bargaining solution: maximise the product of the utility of the agents (minus

the disagreement payoff)

-

Pick the offer that maximises the product of the utility

values.

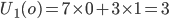

- Formally:

-

This one is especially cool because if an offer satisfies

the Nash bargaining solution, it has these properties:

-

Individual rationality: it’s gonna be better than doing nothing

-

Pareto efficiency: we’ve just covered that above

-

Invariance to equivalent utility representations: it’s immune to stuff like multiplying the entire

utility function by a constant (cool because ranges of some utility functions may be

different)

-

Independence of irrelevant alternatives (IIA): if you remove all non-optimal agreements and recalculate

the Nash bargaining solution, you’ll end up with the

same offer again; it won’t change

-

Symmetry (SYM): if the agents have the same preferences or are

“symmetric”, then the solution gives them the

same utilities (the kite example above is symmetric along x=y)

-

Envy-freeness: no agent prefers the resources allocated to other

agents

-

An agent is envious of the other agent if it would prefer

the allocation received by that agent.

-

A solution is envy free if no agent prefers the allocation of another agent.

-

This only makes sense in the case of resource allocation

problems.

-

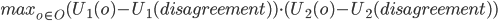

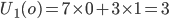

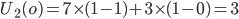

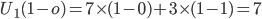

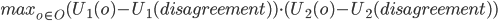

Following the example above, let’s say agent 1 got

chilli cake and agent 2 got strawberry cake.

-

Let’s see their preferences if we reverse all the

allocations and give them their preferred cakes:

-

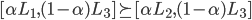

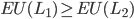

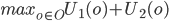

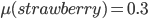

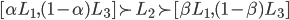

We can see that

is greater than

is greater than  and vice versa for agent 2, therefore both agents are

envious of each other (they both desire what the other has).

and vice versa for agent 2, therefore both agents are

envious of each other (they both desire what the other has).

Part 3

Basic negotiation strategies

-

There’s two kinds of negotiation strategies:

-

This is pretty much all the stuff we learned above.

-

To use a game theoretic strategy, you need to know the

rules of the game.

-

Preferences & beliefs of all the players needs to be

common knowledge.

-

It’s assumed that all players are rational

-

Preferences are encoded into a set of types

-

Closed systems, predetermined interaction, small sized

games

-

No common knowledge about preferences

-

Players don’t have to be rational

-

Agent behaviour is modeled directly

-

Suitable for open, dynamic environments

-

Space of possibilities is very large

-

Basically, we have no idea what’s going to happen,

but we use heuristics to make educated guesses.

-

We’ve had a look at game theoretic strategies

already.

-

So let’s have a look at heuristic strategies.

-

Heuristic strategies are split into two parts:

-

What target utility should I aim for at a particular point

in the negotiation?

-

So given a point in the negotiation, the concession

strategy is in charge of deciding what minimum target

utility value we should strive for in our offers.

-

Basically, it determines how picky we are with offers at a

given moment.

-

Multi-Issue offer producing strategy

-

Once a target utility is established, what offers should I

produce to achieve an agreement where my utility satisfies

the target utility?

-

We also make sure to satisfy Pareto efficiency.

-

This is trivial in the case of single issues.

-

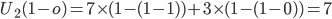

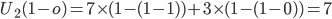

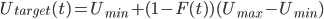

There are two well-known concession strategies:

-

With time dependent tactics, we have a maximum and a

minimum utility value, Umax and Umin.

-

We start off by aiming for the maximum, and we gradually

move our target down to the minimum over time.

-

Formally, we define it like this:

-

Where F(t) is a function that takes in the time and returns

a value between 0 and 1.

-

It gives the fraction of the distance between the best and

the worst offer.

-

So for example, if F(t) = 0, we get Utarget(t) = Umax.

-

If F(t) = 1, we get Umin.

-

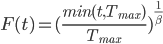

This function is defined as follows:

-

β → 0 - Hard-headed

-

No concessions; stick to the initial offer throughout (hope the opponent will concede)

-

β = 1 - Linear time-dependent concession

-

Linearly approach minimum utility

-

β < 1 - Boulware

-

Initial offer is maintained until just before

deadline

-

β > 1 - Conceder

-

Concedes to minimum utility very quickly

-

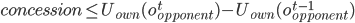

The agent detects the concession the opponent makes during

the previous negotiation round, in terms of increase in its

own utility function.

-

The drop in utility value of the offer the agent will

concede to in the next round is equal to (or less than) the

concession made by the opponent in the previous round:

-

If that made no sense, let me show you an example.

-

Your utility function is U(o) where o is an offer.

-

You make an offer to your opponent, o1, where U(o1) = 0.8.

-

Let’s say your opponent makes a counter-offer o2, and U(o2) = 0.5.

-

The next round, your opponent offers you a slightly better

offer, o3, and U(o3) = 0.6.

-

So we like o3 slightly more than o2; by 0.1, to be exact.

-

That means, for our next offer, we can reduce the utility

value for our initial offer by any value that is equal to or

less than 0.1.

-

For example, our next offer could be o4 where U(o4) = U(o1) - 0.1 = 0.7.

-

Or it could be less: U(o4) = U(o1) - 0.05 = 0.75.

-

Basically, the rate at which our offers go down in our

utility is the same / similar rate to which the

opponent’s offers go up in our utility.

Uncertainty about the opponent

-

Imagine if you knew everything.

-

What would you do with that information?

-

One of the things you could do is give optimal concessions

at any point in a negotiation.

If you really did know everything,

you probably wouldn’t be reading these notes.

-

But the reality is that we don’t know

everything.

-

We don’t know exactly what our opponent likes and

what they’re going to do.

-

However, we can create models of our opponent and guess what they’re like

based on what they’ve done so far.

-

So now we have a target utility from the concession

strategies, and we have a model of the opponent.

-

From those two, how do we create the best offers

that’ll maximise our chance of getting an agreement we

like?

-

We use an offer-producing strategy!

-

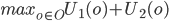

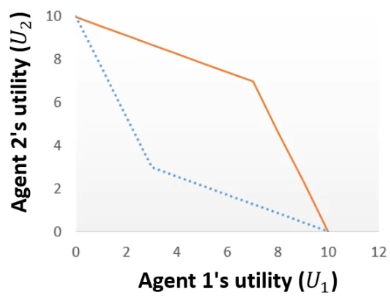

First, we create a graph of the agreement space:

-

Keep in mind that our opponent in this graph, agent 1, is

just a model. An estimation.

-

Now let’s say our target utility is 8:

-

We want any offer in that shaded green region, because

their utility values are all above / equal to 8.

-

However, it would be best to make the offer where the red X is.

-

That’s because it’s the offer that:

-

Satisfies our target utility

-

Is Pareto efficient

-

Maximises the opponent’s utility too, so

they’re more likely to agree with it

-

When the opponent utility is not known, this is called private information.

-

We can guess the opponent’s utility function by

looking at their behaviour.

-

For example, opponents are likely to concede on their least

preferred issues first, so you can guess the weight of that

issue.

Preference uncertainty

-

Sometimes we might not even know our own utility

function.

-

“Huh? But I’m me! Of course I know my own

utility function!”

-

But have a think about where the utility function actually

comes from.

-

The utility function is just a mathematical model of your best interests, used by an agent.

-

The agent has to ask you a bunch of questions to optimise

its utility function, but it’ll never perfectly map

out your interests exactly.

-

This process is called preference elicitation.

-

Asking you questions is called cognitive costs.

-

If there’s a lot of possible outcomes, there’s

a lot of questions the agent has to ask.

-

The agent wants to ask you lots of questions to get the

best utility function that represents you, but it

doesn’t want to bombard you with so many questions

that it’ll take you the rest of your life just to

answer them all.

-

So there’s a trade-off between cognitive costs and

maximising the utility.

-

There’s also some recent work that looks at

negotiation with incomplete information about our own

utility, called preference uncertainty.

-

We’ll go into more detail about preference

uncertainty later.

Utility Theory

-

A game is a mathematical model of interactive decision

making.

-

Basically, agents go head-to-head and interact to try and

achieve the best possible outcome.

-

There are different kinds of best outcomes, like winning,

maximising payoff, etc.

An example of a game. Although the kind of

games we’re reasoning with are a bit simpler.

-

The kind of game we’ll be talking about will go like

this:

-

An agent picks from one of a few finite decisions called choices.

-

When a choice is made, an outcome is reached (a.k.a result of their decision).

-

The outcomes could be anything:

-

Possible outcomes in a game of chess

-

Possible outcomes of negotiations between nations

-

Possible outcomes of an eBay auction

-

Let’s formalise some of this...

-

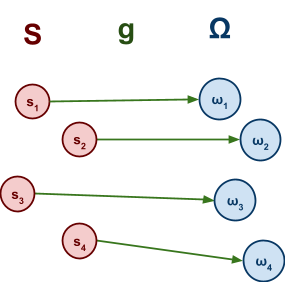

Let

denote the choices available to an agent.

denote the choices available to an agent.

-

Let

denote the outcomes.

denote the outcomes.

-

Let

denote the outcome function, which maps each choice to an outcome.

denote the outcome function, which maps each choice to an outcome.

-

Alright, so in our formalised maths world, we can pick a

choice and get an outcome.

-

But how do we know which choice will give us the best

outcome?

-

To start off, we can model a preference between two outcomes.

-

In other words, given a pair of outcomes

, we can say which one we prefer.

, we can say which one we prefer.

-

There’s actually two ways we can model

preference:

-

We know exactly what the consequences of our choices will

be

-

For every choice, there is exactly one known certain

consequence

-

We don’t know exactly what the consequences will

be

-

For every choice, there are multiple possible consequences,

each with an attached probability

-

For now, we’ll focus on under certainty, because

it’s the easier one.

Decision under certainty

-

A preference is a binary relation

on

on  .

.

-

So we can say

if our agent prefers

if our agent prefers  at least as much as

at least as much as  .

.

-

We assume that it’s:

-

We prefer an outcome as much as itself.

-

for all

for all

-

We have to either prefer one outcome or the other.

-

For all

, either

, either  or

or

-

(either

or

or  has to be true)

has to be true)

-

If we prefer the second outcome over the first one, and the

third outcome over the second one, then we’ll

definitely prefer the third outcome over the first

one.

-

For all

, if

, if  and

and , then

, then

-

When it satisfies all these properties, mathematics calls

that a total preorder.

-

If

and

and  , then we are indifferent between the two.

, then we are indifferent between the two.

-

That means we don’t care which one we pick.

-

We denote that with

.

.

-

If

but not

but not  , then we strictly prefer

, then we strictly prefer  over

over  .

.

-

That means we definitely prefer

over

over  .

.

-

We denote that with

.

.

-

This outcome function is an ordinal preference.

-

We can also define something with cardinal preference.

-

We can define a utility function, which maps an outcome to a numerical utility value.

-

A utility function

over

over  is said to represent a preference relation

is said to represent a preference relation  iff, for all

iff, for all  :

:

iff

iff

-

What does that mean?

-

With a preference relation, you can check if you prefer one

outcome over another.

-

You can also do that with a utility function, by checking

if the output of an outcome is greater than another.

-

So if you have a utility function that prefers all the same

outcomes as a preference relation, you can say that utility

function “represents” that preference

relation.

-

Given a finite set

, for every preference relation

, for every preference relation  on

on  there exists a utility function

there exists a utility function  that represents it (basically, there’s always a utility function for

every preference relation).

that represents it (basically, there’s always a utility function for

every preference relation).

-

Keep in mind that agents do not choose outcomes based on

the numerical value of the utility function; they choose it

via their preference.

-

It doesn’t matter what the value is; the range could

be between 1 to 10, or 1 to a billion.

-

We’re only using numerical values for adopting

techniques for mathematical models, but keep in mind that

only the relative order of the values is important.

-

Because the ranges of utility values aren’t defined,

utilities of different agents cannot be compared.

-

Also remember that utility values are NOT money!

-

The difference is that with money, the value matters.

-

You’d say that going from £1 to £1,000 is

way better than going from £1 to £10,

right?

-

But if they were utility values, they’d both be

equally as enticing, because it only matters that one number

is bigger than the other.

Utility values are not legal tender and cannot be used in

shops.

-

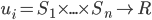

We can formally represent decision-making as a tuple:

-

and

and  are the set of choices and consequences

are the set of choices and consequences

-

is an outcome function that specifies the

consequences of each choice

is an outcome function that specifies the

consequences of each choice

-

is the agent’s preference relation

is the agent’s preference relation

-

is the utility function representing

is the utility function representing

-

A rational decision maker is one that makes the best

possible choice.

-

That means the agent makes the choice that maximises their

utility:

where

where

-

Don’t worry so much about the syntax; it just means

we’re picking the choice that best maximises our

utility function.

-

Because we’re using numerical utilities, we can

express this decision problem as an optimisation

problem.

Decision under uncertainty

-

When there is uncertainty, we don’t know what outcome

will come from a choice.

-

When we make a choice, there are many possible outcomes,

each with a probability of occurring.

-

Therefore a simple preference relation over two outcomes is

not enough.

-

We need more!

-

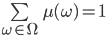

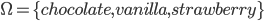

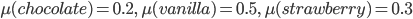

To represent the uncertainty of an outcome, we use a probability distribution.

-

A probability distribution over a non-empty set of outcomes

is a function:

is a function:

-

So what does this mean?

-

It means that every outcome has a probability between 0 to

1.

-

If you add up all the probabilities of each outcome,

it’ll sum up to 1 (naturally).

-

This whole concept is called a lottery.

-

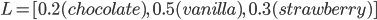

Lotteries are denoted by

.

.

-

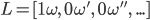

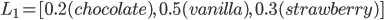

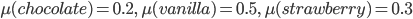

For example, let’s say you sent a kid over to buy ice

cream.

-

You tell the kid they can pick whatever flavour they

like.

-

The probability distribution over what flavour they pick

might look like:

-

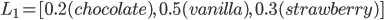

As a lottery, this can be written as:

Mmmm, ice cream 🍨

-

When you think about it, a lottery is a generalised

outcome.

-

If

is the outcome of a choice

is the outcome of a choice  , this is the same as a lottery where

, this is the same as a lottery where  and, for all

and, for all  such that

such that  ,

,  (basically

(basically  has a chance of 1 and all other outcomes have a

chance of 0).

has a chance of 1 and all other outcomes have a

chance of 0).

-

That lottery would be

or just

or just  .

.

-

A lottery like that is called a degenerate lottery.

-

In the same way we can define a preference relation

over a set of outcomes

over a set of outcomes  ...

...

-

... we can define a preference relation

over a set of lotteries

over a set of lotteries  .

.

-

So say we could pick from between lottery L1 or lottery L2.

-

If we preferred L1 over L2, we could arbitrarily define

.

.

-

We can also do the same with a utility function.

-

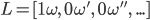

A compound lottery is a lottery of lotteries.

-

Given a set of lotteries

, a compound lottery is a probability distribution

, a compound lottery is a probability distribution  over

over  and is given by:

and is given by:

-

Where

.

.

-

You want another example involving ice cream?

Alright!

-

Let’s say you send the kid to get some ice cream

again.

-

The kid has no money. You’re 70% sure it’s

£1 a scoop, but you’re not totally sure.

-

You give the kid £1 anyway and send them to the ice

cream van.

-

If a scoop is £1, the ice cream lottery takes effect:

-

If the scoop is more than £1, the kid can’t get

anything, so this degenerate lottery takes effect:

-

As a whole, this is all one big compound lottery:

-

There’s another example about weather in the slides,

but I wanted to expand on the previous example, and I also

like ice cream.

-

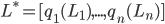

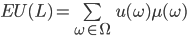

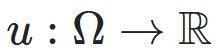

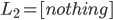

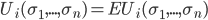

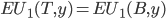

The expected utility (EU) of a lottery

is the average utility we can expect from that

lottery.

is the average utility we can expect from that

lottery.

-

It can be calculated like this:

-

is the set of outcomes

is the set of outcomes

-

is the utility function

is the utility function

-

is the lottery

is the lottery

-

is the probability distribution of the lottery

is the probability distribution of the lottery

-

What’s that? You want another example involving ice cream? Sure!

-

Let’s define a utility for each ice cream

flavour:

-

Yeah yeah, you can argue about it in the Google docs

comments.

-

Just a reminder, the probability distribution of the

flavours go like this:

-

So we can calculate the EU of lottery L like so:

-

+

+ +

+

-

To simplify this, EU(L) = 2.1.

-

That’s great, but who cares?

-

Now, I know what you’re thinking.

-

“What’s the relation between preferences over

lotteries and expected utility?”

-

What? You weren’t thinking that?

-

Well, von Neumann and Morgenstern were definitely thinking

that.

-

A preference relation over outcomes is ordinal, and utility functions are cardinal.

-

A preference relation over lotteries is ordinal, and expected utilities are cardinal.

-

So you could try to think of things like this:

|

|

Outcomes

|

Lotteries

|

|

Ordinal

|

Preference relation over outcomes

|

Preference relation over lotteries

|

|

Cardinal

|

Utility functions

|

Expected utilities

|

-

Utility functions can “represent” a preference

relation over outcomes.

-

So can an expected utility “represent” a

preference relation over lotteries?

-

Not exactly... there’s slightly more to it.

-

A preference relation over lotteries is arbitrary and

vague.

-

We can just say

if we prefer L1 to L2 and call it a day.

if we prefer L1 to L2 and call it a day.

-

However, expected utilities (EUs) are calculated. It’s an actual value we can derive (as opposed to utility functions, where the value

doesn’t matter).

-

Therefore these two aren’t really the same things in

the same way that utility functions can

“represent” a preference relation over

outcomes.

-

Now don’t worry, von Neumann and Morgensten already

found a relation between preference relations and expected

utilities.

-

But we must define two axioms that preferences can satisfy

first:

-

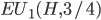

IFF

IFF

-

Don’t worry so much if these don’t make sense.

It’s only important that you know they exist.

-

The von Neumann - Morgenstern theorem goes like this:

-

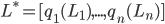

Let

be the set of compound lotteries over a finite set

be the set of compound lotteries over a finite set  and let

and let  be a preference relation over

be a preference relation over  . Then, the following are equivalent:

. Then, the following are equivalent:

-

satisfies continuity and independence.

satisfies continuity and independence.

-

There exists a utility function over

such that for all

such that for all  :

:

-

IFF

IFF

-

English please?

-

You can look at this in two ways:

-

If

satisfies continuity and independence, then that means

satisfies continuity and independence, then that means  when

when

-

If

when

when  , then

, then  satisfies continuity and independence

satisfies continuity and independence

-

If that still doesn’t make sense, don’t worry;

just know that there is a connection between preference

relations over lotteries and expected utilities.

Pure Strategy Games

-

Now, we’re going to be talking about strategic-form

non-cooperative games.

-

Strategic-form non-cooperative games are games where:

-

Each player has different choices called strategies.

-

Players act alone, do not make joint decisions, and pursue

their own goals.

-

Players simultaneously choose a strategy, and these

combined strategies result in one of many outcomes.

-

Each player has their own preference over these

outcomes.

-

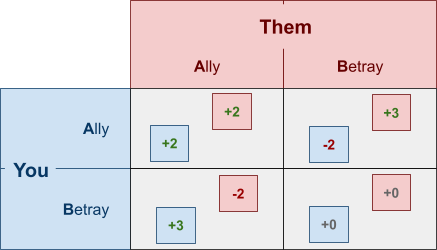

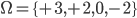

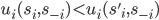

You are drugged one day, and you wake up in a

warehouse.

-

You are wearing an electronic bracelet with the number

“3” displayed on it.

-

If you can get the number to 9 or above, it comes off and you can escape.

-

If the number gets to 0 or below, you are killed.

-

To change the number on your bracelet, you need to play the

AB game with others in the same situation as you.

-

When you play the AB game with someone, you both make two

choices each, independently:

-

If you both pick “ally”, you both get 2

points.

-

If you pick “betray” and the other picks

“ally”, you get 3 points and the other loses 2

points (and vice versa).

-

If you both pick “betray”, nobody gets any

points.

-

Here, there are four possible outcomes for each

player:

-

We can define a preference relation

for each player

for each player  such that:

such that:

-

It’s all like plus and minus and arghhh it’s

confusing 🥴

-

It’d be easier if we assigned utility values to each

outcome, so we can look and go “bigger number means

better”

Strategic-form Games

-

Before we go on further, let’s formally define

strategic form games a bit more.

-

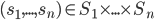

A strategic-form game is a tuple:

-

is a finite set of players

is a finite set of players

-

is a finite set of strategies for each player

is a finite set of strategies for each player

-

is a utility function for player

is a utility function for player  (

( is the set of reals)

is the set of reals)

-

A strategy profile or strategy combination is a tuple:

-

It’s basically one of the possible combinations that

players can pick their strategies.

-

They can also be denoted by:

-

... to highlight the strategy of player

.

.

-

denotes the strategy combination without player

denotes the strategy combination without player  :

:

-

is the set of all strategy combinations of the form

is the set of all strategy combinations of the form  excluding the player

excluding the player  .

.

-

This syntax seems weird, but it’s just so we can

focus on player

.

.

-

Throughout this section, we assume that all players know

each other’s strategies and utilities.

-

We also assume we’re all rational

decision-makers.

-

A rational decision-maker is one that always chooses the option that maximises their

utility.

-

With this in mind, how do we predict the outcome of these

games?

-

We can define solution concepts, i.e. criteria that will

allow us to predict solutions of a game under the

assumptions we make about the players’

behaviour.

Strictly dominated strategies

-

So what sort of options will a player choose?

-

What if one of the choices always yielded worse results, no

matter what happened?

-

You’d never pick that choice, right?

-

Therefore, we can rule out that choice.

-

That’s the main concept of strictly dominated

strategies.

-

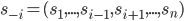

A strategy

of player

of player  is strictly dominated if there exists another strategy

is strictly dominated if there exists another strategy  of player

of player  such that for each strategy vector

such that for each strategy vector  of the other players,

of the other players,

-

In this case, we can say that

is strictly dominated by

is strictly dominated by  .

.

-

What this basically says is that if you pick strategy

over

over  , then no matter what the opponent picks, you’re

gonna be missing out on some extra utility you

could’ve gotten had you picked

, then no matter what the opponent picks, you’re

gonna be missing out on some extra utility you

could’ve gotten had you picked  .

.

-

Because we’re assuming that players are rational,

they’ll never pick strictly dominated strategies, so

we can rule them out.

-

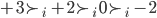

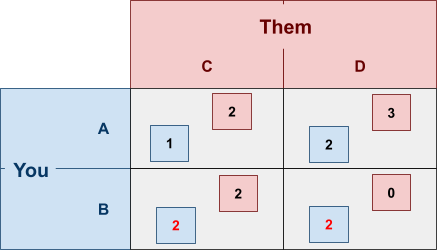

Let’s have a quick example:

-

Take a look at your “betray” strategy.

-

If you pick “betray” and they pick

“ally”, you get an extra +2 utility that you

wouldn’t have gotten had you picked

“ally”.

-

If you pick “betray” and they pick

“betray”, you get an extra +5 utility that you

wouldn’t have gotten had you picked

“ally”.

-

So no matter what, when you pick “betray”,

you’re getting more utility than

“ally”.

-

This means “ally” is strictly dominated by

“betray”.

-

Therefore we can eliminate the “ally”

strategy.

-

This is called iterated elimination of strictly dominated strategies.

-

We can eliminate these strategies in any order; it

doesn’t matter.

-

So, according to this model, there’s really no reason

to pick “ally”.

-

Although, I don’t think you’d have many friends

if you followed models like these.

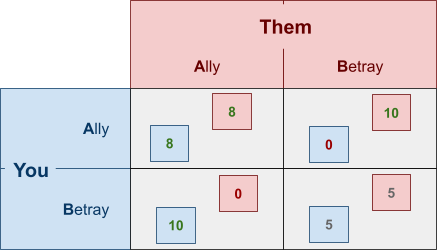

Weakly dominated strategies

-

Sometimes, there are no strictly dominated

strategies.

-

What do we do then?

-

We can eliminate strategies where you either:

-

Miss out on utility had you picked another strategy

-

The utility you get is no different had you picked the

other strategy

-

That’s the main concept of a weakly dominated

strategy.

-

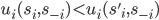

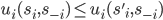

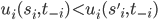

A strategy

of player

of player  is weakly dominated if there exists another strategy

is weakly dominated if there exists another strategy  of player

of player  such that:

such that:

-

For every strategy vector

of the other players,

of the other players,

-

There exists a strategy vector

of the other players such that,

of the other players such that,

-

In this case, we can say that

is weakly dominated by

is weakly dominated by  .

.

-

What this basically says is that

is at least as good as strategy

is at least as good as strategy  .

.

-

When you pick

over

over  , depending on what the opponent picks, it will either not

make any difference between picking

, depending on what the opponent picks, it will either not

make any difference between picking  or

or  , or you’ll get more utility by picking

, or you’ll get more utility by picking  over

over  .

.

-

However, for it to be weakly dominated, there needs to be

at least one strategy profile where picking

over

over  yields you more utility (so indifferent strategies can’t weakly dominate each

other).

yields you more utility (so indifferent strategies can’t weakly dominate each

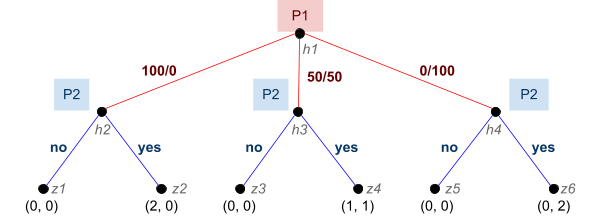

other).

-

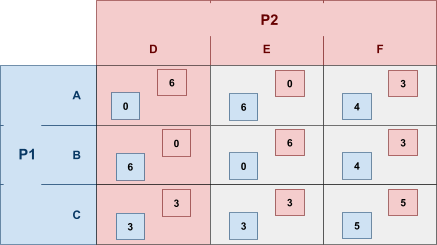

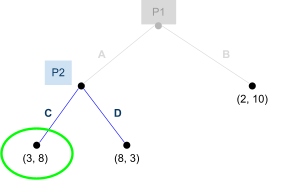

Let’s give another example:

-

Take a look at your “B” strategy.

-

If you pick “B” and they pick “C”,

you’d get an extra +1 utility by picking

“B” instead of “A”.

-

If you pick “B” and they pick “D”,

it would’ve made no difference had you picked

“B” or “A”.

-

This makes “A” weakly dominated by

“B”.

-

We can eliminate weakly dominated strategies, and that’s

called iterated elimination of weakly dominated strategies.

-

But there’s two things to remember:

-

The order of elimination matters; you could end up with

different results!

-

You may eliminate some Nash equilibria from the game (more on this later).

Pure Nash Equilibria

-

Sometimes, there are no strongly / weakly dominated

strategies to eliminate.

-

We can use a different concept, though.

-

The concept of stability: Nash Equilibrium!

-

But first, we need to define the concept of a “best

response”.

-

Like the name suggests, a best response is the optimal strategy a player can take that maximises

their utility, when used in response to the other player’s

strategy.

-

Formally, let

be a strategy vector for all the players not

including

be a strategy vector for all the players not

including  . Player

. Player  ’s strategy

’s strategy  is called a best response to

is called a best response to  if:

if:

-

-

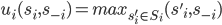

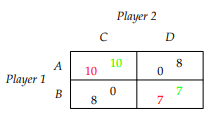

Take this example: let’s say you’re player 1,

and player 2 just picked D.

-

What would be your best response?

-

If you look at the possible

strategies:

-

Picking A would give you a utility of 0.

-

Picking B would give you a utility of 6.

-

Picking C would give you a utility of 3.

-

So picking B would be the best response to P2’s

choice of D, because it’ll yield you the highest

utility.

-

So now we know what a ‘best response’ is,

what’s a Nash equilibrium?

-

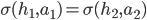

A Nash equilibrium is a situation of stability in which both responses

are best responses to each other.

-

Formally, a strategy combination

is a Nash equilibrium if

is a Nash equilibrium if  is a best response to

is a best response to  for every player

for every player  .

.

-

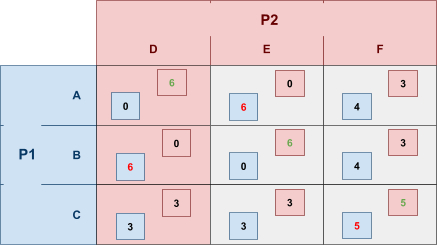

To illustrate this, I’m going to show the table

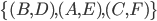

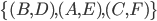

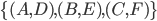

again, but each green number is P2’s best response to P1, and each red number is P1’s best response to P2.

-

We can see that (C,F) are the best responses to each

other!

-

Therefore, (C,F) is a Nash equilibrium.

-

This means if P1 picks C and P2 picks F, that means none of

them will benefit from changing their choice, because

they’ve already picked the best responses to each

other.

-

How do we find these Nash equilibria?

-

There’s two main ways: best response intersection,

and iterated elimination.

-

Let’s try the first for now: we find the best

responses of both players, and we find the

intersection.

-

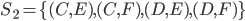

Here’s the best responses for both players:

-

For player 1:

-

For player 2:

-

Now, we find the intersection of these two sets, and we

have our Nash equilibria.

-

is shared between the two, so that’s our Nash

equilibrium for this example.

is shared between the two, so that’s our Nash

equilibrium for this example.

-

It is possible that there might be multiple Nash

equilibria.

-

A game with multiple Nash equilibria are called Coordination games.

-

Equilibria arise when players coordinate on the same

strategy.

-

In addition, some games might not have any Nash equilibria,

like the matching pennies game.

-

Now, we’ll go over that other strategy for finding

Nash equilibria: iterated elimination.

-

If you perform iterated elimination of strictly dominated

strategies and end up with one strategy profile to pick

from, then that profile is a Nash equilibrium (and the only one in that game).

-

Eliminating strictly dominated strategies does not eliminate equilibria...

-

... but eliminating weakly dominated strategies might.

-

To put it more formally, given a game G, let G* be the game

obtained by iterated elimination of weakly dominated

strategies.

-

The set of equilibria of G* is a subset of the set of

equilibria of G.

-

If you eliminate weakly dominated strategies, you may

eliminate none, some, or all of the Nash equilibria in the

game.

-

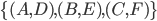

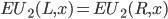

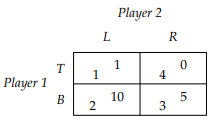

Here’s an example:

-

The equilibria are

and

and  .

.

-

In this example, we can eliminate L for being weak to R,

and then eliminate B for being weak to T.

-

But... oops! We’ve eliminated the B row and L column.

That means the Nash equilibrium

is no longer present.

is no longer present.

-

So remember, be careful with elimination. If you’re

looking for Nash equilibria, it’s good to eliminate

strictly dominated strategies, and then calculate the best

responses for each player and then intersect.

Mixed Strategy Games

-

Before, in our pure strategic-form games, players could only pick one strategy

and that’s it.

-

But what if we added a probability to each strategy?

-

What if you had, say, a 50% chance of picking one strategy

and 50% chance of picking another one?

-

That is the core concept of mixed strategy games!

-

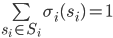

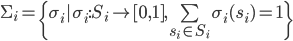

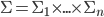

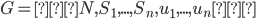

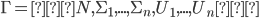

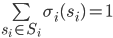

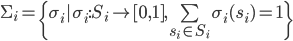

More formally, in a strategic-form game, a mixed strategy

for player

for player  is a probability distribution over the set of

strategies

is a probability distribution over the set of

strategies  , i.e. a function

, i.e. a function  such that

such that

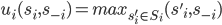

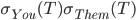

-

In other words, each strategy

has a probability

has a probability  , which is the probability that player

, which is the probability that player  will play it. All strategy probabilities a player has

must add up to 1 (naturally).

will play it. All strategy probabilities a player has

must add up to 1 (naturally).

-

From now on, we’ll call these mixed strategies, and

everything we had before we’ll call pure strategies.

-

Now, if you adopt a mixed strategy, you’ll be less

predictable and keep your opponents guessing.

-

But when we had pure strategies, we could calculate Nash

equilibria and stuff. How do we do that here? We’ll

get on to that in a moment!

-

First, we need to introduce expected utility.

-

The expected utility of a mixed strategy is how much estimated utility you

would get on average if you played that strategy.

-

It’s calculated using a weighted sum of pure

utilities and probabilities.

-

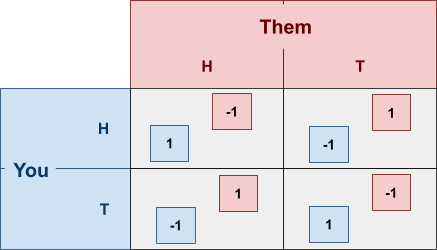

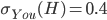

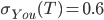

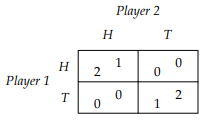

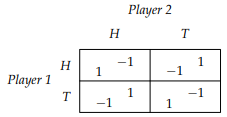

Here’s an example using Matching Pennies:

-

Let’s suppose that you play heads with probability of

0.4, meaning:

-

(because there’s only two options and 1 - 0.4 =

0.6)

(because there’s only two options and 1 - 0.4 =

0.6)

-

... and our opponent plays heads with probability of

0.3:

-

What is our expected utility?

-

We need to do a weighted sum of every possibility that

could happen in this game.

-

A weighted sum of probabilities and utilities.

- Like this:

-

You pick heads and they pick heads

You pick heads and they pick heads

-

You pick heads and they pick tails

You pick heads and they pick tails

-

You pick tails and they pick heads

You pick tails and they pick heads

-

You pick tails and they pick tails

You pick tails and they pick tails

-

The expected utility for player 2 is calculated in a

similar way.

-

Now to put all of this into maths syntax:

-

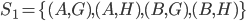

In a strategic-form game, for each player

, let

, let  be the set of all mixed strategies over

be the set of all mixed strategies over  , i.e.

, i.e.

-

Let

-

We call every element

a mixed strategy profile or mixed strategy combination.

a mixed strategy profile or mixed strategy combination.

-

In other words,

contains every possible probabilistic strategy that

each player could adopt in the game.

contains every possible probabilistic strategy that

each player could adopt in the game.

-

Like in pure strategies, we use

to represent the strategies of everyone except player

to represent the strategies of everyone except player  .

.

-

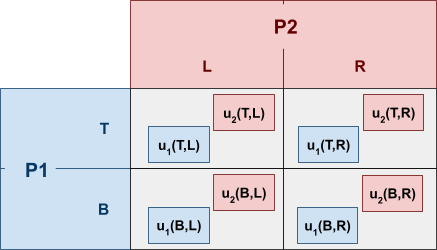

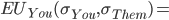

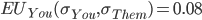

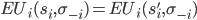

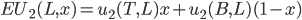

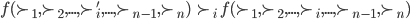

Given a mixed strategy profile

, the expected utility of player

, the expected utility of player  is given by:

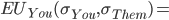

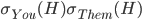

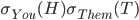

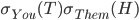

is given by:

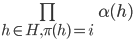

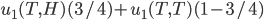

![]()

-

Let

be a strategic-form game. The mixed extension of G is the game

be a strategic-form game. The mixed extension of G is the game

- Where:

-

Each

is the set of mixed strategies of player

is the set of mixed strategies of player  over

over

-

Each

(set of reals) is a payoff function that associates with each mixed

strategy combination

(set of reals) is a payoff function that associates with each mixed

strategy combination  its expected utility (in other words,

its expected utility (in other words,  ).

).

-

Oof, that’s a lot of syntax...

-

That’s alright, though. As long as you understand the

core concepts.

I wonder what the mixed Nash equilibrium of poker is?

(more on mixed Nash equilibrium later!)

-

Wait, what’s a mixed extension? We mentioned that

above.

-

A mixed extension is a “new” game built on top of a

strategic-form game with only pure strategies.

-

When you think about it, mixed games are actually just

generalisations of pure games.

-

Pure games are just special forms of mixed games: in pure

games, there’s one strategy for each player with

probability of 1, and every other strategy is 0.

-

So if we have a pure game, we can actually treat it like a

mixed game, with probabilities of 0 and 1. That’s what

a ‘mixed extension’ means.

Mixed Nash Equilibria

-

To introduce the concept of Nash equilibria to mixed games,

we need to define the concept of best response to mixed

games first.

-

Let

be a strategic-form game and let

be a strategic-form game and let  be its mixed extension. Let

be its mixed extension. Let  be a mixed strategy vector for all the players not

including

be a mixed strategy vector for all the players not

including  . Player

. Player  ’s mixed strategy

’s mixed strategy  is called a best response to

is called a best response to  if

if

-

In other words, it’s a best response if there’s

no other mixed strategy we can pick that’ll increase

our expected utility.

-

It’s the same as the best response in pure games,

except it’s about expected utility and mixed

strategies, and not normal utility and pure

strategies.

-

Now, defining a mixed Nash equilibrium is easy.

-

A mixed strategy combination

is a mixed strategy Nash equilibrium if

is a mixed strategy Nash equilibrium if  is a best response to

is a best response to  for every player

for every player  .

.

-

Like in pure games, a mixed strategy Nash equilibrium is

where both responses are best responses to each other (but this time, it’s mixed).

-

We’ll use the term “mixed Nash equilibrium” to refer to this, and “pure Nash

equilibrium” to refer to the Nash equilibrium defined

in “Pure Strategy Games”.

-

Naturally, since pure games are special kinds of mixed

games, pure Nash equilibria are special kinds of mixed Nash

equilibria.

-

In our matching pennies game, there is a unique mixed Nash

equilibrium, and that’s to pick heads 50% of the time,

and tails the other times, for both players:

Hype for competitive Matching Pennies at the next

Olympics

-

In pure games, pure Nash equilibria do not always exist.

Sometimes there are none.

-

However, in a mixed game, there is always mixed strategy

Nash equilibria.

-

It’s pretty hard to find them, though. It’s PPAD-complete (Daskalakis, Goldberg, Papadimitriou, 2009)!

-

It’s not so hard to find them in 2x2 games, though.

That’s what we’ll focus on.

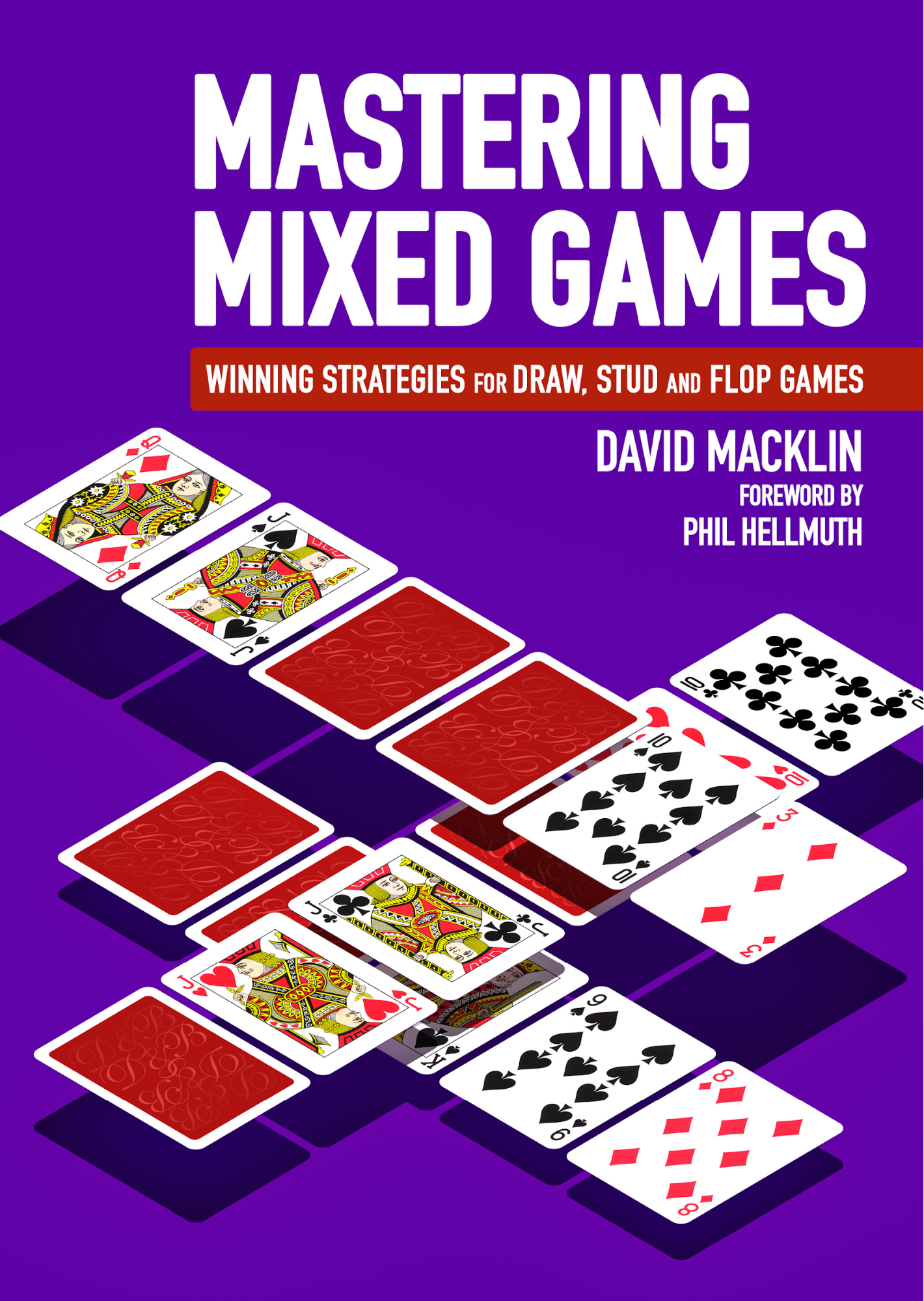

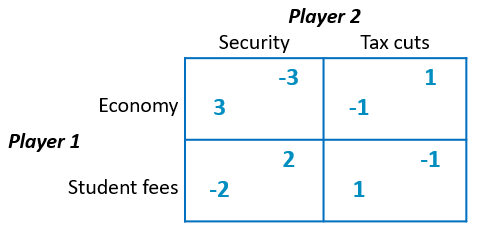

Computing Mixed Nash Equilibria

-

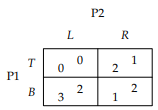

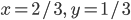

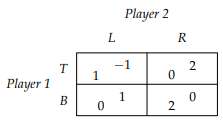

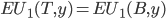

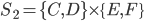

First, let’s define a generic 2x2 mixed game.

-

The probabilities of player 1 (P1) are:

-

Picking T:

-

Picking B:

-

The probabilities of player 2 (P2) are:

-

Picking L:

-

Picking R:

-

Both

and

and  are in the range

are in the range  , as a probability normally would.

, as a probability normally would.

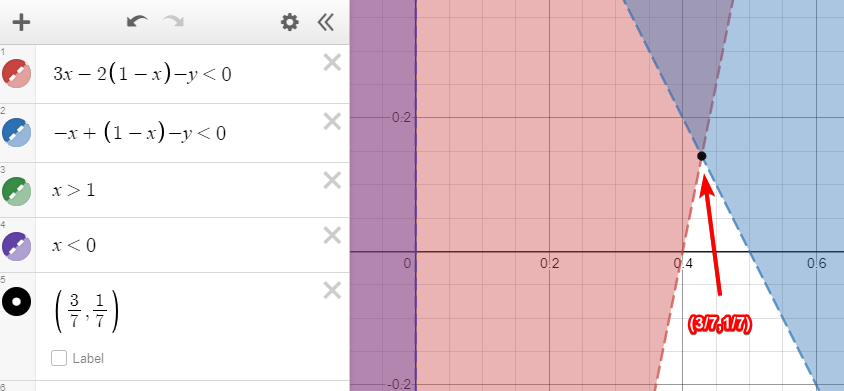

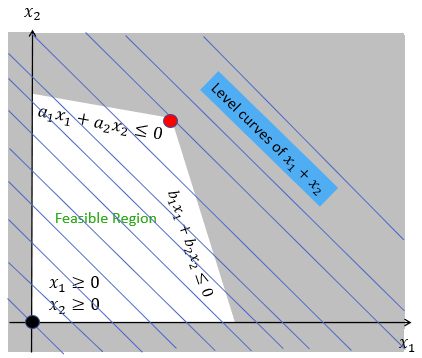

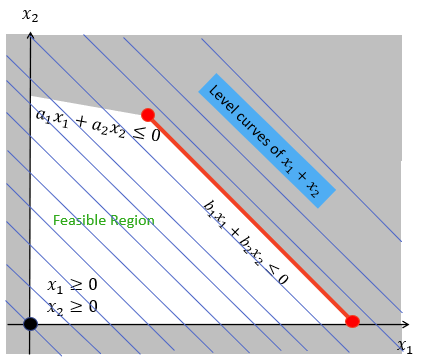

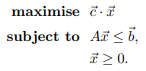

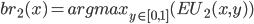

Best Response Method

-

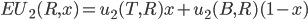

This is the first method of finding Nash equilibria.

-

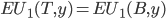

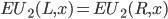

What we do is we construct functions for each

player’s best response:

-

The intersection between these functions will give us our

mixed Nash equilibria.

-

Let’s walk through an example while I explain:

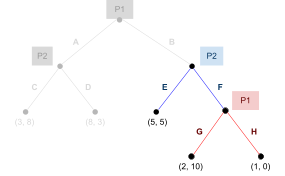

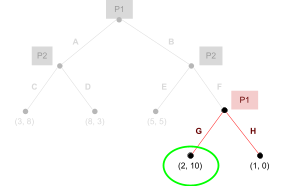

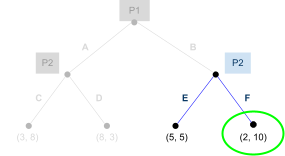

-

Here, we have 2 pure Nash equilibria

and

and  , but also a mixed Nash equilibrium that we want to

find.

, but also a mixed Nash equilibrium that we want to

find.

-

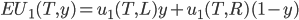

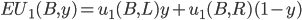

Let’s start with player 1.

-

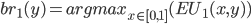

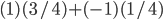

Player 1’s best response is:

-

What does “argmax” mean?

-

It stands for “arguments of the maxima”, and it means its selecting a value of

that maximises

that maximises  , and returning that instead.

, and returning that instead.

-

So you can think of

as the “best value of

as the “best value of  that maximises our expected utility”.

that maximises our expected utility”.

-

So how do we get the best values of

?

?

-

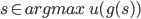

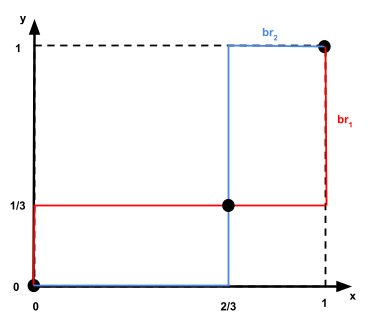

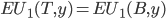

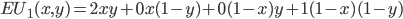

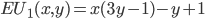

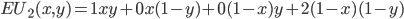

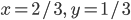

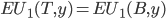

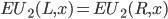

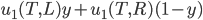

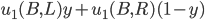

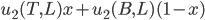

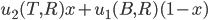

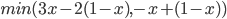

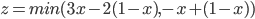

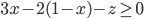

First, let’s simplify