Evolution of Complexity

Matthew Barnes

Recorded Lectures 3

Introduction 5

Evolutionary Algorithms 6

Representation of Individuals 8

Fitness Function 8

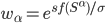

Selection 9

Variation Operators 11

Variations on a theme 13

Evolution History 14

Pre-Darwinian Evolution 14

Natural Selection 15

The Modern Synthesis 18

Fitness Landscapes 19

Definitions 19

Intuition 21

Properties 22

Epistasis 23

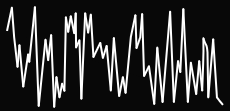

Examples: Synthetic Landscapes 25

Functions of unitation 25

NK landscapes 25

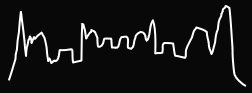

Royal Roads and Staircases 28

Long paths 29

Examples: Biological Landscapes 31

Complications 32

Sex 36

Linkage Equilibrium 37

Fisher / Muller Hypothesis 40

Muller’s Ratchet 41

Crossover in EAs 42

Focussing Variation 42

Schemata and Building Blocks 46

Schemata Theorem 46

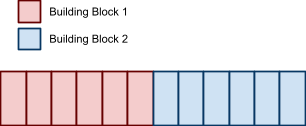

Building Block Hypothesis 46

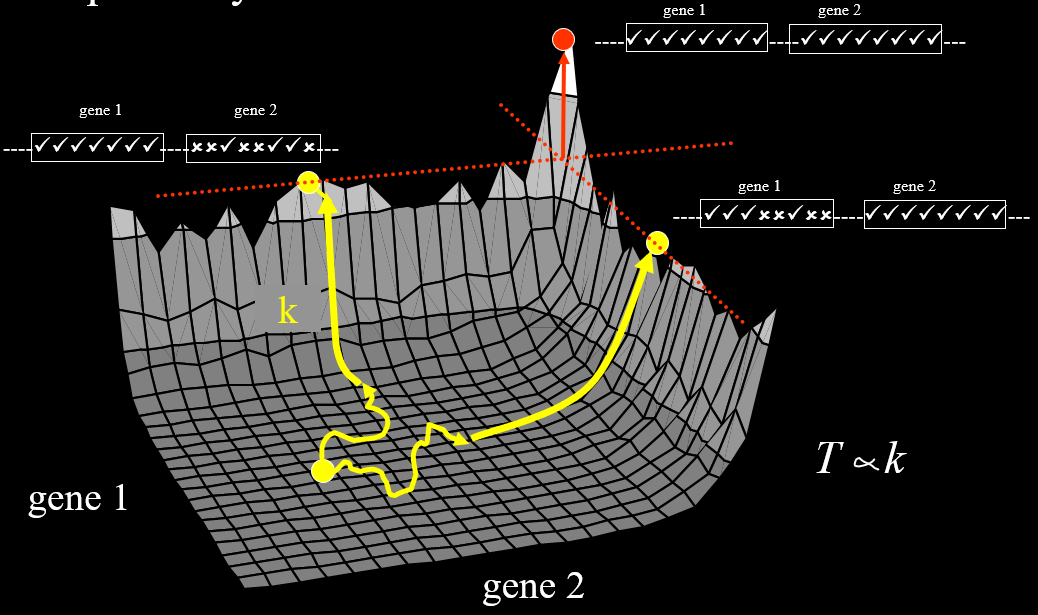

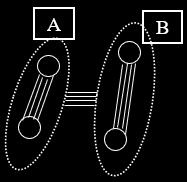

Two-Module Model 48

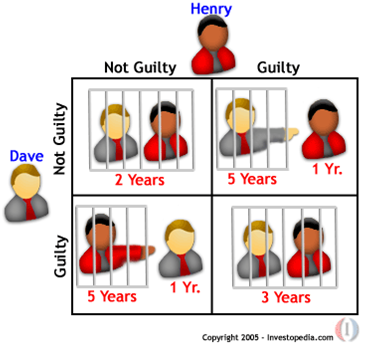

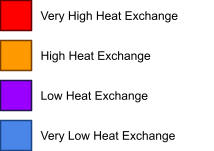

Coevolution 51

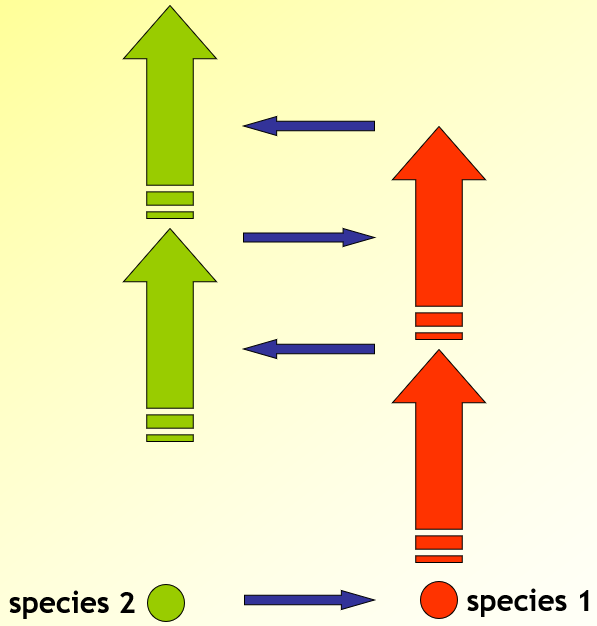

Pairwise vs Diffuse 53

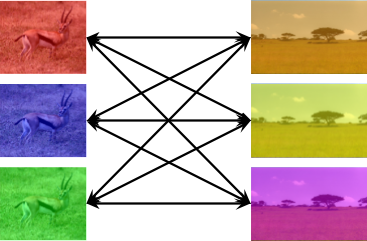

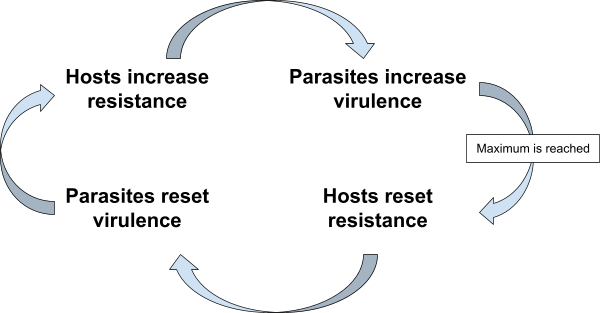

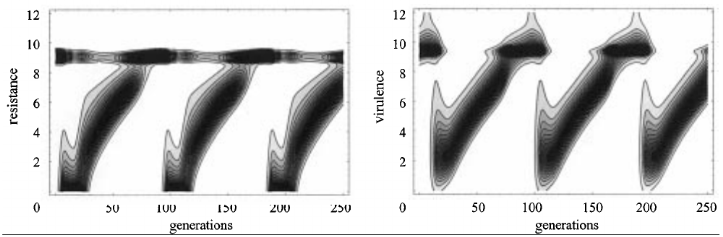

Sasaki & Godfray Model 54

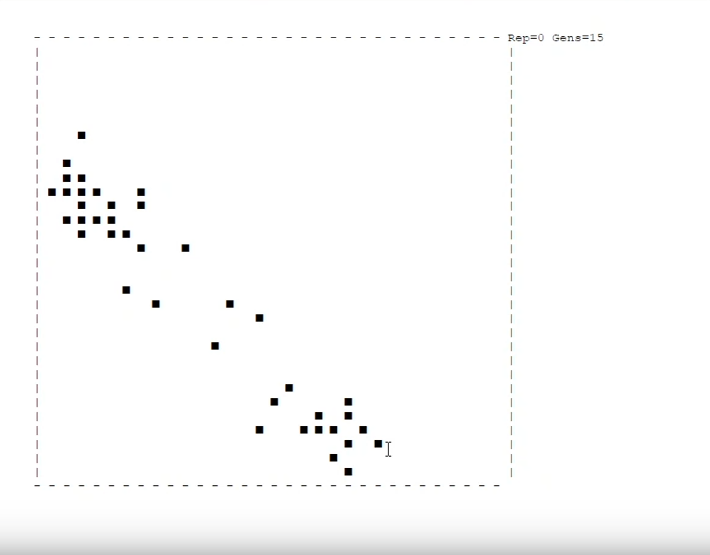

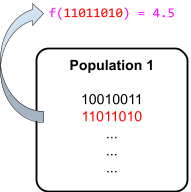

Coevolution in GAs 55

Why use Coevolution 57

Problems with Coevolution 59

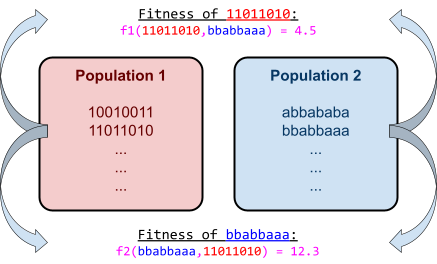

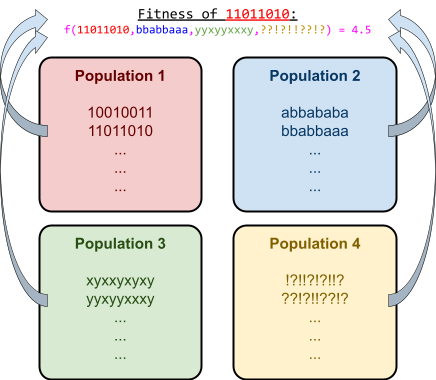

Cooperative Coevolution 60

What is Cooperative Coevolution 61

Competitive vs Cooperative 61

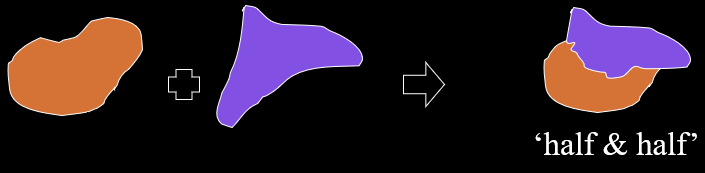

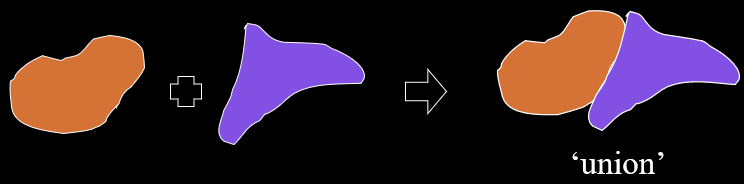

Divide and Conquer 63

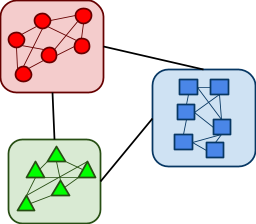

Modularity 64

Types of modularity 64

Concepts of modularity 66

Examples 67

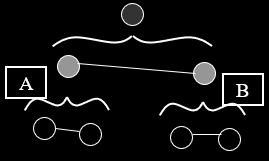

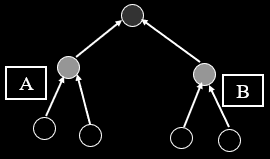

Simon’s “nearly-decomposable” systems 67

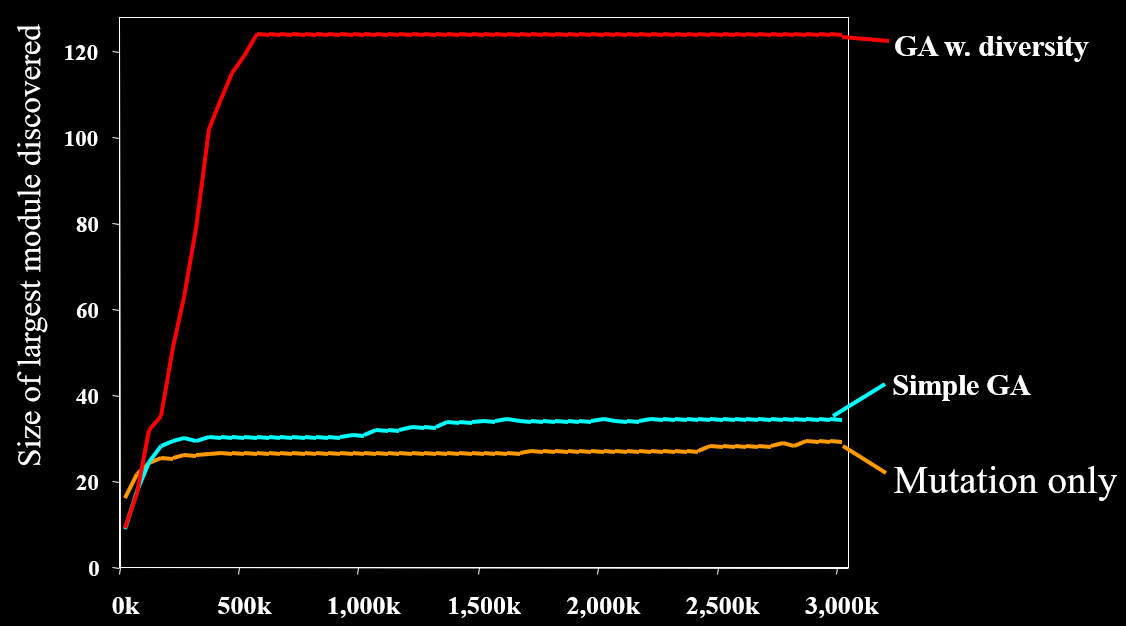

Modularity in EC 70

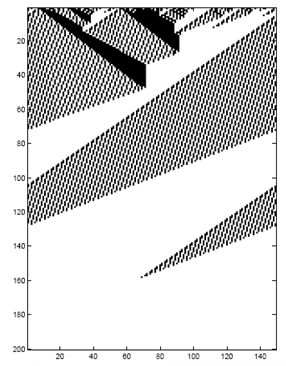

Hierarchical IFF as a Dynamic Landscape 71

Representations 76

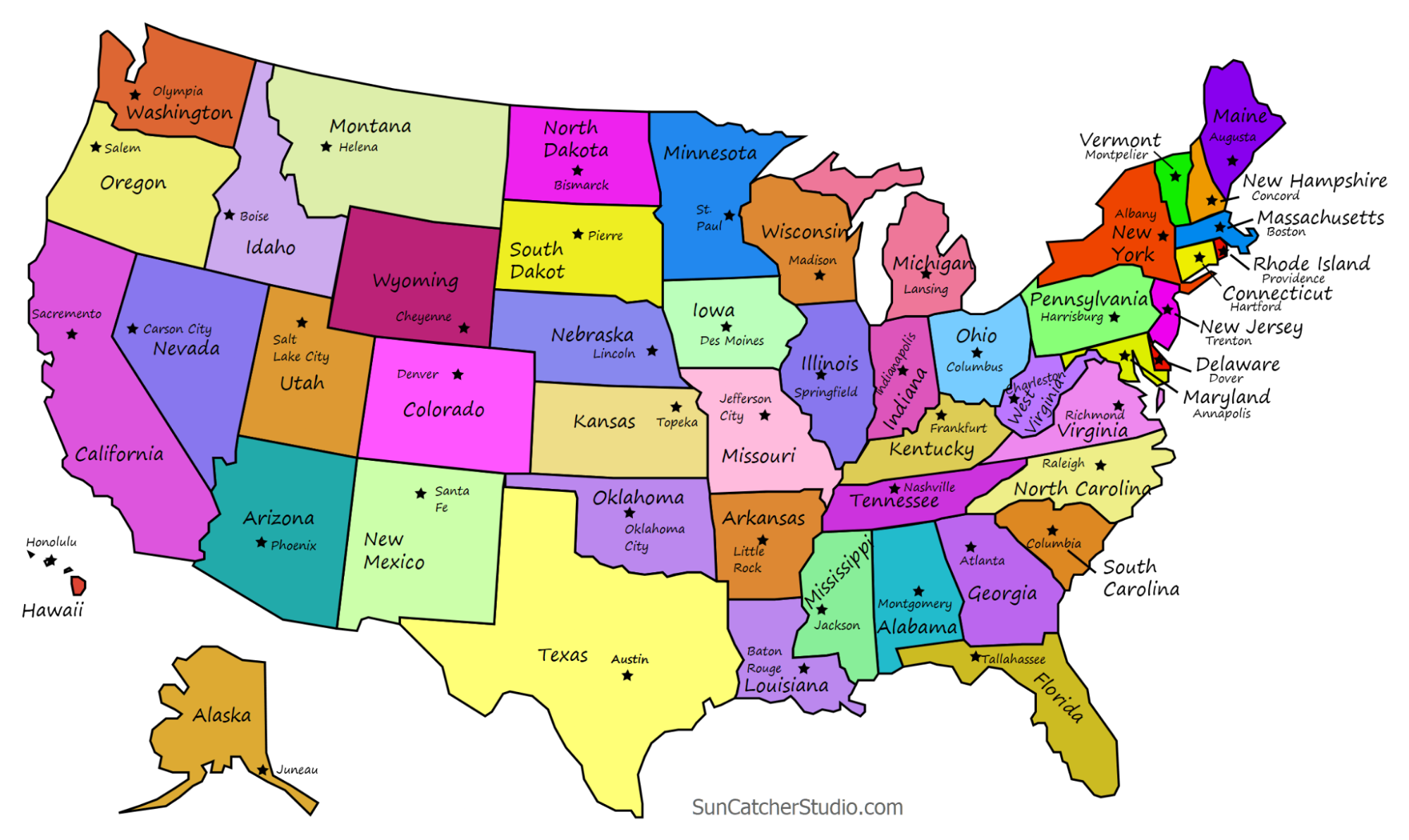

Graphs (TSP) 77

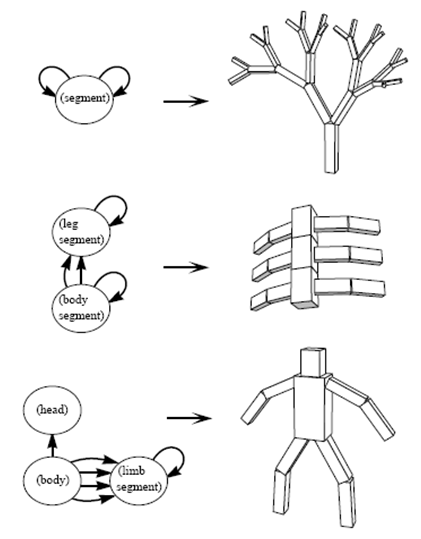

Structured Representations 80

Variable-size Parameter Sets 81

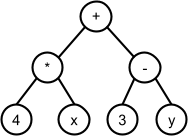

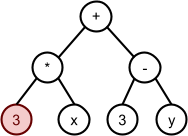

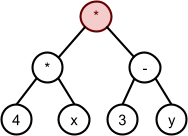

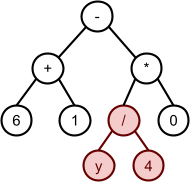

Trees 82

Grammar Based Representations 83

Cellular Encoding 84

Turtle Graphics 85

Advanced Representations 86

Artificial Life 89

Concept, Motivation, Definitions 89

Examples 90

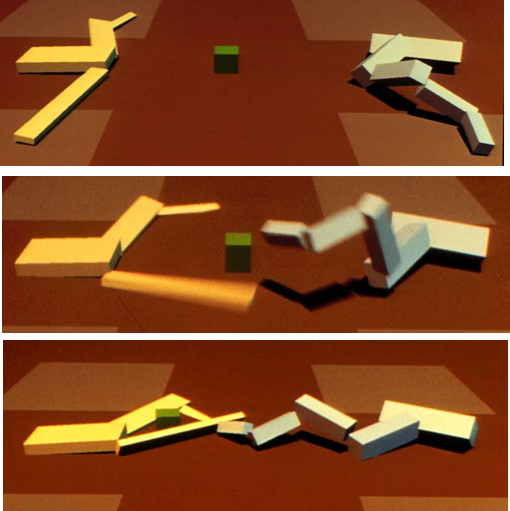

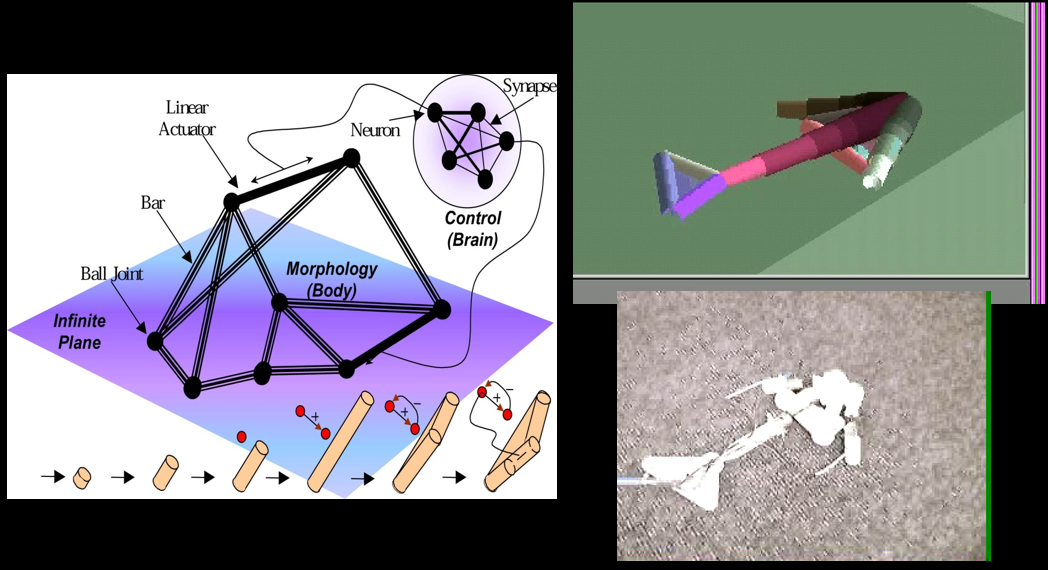

Karl Sims Creatures 90

Framsticks 91

Darwin Pond 91

Cellular Automata Based 92

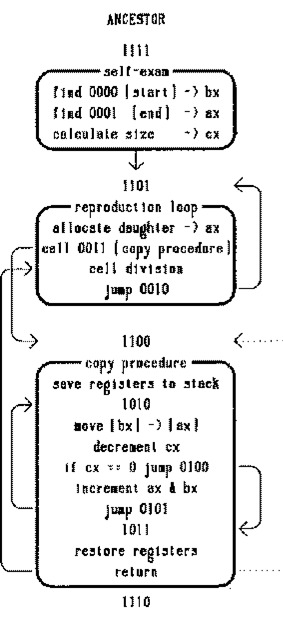

Computer Program Based 94

Arrow of Complexity 95

Information Entropy 95

Kolmogorov Complexity 95

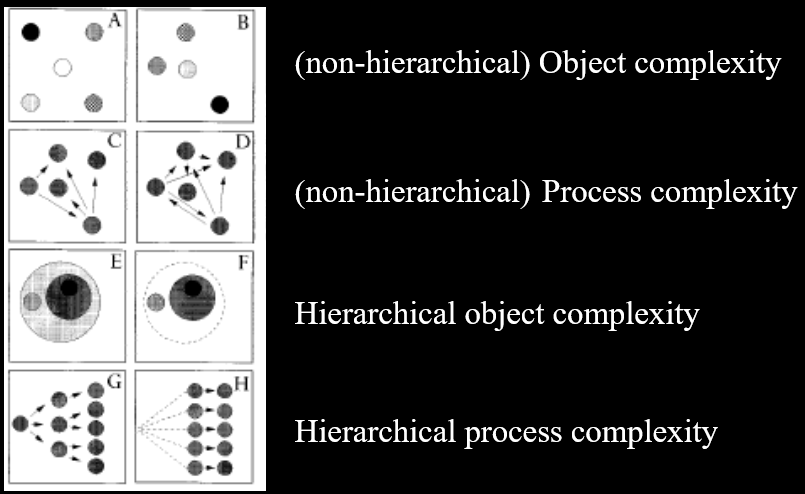

Structural Complexity 96

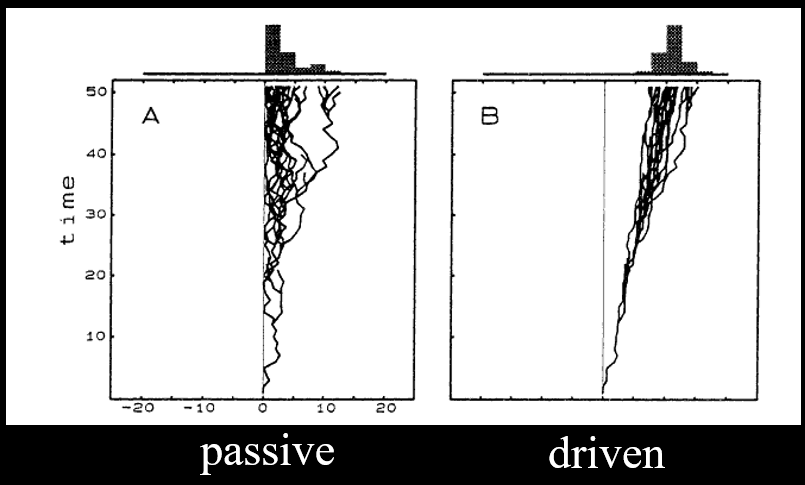

McShea’s Passive Diffusion Model 97

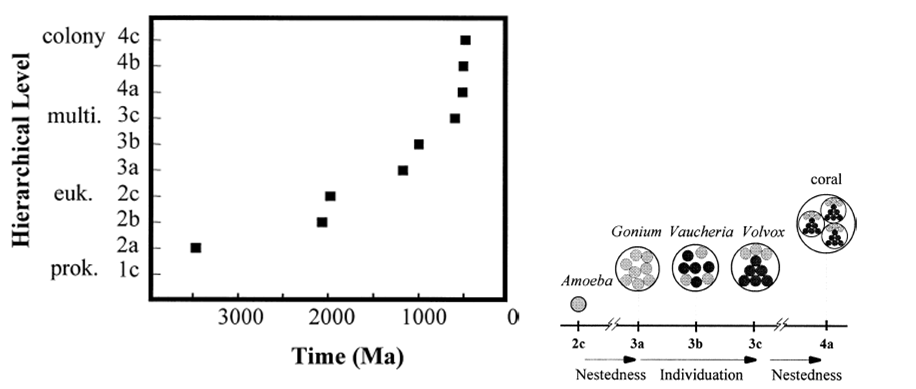

Hierarchical Structure 99

Natural Induction (not examinable) 101

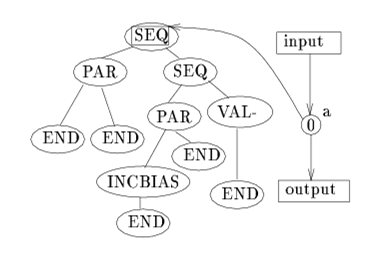

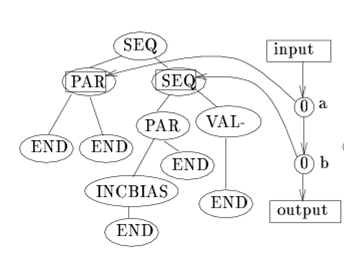

Compositional Evolution 108

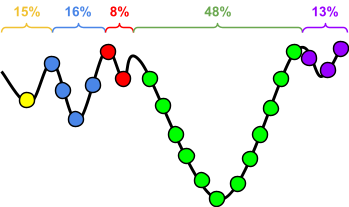

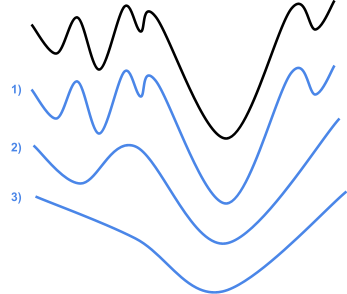

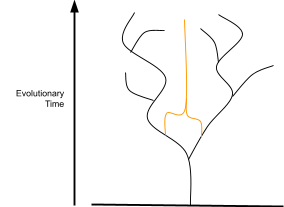

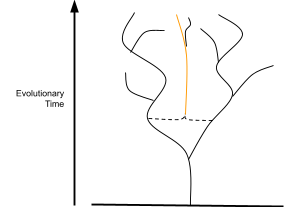

Gradual vs Compositional 110

Complex Systems 112

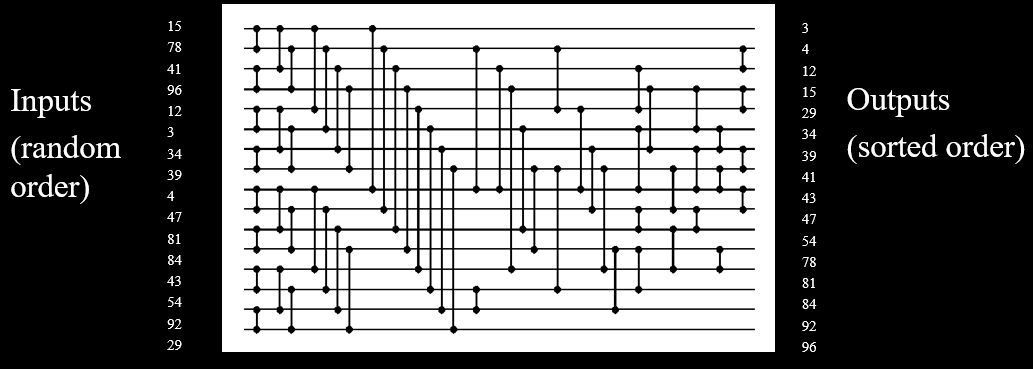

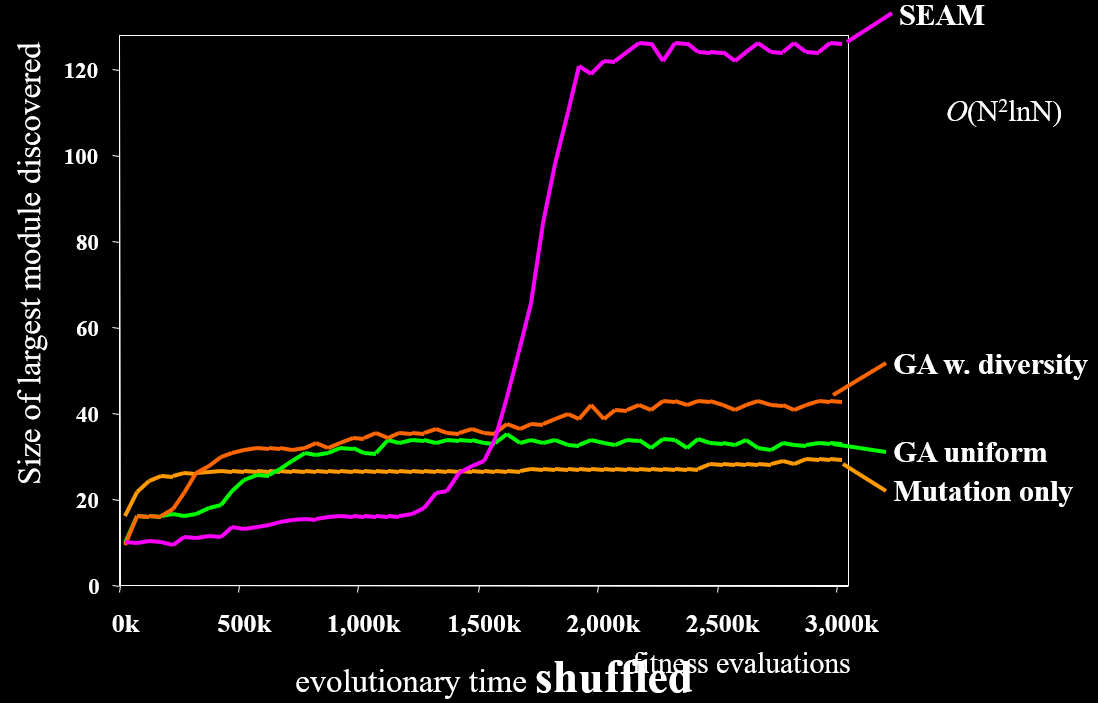

HIFF 113

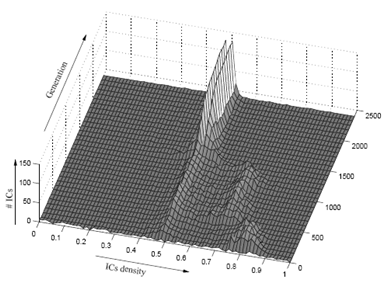

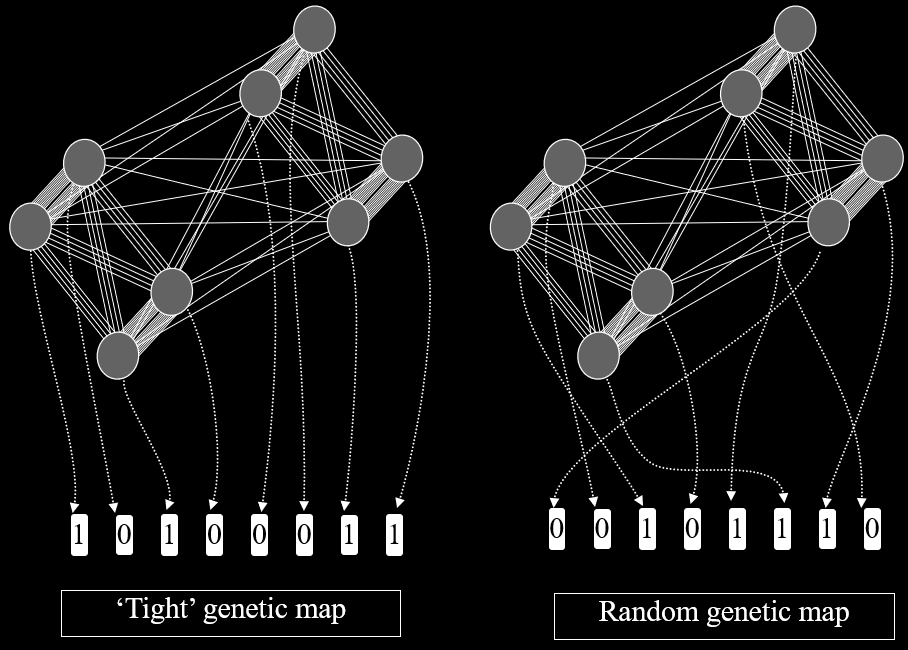

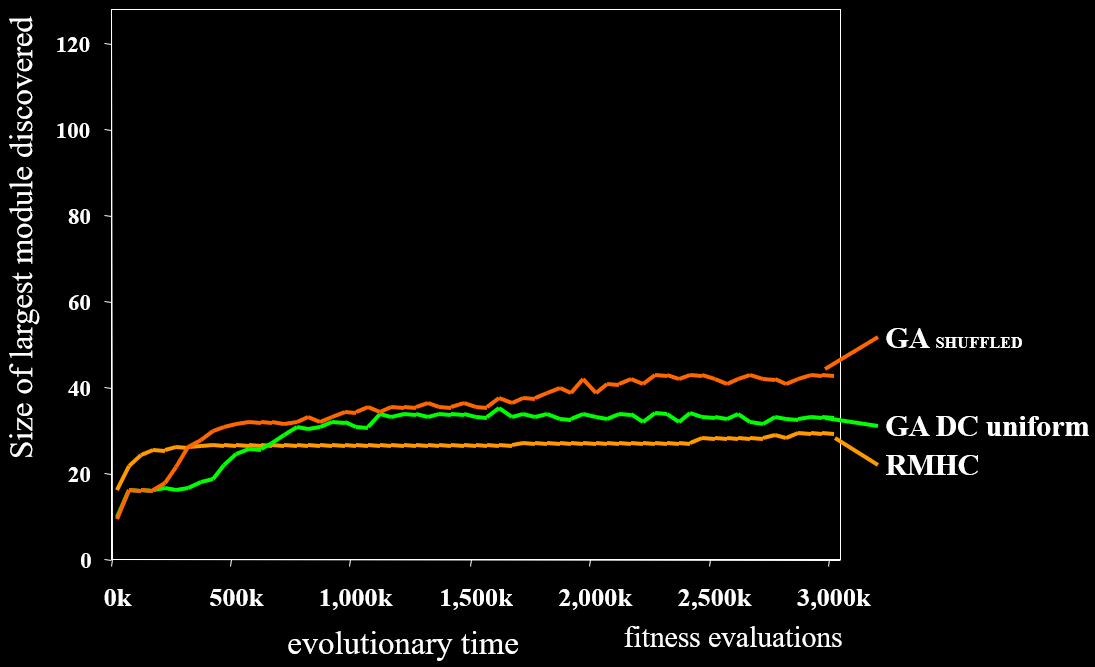

Random Genetic Map and SEAM 114

Recorded Lectures

-

At the time of writing this, it’s tricky to find the

recorded lectures for a given topic.

-

Therefore, I compiled this table.

|

Week

num (date)

|

Monday 9AM

|

Monday 11AM

|

Tuesday 1PM

|

|

1 (1/2)

|

Richard had really bad connection

problems.

|

Richard forgot to record.

|

Evolutionary Algorithms part 1

-

Assignment 1

-

Hillclimber

-

Four requirements for natural

selection

-

Evolutionary Algorithms (EAs)

-

Pseudocode of EA

-

Components of EA

-

Representation

-

Fitness functions

-

Selection (roulette wheel)

|

|

2 (8/2)

|

Evolutionary Algorithms part 2

-

Boltzmann

-

Stochastic Universal Sampling

-

Tournament

-

Truncation

-

Rank-based

-

Steady state vs generational

|

Evolutionary Algorithms part 3

-

EA schools

-

Parameters of EAs

-

Assignment 1

|

Evolution History part 1

-

Levels of life

-

Carolus Linnaeus’ “Systema

Naturae” 1735

-

Early Evolutionary Theory

-

Lamarck

-

Pre-Darwinian Views

-

Voyage of the Beagle

-

Natural Selection

-

Darwin’s “On the Origins of

Species”

-

Trouble with Inheritance

-

Post-Darwin

|

|

3 (15/2)

|

Evolution History part 2

-

Questions on evolution history

-

The Modern Synthesis

-

Neo-Darwinism

-

Fisher vs Wright

-

Contention over

‘speciation’

-

Punctuated Evolution

|

Evolution History part 3 and Fitness

Landscapes part 1

-

Contention over ‘level of

selection’

-

Growing knowledge

|

Fitness Landscapes part 2

-

Definition

-

Wright’s genotype sequence

space

-

Wright’s fitness landscape

-

Properties of landscapes

-

Epistasis

-

Synthetic landscapes (unitation)

|

|

4 (22/2)

|

Fitness Landscapes part 3

-

Epistasis networks

-

NK-landscapes

-

Royal Road / Staircase

-

Path lengths

-

Probing high-dimensional landscapes

-

Fitness distance scatter plots

|

Fitness Landscapes part 4

-

Biological examples

-

Visualisations

-

Protein folding

-

“And then it gets

complicated...”

-

Individuals vs Populations

-

Neighbourhoods and variations

-

Designing a landscape

|

Assignment 1 solution

-

Richard’s results of Assignment

1

-

GAs vs hillclimbers

|

|

5 (1/3)

|

|

Sex part 1

-

Two-fold cost of sex

-

Sexual reproduction

-

Sexual recombination

-

Crossover

-

Genetic linkage

-

Linkage (dis)equilibrium

|

Assignment 2

-

Assignment 2 explanation

-

Reading through papers

|

|

6 (8/3)

|

Sex part 2

-

Fisher/Muller hypothesis

-

Muller’s Ratchet

|

Sex part 3 and Crossover in EAs part 1

-

Doesn’t go over deterministic

mutation hypothesis

-

Sex from POV of an EC

-

Natural Selection and Variation study and

review

-

“Focussing search”

-

Hurdles problem

-

Schemata and building blocks

|

Crossover in EAs part 2

-

Schemata and building blocks

-

GA vs hillclimber on RRs

-

Two-module model

-

Richard’s weird PDF simulation

|

|

7 (15/3)

|

Crossover in EAs part 3 and Coevolution

part 1

-

Analysis with + without recombination

-

Analysis mutation-only vs crossover

-

Definition of coevolution

-

Normal vs reciprocal

-

Pairwise and diffuse coevolution

-

Sasaki & Godfray model

-

Coevolutionary algorithm basics

-

Why use them

|

Coevolution part 2

-

Why use them

-

Coevolution examples

-

Funny YouTube video of evolved 3D

creatures

-

Subjective vs objective fitness

-

Types of coevolutionary failure

-

Cool Java simulation animation

|

Cooperative Coevolution

-

Cooperative coevolution

-

Shared domain model

-

Divide and conquer

-

Talking about papers from Assignment

2

|

|

🐰 EASTER 🥚

|

-

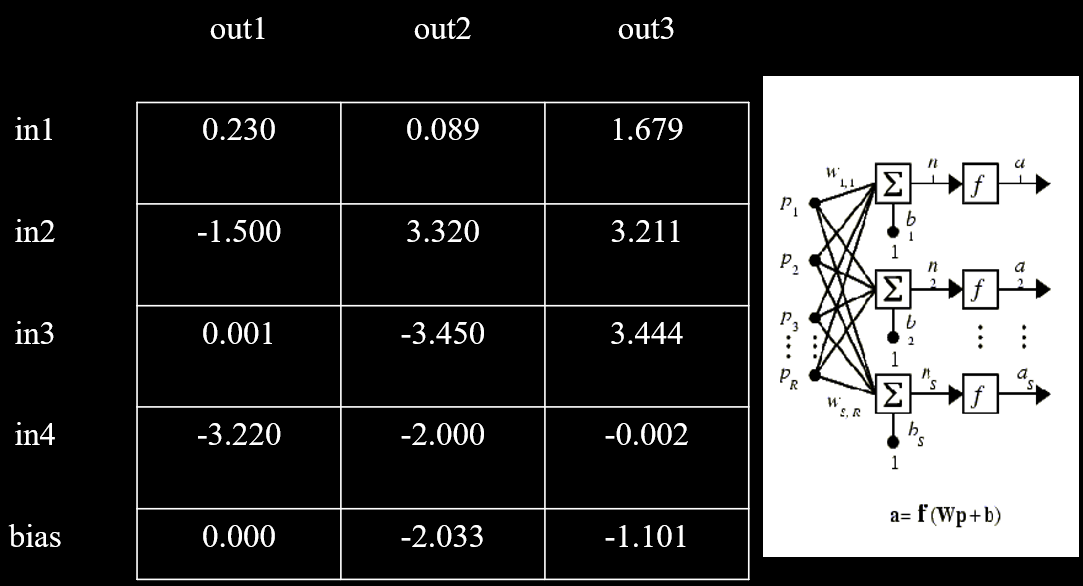

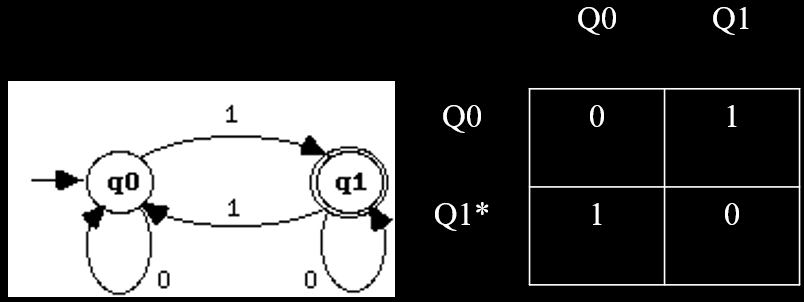

Types of modularity

-

Concepts of modularity

-

Simon’s

“nearly-decomposable” systems +

examples

-

Modularity in EC

-

Hierarchical IFF

-

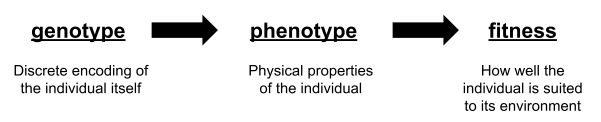

Genotype → phenotype →

fitness

-

Types of representation

-

Structured genotypes

-

Tree encodings

-

Grammar-based encodings

-

Turning strings into structure

-

Strong / Weak

-

Wet / Dry

-

Sims creatures, Framsticks

-

Darwin Pond

-

Cellular automata based

-

Computer program based

-

Information Entropy

-

Kolmogorov complexity

-

Structural complexity (object, process,

(non)hierarchical)

-

McShea’s passive diffusion

model

-

Hierarchical structure

-

Major transitions in evolution

-

(Not examinable; tbh I barely understand

this lecture)

-

Motivation

-

What is Natural Induction

-

Examples

-

Gradual vs Compositional

-

Complex systems

-

HIFF

-

Genetic maps

-

SEAM

|

|

|

|

|

Introduction

-

Hi there! 👋

-

Welcome to the Evolution of Complexity Notes.

-

In case you didn’t realise, I’m human!

-

I am the result of hundreds of millions of years of

evolution and natural selection.

-

If you’re not a screen-reader, then you are,

too.

-

Sure, you might look like your parents, but if we go really

far back, we’ll find some things that look nothing

like you.

-

Like really small protein compounds from the primordial

soup.

-

But enough about soup. Let’s compare you with a

calculator.

|

|

|

|

A calculator

(one that you’re allowed to use in

exams)

|

You, probably

|

-

Hmm... yes... that’s definitely a calculator, and

that’s definitely a person.

-

What’s the difference?

-

One is engineered; designed by us, and (if engineered properly) composed of modular components, with low coupling and

high cohesion.

-

The other is living; designed by nature, with loads of

tightly-packed organs that are very highly coupled.

-

What I’m trying to say here is that evolved things

are very complex.

-

We want to make our own things that are this complex.

-

Hence, we have evolutionary algorithms!

-

With it, we can make things like this neural net that plays snake.

-

Evolution by natural selection is the best explanation we

have for the complexity of living things.

-

But remember, that’s just a theory. A ga- uh, a scientific theory.

-

It’s not perfect, and it’s your duty as a

scientist to find holes in it....

-

... or, just do what the lecturers tell you and get decent

marks.

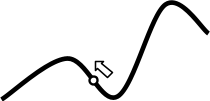

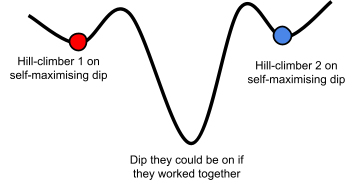

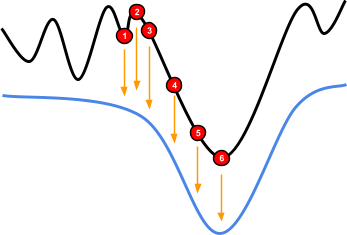

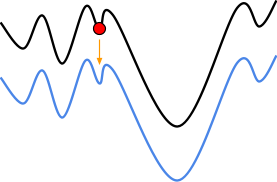

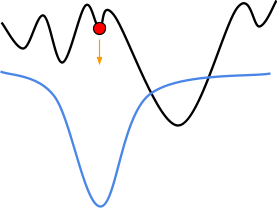

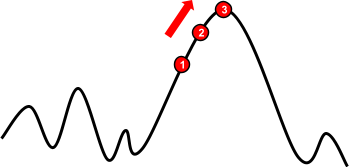

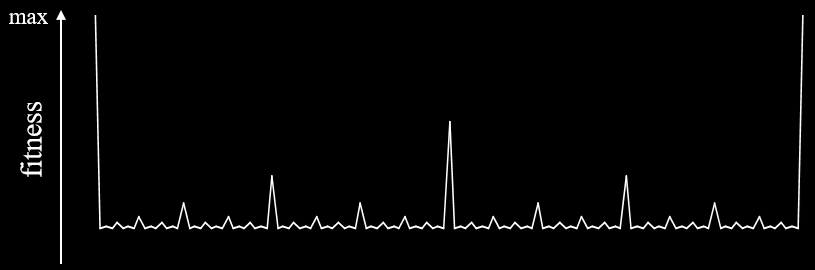

Evolutionary Algorithms

-

Do you remember stochastic local search?

-

If not, here’s a refresher on hill-climbing:

-

Start with a random solution

-

Make a small change to it

-

If that change was better, keep it. If not, discard.

-

Repeat until we reach a solution we like

-

It’s an algorithm used to search a solution space and

find a local maximum.

A computer scientist climbing a solution space

to its maximum using a stochastic local search algorithm.

-

This is kind of like evolution, right?

-

There’s generations, and mutations, and stuff like

that.

-

Evolutionary algorithms (EA) are like hill-climbers, but with a population.

-

Some individuals in the population might be luckier than

others.

-

We need to concentrate on those, so their children can

survive, and “discard” the others.

-

It sounds cruel, but that’s nature.

-

There are four requirements for evolution by natural selection:

- Reproduction

-

Individuals can replicate themselves.

-

Copy(A) → B

- Heritability

-

Offspring is similar to their parents.

-

B ≈ A

- Variation

-

There are differences, or “mutations”, in

children.

-

Vary(Copy(A)) → B1, B2, B3

-

B1 ≠ B2 ≠ B3

-

Change in fitness

-

The variations cause our fitness to change.

-

Copy(A) → B

-

Fitness(A) ≠ Fitness(B)

-

Here is the outline of an evolutionary algorithm (EA):

-

Initialise all individuals of a population

-

Until (satisfied or no further improvement)

-

Evaluate all individuals

For i=1 to P; Quality(Pop[i]) → Fitness[i]; End

-

Reproduce with variation

For i=1 to P;

Pick individual j with probability proportional to

fitness

Pop2[i] =

Pop[j];

Pop2[i] =

mutate(Pop2[i]);

End

Pop2 → Pop;

-

Output best individual

-

The necessary components of an EA are:

-

Representation of individuals

-

Fitness function

- Selection

-

Variation operators

-

Let’s go through each one.

Representation of Individuals

-

As a computer scientist, you know that computers store

information as data.

-

That means, to represent our individuals, we need to encode

them into data.

-

There are many ways to do this:

|

Problem

|

Individual encoding

|

|

A function that takes 20 binary

arguments.

Maximise the function.

|

A 20-bit binary number

e.g. 00101110101000010111

|

|

A graph theory problem, like TSP

|

A string of node names, making a path

e.g. BHGADCEF

|

|

Where to place x number of items

|

A set of (x,y) coordinates

e.g. (12,34), (56,78), (90,12)

|

-

As you can see, there’s loads of ways to encode

individuals as data.

-

If we can encode an individual into data, and we can copy data...

-

... that means we can copy an individual.

-

That covers our reproduction requirement of natural selection!

Fitness Function

-

In evolution, each individual has fitness.

-

That means we need a fitness function that takes an

individual and returns a fitness.

-

Our fitness function needs to directly correlate to our

“goal”, or what we want to achieve out of our

algorithm.

-

That way, when we maximise our fitness, we’re closer

to achieving our goal.

- For example:

|

🤔 Problem

|

🏁 Goal

|

💪 Fitness function

|

|

A function that takes 20 binary

arguments.

Maximise the function.

|

We want to find a set of arguments that

maximises our function.

|

The value outputted by the function.

|

|

Travelling Salesperson Problem (TSP)

|

Travel all nodes in the shortest length

|

Length of the tour

(we would have to minimise our fitness function

in this case, e.g. make it a cost

function)

|

|

Position of drilling sites on an oil

field

|

We want to extract as much oil as we can.

|

Oil yield from a simulation of the oil

field.

|

An individual with a high fitness.

Selection

-

How do we select what individuals to, uh...

“mate” and pass on to the next generation?

-

There’s a variety of ways to pick.

-

One of these ways is using fitness proportionate selection (FPS).

-

The slides compare it to a roulette wheel, but it’s

basically individuals with a higher fitness have a higher

chance of being selected.

-

For example, if we had a population of 2, and #1 had a

fitness of 6, and #2 had a fitness of 4, then we’d

have a 60% chance of selecting #1 and a 40% chance of

selecting #2.

-

Another way is Boltzmann Selection: a more refined version of the fitness proportionate

selection above.

-

The formula goes like this, where

is the probability of picking individual

is the probability of picking individual  :

:

,

,

-

- the fitness function of individual

- the fitness function of individual

-

- standard deviation in distribution of fitness

- standard deviation in distribution of fitness

-

controls selection

controls selection

-

means weak (neutral) selection; all individuals have an equal

chance

means weak (neutral) selection; all individuals have an equal

chance

-

means strong (choose the fittest) selection; only the highest

fitness will be picked

means strong (choose the fittest) selection; only the highest

fitness will be picked

-

Yet another way is Stochastic Universal Sampling (SUS).

-

Where FPS chooses several solutions from the population by

repeated random sampling, SUS uses a single random value to

sample all of the solutions by choosing them at evenly spaced intervals (source).

-

Comparing it to the roulette wheel, FPS spins the wheel

multiple times to get samples.

-

However, SUS has multiple, evenly-spaced pointers on the

wheel, and the wheel is spun once.

-

What’s good about this is that weaker individuals

have a chance to reproduce too, and the fittest members

won’t saturate the candidate space.

-

In other words, there’s less variation in the number

of offspring any one individual has.

-

Huh? You want even more selection operators?

-

Fine. Here’s three more:

-

Pick

individuals, and choose the individual with the

highest fitness

individuals, and choose the individual with the

highest fitness

-

Pick any individual in the top half of the population

-

Assign a rank to each individual based on fitness (e.g. worst fitness has rank 1)

-

Pick an individual with probability proportional to rank (or 1/rank, if that’s what you need).

-

How is this different from FPS?

-

In rank-based selection, only the ordinal assignment of fitness matters, not cardinal (it only matters that fitness is greater; not how much

by).

-

For example, say i1 has a fitness of 1, and i2 has a fitness of 2.

-

i1 would have rank 1, and i2 would have rank 2.

-

FPS and rank-based selection wouldn’t be any

different.

-

Now, what would happen if i2 had a fitness of 2,000?

-

FPS would pick i2 waaaay more times over i1!

-

However, their ranks are still equal, so the probabilities

of rank-based selection wouldn’t change.

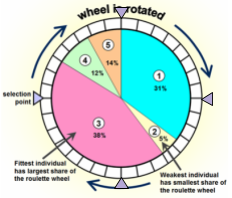

Variation Operators

-

There are two main variation operators. One of which is mutation.

-

With mutation, we slightly change the properties of an

individual.

-

How much we change by is completely random. Some might

change a lot, and some might not change at all.

-

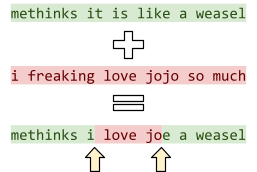

For example, we could mutate the sentence “methinks

it is like a weasel” by going through each letter and

changing it to a different letter under a probability of

1/28 (28 being the number of letters in the sentence).

-

Therefore, on average, we only change 1 letter per

mutation, but it’s still possible for some sentences

to change a lot and some to not change at all.

-

How volatile our mutations can be (mutation rate) is a set variable for our evolutionary algorithm that

we can change. We can tweak it to maximise the performance

of our algorithm.

Polydactyly; a rare mutation in humans

that gives us more than 5 fingers / toes.

-

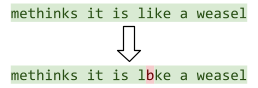

The other kind of variation is crossover, or recombination.

-

This refers to using information from two or more

individuals to make a brand new individual.

-

There’s three versions of this:

-

Randomly select one point on the strain.

-

Everything above the point is from parent 1, and everything

below the point is from parent 2.

-

Randomly select two points on the strain.

-

Everything to the left of the first point and to the right

of the second point is from parent 1, and everything between

the first and second points are from parent 2.

-

Randomly select genes from parent 1 and 2 with even

probabilities for each.

-

Here is the outline of our evolutionary algorithm 2.0, with crossover:

-

Initialise all individuals of a population

-

Until (satisfied or no further improvement)

-

Evaluate all individuals

For i=1 to P; Quality(Pop[i]) → Fitness[i]; End

-

Reproduce with variation

For i=1 to P;

Pick individual j with probability proportional to

quality

Pick individual k with probability proportional to

quality

Pop2[i] =

crossover(Pop[j],Pop[k]);

Pop2[i] =

mutate(Pop2[i]);

End

Pop2 → Pop;

-

Output best individual

Variations on a theme

-

There are two main kinds of genetic algorithm:

-

Offspring replaces one person of the population each time

they are generated

-

Pick parents for reproduction

-

Generate offspring with variation

-

Put offspring back into population

-

For each generation, P offspring are generated for the next

population

-

Pick parents for reproduction

-

Generate offspring with variation

-

Put offspring into NEW population

-

Current population = new population

-

For some weird reason, academics like to argue about

evolutionary algorithms.

-

So much so that they split into factions, or

“evolutionary algorithm schools”.

-

Here’s a range of evolutionary algorithm

schools:

-

Binary representation

-

Crossover is important

-

ES: evolutionary strategies

-

Real-valued representations

-

No crossover

-

Evolved mutation rate

-

For evolving programs

-

Specifically, LISP s-expressions

-

EP: evolutionary programming

-

Finite state automata

-

Mutation only

-

According to Richard, the schools GA, ES, and EP came

together to form their own conference, but the one in charge

of GP said no and continued doing their own thing.

-

Setting the parameters of an evolutionary algorithm is

something of a black art.

-

There’s:

-

If it’s too big, you waste computation

-

If it’s too small, there’s no useful

diversity

-

Mutation rate / crossover rate

-

If it’s too low, there’s not enough

innovation

-

If it’s too high, there’s too much

destruction

-

Choosing a good fitness function

-

Selection pressure

-

Fitness scaling via: Boltzmann selection, rank-based,

tournament size, truncation fraction

-

Diversity maintenance methods

Evolution History

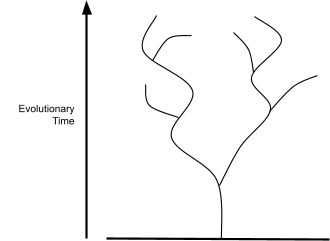

-

Nowadays, evolution and natural selection are pretty

well-known and generally accepted.

-

But how did we get here? This section covers the history of

evolution up to this point.

Pre-Darwinian Evolution

1735

-

Carolus Linnaeus published Systema Naturae.

-

It was a book that described all living things that Carolus

had studied, and put them into categories, like animals and

plants.

1758

-

Carolus Linnaeus published the 10th edition of Systema Naturae.

-

It set up the formal classifications that are used (with few changes) today.

-

With that, systematic biology took off (finding living things and figuring out what kind of a

thing it is in Linnaeus’ book).

1785

-

James Hutton published Theory of the Earth, or an Investigation of the Laws

Observable in the Composition, Dissolution and Restoration

of Land upon the Globe.

Around 1800

-

Jean-Baptiste Lamarck put forward the theory of inheritance of acquired characteristics, or “Lamarckism”.

-

He did not see all life sharing a common ancestral

tree.

-

An early proponent of evolution was Charles Darwin’s

grandfather, Erasmus Darwin.

-

The main two problems back then was:

- Religion

-

How can evolution create such complex organisms?

-

“Natural theology”, advocated by the likes of

William Paley, used complexity of life as evidence for

God.

1830

-

Charles Lyell published Principles of Geology.

-

The Biblical view of creation is starting to turn

over.

-

The world is far older than traditionally believed.

Natural Selection

1831

-

Darwin sailed out on The Beagle (a boat).

-

He publishes The Voyage of the Beagle.

-

It raised questions which lead to the theory of natural

selection.

-

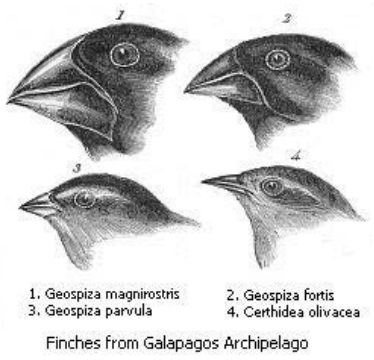

When he came back, he had examined a collection of

finches.

-

He thought they were all one species, but they proved to be

many species.

-

The simplest explanation was that a single migrant had adapted to the habitat on different islands.

-

He came up with a mechanistic explanation: natural selection:

“Natural variations in populations that provide

small

selective advantages will take over the

population.”

-

It was strongly influenced by Thomas Malthus’ Essay on the Principle of Population (1798).

-

Darwin then spent the next 20 years collecting

evidence.

-

During this, he published definitive work on cirripedes (marine invertebrates including barnacles).

-

Another naturalist, Alfred Wallace, who also thought

Malthis was cool, arrived at the same theory of natural

selection.

-

He was also inspired by Darwin’s Voyages of the Beagle. He had explored South America and Malaya.

-

After the last trip, he sent his ideas to his mentor,

Charles Darwin.

-

Wallace agreed to publish jointly with Darwin.

1832

-

Darwin wrote On the Origins of Species.

-

Everyone accepted the theory of evolution because:

-

Darwin was popular

-

He had 20 years worth of evidence

-

He had a lot of arguments, including:

-

power of artificial selection by human breeders

-

population of isolated islands by chance migrations

-

relatedness of species in a tree like structure

-

He also addressed problems with evolution:

-

missing fossil record

-

difficulty of evolving complex structures (e.g. the eye)

-

People believed evolution, but not natural selection.

-

They didn’t think it could make complex

structures.

-

Darwin wrote six editions of Origins of Species, the final editions accepting possibilities of mechanisms

besides natural selection.

-

Darwin could never explain inheritance.

-

His problem was:

-

Under crossover, any variation should be averaged

out.

-

How can there be enough variation for natural selection to

work?

-

Gregor Mendel had the answer with his Experiments in Plant Hybridization (1865) (the theory of discrete genetics), but it was neglected until 1900.

|

|

Darwin’s Problem

|

|

|

|

Let’s say we have a population of tall

and short people.

When a tall person and a short person have a

kid, under reproductive crossover, that kid will

be medium height.

After a while, the population of tall and short

people will average out to medium people.

With that, how will there be enough variation

in the population to achieve natural

selection?

(Of course, this isn’t how genetics

works, but they didn’t know that.)

|

|

-

Everyone was stuck arguing about natural selection for the

next 70-100 years.

1882

-

After Darwin died, advocates for natural selection shut up

for a bit.

-

Lamarck’s theory of “acquired

characteristics” was revived.

-

They came up with another theory:

-

Large mutations called “saltations” produce

dramatic changes to organism structure, which explains

speciation.

-

This contrasted the gradual, small changes of conventional

Darwinism.

-

When Mendelian genetics was rediscovered in 1900, it was

seen as a blow to natural selection.

-

Basically, natural selection was on the ropes.

-

It took three young Turks to turn things around...

The three young Turks who turned things around.

-

Ronald A Fisher

-

Sewell Wright

-

John B S Haldane

-

They read the Origin of Species and ignored the establishment thinking.

-

They said “wait... what if:

-

Natural selection occurs

-

and genetic inheritance occurs”

-

And thus, the modern synthesis was born.

The Modern Synthesis

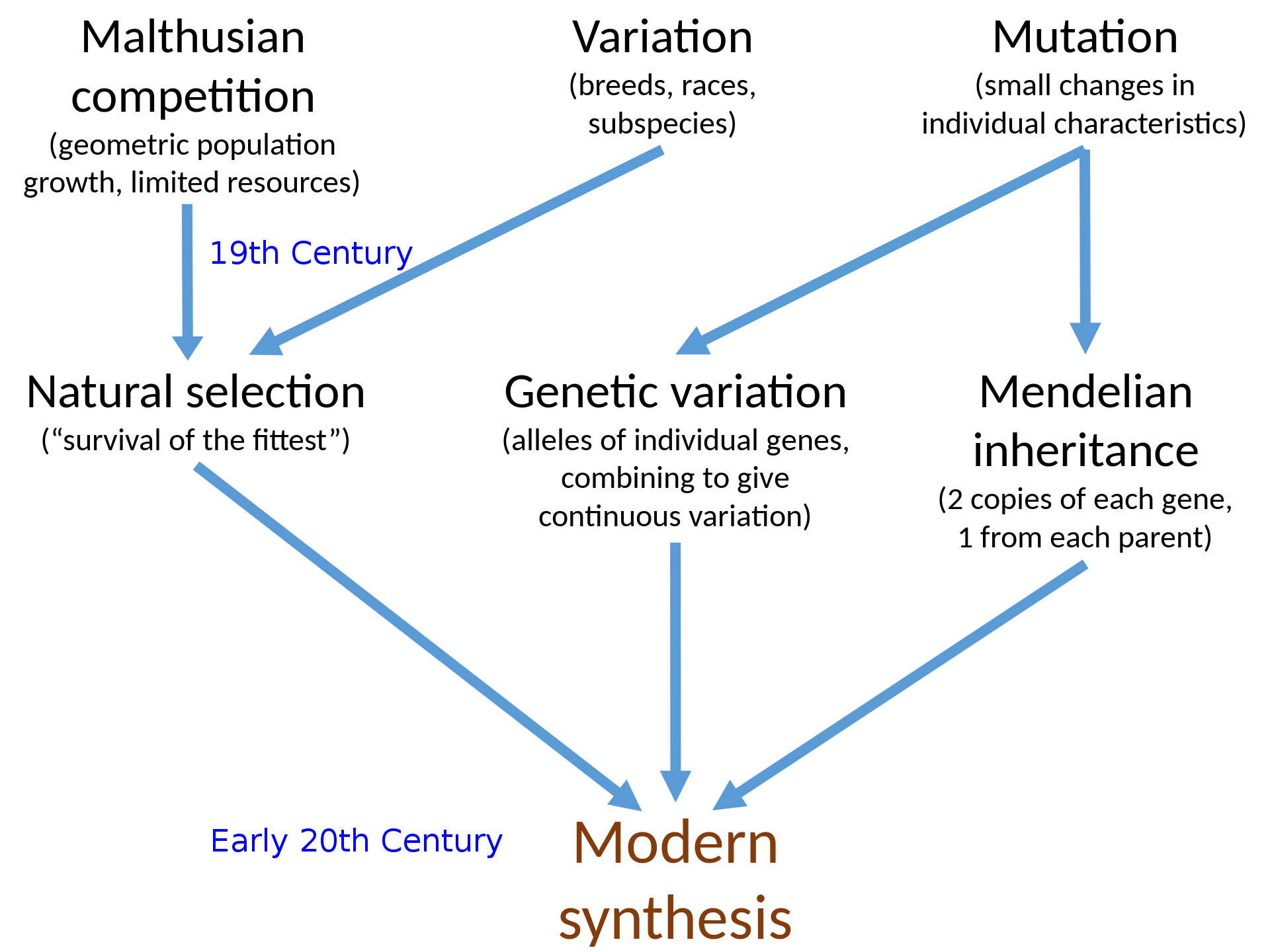

A cool picture from Wikipedia illustrating modern

synthesis

1930

-

Fisher wrote the book The Genetical Theory of Natural Selection.

-

It didn’t catch on until around 17 years later.

-

Haldane helped push these ideas into mainstream

biology.

1960

-

Natural selection and the modern synthesis, often called neo-Darwinism, triumphed.

-

But people still argued.

-

Fisher and Wright argued.

-

Wright said inbreeding is important, and small populations

were necessary to explore complex fitness landscapes.

-

People also argued about what causes speciation.

-

Saltationists put this down to large mutations.

-

Geographical isolation was considered one of the major

causes of speciation.

-

Ernst Mayr says that speciation by isolation is one of the

driving forces of invention by evolution (and says that small populations in peripheral locations

are more likely to be sources of innovation).

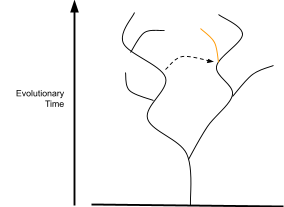

1972

-

Eldedge and Gould proposed the theory of punctuated evolution.

-

This is evolution that consists of periods of rapid

evolution in speciation events, followed by long periods of

stasis.

-

It explains rapid changes found in the fossil record.

-

People also argue about what level selection operates

at.

-

Richard Dawkin and his fanbase say it’s only at the

gene level.

-

But it may be more than that... who knows?

Fitness Landscapes

Definitions

-

Genome / Chromosome: A vector / string of genes

-

e.g. a bit string

-

“001111”

-

Gene: one variable in a genome

-

Allele: The value of a gene

-

‘tall’, ‘blue’, 0, 1, A, a

-

Locus: The position of a gene in a genome

-

The locus of ‘e’ is further from

‘a’ in “abcde”

-

Genotype: A type of genome

-

The difference between a genome and a genotype is that

genomes refer to each individual string of genes, and

genotypes refer to what the genome represents (the

semantic).

-

For example, in the population:

-

0001111

-

0001111

- 1001000

-

1100011

-

There are 4 genomes, but 3 genotypes.

-

A fitness landscape consists of:

-

A set of genotypes

-

A neighbourhood

-

We arrange genotypes in a space such that:

-

Similar genotypes are close

-

Different genotypes are far

-

e.g. neighbouring genotypes differ by a single allele

-

Height of surface in this space = Fitness of each

genotype

-

By rendering a fitness landscape in a graph, we can

visualise how changes in the genome affect fitness.

-

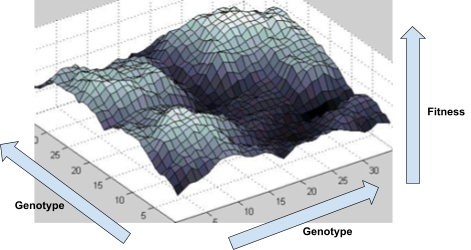

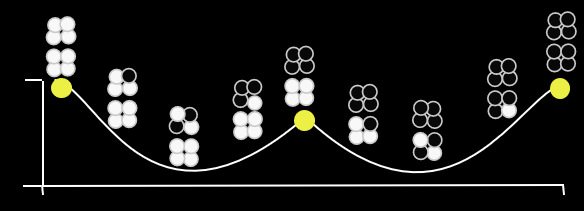

Here’s an example of a fitness landscape:

-

As you can see, some genotypes do not yield good fitness,

and some yield really good fitness.

-

There are also hills that a population can climb to achieve

better fitness.

-

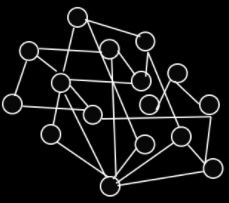

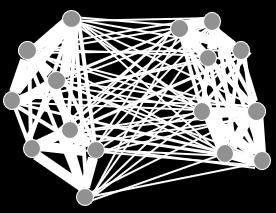

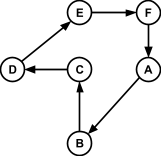

Wright’s genotype sequence space is a representational DAG (directed acyclic graph) of all the different genotype sequences, and how they

relate to each other.

-

Here’s a few examples:

-

These graphs go from left to right, and map out every

possible derivable genome.

-

Each node represents a possible derived genome.

-

Each edge adds one new gene at a time.

-

The distance from each node represents the number of

genetic changes to reach from the source genome to the

destination genome.

-

The number of genes represent the number of dimensions a

genotype sequence space has.

-

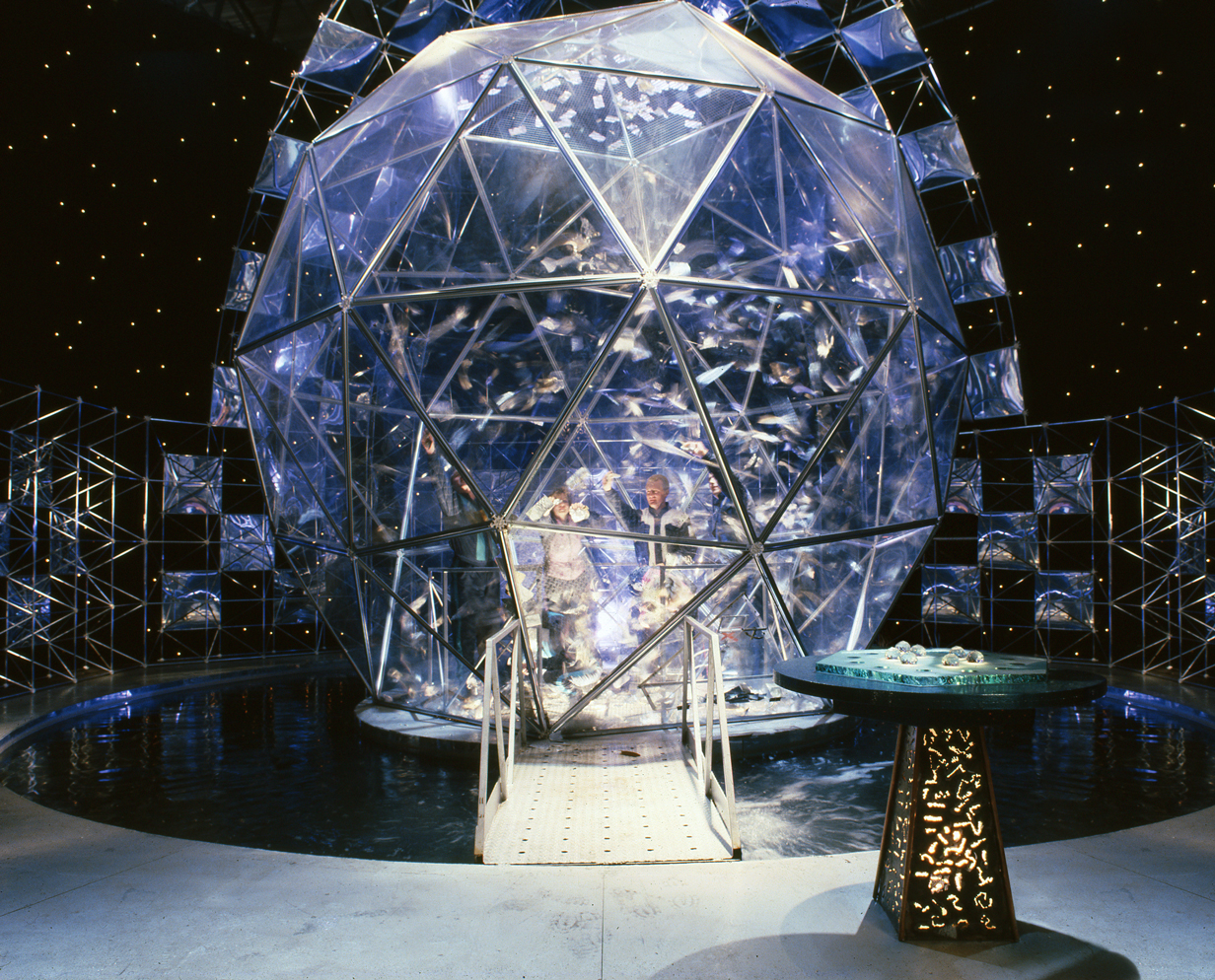

Alright, these Crystal Maze looking things are cool, but

with lots of genes, there’s going to be lots of

dimensions.

-

It’ll become really hard to visualise with lots of

dimensions.

An IRL genotype sequence space, with people inside it.

-

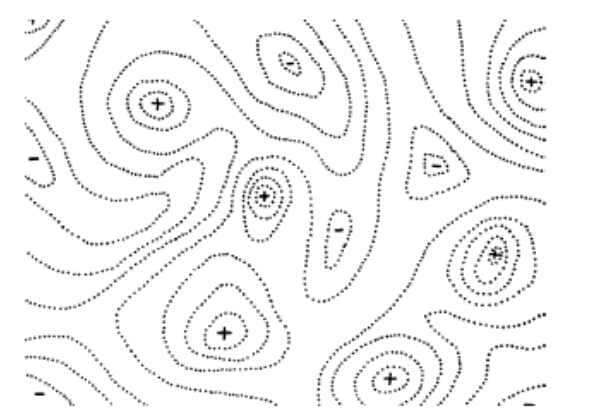

Wright’s fitness landscape is like a normal fitness landscape, but represented

with contours instead of a fancy 3D graph:

-

The contours represent fitness elevation in a

two-dimensional fitness landscape.

-

The +’s represent peaks in fitness, and the -’s

represent valleys in fitness.

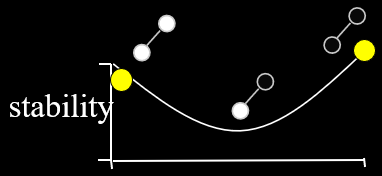

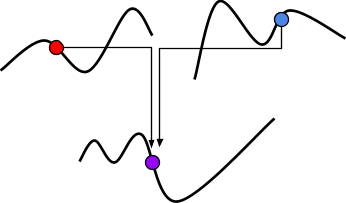

Intuition

-

We said before that evolutionary algorithms are like

hillclimbers, but with a population.

-

But how does that actually change anything?

-

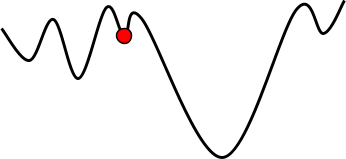

Let’s have an example:

-

With hillclimbing, we have a single individual and we

“climb up the hill” we are currently sitting on,

in the fitness landscape.

-

But look! Just to the right of us, there’s an even

higher hill.

-

Because hillclimbing only cares about the hill we’re

on, we’ll miss the chance to get an even better

maximum.

-

Because evolutionary algorithms have a population, and in

that population we have diversity, we can go up different

hills.

-

The higher hill has greater fitness than the lower hill, so

the population going up the higher hill will eventually be

favoured.

-

That doesn’t mean we’ll always get the best

maximum though: at the start, the subset that is going up

the taller hill could die out faster than the subset going

up the smaller hill, giving us the smaller maximum

again.

Properties

-

Fitness landscapes have several properties:

-

How similar neighbouring points are in terms of

fitness

-

How “rough” or “spiky” the fitness

landscape surface looks

-

Local optima, global optima

-

Global optima is the very highest fitness peak in the

landscape.

-

Local optima are peaks, but not the highest peaks.

-

How likely is it I arrive at a particular optimum?

-

In other words, how likely, if I started from somewhere on

the space, would I end up at this optimum?

-

If the spike has a greater base width, then it’s more

likely that a random space would land on that spike.

-

So it also refers to the base width of the spike at the

bottom.

-

Neutrality and redundant mappings

-

Is the gradient zero?

-

In other words, is the fitness landscape elevation

completely flat?

-

If so, then all the surrounding genotypes map to the same

fitness value.

-

This is also called a neutral plateau.

-

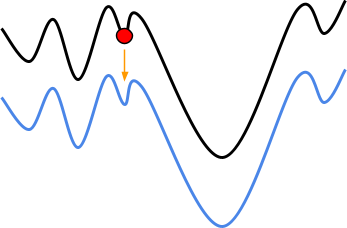

Paths of (monotonically) increasing fitness

-

Do I need to go down in fitness and cross a ‘fitness

valley’?

-

One of the biggest problems of all stochastic local search

methods is going from one peak to another (typically a higher peak).

-

In this two-peak fitness landscape, if we’re stuck at

the local optimum, how can we reach the global

optimum?

-

This is a problem with natural evolution in biology,

too.

Epistasis

-

Gross... that’s not what I meant!

-

Let’s look at the next definition:

-

Uh... how about I just tell you instead?

-

Epistasis: the fitness effect of an allele depending on the genetic

context of that allele.

-

It creates ruggedness in a fitness landscape.

- Huh?

-

In other words, the change in fitness due to substituting

one allele for another at one locus, is sensitive to the

alleles that are present at other loci.

-

You can think of epistasis as the property of genes having

“dependencies” on each other.

- What?

-

Let me just show you an example instead...

Examples: Synthetic Landscapes

-

There are two kinds of landscapes:

-

Artificial landscapes we design ourselves, for

demonstration and testing purposes

-

Fitness landscapes based on real-world data

-

First, we’re going to look at some synthetic

landscapes.

-

There’s many different kinds...

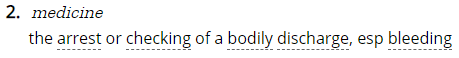

Functions of unitation

-

The name means ‘from many dimensions to

one’.

-

One of these functions include counting the number of

1’s in a bitstring:

-

This is very similar to our “methinks it is like a

weasel” coursework.

-

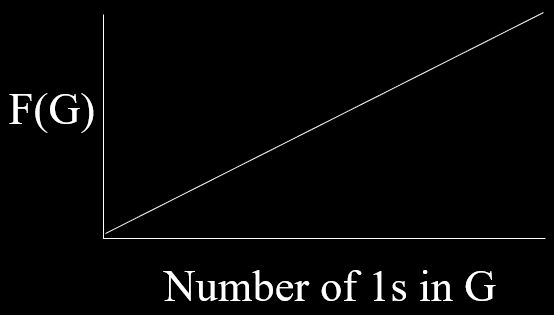

You can also tweak this slightly to make a “trap

function”:

-

By having this fitness valley, it’s a great landscape

to test EAs on.

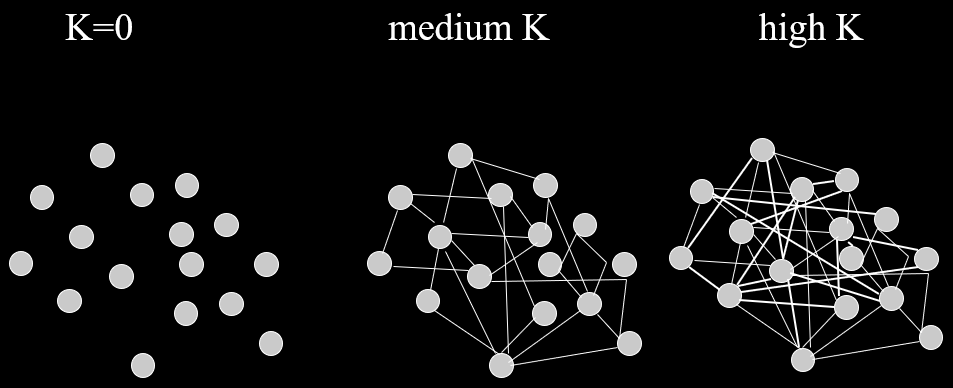

NK landscapes

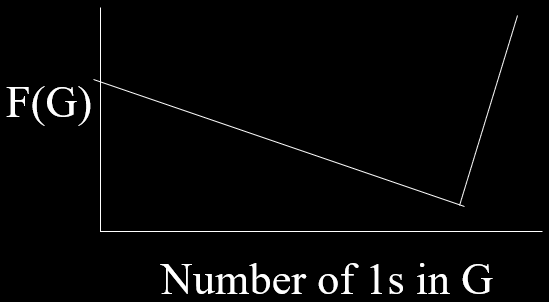

-

Before we go over NK landscapes, we need to cover epistasis

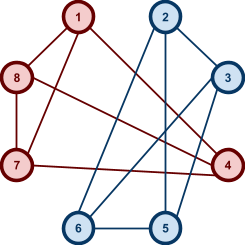

networks.

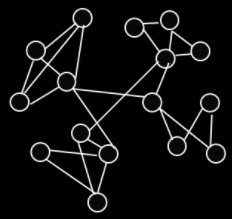

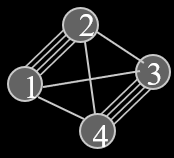

-

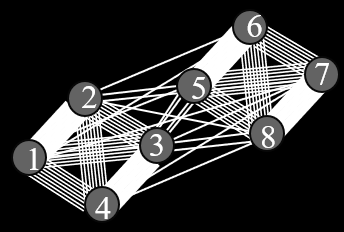

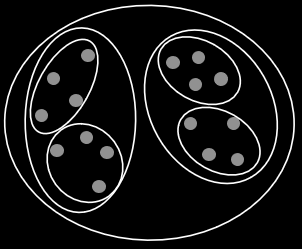

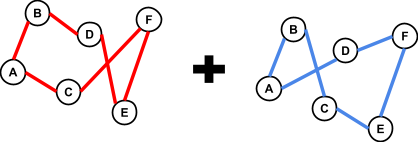

An epistasis network is a graph that represents the epistasis in a

genome.

-

The nodes represent the problem variables (or genes).

-

The edges represent dependencies / epistasis between

variables.

-

An NK landscape is a fitness landscape with epistasis, with

two variables controlling the level of epistasis:

-

- number of variables

- number of variables

-

- number of dependencies between variables

- number of dependencies between variables

-

In other words, each variable has a fitness contribution

that is a function of its own state and

others.

others.

|

Example: NK landscapes are Kim Jong-un’s favourite

fitness landscape

|

-

We have this genome, and it’s got

epistasis, where:

|

1

|

2

|

3

|

4

|

5

|

6

|

7

|

8

|

|

0

|

1

|

1

|

1

|

0

|

0

|

1

|

1

|

-

So that means each gene also depends on

three other genes for their fitness.

-

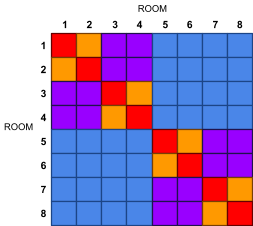

The dependencies between genes are

represented by colour.

-

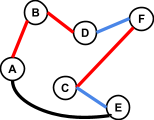

As a graph, that looks like this:

|

-

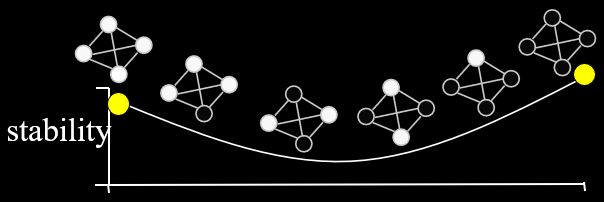

When

, there are no dependencies.

, there are no dependencies.

-

The landscape looks like one big mountain, like Mt.

Fuji.

-

As

increases, the landscape gets more rugged; more peaks

in a smaller space.

increases, the landscape gets more rugged; more peaks

in a smaller space.

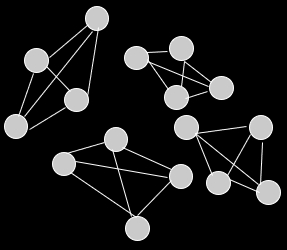

Royal Roads and Staircases

-

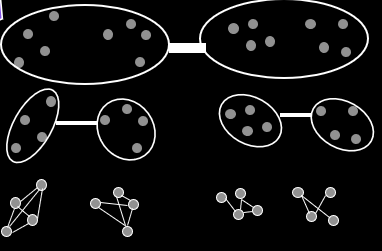

Royal Roads split the variables into sub-systems / groups /

blocks, so the fitness only goes up if a whole sub-system is

completed.

-

You can think of royal roads as special kinds of NK

landscapes, where the dependencies are in adjacent

groups.

-

Here’s an example with a couple of genomes with the

counting 1’s:

|

Bit string

|

Fitness

|

|

1111 0000 0000 0000

|

8

|

|

0000 1111 0000 0000

|

8

|

|

0000 0000 1111 0000

|

8

|

|

0000 0000 0000 1111

|

8

|

|

1111 1111 0000 0000

|

16

|

|

0000 0000 1111 1111

|

16

|

|

1111 0000 1111 1111

|

24

|

|

1111 1111 1111 1111

|

32

|

-

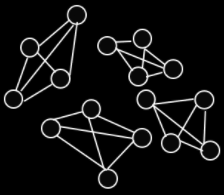

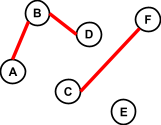

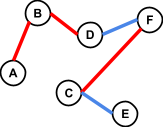

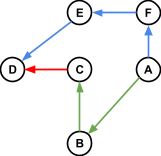

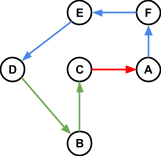

The epistasis networks of royal roads have clear

separations between the groups:

-

With royal roads, you can solve any of the groups in any

order.

-

But with royal staircases, you must solve them in a certain

order.

-

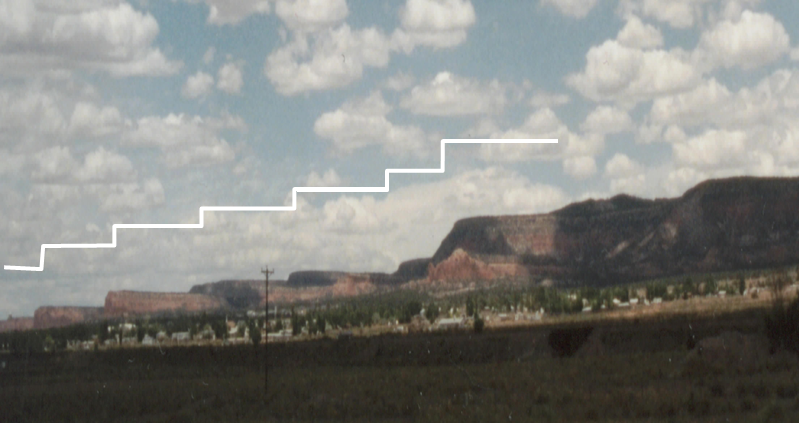

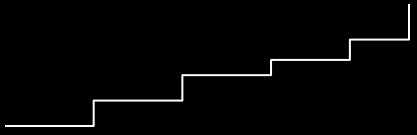

A royal staircase is a special kind of royal road where a completed

block only contributes fitness if the blocks before it are

all completed also.

-

Here’s another example with counting 1’s:

|

Bit string

|

Fitness

|

|

1111 0000 0000 0000

|

8

|

|

1111 1111 0000 0000

|

16

|

|

1111 1111 1111 0000

|

24

|

|

1111 1111 1111 1111

|

32

|

|

0000 1111 0000 0000

|

0

|

|

0000 0000 1111 0000

|

0

|

|

1111 0000 1111 1111

|

8

|

|

1111 1111 0000 1111

|

16

|

-

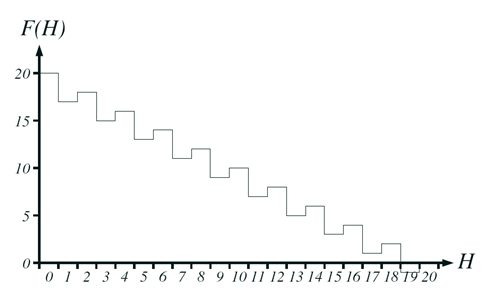

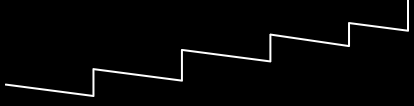

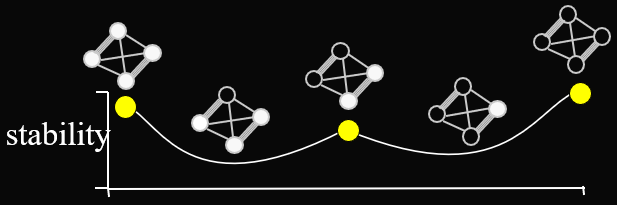

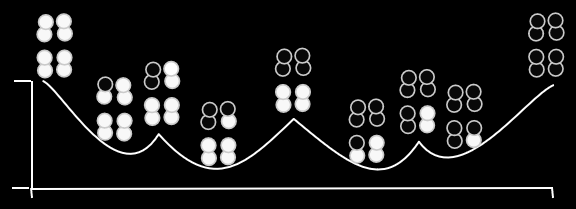

Why are they called royal staircases?

-

It’s because their fitness landscapes look like

stairs.

-

Each fitness “step” is a neutral plateau.

-

Going from one neutral plateau to the next is called

“portal genotypes”.

Long paths

-

An adaptive walk is the path a population takes from a point on the

fitness landscape up to a peak, where fitness is highest for

that hill.

-

What’s the longest an adaptive walk could be?

-

For a problem like counting 1’s, it would be

, because you’re linearly setting each gene to

1.

, because you’re linearly setting each gene to

1.

-

Is there a problem where the length of an adaptive walk is

greater than

?

?

-

Given only one gene changes per generation, we can

construct a fitness landscape whose adaptive walk length is

greater than

.

.

-

Here’s a small example where

:

:

|

Bit string

|

Fitness

|

|

00000

|

1

|

|

10000

|

2

|

|

11000

|

3

|

|

11100

|

4

|

|

11110

|

5

|

|

11111

|

6

|

|

01111

|

7

|

|

00111

|

8

|

|

00011

|

9

|

|

10011

|

10

|

|

...

|

-

These are called “Long Path Problems”.

-

Usually, the path needed to get to the optimum is

exponentially long.

-

This is because lots of genes have to be changed, and then

reverted, and then changed again etc. to get to the

optimum.

-

In some situations, landscapes are just too

high-dimensional for us to visualise.

-

How do we “probe” some useful information in

these situations?

-

There’s two methods:

-

Adaptive walks from a peak

-

By measuring how long it takes to walk to the peak of a

hill, we can figure some things out.

-

For example, if the adaptive walk length is really small,

is likely to be really high.

is likely to be really high.

-

Or, if it’s greater than

, it’s likely to be a long path problem.

, it’s likely to be a long path problem.

-

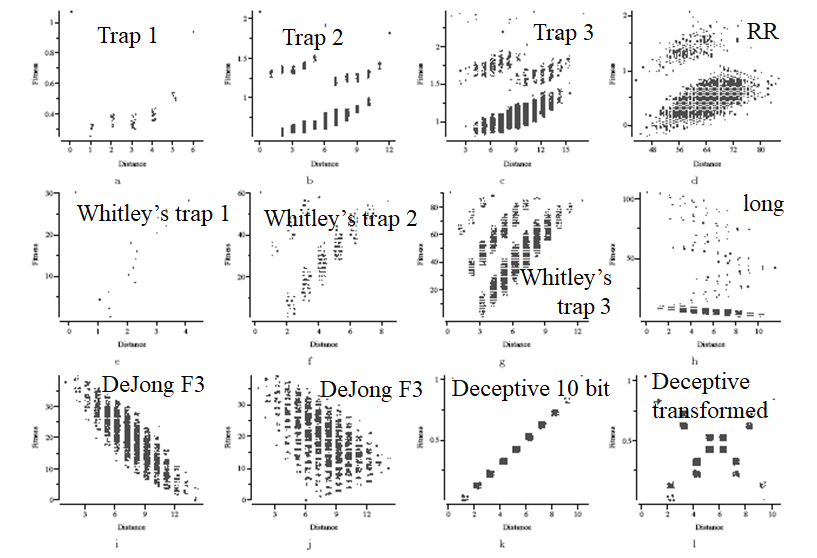

Fitness distance correlation

-

Plotting fitness against Hamming distance in scatter plots

can help visualise the shape of the fitness landscape:

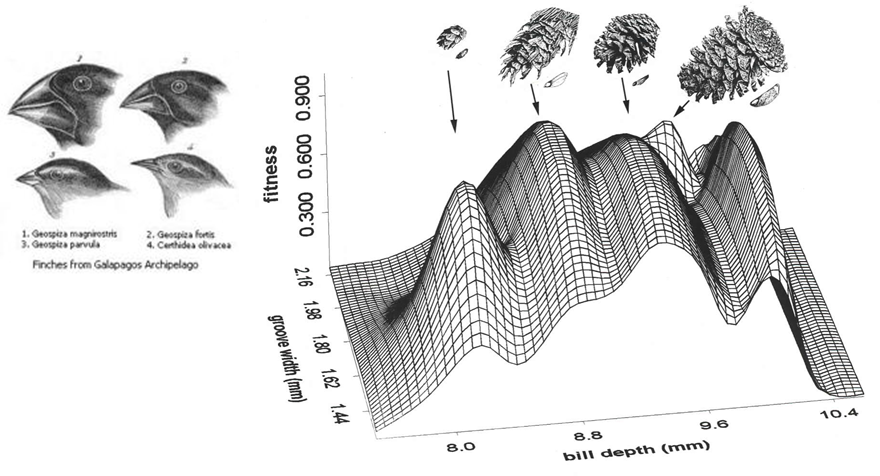

Examples: Biological Landscapes

-

A biological example of a fitness landscape would be beak

size:

-

Different beak sizes match different seeds, hence why there

are multiple peaks.

-

This graph is in trait space, which means the axis are traits of the individual, such

as bill depth.

-

But, if we think about this for a second, we realise things

aren’t quite so simple.

-

What actually affects these “fitness”

values?

-

There’s a whole sequence of things that derive fitness for individuals:

-

Nucleotide sequences (ACTGGGGACAC)

-

→ Amino acid sequence

-

→ Protein shape

-

→ Protein function

-

→ Trait value

-

→ Fitness contribution

-

That’s a lot of steps!

-

Evolution only really affects the nucleotide sequences, so

those are the things we should be graphing.

-

But that’s so complex, that we’ll be spending

the rest of our lives drawing one graph!

-

Plus, that fitness contribution is still epistatically

dependent on other proteins.

-

So, what? We cry and switch modules?

-

Not today! We create small windows on real

landscapes.

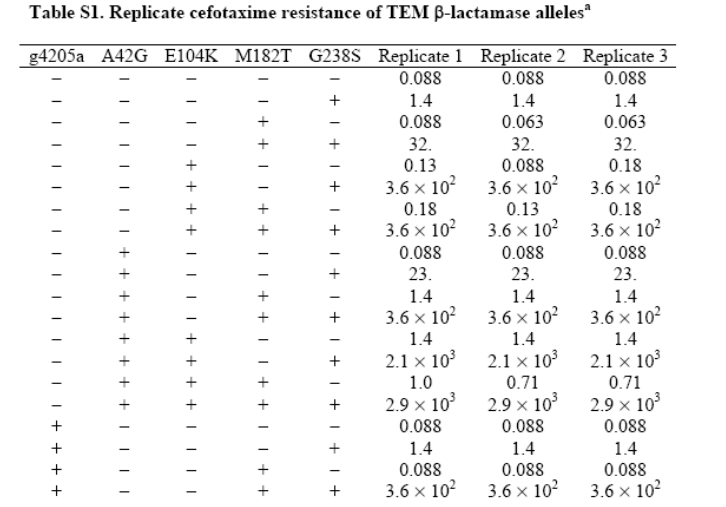

Complications

-

When we’re trying to reason with real-life evolution,

we can’t map out an entire fitness landscape.

-

Instead, we “peek” and look at small windows of

the real landscape.

- How?

-

There are different ways to do this.

-

One way is looking at populations of different gene

mutations:

-

... and then constructing accessible evolutionary paths

from that data:

-

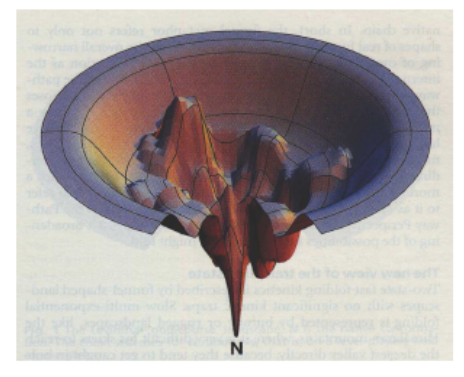

Another way is a series of one-step peeks into a

high-dimensional space.

-

So, instead of mapping the whole landscape, we map points

with a radius (like a flashlight in a horror game):

-

We can even use protein folding.

-

These long protein strings ultimately determine

fitness.

-

We can “fold” these proteins, and each protein

folds in a different way.

-

They can fold well, or they can fold... not so well.

-

If the protein folds the way it should, that means that

protein has a low fold energy, and if it doesn’t fold

the way it’s supposed to, that protein has a high fold

energy.

-

We can map this fold energy to fitness.

-

We want proteins that fold properly, so a low fold energy

is high fitness, and a high fold energy is low

fitness.

-

It gets even more complicated...

-

There’s the whole Wright vs Fisher and the relevance

of epistasis.

- Things like:

-

Spaces and operators

-

Individuals vs Populations

-

Sexual populations vs Asexual populations

-

Noise and dynamic landscapes

- Coevolution

-

Designing landscapes

-

“If we’re on a hill anyway, we’re always

going to go uphill.”

-

“Other peaks and optima do not matter.”

-

“So epistasis doesn’t matter,

either.”

-

“Dumbass.”

-

“Epistasis and local optima are a big deal for

natural populations.”

-

“They’re biologically significant too,

dickhead.”

Fisher and Wright, hard at work.

-

How do populations go from one peak to another?

-

To do that, we need to partially ignore the local fitness

gradients.

-

This can happen in small populations (or multi-dimensional populations).

-

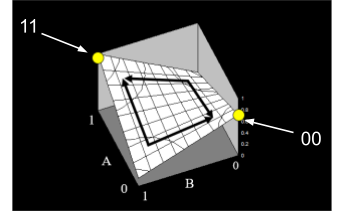

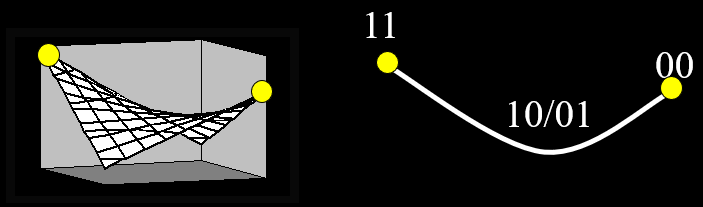

We can represent populations in a certain way.

-

We can represent it as a point in an allele frequency space, which refers to the set of all possible vectors that

denote the frequency of alleles in each loci of the

gene.

-

Movements due to selection in sexual populations move to

adjacent points in this space... sometimes.

-

Doesn’t make sense? Not a problem, here’s an

example:

|

Example

|

|

|

0

|

0

|

0

|

1

|

1

|

1

|

1

|

|

0

|

0

|

0

|

1

|

1

|

1

|

1

|

|

1

|

0

|

0

|

1

|

0

|

0

|

0

|

|

1

|

1

|

0

|

0

|

0

|

1

|

1

|

|

2

|

1

|

0

|

3

|

2

|

3

|

3

|

-

The blue rows are the genes, and are bit

strings.

-

At each column, the value in red is the sum

of the 1 values in blue.

-

So the first column is ‘2’

because there are 2 1’s in the column

above.

-

The red row is our allele frequency

vector.

-

It is our representation of this

population in our allele frequency

space. population in our allele frequency

space.

|

-

You know, all this time we’ve been assuming that

distance = genetic differences (Hamming distance).

-

Like, the genotype AAB has a distance of 1 away from

ABB.

-

But what if that’s not strictly true?

-

For example, could you get from:

-

in just one step?

-

With mutation, no.

-

With crossover...?

-

000000000000000000 +

-

111111111111111111 =

-

000000000111111111

-

So, we can get from that genome to that other genome in one

move!

-

If that’s so, then they should have a distance of 1,

right?

-

That means they should be neighbouring, right?

-

Terry Jones, back in 1995 when he was writing his PhD,

would say “yes”.

-

Richard would say “no”, though.

-

So as you can see, the representation of a genome is very

important.

-

It determines what movements are natural and what fitness

landscapes exist.

-

When you design EAs, you need to choose your encoding

wisely.

Sex

-

Just quickly, let’s go over the two-fold cost of sex, or the cost of males.

-

Let’s say individuals can produce 4 eggs per

generation.

-

Asexuals can produce 1 → 4 → 16 → 64 eggs in

4 generations

-

With sexuals, it’s halved, because half of

individuals are males who can’t produce eggs.

-

So sexuals can produce 1 → 2 → 4 → 8 in 4

generations. Far less!

-

In summary, males contribute genes but they don’t

increase egg production or population growth.

-

So, why do we have males?

-

It’s a bit more complicated than just maths.

-

Males also provide parental investment.

-

Plus, egg production in females might be increased to

compensate, so egg production is the same after all.

-

But why do humans sexually populate at all? Why don’t

we asexually reproduce?

-

Let’s find that out...

-

There’s two ways to make new individuals with

sex:

- Reproduction

-

Recombination

-

With reproduction, each parent has two diploids.

-

Their sex cells takes one of the two strands, called

haploids.

-

When they “have sex”, a haploid from

‘daddy’ and a haploid from ‘mummy’

join together to form a diploid again: a new

individual.

-

We’re not really making any new genes; we’re

shuffling them around.

-

With recombination, we make new genes for the children.

-

There’s two kinds of recombination:

-

Chromosomal reassortment: Chromosomes are split and shuffled in hard-coded cutoff

points that are the same every time

-

Crossover: Chromosomes are split and shuffled in randomly selected

cutoff points

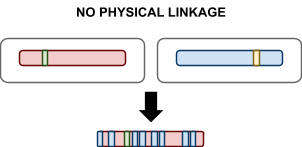

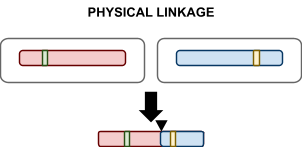

Linkage Equilibrium

-

When you have crossover, the chance that two genes are

still together after recombination depends on their physical linkage.

-

Physical Linkage: the tendency for alleles to travel together during

recombination due to physical proximity on the

chromosome.

-

In uniform recombination there is no physical linkage, because all genes are

chosen at random.

-

In one-point crossover, there is physical linkage, because adjacent genes on one

side of the cutoff point remain together.

-

When you do recombination, you can either join

alleles:

-

How do the frequencies of these combinations change?

-

Linkage Equilibrium: joint frequencies of alleles are product of marginal

frequencies

-

In other words, we say that there is no over (or under)

representation of combinations of alleles.

-

Using maths, it means f(AB) = f(A) * f(B)

-

f(A) means the frequency of ‘A’ genes in the

chromosomes

-

Linkage Disequilibrium is the deviation of the observed frequency of gene pairings

from the expected frequency.

-

Basically, it’s any deviation from linkage

equilibrium.

-

What does that really mean?

-

Let me use an example from Wikipedia.

|

Example: Two-loci and two-alleles

|

-

Let’s say you have a chromosome with

two loci (positions) and two possible

alleles for each loci:

ab

aB

Ab

AB

-

Let’s say the frequencies of getting

each of these are:

|

Haplotype

|

Frequency

|

|

ab

|

|

|

aB

|

|

|

Ab

|

|

|

AB

|

|

-

Those are the frequencies of the haplotypes

(frequency of the combination of alleles

together).

-

Here’s the frequencies of the alleles

individually:

-

If the loci and alleles are independent of

each other, then you could say that:

-

In other words, the frequency of gene

‘ab’ is just the frequency where

the first loci picks ‘a’ and the

second loci picks ‘b’.

-

That’s linkage equilibrium.

-

However, linkage disequilibrium is where

the loci and alleles are not independent of

each other, and observed frequencies like

don’t follow this

pattern. don’t follow this

pattern.

|

-

Remember physical linkage?

-

Uniform crossover has no physical linkage because random

loci are picked for the child.

-

That means there’s no way for two joint alleles to be

over or under represented, because they have an equal chance

of being selected or not selected.

-

So uniform crossover is really good at returning alleles to

linkage equilibrium.

-

However, what about one-point crossover?

-

If the joint alleles are close together, they’ll have

high physical linkage, so they’ll be over

represented.

-

If the joint alleles are far apart, they’ll have low

physical linkage, so they’ll be closer to linkage

equilibrium.

-

How do we get out of physical disequilibrium?

-

Have sex! (use sexual recombination, I mean)

-

A benefit of sexual recombination is that it reduces

linkage disequilibrium.

-

Under-represented combinations are created more than

they’re destroyed.

-

Over-represented combinations are destroyed more than

they’re created.

-

Why do organisms have sex? Why can’t they just

asexually mutate for everything?

-

What’s so good about reducing linkage

disequilibrium?

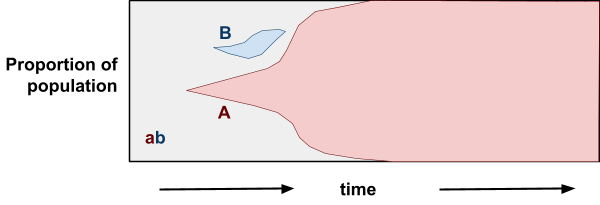

Fisher / Muller Hypothesis

-

Let’s take our two-loci two-allele example

again.

-

Let’s say we have a big population of them.

-

Upper-case alleles are more beneficial than lower-case

ones.

-

However, through mutation, individuals can only go

from:

-

This is linkage disequilibrium: the frequency of AB does

not correlate with the frequency of A and B individually

because mutation can’t get to AB.

-

With an asexual population, we get:

-

Individuals mutate and get Ab and aB, but since they

can’t mutate to be both, there’s

competition.

-

Eventually, Ab wins, and the beneficial B allele dies

out.

-

However, with a sexual population:

-

With crossover, we don’t need mutation to reach AB;

an Ab could mate with an aB and get an AB!

-

This removes competition between the two segregating

alleles.

-

In other words, sex is advantageous because it allows

alleles to be selected for independently.

-

Let’s look at this with bit-strings.

-

Let’s say you have two initial genotypes:

|

00100000000010000001 (3)

|

00000100000010001000 (3)

|

-

With asexuals, you can only produce more of these two

genotypes.

-

Yeah, there’s mutation, but that’s quite

slow.

-

But sexuals can produce all of these:

|

00100000000010000001 (3)

|

00100000000010000000 (2)

|

|

00100000000010001001 (4)

|

00100000000010001000 (3)

|

|

00100100000010000001 (4)

|

00100100000010000000 (3)

|

|

00100100000010001001 (5)

|

00100100000010001000 (4)

|

|

00000000000010000001 (2)

|

00000000000010000000 (1)

|

|

00000000000010001001 (3)

|

00000000000010001000 (2)

|

|

00000100000010000001 (3)

|

00000100000010000000 (2)

|

|

00000100000010001001 (3)

|

00000100000010001000 (3)

|

-

Way more variation!

-

Variation is good, because it means we can explore the

search space better.

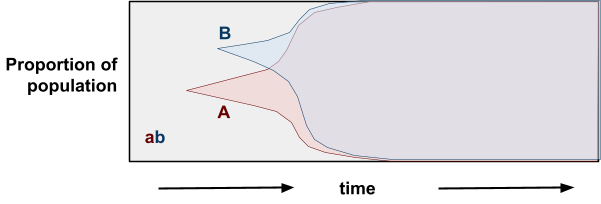

Muller’s Ratchet

-

Muller’s Ratchet is like the opposite of the Fisher /

Muller hypothesis.

-

Instead of good alleles coming together, this is about

removing bad alleles.

-

Let’s say you have a population of good

genotypes.

-

That means almost any mutation would make the individual

worse than better.

-

Like in the “methinks it is like a weasel”

where you have a sentence that’s only one character

off from being perfect.

-

With asexuals, mutations that bring the fitness down cannot

be recovered (at least not easily). That’s like a ‘click’ of the ratchet

you can’t go back on.

-

But with sexuals, you can cross two half-unmutated

individuals to recover the original unmutated

individual.

-

Populations are finite

- No elitism

-

No back mutations

-

No beneficial mutations (mostly because all genotypes are

good)

-

This is cool because deleterious mutations are more common

than beneficial ones.

-

However, this only applies to finite populations, and

according to Charlesworth, the time for a ratchet click is

very long in large populations.

|

|

💡 NOTE

|

|

|

|

Richard skipped over the deterministic mutation

hypothesis, so I won’t go over it in the

notes.

Let’s hope it doesn’t come up in

the exams!

|

|

-

Ok sex is cool and all but what about from a computing

point of view?

-

Is using sex any better than a simple hill-climber or

something?

-

The examples I used before had no local optima, so a

hill-climber would make all our problems go away, plus

it’d be easier to implement.

-

In the next section, we’ll go over why sex is good

for computer scientists too.

Crossover in EAs

Focussing Variation

-

Let’s have a look at uniform crossover again.

-

Let’s say we’re uniform crossover-ing these two

bit-strings:

|

0

|

0

|

1

|

1

|

0

|

1

|

0

|

1

|

|

0

|

1

|

1

|

1

|

0

|

0

|

1

|

1

|

-

Have a look at the first bit. It’s always going to be

zero, won’t it?

-

No matter which bit-string uniform crossover picks, that

bit will always be zero.

-

All bits where the two individuals agree on the same bit

won’t change:

|

0

|

0

|

1

|

1

|

0

|

1

|

0

|

1

|

|

0

|

1

|

1

|

1

|

0

|

0

|

1

|

1

|

|

-

|

?

|

-

|

-

|

-

|

?

|

?

|

-

|

-

What uniform crossover is effectively doing is just

mutation on those bits where the individuals are different (where I’ve put the green question marks).

-

This concept is called focussing variation, or ‘focussing search’.

I know it’s hard to, but you must focus!

-

Let’s have a look at the Hurdles problem.

-

The X axis is the number of zeroes.

-

The Y axis is the fitness.

-

You’ll notice that there’s dips when the number

of zeroes is odd, and peaks when it’s even.

-

Let’s say we have an individual on a peak. How do we

get to the next highest peak?

-

For example, let’s say, we have 18 ones and 2

zeroes.

|

11111011111101111111

|

|

-

Let

be the length of the bit-string.

be the length of the bit-string.

-

How would asexuals (mutation) vs sexuals (uniform

crossover) fare with this problem?

|

Asexuals

|

Sexuals

|

|

Let’s say each bit has a  chance of being mutated. chance of being mutated.

We have two zeroes we need to mutate to get to

the next peak.

However, we can’t just mutate one of the

two zeroes, because we’d fall into the

dip, get worse fitness, and this individual will

die out.

We must mutate both zeroes at the same

time.

The chance of that is  , or , or  . .

To generalise that, the chance of mutation

getting to the next peak is  , where , where  is how big the hurdle is. is how big the hurdle is.

|

Let’s say there’s two individuals

at the two zeroes peak:

11111111111101101111

11011011111111111111

Remember, uniform crossover is the same as

mutation on the bits that are different:

11111111111101101111

11011011111111111111

--?--?------?--?----

If we were to do mutation on those differing

bits, we’d need 4 successive ones to get

to the next peak.

The chance of that is  . .

To generalise that, the chance of uniform

crossover getting to the next peak is  , where , where  is how big the hurdle is. is how big the hurdle is.

|

-

Sexuals are way more likely to solve the problem quicker

than asexuals!

-

The chance a sexual solves the problem does not depend on

the length of the bit string, but asexuals do.

-

In other words, sexuals are way better at

“jumping”, or performing better in fitness

landscapes with gaps.

-

Can hill-climbers do that? Pfft... no.

-

Let’s compare one point crossover with uniform

crossover.

-

We have two bit-strings:

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

|

-

|

-

|

?

|

-

|

-

|

?

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

?

|

-

|

-

|

?

|

-

With uniform crossover, you can make up to 16 different

bit-strings from these two.

-

With one-point crossover, you can make 3 unique

bit-strings.

-

That sounds like crap, but hear me out.

-

The chance of getting each bit-string in one-point

crossover is

(on average; assuming all differing genes are

equidistant).

(on average; assuming all differing genes are

equidistant).

-

Let’s say that both uniform crossover and one-point

crossover gives you the children you want.

-

Which one are you gonna pick:

-

The one with exponential complexity or

-

The one with polynomial complexity

-

You’re going to pick the polynomial one, right?

-

If one-point crossover is going to give you the children

you want anyway, you might as well use that and get the

individuals you want faster than with uniform crossover or

mutation.

-

Yeah, uniform crossover gives more variation, but

it’s variation you don’t need. The group of

resulting individuals will be ‘diluted’ with

useless individuals of low fitness.

-

What’s the difference between:

-

the individuals you’d get from one-point crossover

and

-

the individuals you’d get from uniform

crossover?

-

Differing bits that are close together are unlikely to be

recombined.

-

Here’s an example:

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

?

|

?

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

With one-point crossover, what’s the chance of

getting a child with two ones?

-

It’d be very low, because the cutoff point would have

to hit that sweet spot to get the one from the red parent

and the one from the blue parent.

-

What about now?

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

1

|

0

|

|

-

|

?

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

-

|

?

|

-

|

-

Now there’s a huge chance that the bits are

recombined, because there’s a much bigger window for

the cutoff point.

-

In fact, it’s unlikely that they won’t get

recombined.

-

In summary, two close differing bits are unlikely to both change because of their physical linkage.

-

This is the bias of one-point crossover.

-

What if we could use this, though...?

-

What if the bits we want to keep the same are kept close

together?

Schemata and Building Blocks

Schemata Theorem

-

A schema is a general representation of a set of individuals that

share common bits.

-

For example, given this set of bit-strings:

|

1

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

1

|

0

|

0

|

1

|

0

|

0

|

0

|

0

|

|

0

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

1

|

|

1

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

1

|

0

|

1

|

0

|

0

|

1

|

0

|

0

|

1

|

1

|

|

1

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

1

|

1

|

0

|

1

|

0

|

1

|

1

|

0

|

1

|

1

|

|

*

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

*

|

*

|

*

|

*

|

*

|

*

|

*

|

0

|

*

|

*

|

-

If you’re sick of these coloured tables already,

it’s this:

-

The genes that are the same for individuals in the schema

are represented as ‘0’ or ‘1’.

-

The genes that can be anything for individuals in the

schema are represented as ‘*’.

-

The order of a schema is how many specific genes there are in the

schema.

-

For example, **1**0*1* has an order of 3.

-

The defining length is the distance from the first specified gene to the last

specified gene.

-

For example, **101** has a shorter defining length than 1***0.

-

You can also say “shorter order” if you have a

small defining length.

-

The schema fitness is the average fitness of all genotypes that fit that

schema.

Building Block Hypothesis

-

Holland (1975), Goldberg (1989) and others said that

schemas are the reason why GAs work so well.

-

They say that:

-

GAs find small schemas

-

Crossovers put small schemas together to make bigger

ones

-

It keeps making bigger schemas until the whole problem is

solved

-

These are Building Blocks: low-order, short defining length schema of above average

fitness, that can be put together with crossover to make

bigger building blocks.

-

Mitchell and Forrest set out to test this.

-

They created an idealised problem with a special kind of

building block.

-

You’ve seen it before: the Royal Road!

|

Bit string

|

Fitness

|

|

1111 0000 0000 0000

|

8

|

|

0000 1111 0000 0000

|

8

|

|

0000 0000 1111 0000

|

8

|

|

0000 0000 0000 1111

|

8

|

|

1111 1111 0000 0000

|

16

|

|

0000 0000 1111 1111

|

16

|

|

1111 0000 1111 1111

|

24

|

|

1111 1111 1111 1111

|

32

|

-

As you can see, if you

had:

-

1111 0000 0000 0000 (8) and

-

0000 0000 1111 1111 (16)

-

You can put these two building blocks together with

crossover to form:

-

Mitchell and Forrest did this, but it’s still nothing

a hill-climber can’t do.

-

In fact, a hill-climber does this better than a GA!

-

Plus, it’s a simpler algorithm!

-

How embarrassing...

-

So what can GA do better than hill-climber?

-

Well, that whole ‘sexuals being good at

jumping’ thing from earlier was pretty good.

-

That’s from Shapiro and Prugel-Bennet (1997)’s

‘two wells’/’basin with a barrier’,

and Thomas Jansen (2001)’s

‘Gap’/’jump’ function.

-

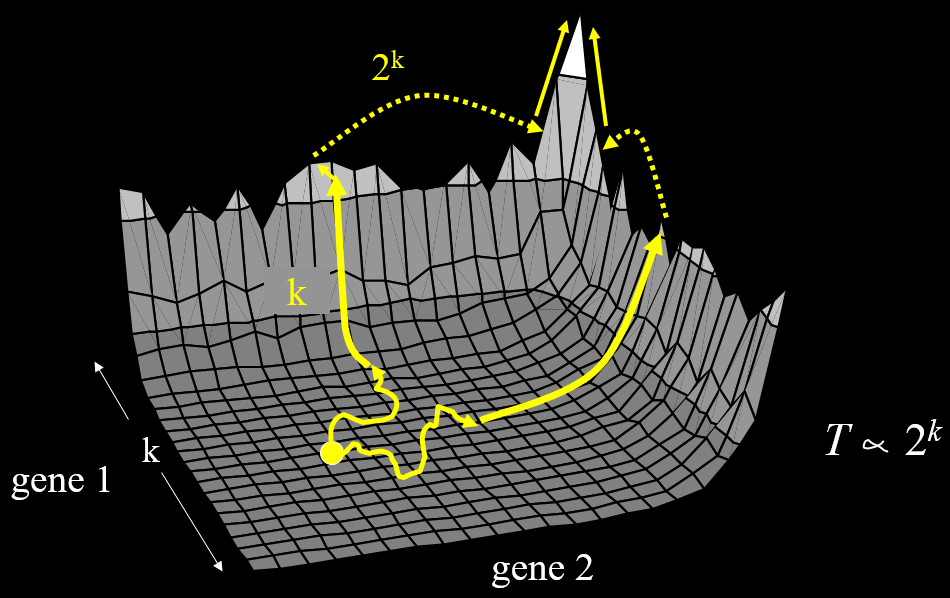

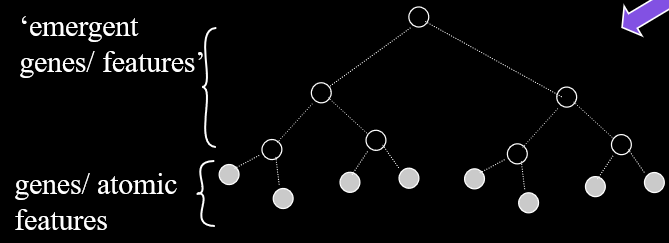

Also, Watson himself made a hierarchical modular

building-block problem (called H-IFF) where hill-climber can solve it in

, whereas GA with tight linkage can solve it in

, whereas GA with tight linkage can solve it in  .

.

-

That’s great and all, but so what?

-

Who cares? Do YOU care?

-

GAs can solve some things better than hill-climbers,

but...

-

... it’s not a standard GA, or even a

‘standard’ problem.

-

We’re just solving problems we’ve invented

ourselves.

-

We’re not solving any practical engineering problem,

and it has nothing to do with biology.

-

Can we show that GAs are the best thing ever in a more

simple model?

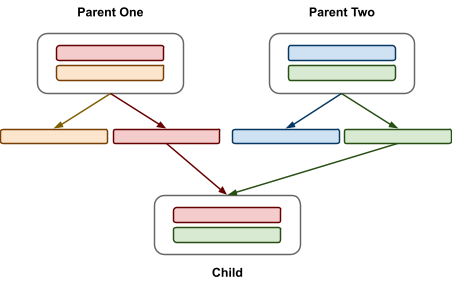

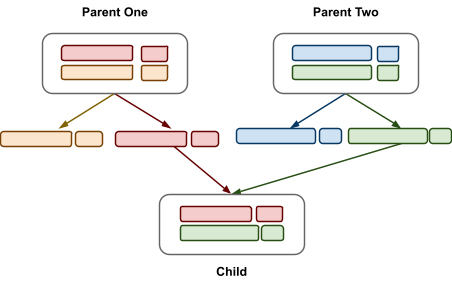

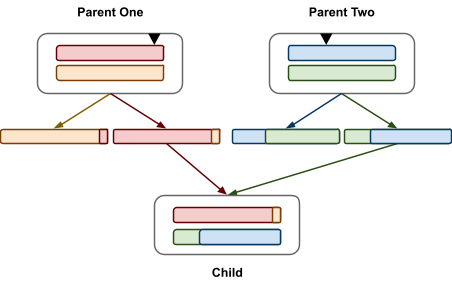

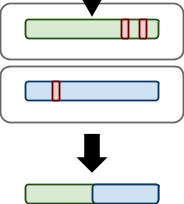

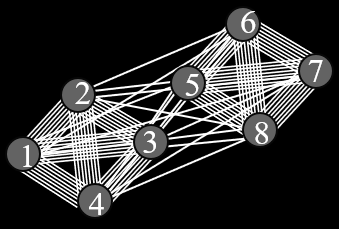

Two-Module Model

-

We’re going a bit biological in this one.

-

Nucleotide sites within a gene are grouped both

functionally and physically by virtue of shared

transcription and translation.

-

In other words, this is a simple form of biologically-real

modularity.

|

|

|

gene 1 (determines how strong you are)

|

|

|

|

|

gene 2 (determines how smart you are)

|

|

|

|

|

|

1

|

1

|

0

|

1

|

0

|

0

|

|

|

|

|

1

|

1

|

1

|

1

|

1

|

1

|

|

|

-

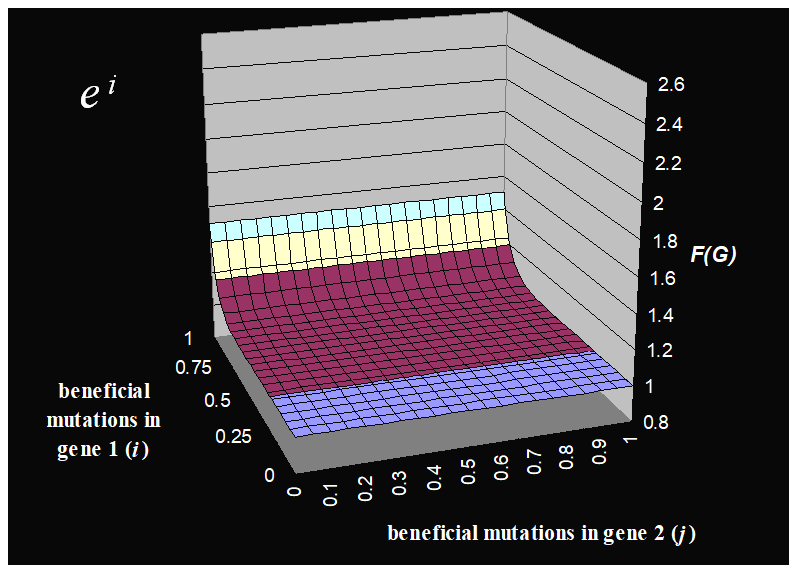

Obviously, we want to be strong and smart 😎 so we’d want to maximise the

ones in both these genes.

-

Let’s say that mutations in the same gene have

stronger synergy than mutations in different genes.

-

In other words, fitness increases exponentially with each

good mutation; the closer we are to a perfect gene, the more

important the mutations are.

-

You wanna see the fitness functions of this?

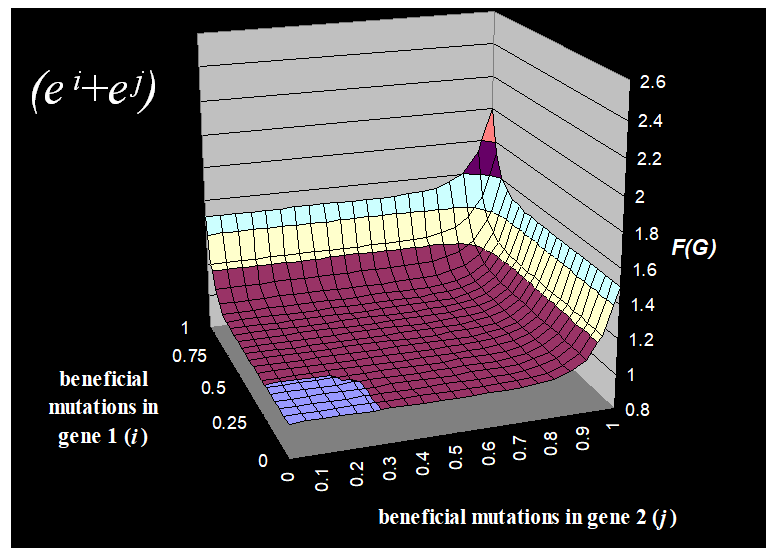

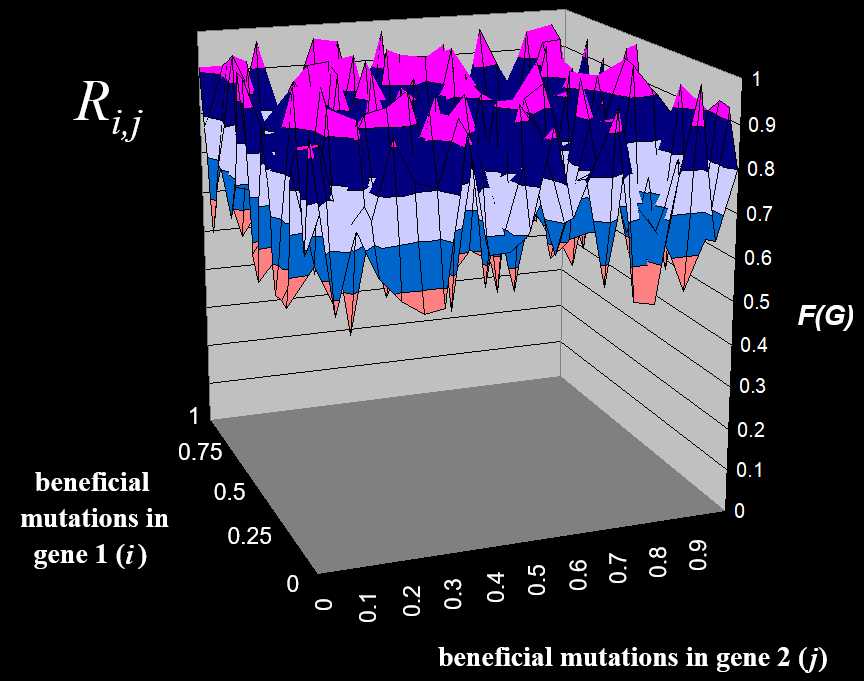

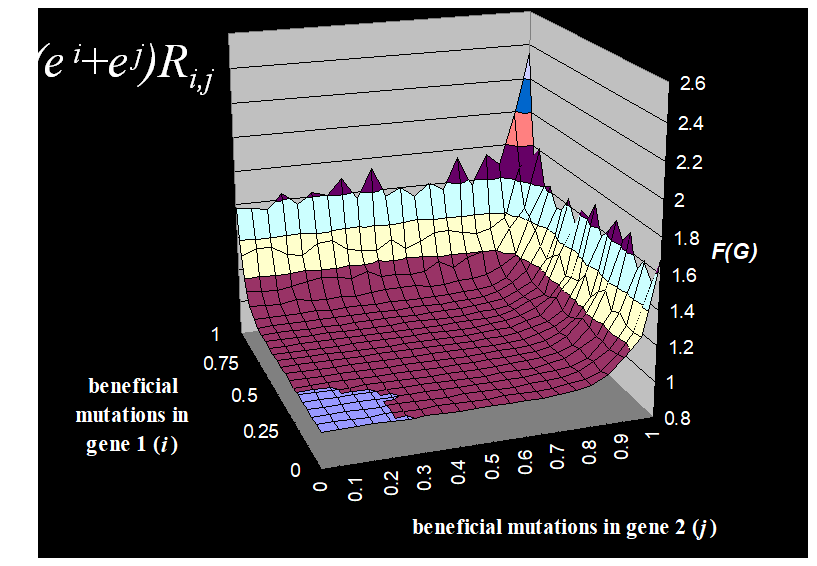

|

Fitness Function

|

Description

|

|

|

This fitness function only takes into account

gene 1.