Distributed Systems & Networks

Matthew Barnes

Networking (Kirk) 5

Physical + Link layer 5

Functions 5

Acknowledgements 7

Frames 9

Ethernet CSMA/CD 10

WiFi CSMA/CA 10

Ethernet LANs 11

Ethernet theory 11

ARP 12

Building Ethernet LANs 13

Spanning tree protocol 13

VLANs 15

Ethernet frame priority 16

Internet/Network layer 16

Internet Protocol (IP) 17

Subnets 21

Calculating subnets 23

ICMP 25

IP routing protocols 25

Aggregating prefixes 26

Interior routing protocols 27

Distance Vector 27

Link state 28

Exterior routing protocols 29

Transport layer 30

UDP 30

TCP 31

TCP or UDP? 35

Sockets 36

DNS 37

Application layer 40

Telnet 40

Email 41

SMTP 41

IMAP 42

HTTP 42

HTTP/2 43

QUIC 43

CoAP 44

RTSP 44

SMB 44

NFS 45

P2P 45

IPv6 45

IPv6 Features 46

Why use v6? 47

IPv6 headers 47

IPv6 with routers and privacy 48

Deploying IPv6 48

IPv6 security 49

Other IPv6 stuff 49

Network security 50

DNS Security 50

Firewalls 51

Intrusion Detection Systems 52

Port / Physical Security 52

DDoS 53

Wi-Fi (In)Security 54

Distributed Systems Implementations (Tim) 55

Distributed Systems Models 55

Physical models 55

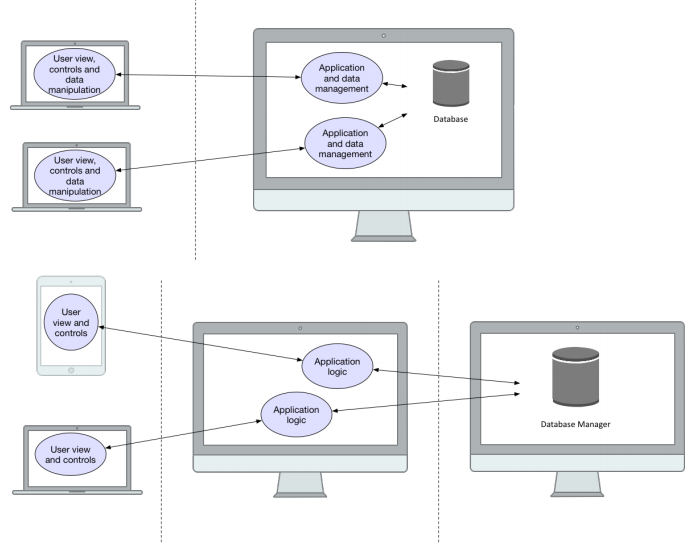

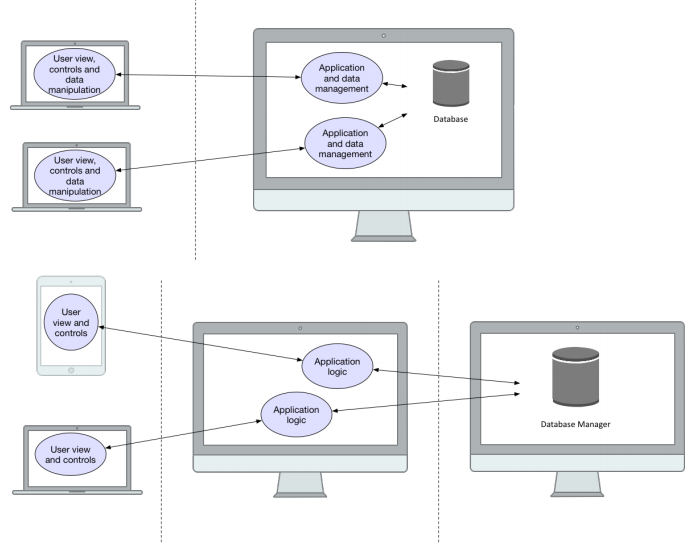

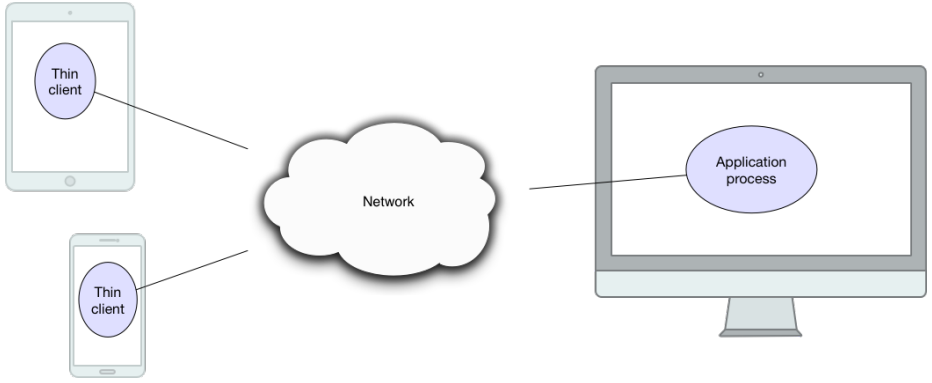

Architectural models 55

Mapping entities to infrastructure 56

Architectural patterns 56

Fundamental models: Interaction, Failure and Security 57

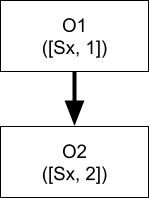

Distributed Object Systems 59

Distributed OOP 59

Remote Interfaces 60

Server Deployment 60

Dynamic Code Loading 60

Many Clients! 61

The Monitor 61

The Observer Pattern 62

RMI Limitations 62

Data Serialisation 62

Serialising Java Objects 62

Mobile code 📱 63

Pass by reference or value? 63

Programming language independence 64

JSON 64

GSON 65

Linked Data & Semantics 65

Loose Coupling 66

Space & Time Uncoupling 66

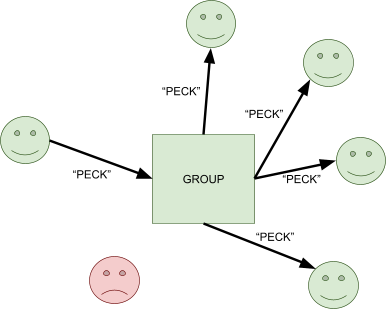

Group Communication 67

Publish & Subscribe 68

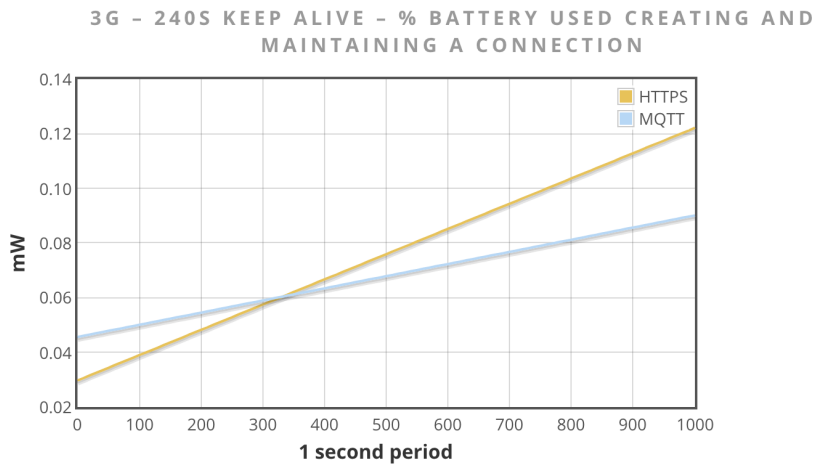

Message Queues & IoT 69

RabbitMQ 70

Distributed Transactions 71

Distributed Data 71

Lost Updates & Inconsistent Retrievals 71

Dirty Reads & Premature Writes 73

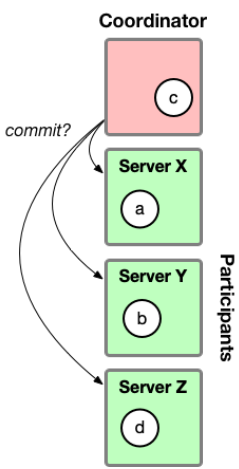

Distributed Commit Protocols 74

Two-Phase Commit 75

2PC Failure Handling 75

Increasing Concurrency & Deadlocks 76

Distributed Systems Theory (Corina) 77

Time in Distributed Systems 77

Clock synchronisation 77

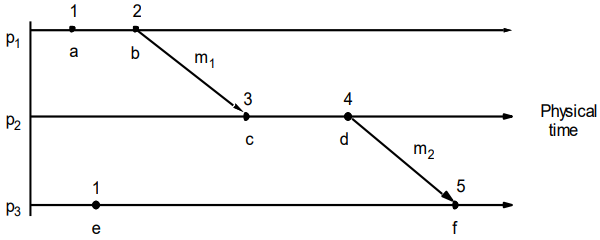

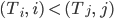

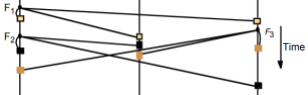

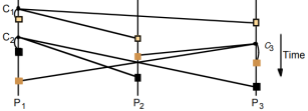

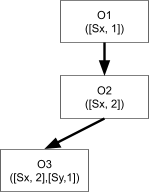

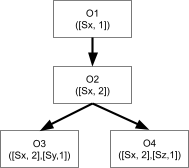

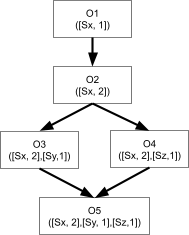

Logical clocks 78

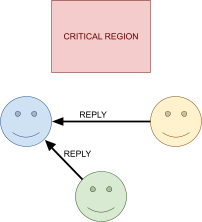

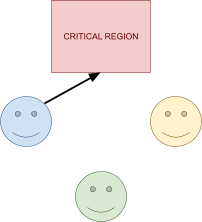

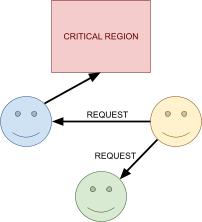

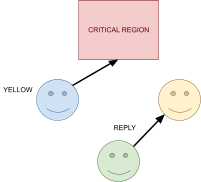

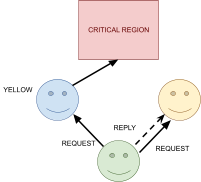

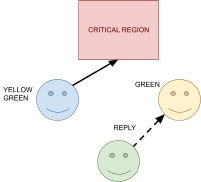

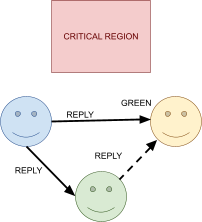

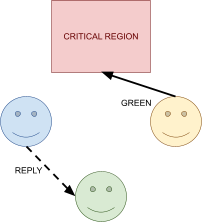

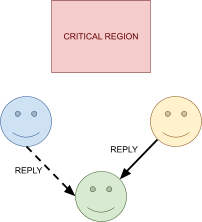

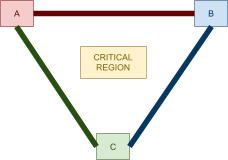

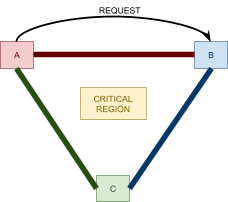

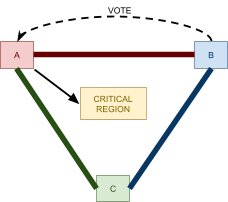

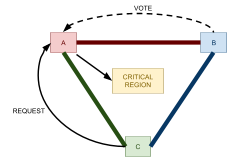

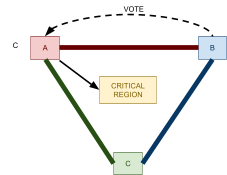

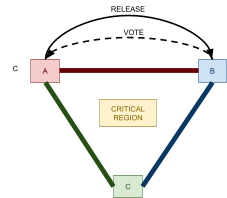

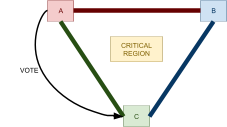

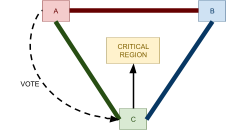

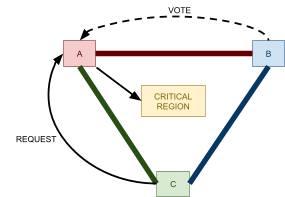

Distributed Mutual Exclusion 82

Failure detectors 82

Distributed Mutual Exclusion 82

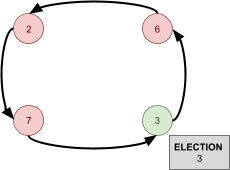

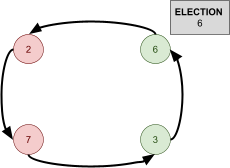

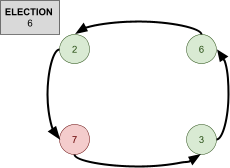

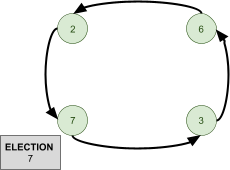

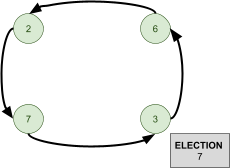

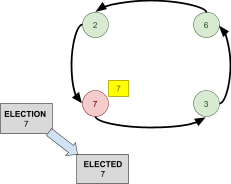

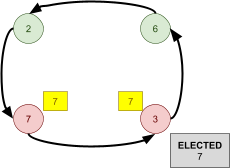

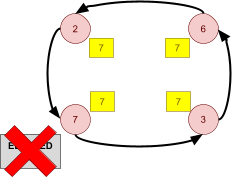

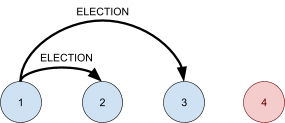

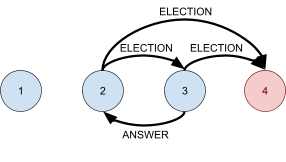

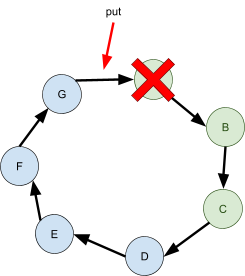

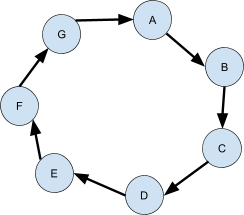

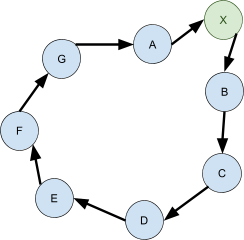

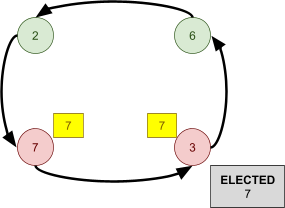

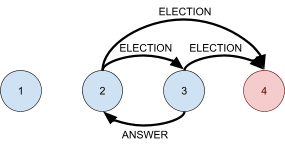

Leader Election 91

Asynchronous systems 92

Synchronous systems 96

Reliable and Ordered Multicast 98

Basic multicast 99

Reliable multicast 99

Ordered multicast 100

Consensus 103

Synchronous 104

Asynchronous 105

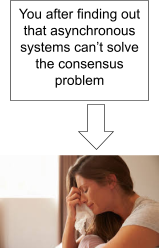

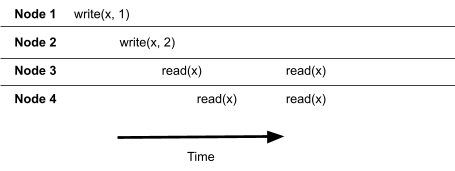

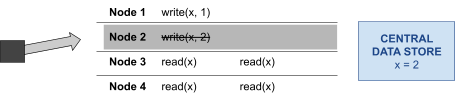

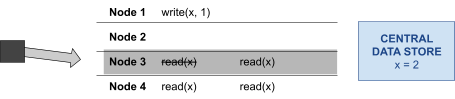

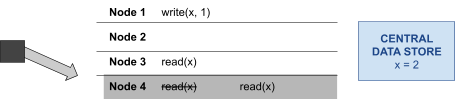

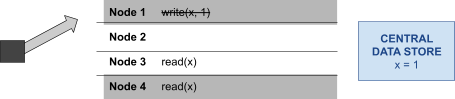

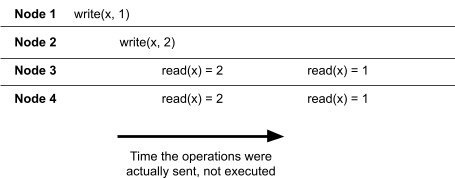

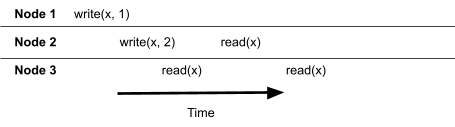

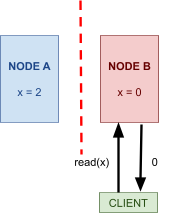

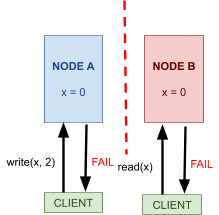

Consistency models 106

Strong consistency 107

Sequential consistency 107

Causal consistency 109

Eventual consistency 109

Strong eventual consistency 110

Other stuff (Leonardo) 110

Data Replication and Scalability 110

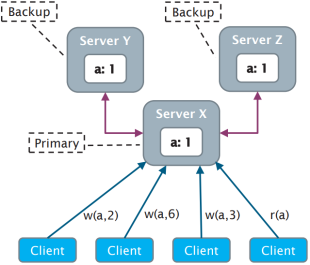

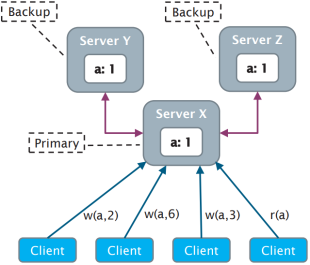

Data Replication 110

Primary-backup 110

CAP theorem 112

Scalability 115

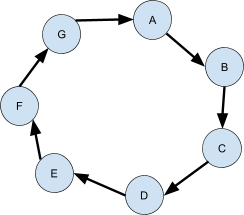

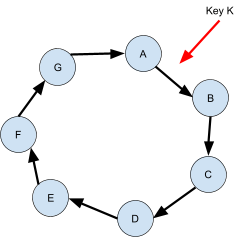

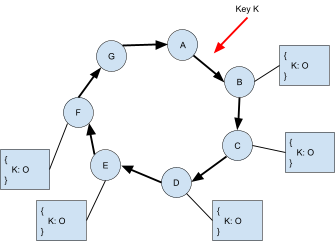

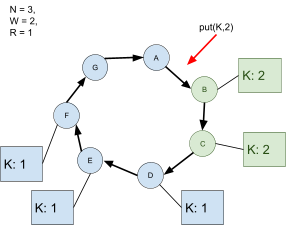

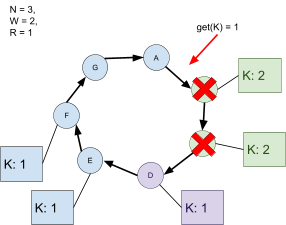

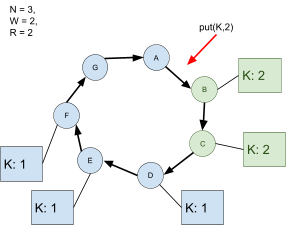

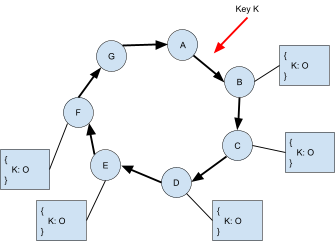

Highly Available Distributed Data Stores (Amazon Dynamo) 116

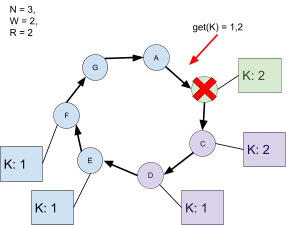

Replication 117

Consistency 118

Fault tolerance 123

Why is Amazon Dynamo AP? 123

Scalability 124

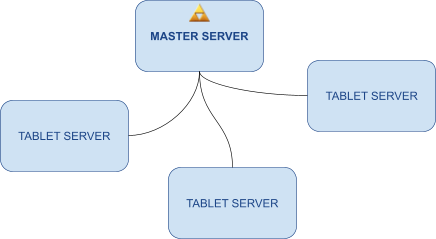

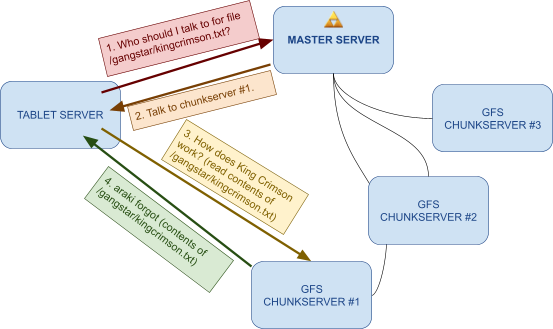

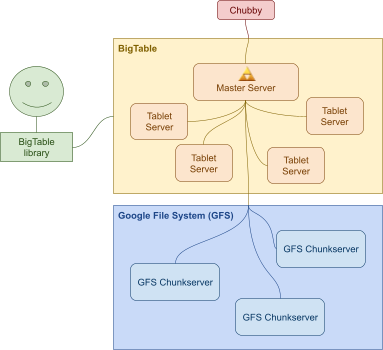

Consistent Distributed Data Stores (Google BigTable) 125

Data model 125

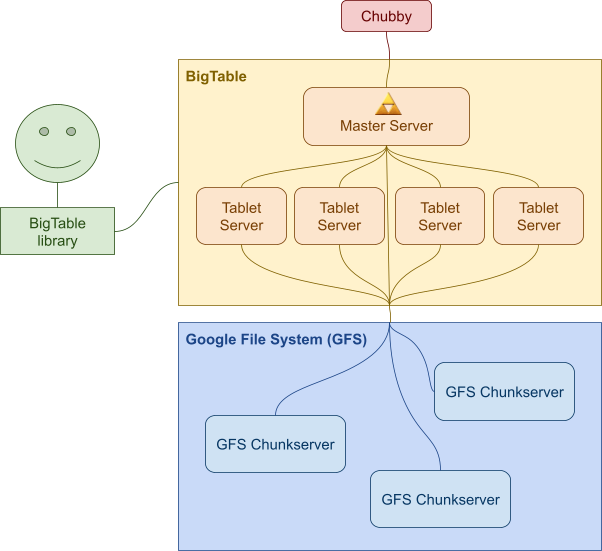

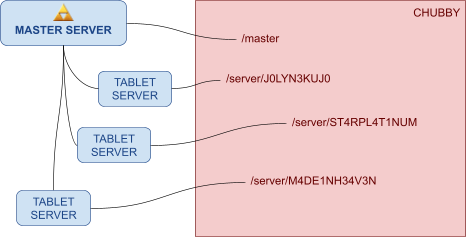

Architecture 126

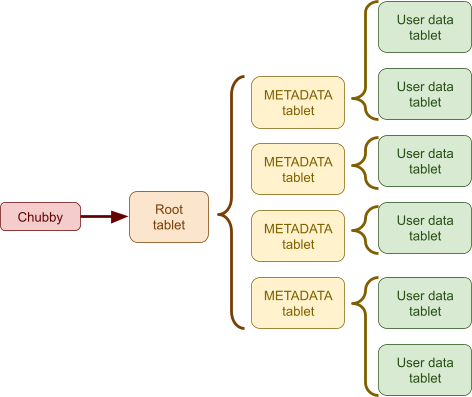

Tablets 129

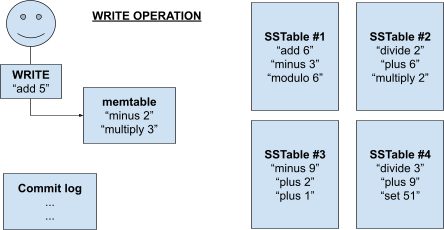

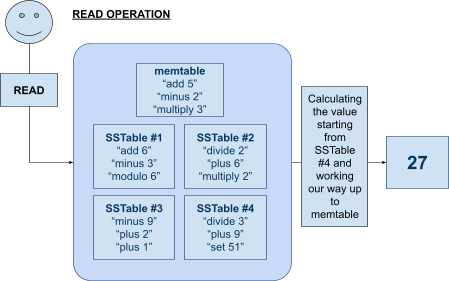

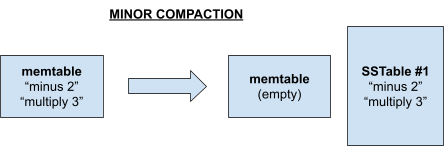

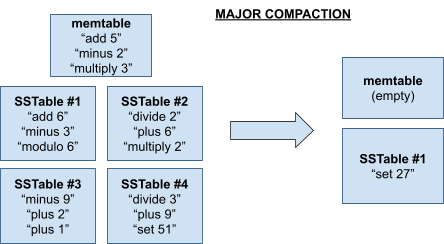

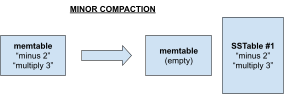

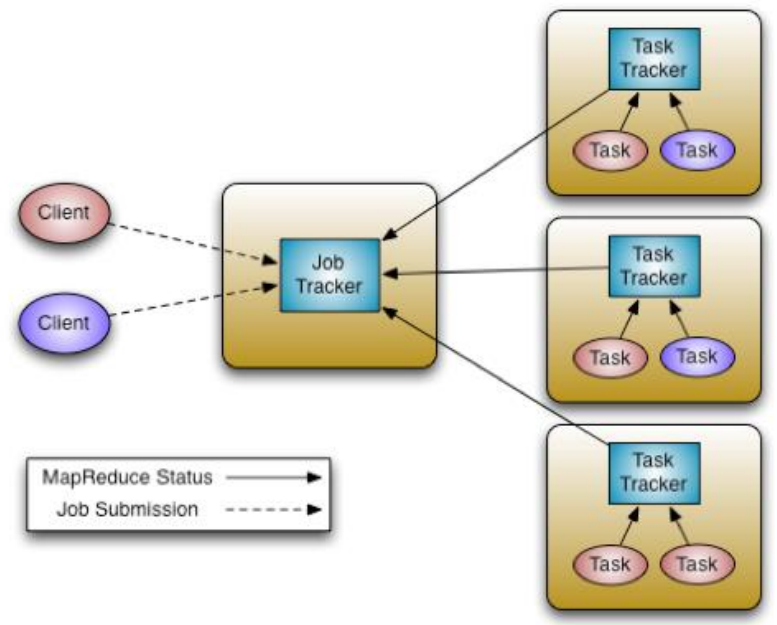

SSTables 130

Why is Google BigTable CP? 132

Online Distributed Processing 132

Use cases 132

Requirements 133

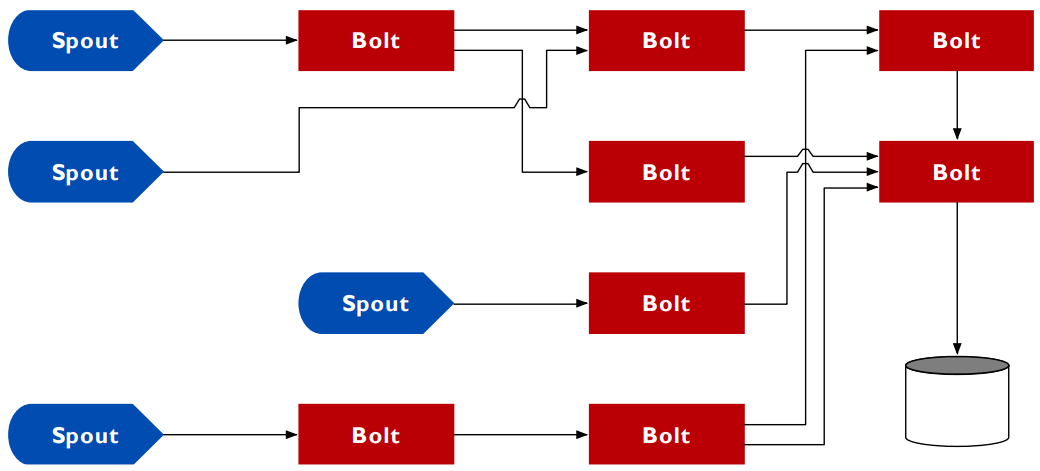

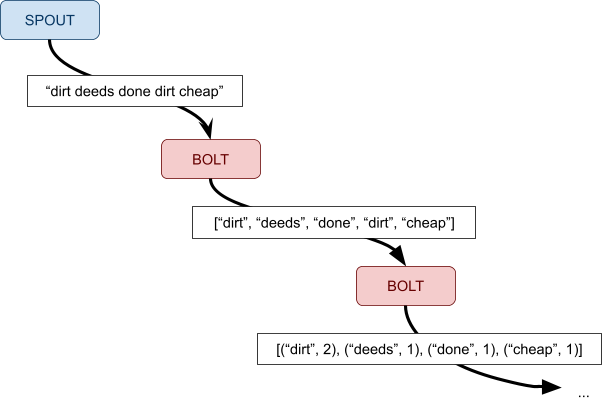

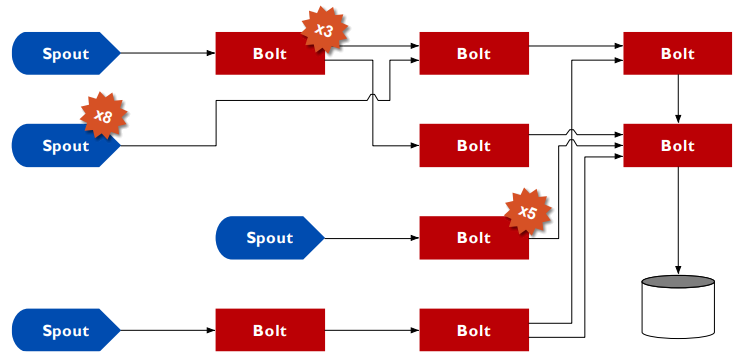

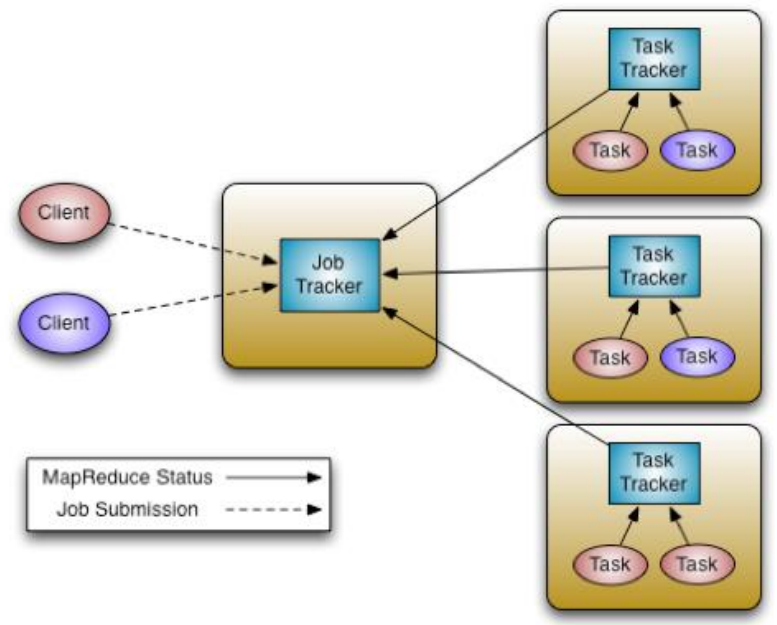

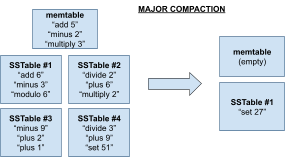

Apache Storm 133

Data model 133

Architecture 134

Replication 135

Stream grouping 136

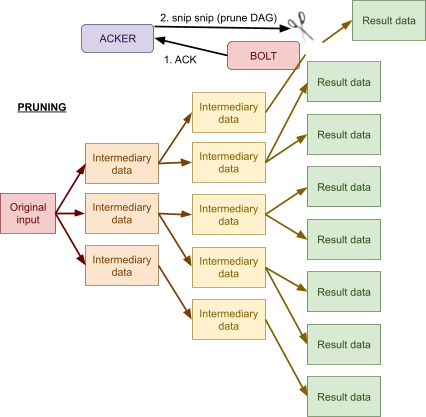

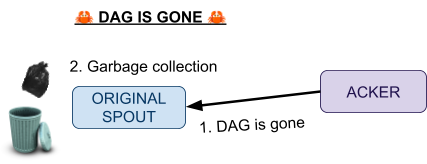

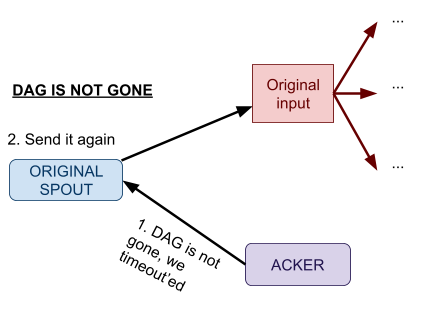

Fault tolerance 137

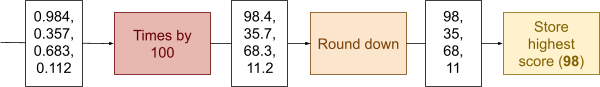

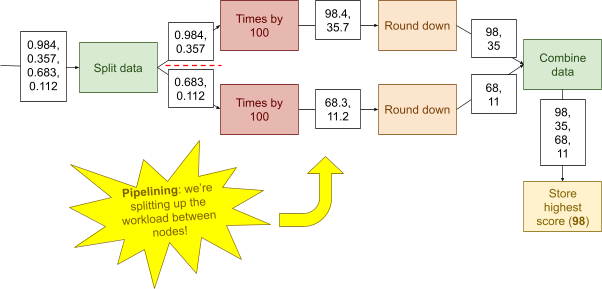

Batch Distributed Processing 139

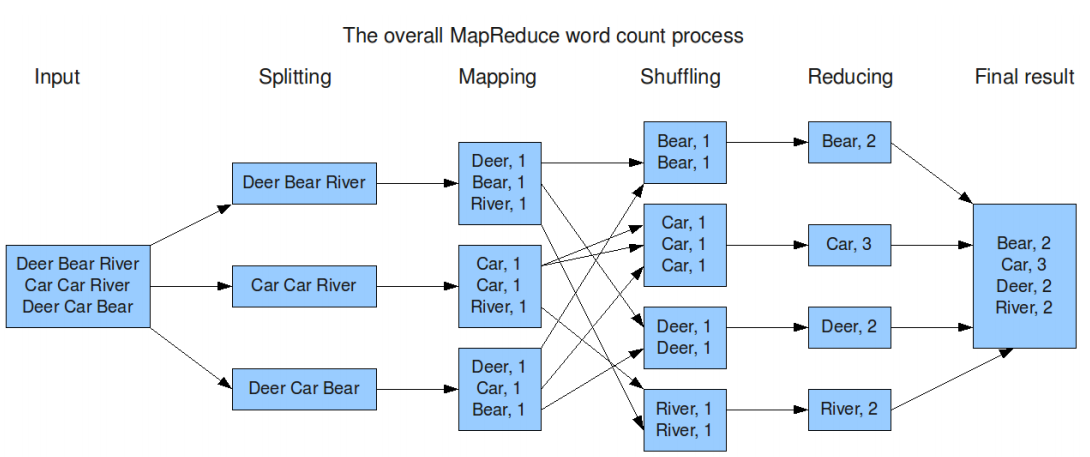

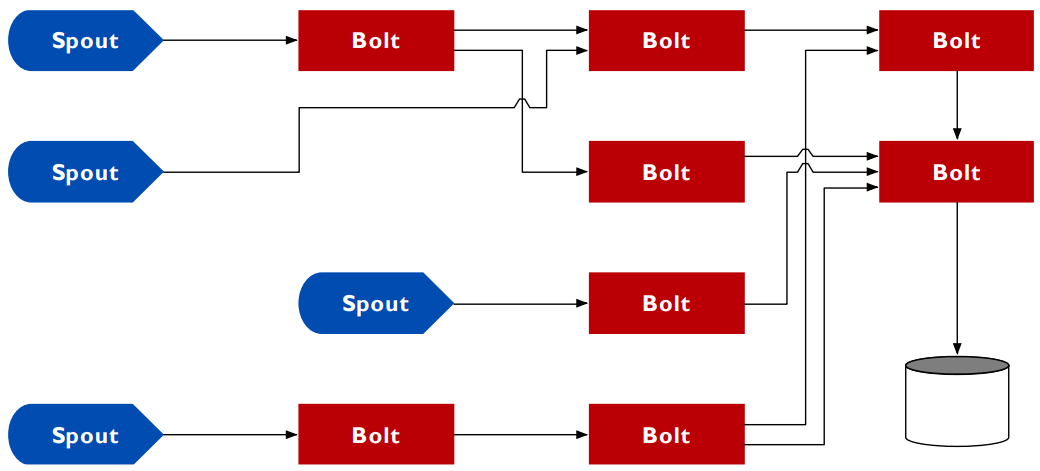

Implementation #1: Google MapReduce 139

Implementation #2: Apache Hadoop 140

TL;DR 142

Kirk’s stuff 143

Tim’s stuff 150

Corina’s stuff 154

Leonardo’s stuff 158

Networking (Kirk)

Physical + Link layer

Functions

-

There are three reference models for networking

protocols:

|

OSI model

|

TCP/IP model

|

Tanenbaum’s book

|

|

Application

|

Application

|

Application

|

|

Presentation

|

Transport

|

Transport

|

|

Session

|

Internet

|

Network

|

|

Transport

|

Link

|

Link

|

|

Network

|

Hardware

|

Physical

|

|

Data Link

|

|

|

|

Physical

|

|

|

-

Here is a sentence to remember the OSI layers, from bottom

up: Please Do Not Throw Salami Pizza Away 🍕❌🗑

-

Tanenbaum’s book is just the TCP/IP model, but they

changed Internet to Network and Hardware to Physical.

-

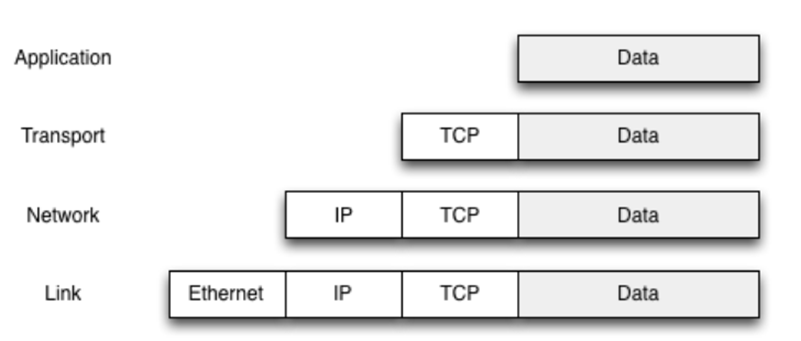

Each layer adds its own header, and it becomes part of the

payload for the layer below it:

-

There’s different types of medium for the physical

layer:

-

Coaxial cable

- Twisted pair

- Power line

- Fibre optic

-

Wireless (laser, sound, ultrasonic, pulses, radar)

-

Bits are transmitted using encoding schemes. Transmission

is based on something varying over time, like voltage or

frequency, with synchronisation.

-

What does the link layer actually do?

-

Transmits frames over physical media

-

Detects and handles transmission errors

-

The link layer doesn’t care about what the packet

says. The link layer only cares about getting the packet

from A to B.

-

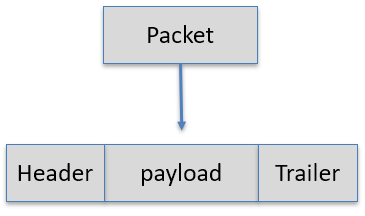

Packet: a unit of data in the Network layer (our payload)

-

Frame: a unit of data in the Link layer (what the link layer

actually sends)

-

A frame contains a packet and wraps a header and a trailer

around the packet.

-

Data frames vary based on the physical layer, for example

there are Ethernet frames and Fibre Channel frames.

-

The partitions of a frame are usually fixed in size (except

for the payload), making it far easier to tell where the

data actually is.

-

Frames are transmitted through hop by hop transmission over a packet-switched network.

-

Too jargon-y for you?

-

Hop by hop transmission: where data is transported through intermediate nodes to

its destination.

-

Packet-switched network: A network where packets dynamically find their own way to

a destination without a set path (look at the pretty

animation from wikipedia)

-

Because of these properties, framing can allow senders to

send packets through a shared medium, like you see above

(all the intermediate nodes are available to all the

coloured packets/frames).

-

You also need to know where the frame starts and the frame

ends.

-

In flow control, we may need to regulate how fast we send

data. We don’t want to swamp a slow receiver with tons of packets!

-

We can either:

-

send messages from the receiver to the sender saying

“wait, let me process this data first” and

alternatively, “alright, now send more data”

a.k.a. feedback-based flow control

-

make the communication rate-based so the speed is agreed

between both parties (rarely used in such a low layer) a.k.a. rate-based flow control

Acknowledgements

-

There are three main link layer models:

-

Connectionless, no acknowledgements

-

Used for low error rate networks (networks where packets

rarely get lost, like Ethernet)

-

Connectionless: no signalling path is established in advance.

-

No acknowledgements: Frames are sent, and may or may not be received by the

destination.

-

Acknowledged, connectionless service

-

Acknowledged: frames are sent, and the recipient notifies the sender

when they’ve received a frame through an acknowledge

(ACK) signal.

-

This is used when connections need the assurance of

delivery, but can’t afford the overhead of connection

management.

-

Acknowledged, connection-oriented service

-

Acknowledgement signals are sent, and a connection is

formally established before communications occur.

-

This is used for long delay, unreliable connections, for

example with satellites.

-

It’s computationally expensive, but it’s

secure.

-

How do we handle ACK signals and errors?

- We use ARQs!

-

ARQ (automatic repeat-request): an error-control mechanism that uses ACKs and timeouts to

ensure reliable communications with an unreliable

recipient.

-

Basically, ARQs are different ways of handling ACKs and

when they screw up.

-

There are three kinds:

|

ARQ protocol name

|

Explanation (not in the slides, so it might not be

examinable, but if you wanna play it safe then

read this anyway)

|

|

Stop-and-wait

|

The sender sends the first frame, then the

recipient sends an ACK signal for that

frame.

The sender sends the second frame, then the

recipient sends an ACK signal for that

frame.

This keeps on going until all the frames have

been sent.

|

|

Go-back-N

|

The sender sends frames #1 to #N to the

recipient, in order, over and over again, even

if an ACK signal doesn’t exist.

The recipient throws away duplicate frames and

frames that aren’t in the right order. The

recipient sends an ACK signal containing the lowest number frame it had missed, let’s say #M where 1 ≤ M <

N.

The sender gets the ACK signal, and now sends

frames #M to

#(M + N) to the recipient. This keeps on going

until all frames are collected.

Protocols like these are called sliding window

protocols.

Example:

Alice is sending frames 1, 2, 3, 4 and 5.

Bob gets frames 1, 2, 3 and 5, but misses 4.

Bob sends an ACK signal to Alice with the number

‘4’.

Alice receives this ACK signal, and is now

sending frames 4, 5, 6, 7 and 8.

Now Bob has frames 1, 2, 3, 4, 5, 6, 7 and 8.

Bob sends an ACK signal with the number

‘9’.

Alice receives this ACK signal again, and is

now sending frames 9, 10, 11, 12 and 13.

|

|

Selective-repeat

|

Go-back-N may send duplicate frames, which is

inefficient on the bandwidth. Selective-repeat

aims to fix this.

The sender sends frames #1 to #N.

The receiver can either:

-

Send an ACK signal to move onto the next

set of frames

-

Send a NAK signal to request a specific

frame

If the sender gets an ACK signal with number M,

then it’ll start sending frames #M to #(M

+ N).

If the sender gets a NAK signal with number M,

then it’ll send only the frame #M to the

recipient.

Example:

Alice sends frames 1 and 2 to Bob.

Bob gets frames 1 and 2, and sends an ACK

signal with number 3 to Alice.

Alice gets this ACK signal and sends frames 3

and 4 to Bob.

Bob misses 3, but gets 4. Bob sends a NAK

signal with number 3 to Alice.

Alice gets this NAK signal, and sends frame #3

to Bob.

Bob gets frame 3 and sends an ACK signal with

number 5 to Alice.

Alice gets this ACK signal and now sends frames

5 and 6 to Bob.

|

-

How do we detect errors in communication?

-

There are a few ways we can do this:

-

Parity bit: a bit that’s either 1 or 0 depending on whether the

number of 1’s is even or odd

-

CRC (Cyclic Redundancy Check): a type of checksum based on polynomial division. The

checksum is stored in a field of the frame, and is

calculated and compared by the sender and the

recipient.

-

Checksums may happen on other layers too. IPv4 has a

checksum, but IPv6 doesn’t.

Frames

-

In frames, how do you know where frames start and where

frames end?

-

There’s many ways to do it. One way is to use a FLAG

byte value to mark the start and the end.

-

If a FLAG byte appears in the payload, escape it with an

ESCAPE byte (similar to escape characters \n, \b, \\ ... in C, C++, Java etc.)

Ethernet CSMA/CD

-

Ethernet was the de facto link layer standard throughout the

90’s, and continues to be today.

-

Originally, it all ran through shared media. In other

words, it was all one wire and everyone had to share. There

came twisted pair with hubs/repeaters to help, but it

didn’t solve the problem.

-

In the end, switches were used (they’re like hubs,

but a little smarter). There is one device per switch port,

and since it’s fully duplex, there’s no

contention.

-

On a shared medium, how is media contention handled?

-

Originally, Ethernet used Carrier Sense Multiple Access with Collision Detection, or CSMA/CD.

-

It works by checking if the media is busy. If it is, then

wait. If it isn’t, then it sends its signals across

it.

-

If a collision occurs (two devices try to use the media at

the same time), they both stop and pick a delay before

trying to talk again.

-

Ethernet doesn’t really use this anymore because

switches are fully duplex, so there’s no

contention.

WiFi CSMA/CA

-

WiFi is the wireless alternative to Ethernet. It works in

the 2.4GHz or 5GHz range. The 2.4GHz range has 14 channels

(13 in Europe). WiFi has evolved over the years.

-

With WiFi, devices are associated with a wireless access

point (AP). Devices can select from one of many service set

identifiers (SSIDs).

-

WiFi is technically a shared medium, so there can be

contention in a busy area. Therefore, you need a collision

avoidance scheme.

-

WiFi can’t send and receive at the same time, so WiFi

uses a slightly different approach called CSMA/Collision Avoidance (CSMA/CA).

-

It’s like CSMA/CD, but instead of listening to the

medium, it waits for an acknowledgement from the AP to

determine if the frame was sent.

-

In other words, instead of dealing with collisions, it

avoids them.

-

It’s very similar to the stop-and-wait ARQ

protocol.

-

Optionally, Request to Send / Clear to Send (RTS/CTS) could be used to improve performance, where a sender

sends an RTS message to ask if it can send frames, and can

only send frames after it receives a CTS message from the

receiver (basically, send an RTS and expect a CTS).

-

/CA is used in wireless connections as it is very difficult

to determine if a collision has occurred over a wireless

network.

Ethernet LANs

Ethernet theory

-

Ethernet is used on campus networks and on home

networks.

-

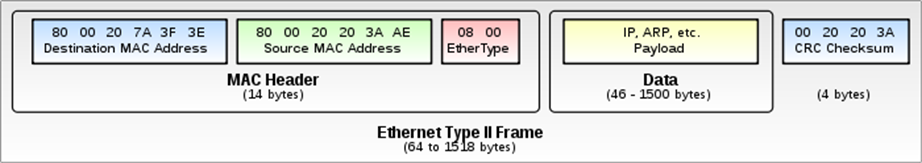

An Ethernet frame includes (remember, this is the link

layer):

-

48-bit source MAC address

-

48-bit target MAC address

-

Optional VLAN ID and frame priority

-

At max, the frame is usually around 1500 bytes. If it gets

any bigger, it’ll be broken down into smaller

frames.

-

MAC (Media Access Control) address: a unique ID for network interface controllers (NICs) at

the link layer.

-

It’s around 48 bits long, but can be extended to 64

bits.

-

They need to be unique, so MAC addresses are split up

into:

-

24 bits for vendor allocations (tells you what vendor this

hardware is from)

-

Last 24 bits assigned by the vendor

-

Ethernet networks are built with each device (also called

hosts) connected to a concentrator device (usually a

switch).

-

Desktops usually have 1 Gbit/s Ethernet, but the servers

are on 10 Gbit/s Ethernet.

-

There are different kinds of concentrator devices:

-

Hubs and repeaters:

- Really old!

-

They just take frames and forward them on to all the

devices available

-

They just extend Ethernet range

-

All hosts see all packets, which is a big no-no for

security.

-

Receives frames and forwards them to the host that needs

them

-

Has ‘smart’ forwarding (they learn which host

needs what packet)

-

Hosts only see the packets sent to them, which is good for

security.

-

Switches are smart, because:

-

they can perform CRC (Cyclic Redundancy Checks) before

forwarding

-

it learns the addresses of the hosts using a MAC table, so

they only send packets to the hosts that need them

-

can support more advanced features, like VLAN or QoS

-

How do switches learn which frames go to which host?

-

Switches match up source MAC addresses to switch

ports.

-

So if a frame with source MAC address 6F-72-61-6F-72-61 is

picked up by port number 2, the switch will remember that.

The next time a frame with that source MAC address comes up,

the switch will send it to port number 2.

-

There is a time-out for pairings like that (~ 60

seconds).

-

If there is no entry in the table the switch has to send

the package to all ports.

ARP

-

To send a frame to a host, you need its network layer

address (IP address) and its link layer address (MAC

address).

-

We can get the IP address from DNS, but how do we get the

MAC address from an IP address?

- We use ARP!

-

ARP (Address Resolution Protocol): a protocol that allows a host to get the MAC address

associated with a given IP address.

-

ARP uses a link layer broadcast message (a message sent to

everyone).

-

The broadcast message asks “Who has this IP

address”?

-

All the hosts see this message, and the one with that IP

address goes “It’s me! My IP address is

so.me.th.ing and my MAC address is wh:at:ev:er”

-

The sender gets this information and can now send the

frame.

-

This information is usually cached for around 60

seconds.

-

In an ARP message, you have:

-

Sender MAC address

-

Sender IP address

-

Target MAC address (zero-padded when we don’t know

what it is)

-

Target IP address

-

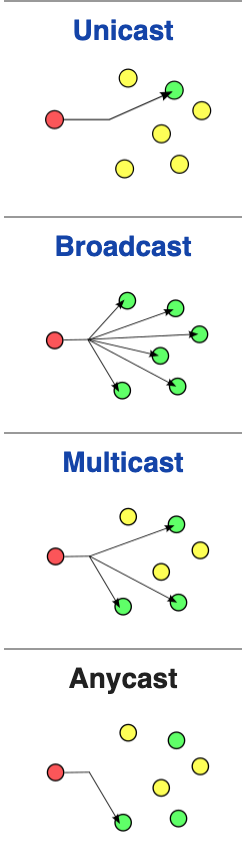

There are 4 kinds of messages:

-

Unicast: from one sender to one receiver. Purely one-to-one.

-

Broadcast: sent to everyone. To broadcast a message, make the MAC

address FF:FF:FF:FF:FF:FF.

-

Multicast: sent to anyone who is interested.

-

Anycast: sent to the nearest receiver; doesn’t matter who,

as long as it gets to someone.

-

Hosts can spoof and steal frames from other hosts

-

Low power devices may ‘sleep’ and not respond

to ARP messages

-

You can send a ‘gratuitous ARP’, which is just

making sure your information is up to date.

-

ARP probe: a use of ARP to make sure no two hosts have the same

IP

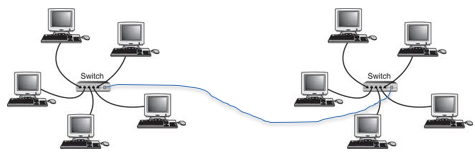

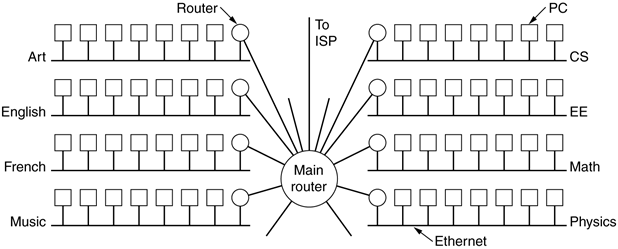

Building Ethernet LANs

-

LANs can’t be too big, or else we’ll have

problems with range and volume of messages (when

broadcasting).

-

Therefore LANs must be kept at a reasonable size, for

example in a campus, there’s typically one LAN per

floor, or per building.

-

When we create an Ethernet LAN with a switch, hosts can

talk to each other directly using their MAC addresses.

-

They’ll also allocate “local” IP

addresses to each host, for example 192.168.0.0 up to

192.168.255.255, as part of an IP address plan.

-

To connect two LANs together, we need to use an IP router,

which forwards IP packets between LANs (remember, routers do

not work with the link layer, only the network layer).

-

Routers can tell other routers the reachability of the IP

address ranges it serves, so the network can learn where to

forward IP packets.

-

Therefore, if a host was sending a packet from one LAN to a

host on another LAN, it will send the packet to its

‘default’ IP router.

-

IP routers act as network layer gateways between individual

link layer Ethernet LANs.

-

A localised network layer LAN behind a router is called an IP subnet.

-

A home network is usually a single IP subnet and Ethernet

LAN.

-

In multi-story buildings, they typically use copper twisted

pair cabling.

-

Ethernet networks may be deployed using:

-

Data riser: vertically aligned switch/server rooms

-

Flood wiring per floor: have faceplates from switch room

-

Ethernet switch stacks: one switch port per faceplate

-

A building may have one LAN, if a router connects the whole

building, or there could be one LAN per floor.

-

In homes, you typically have an ADSL router with multiple

Ethernet ports and 802.11 WiFi. It’s just one LAN,

with no internal routing. Pretty basic.

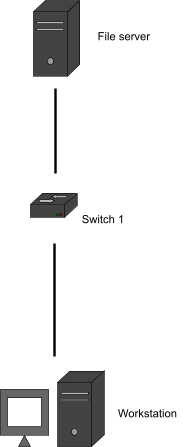

Spanning tree protocol

-

A bridge loop occurs in a network when there are two ways to reach

the same node.

-

They’re bad news because they can cause broadcast radiation, which is where there’s so much traffic on the

network cabling that it severely harms the performance of

the network.

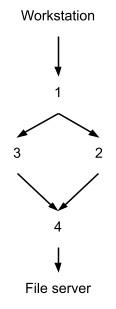

|

No bridge loop

|

Bridge loop

|

|

|

|

-

Why is this so bad?

-

Let’s say the workstation wants to send a frame to

the file server. The switches will pass the frame onto the

neighbouring nodes, right? After all, no frame has been sent

before, there’s no way the switches would know which

frames to send where.

-

The workstation’s frame could end up back at switch 1

through the path 1 -> 2 -> 3 -> 1 or 1 -> 3

-> 2 -> 1. This loop is wasteful and clogs up the

medium.

-

The file server could also end up with two of the same

frame.

-

It’s pretty clear that loops are bad. But they could

also be good, because what would happen if switch 3 dies? We

could still use the switch 2 path to get to the file server.

Whereas, on the left, if switch 1 dies, that’s it. No

more talking to the file server.

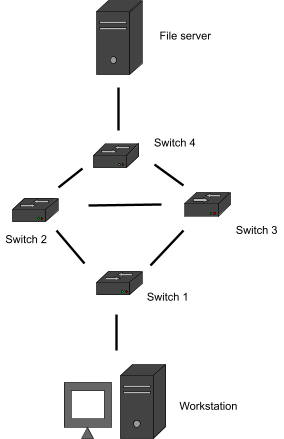

-

Introducing the spanning tree protocol!

-

The spanning tree protocol maps out a set of paths from the

source to the target in a “tree” structure. It

plucks out the least cost path, and disables all the other

switches that are not on that path.

-

The source can then send the packet, and no loops will

occur.

-

However, if a switch on that path fails, the protocol will

pick the next best path, and re-enable and disable the

appropriate switches to follow that path instead.

-

Therefore, we get the best of both worlds: no broadcast

radiation, and if any switches fail, we’re fine.

-

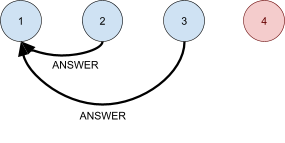

Want an example?

-

So we want to get from the workstation to the file server.

There’s two (sensible) ways of doing this:

-

Normally, we’d pick the smallest, but they’re

both the same length, so let’s go with the switch 3

path.

-

So now, switch 2 is disabled. The workstation sends the

frame.

-

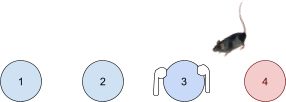

Oh no! A rat chewed on the cabling of switch 3 and made it

explode! What are we going to do?!

-

The protocol will now pick the switch 2 path. So now,

switch 2 is enabled, switch 3 is “disabled”, and

the frame is re-sent through the switch 2 path.

-

It reaches the file server successfully!

VLANs

-

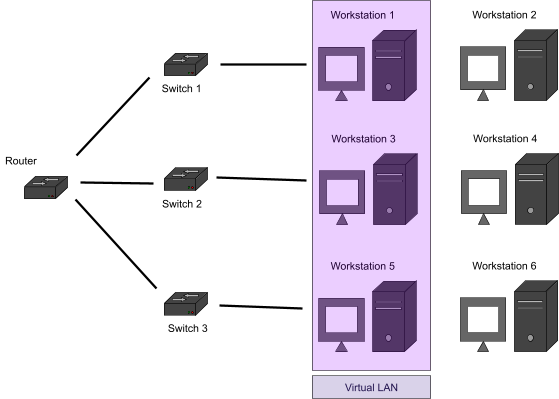

A VLAN, or a Virtual LAN, are broadcast domains that are

partitioned and isolated.

-

A VLAN can consist of multiple hosts, all of different

LANs, but they are made to think that they are all connected

to the same wire.

-

Ethernet frames include an optional VLAN identifier.

It’s 12-bits, so there are 4096 different VLAN

IDs.

-

Because it’s completely logical and not physical,

it’s quite flexible.

-

Here, workstations 1, 3 and 5 think they’re all on

the same LAN, but really it’s a VLAN.

-

This is useful to group together staff that aren’t

physically in the same LAN, for example, workstations 1, 3

and 5 might all be in the marketing department.

Ethernet frame priority

-

How important is a frame?

-

VoIP is pretty important, because you want to know what the

other person is saying as they’re saying it.

-

HTTP might not be as important, because it’s a

document; you don’t need it right away.

-

There are three bits in the 802.1Q tag that determines

priority, from 1 to 7.

-

This only affects prioritisation at switches, not

end-to-end.

Internet/Network layer

-

What does the network layer actually do?

-

The network layer is responsible for establishing a network

between computers so that they can send things to each

other.

-

In more detail, the network layer is responsible for:

-

Connecting networks together to form an

“internetwork”, or “Internet”

-

Each network is served by a router

-

Packets the data by adding an IP header

-

Processes and routes IP datagrams (data units across the

network)

-

Fragmenting packets that are too big

-

Error checking

-

Reassembling fragments if needed

Internet Protocol (IP)

-

IP (or Internet Protocol) is the main protocol used in the network layer.

-

The properties of IP are:

-

Packet-switched, connectionless:

-

IP packets are routed towards destination at each router on

the path

-

Connectionless: packets find their own way to the destination, it

doesn’t have to rely on a fixed path from A to B

-

No guarantee that any IP datagram will be received

-

Best-effort delivery: there is a variable bit rate and latency and packet loss

depending on the current traffic load. Basically, the

routers try their best but won’t guarantee perfect

performance

-

Sending a packet is only based on destination IP

address

-

Routers maintain routing tables to make routing

decisions

-

Globally unique, delegated addresses:

-

Devices must be globally unique + addressable to send

traffic to them

-

Private address space, which are not globally routed, may

be used (because that’s the whole point, since we ran

out of IP addresses)

-

There are 5 main protocols for the network layer:

- IPv4

- IPv6

-

ICMP - used for diagnostics and control

- ICMPv6

-

IPSEC - used for security

-

Store-and-forward packet switching is a technique where data is sent to

an intermediary node and then passed on to either another

intermediary node or the destination node.

-

In this context, routers store the packet and determine where to forward them.

-

IP is a bit unreliable, because routers forward packets on

a ‘best effort’ basis.

-

Packets may get dropped because of congestion. The

transport layer (next layer above) may handle

retransmissions:

-

With TCP, retransmissions are handled

-

With UDP, the application layer has to handle

retransmission

-

Quality of Service may help by prioritising certain

traffic.

-

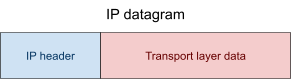

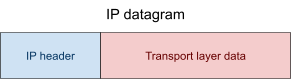

In IPv4, segments of data are taken from the transport

layer and an IP header is attached to the end of it.

-

This creates an IP datagram.

-

An IP header has the following fields:

-

Source IP address

-

Destination IP address

-

IHL - header length (can vary)

-

Identification field and fragment offset

-

Time to live (how long should this packet stay switching

before we give up)

-

IP header checksum

-

MTU (or Maximum Transmission Unit) is the size of the largest data unit that can be

communicated in a single network layer transaction.

-

If we’re sending a packet of 2000 bytes to a router,

and the MTU is 1500 bytes, then the packet has to be

fragmented.

-

Fragmentation happens in the network layer.

-

We should try to avoid fragmentation, because it adds

overhead and security issues.

-

Now, a bit of history of IPv4!

-

People used IPv4 since the 1970’s

-

It uses 32-bit addresses, written as ‘dotted

quads’, like 152.78.64.100.

-

The IPv4 address space is partitioned into 5 different

classes, where 3 classes are used for networks of different

sizes, 1 class is used for multicast groups and 1 class is

reserved:

|

Class

|

Prefix range

|

Prefix length

|

# of networks

|

# addresses per network

|

Examples

|

|

A

|

0.0.0.0 to

127.0.0.0

|

8 bits

|

128 networks

|

~16 million addresses

|

20.xx.xx.xx

102.xx.xx.xx

|

|

B

|

128.0.0.0 to 191.255.0.0.

|

16 bits

|

~16,000 networks

|

~65,000 addresses

|

152.78.xx.xx

160.125.xx.xx

|

|

C

|

192.0.0.0 to 223.255.255.0

|

24 bits

|

~2 million networks

|

256 addresses

|

196.50.40.xx

202.155.4.xx

|

|

D

|

224.0.0.0 to 239.255.255.255

|

n/a

|

n/a

|

n/a

|

n/a (used for multicast groups)

|

|

E

|

240.0.0.0 to 255.255.255.255

|

n/a

|

n/a

|

n/a

|

n/a (reserved)

|

-

Here is a graphical visualisation of the partitioning of

the IPv4 address space:

|

Class A

|

Class B

|

|

|

Class C

|

Class D

|

|

Class E

|

-

Basically, if an IPv4 address is within a certain class, it

must follow two rules:

-

The first 8 bits must be within a certain range

-

It must have a fixed prefix

-

For example, if an IP is within class B, the first 8 bits

must be between 128 and 191, and the first two bytes must be

fixed.

-

Additionally, an IP range of 152.xx.xx.xx cannot be within

class A because the first byte lies outside of A’s

range; it would be B, but the second byte would be part of

the fixed prefix too, so you should write something like

152.78.xx.xx.

-

This is generally very inefficient, e.g. there are not many

networks that require a Class A IP address and thus 16

million different addresses, which means that a lot of space

is wasted. Even 65,000 addresses in class B are too many for

most networks.

-

Since 1995, RIR (Regional Internet Registry) allowed

variable length prefixes, meaning that network addresses

were no longer assigned strictly based on the ABC class

scheme

-

This change reduced address consumption rate, but we still

ran out of addresses back in 2011.

-

Due to the fact that there are still not enough IPv4

addresses for all hosts world-wide, private IP addresses

were introduced

-

More precisely, there exist ranges in the IPv4 address

space that are used as private IP addresses

-

What does private IP address mean? It basically means that

this is an IP address that is not globally unique and thus

cannot be reached from outside the network.

-

In other words, private IP addresses may be used many many

times in different networks world-wide

-

An example for the use of a private IP address is your home

network

-

There exist three different private IP networks with the

following address ranges:

|

Address range

|

Number of addresses

|

Subnet mask

|

|

10.0.0.0 -

10.255.255.255

|

16,777,216

|

8 bits mask

|

|

172.16.0.0 - 172.31.255.255

|

1,048,576

|

12 bits mask

|

|

192.168.0.0 - 192.168.255.255

|

65,536

|

16 bits mask

|

-

For example, your home network may use 192.168.0.0

-

You can further divide the private address space into

subnets as you wish (more on subnets later), so you could

have 192.168.0.0/24, allowing for 255 ip host

addresses

-

There must be a mechanism to translate your private IP

address to a public ip address, which is called NAT (see

below)

-

We can maximise the use of address space:

-

DHCP (or Dynamic Host Configuration Protocol) requires IP addresses to be leased out for small time

periods, preventing inactive hosts from hogging valuable

address space

-

NAT (or Network Address Translation) allows you to use one IPv4 address to refer to an entire

private network, so each device on your network

doesn’t need their own unique IPv4 address

-

Classless Inter-Domain Routing: allows use of any prefix length, not just /8, /16 or

/24

Subnets

-

Remember that a subnet is a localised network behind a router (its default

gateway).

-

The full name is “subnetwork”.

-

There are various addresses concerning IP subnets,

like:

-

The first IP in the range

- e.g. 152.78.70.0

-

How big is the prefix of the range of IPs

-

e.g. 24 bits, so 152.78.70.(0 to 255) is written

152.78.70.0/24

-

Subnet mask (or network mask or netmask)

-

The fixed prefix bits for all hosts in the subnet

-

e.g. 255.255.255.0

-

In full: 11111111 11111111 11111111 00000000

-

Conveys the same information as the prefix length

-

Subnet IP broadcast address

-

The IP address to use when you want to broadcast to the

whole network

-

The last IP in the range

-

e.g. 152.78.70.255

-

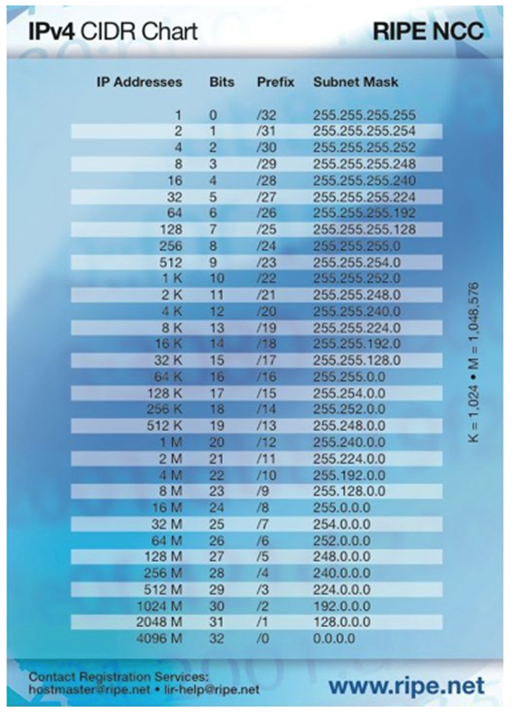

Here’s a picture of all possible subnet masks you can

use in IPv4:

-

So you have a subnet and a bunch of addresses you can

use...

-

... but not all of them can be used for hosts.

-

Three are reserved for:

-

The subnet network address (e.g. 152.78.70.0)

-

The subnet broadcast address (e.g. 152.78.70.255)

-

The address of the router, also called gateway (any other address within the subnet range, but

conventionally either 152.78.70.1 or 152.78.70.254)

-

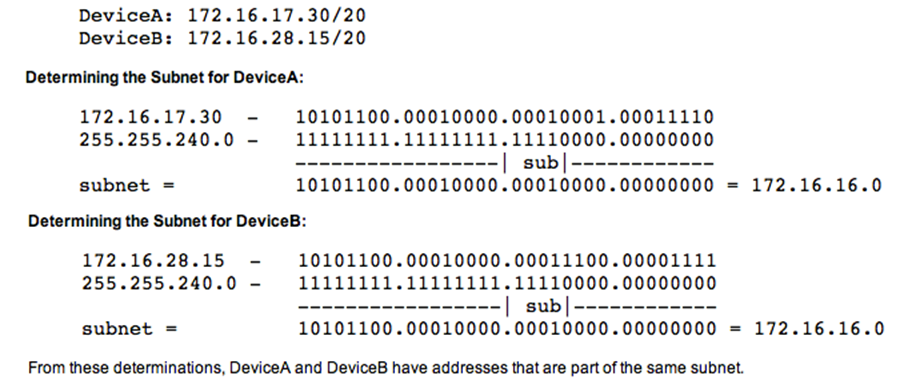

How do you determine a subnet (network) address?

-

Take the IP address and subnet mask (derived from the

prefix length), and binary AND them to get the subnet

network address.

-

How do you determine a subnet broadcast address?

-

Take the IP address and wildcard mask, that is the inverted

binary subnet mask, and binary OR them to get the subnet

broadcast address

-

Determining the broadcast address for a device with IP

address 172.16.17.30 and subnet mask 255.255.240.0 (/20),

which is the wildcard mask 0.0.15.255:

|

172.16.17.30

|

10101100.00010000.00010001.00011110

|

|

|

0.0.15.255

|

00000000.00000000.00001111.11111111

|

|

|

Broadcast =

|

10101100.00010000.00011111.11111111

|

= 172.16.31.255

|

-

ARP can also be used to find the Ethernet (MAC) address of

the default router (gateway) of the network (if the

destination IP is not in the same subnet).

-

This is why being able to use the subnet mask to determine

the subnet address is important.

-

What happens if you screw up the subnet mask (e.g. if the

network admin misconfigured the value on the DHCP

server)?

-

If it’s too short (it is /23 when it should be

/24)

-

Host may think a remote host is local, so it’ll use

ARP locally

-

It won’t get a response

-

If it’s too long (it is /25 when it should be

/24)

-

Host may think a local host is remote, so it’ll send

it to the router

-

The router may end up redirecting the packet

Calculating subnets

-

Here is a concrete example of how to calculate subnets - you could find

a question like this in the exam:

-

You have been tasked with assigning IPv4 address ranges to

six subnets in a new building and specifying the routing

tables. You have been allocated 152.78.64.0/22 to carry out

this task. How would you allocate this block of addresses to

best use the available space to cater for …? In your

answer you should include the network address, the broadcast

address and prefix length of each subnet!

-

A computing lab with 260 computers

-

First of all, let’s determine the subnet size we

need. We want to find the smallest power of two that exceeds

the host count by at least three. Why three? Because one is

the network address, one is the broadcast address and one is

reserved for the gateway (default router). Why the smallest?

Because we do not want to waste any addresses by making our

subnet too large.

-

In this case, it is 9 because 2^9 = 512 and 512 - 3 >= 260.

-

So for this subnet, we will need the last 9 bits for the

hosts, thus the first 32 - 9 = 23 bits are used for the

network and stay fixed (Why did I use 32? Well, the whole IP

address has 32 bits). Our subnet will be a /23 subnet.

-

Next, determine the network address for this subnet. The

network address is always the lowest address. Since this is

the first subnet, we are going to start with 152.78.64.0,

which is given in the question.

-

Finally, we need to determine the broadcast address. Our

network address is 152.78.64.0 and our subnet mask is /23.

The broadcast address is always the highest address.

Therefore we need to copy the first 23 bits and leave them

unchanged, and take the last 9 bits and set them to 1.

-

After you do this you will get 152.78.65.255, which is our

broadcast address.

-

So all in all our subnet is 152.78.64.0/23 with the

broadcast address 152.78.65.255

-

An electronics lab subnet with 200 computers

-

Again, we will determine the subnet size first. This time

it is 8, because 2^8 = 256 and 256 - 3 >= 200. Therefore

our subnet will be a 32 - 8 → /24 subnet

-

To determine the network address, take the broadcast

address of the last subnet and add 1. Why 1? Because we

don’t want to leave any gaps and thus waste addresses

in our allocation. 152.78.65.255 + 1 → 152.78.66.0 (by doing this, we ensure that this subnet begins

immediately after the last one ends) Attention: This only works if the current subnet is of the same size

or smaller than the last one. Otherwise, you might need to

leave a gap. You can check if you need to leave a gap by

checking whether the network address is the lowest one in

the range. If it isn’t, you must leave a gap and skip

to the next possible network address.

-

To determine the broadcast address, take the first 24 bits

of the network address and set the last 8 bits to 1.

Luckily, the first 24 bits are exactly the first 3 groups.

Now we get 152.78.66.255.

-

All in all, our subnet is 152.78.66.0/24 with the broadcast

address 152.78.66.255.

-

An office subnet with 110 computers

-

Subnet size: 2^7 = 128, 128 - 3 >= 110 → /25

-

Network address: 152.78.66.255 + 1 →

152.78.67.0/25

-

Broadcast address: 152.78.67.127

-

Also, an alternative way to calculate the broadcast address

if you already have the network address is to just add the

subnet size - 1 to the network address. In this case the

subnet size is 128 addresses, so we will add 127. Take

152.78.67.0 + 127 → 152.78.67.127 and you are done. Attention: this only works if you add to the network address, the

other procedure works with every address within the network!

This technique is especially helpful if the subnet size is 8

or smaller.

-

A server subnet with 60 servers

-

Subnet size: 2^6 = 64, 64 - 3 >= 60 → /26

-

Network address: 152.78.67.127 + 1 →

152.78.67.128/26

-

Broadcast address: 152.78.67.128 + 63 →

152.78.67.191

-

A DMZ subnet with 25 servers

-

Subnet size: 2^5 = 32, 32 - 3 >= 25 → /27

-

Network address: 152.78.67.191 + 1 →

152.78.67.192/27

-

Broadcast address: 152.78.67.192 + 31 →

152.78.67.223

-

An infrastructure subnet with 17 devices

-

Subnet size: 2^5 = 32, 32 - 3 >= 17 → /27

-

Network address: 152.78.67.223 + 1 →

152.78.67.224/27

-

Broadcast address: 152.78.67.224 + 31 →

152.78.67.255

-

We are done. As a last cross-check we can verify whether

our allocation fits within the range we were initially

given. Wait, you don’t need to scroll up, it was

152.78.64.0/22.

-

Let’s determine the broadcast address. Be careful,

because it might be the case that the address given is not

the network address (i.e. the lowest address) of this range,

so you may not be able to just use the trick I previously

used and add 1023 to this address!

-

To determine the network address, take the first 22 bits

and leave them unchanged. Set the remaining 10 bits to 0.

You will get 152.78.64.0, so as it turns out, the address

given was indeed the network address (which is the lowest

address).

-

To determine the broadcast address, either set the last 10

bits to 1 or just add 1023 to the network address. You will

get 152.78.67.255, which is exactly the broadcast address of

our last subnet.

-

So to conclude, our allocation fits perfectly in the range

that we were initially given.

ICMP

-

ICMP (or Internet Control Message Protocol) is a supporting protocol for sending status messages

between hosts instead of actual data.

-

If you ping a host, that’s ICMP.

-

ICMP can be used to send error messages or other

operational information.

-

ICMP can also be used for routers to tell other routers

about themselves to fill out their routing tables, called router advertisement.

IP routing protocols

-

When a host sends a packet, it will send it:

-

directly to the destination, if it’s on the same

local subnet

-

to the router, if the destination is not on the local

subnet

-

Hosts have no idea what goes on outside their subnet; they

just send to the router and let that take care of the

rest.

-

The router will participate in a site routing protocol, and

the site routes to the Internet using a policy-rich routing

protocol called Border Gateway Protocol (BGP).

-

To determine whether to deliver locally or forward to a

router, a host maintains a small routing table, with a list

of known networks and how to reach them (can be built from

information learnt by DHCP or IPv6 RAs)

-

The table includes a local subnet in which the host

resides, and a ‘default’ route to send a packet

if the destination is not in the routing table (the

‘default’ route is usually to the router /

default gateway)

-

But when a packet gets to the router, what do we do

then?

-

The router needs to try to forward the packet to the

destination’s router.

-

This is where we use routing protocols!

-

So, how do we solve this problem?

-

We configure the routes manually.

-

It works, but if the topology changes or network faults

occur, we have problems

-

We use routing protocols to establish a route on-the-fly,

such as:

-

Routing Information Protocol (RIP) - distance vector algorithm

-

Open Shortest Path First (OSPF) - link state algorithm

-

IS-IS - link state algorithm

Aggregating prefixes

-

You’d think all routers would need to have access to

all other routers on the Internet to communicate,

right?

-

No need; aggregated prefixes exist!

-

Instead of routing to 152.78.70.0/24 exactly, you can route

to 152.78.0.0/16, and through there the packet can find its

way into the smaller subnets, like 152.78.240.0/20,

152.78.255.128/25, and any subnet with address

152.78.??.??/x where x is greater than 16.

-

Let’s look at an example:

-

Here, R1 serves 152.78.2.0/24 and R7 serves

152.78.64.0/23.

-

Instead of sending directly to R1, we can send our packet

to the border router, which serves 152.78.0.0/16, which

makes it an “umbrella” router for all addresses

152.78.0.0 to 152.78.255.255.

Interior routing protocols

-

Routing is all about routers maintaining routing tables and

looking up destination addresses to know where to send off

the packet to.

-

The routing table is, fundamentally, a table with

destination IP prefixes and the interface or next hop to

use.

-

So how do routers fill in their routing tables? Routing

protocols! They allow routers to build or exchange routing

information.

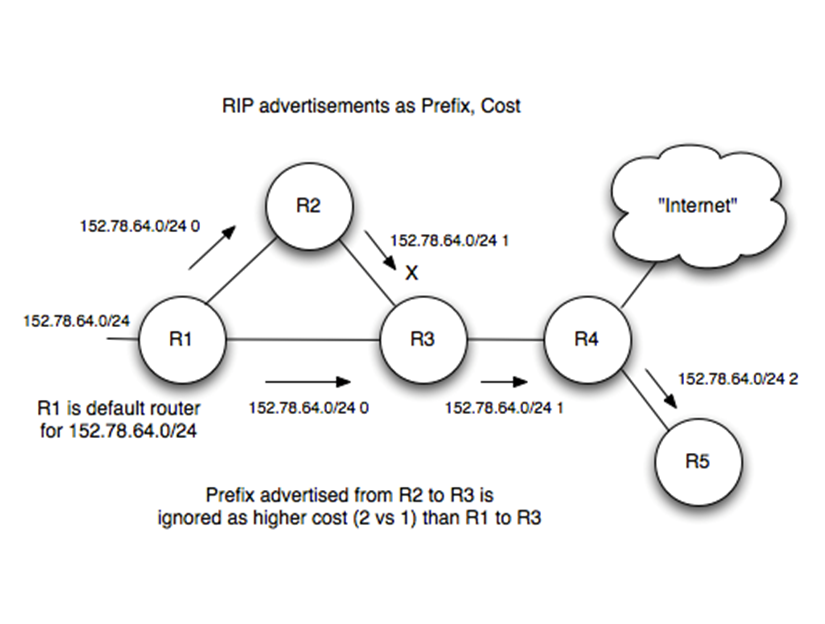

Distance Vector

-

With the distance-vector routing protocol, we determine the

best route from one router to the next by distance, the

distance being the number of routers (or hops) from the

source to the destination.

-

It does this by only talking to the directly neighbouring

routers in a site, and uses an algorithm such as the

Bellman-Ford algorithm to get the shortest distance.

-

An application of this would be the Routing Information Protocol (RIP).

-

RIP is just an application of the distance-vector protocol,

but with a few extra conditions.

-

Each router maintains a RIP routing table, storing:

-

Destination network

-

Cost (hop count); 0 if network is directly connected

-

Next hop to use for best path

-

If a router receives an update with a lower cost path to a

destination network, it updates its entry for that

destination

-

If a router receives a higher cost path later for the same

destination from the same neighbour, the higher value is

used because topologies can change and the lower cost route

might not be valid anymore

-

This is all great, but RIP isn’t perfect:

-

Updates are only sent every 30 seconds, so things take

time. Plus, updates aren’t acknowledged (UDP) so there

could be message loss

-

Metrics are simple hop count values. What if you wanted to

weight certain links? Plus, the maximum value is 15. A value

of 16 means unreachable.

-

Routers don’t have knowledge of network topology, so

this can lead to the ‘count to infinity’ problem

(basically, a loop)

Link state

-

With the link-state routing protocol, we talk to all

routers and establish full knowledge of the site

-

Routers flood information messages describing their

connected neighbours around the entire site

-

An application of this would be IS-IS (Intermediate System

to Intermediate System) or OSPF (Open Shortest Path

First).

-

The steps are as follows:

-

Discover neighbours

-

Determine cost metric to each neighbour

-

Construct link-state information packet

-

Flood this message to all site routers in the same

area

-

Use messages to build topology, and then compute shortest

paths for prefixes served by any given router

-

The cost is usually based on bandwidth/delay

-

The structure of a link state packet is as follows:

-

Source ID to uniquely identify the node

-

Sequence to allow receiver to determine if message is a new

one to process and flood, or one to discard (sender

increments sequence number with each message)

-

Age (decremented once per second, prevents old messages

persisting in the network)

-

All messages are acknowledged to senders

-

Because we know the whole topology, we don’t need to

use Bellman-Ford or anything. We can just use

Dijkstra’s, which is faster.

Exterior routing protocols

-

Autonomous system (AS): a network or a collection of networks that are all

managed and supervised by a single entity or organisation,

typically an ISP (Internet Service Provider).

-

An interior routing protocol is for exchanging routing

information between gateways (routers) within an autonomous

system.

-

In contrast, exterior routing protocols are for exchanging

routing information between autonomous systems.

-

The de facto exterior routing protocol is Border Gateway Protocol (BGP).

-

In BGP, each ISP has a unique AS Number (ASN), assigned by

RIRs (Regional Internet Registry) -they’re 32 bits

long

-

It’s like distance-vector, but BGP includes many

improvements and also incorporates the AS path associated

with using a given route, the costs of the paths and many

other richer attributes.

-

BGP neighbours, called BGP peers, are identified and create

TCP sessions over port 179

-

They send their whole routing tables to each other, and

send incremental updates when something changes

-

Then, the routers advertise routes they know to their

neighbours, containing network prefix, prefix length, AS

path, next hop etc.

-

Neighbours can then use their knowledge of ASNs to choose

whether to use that route or not

-

F needs to get to D, but how? To get to D, F will have to

go through one of its neighbours. But which neighbour should

F choose?

-

To choose, F asks all its neighbours how they would get to

D. F will then pick the neighbour with the shortest

distance.

-

F will obviously not pick I or E, because their paths

contain F itself.

-

F will likely pick either B or G, making the path from F to

D either FBCD or FGCD.

Transport layer

-

The transport layer handles how the information is going to

be transported from the source to the destination.

-

There are two protocols that the transport layer can use:

UDP and TCP

UDP

-

User Datagram Protocol (or UDP) is a connectionless protocol on a ‘fire and forget’ basis.

-

Packets are sent to their destination with no

acknowledgements. They just sort of throw packets towards

the destination and hope for the best.

-

The properties of UDP are:

-

No need to set up a connection with the recipient

-

Application layer has to retransmit, if required:

-

If the recipient doesn’t get the data, the

application layer is responsible for resending

-

UDP applications use a constant bit rate:

-

No need to control the bit rate as the packets all come in

at once anyway

-

The application should adapt if necessary

-

No connection management is used, so we use less

bandwidth

-

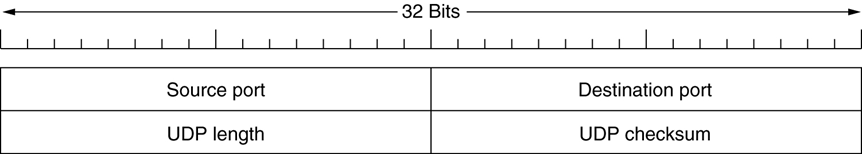

The UDP header is simpler than TCP’s header

-

The checksum is optional

-

If buffers are full, packets may be dropped. The

application can detect this and tell the server.

TCP

-

Transmission Control Protocol (or TCP) is a connection oriented protocol.

-

It includes acknowledgements and retransmissions.

-

TCP provides performance and reliability on an otherwise

unreliable IP service, at the expense of an overhead.

-

The properties of TCP are:

-

Provides connection management:

-

A connection is established and managed

-

Slows down / speeds up when needed

-

Uses that capacity but tries also to avoid congestion:

-

Backs off when there’s packet loss (indicator of

congestion)

-

Sends segments again if they were unacknowledged

-

Receiver reassembles segments in the correct order

-

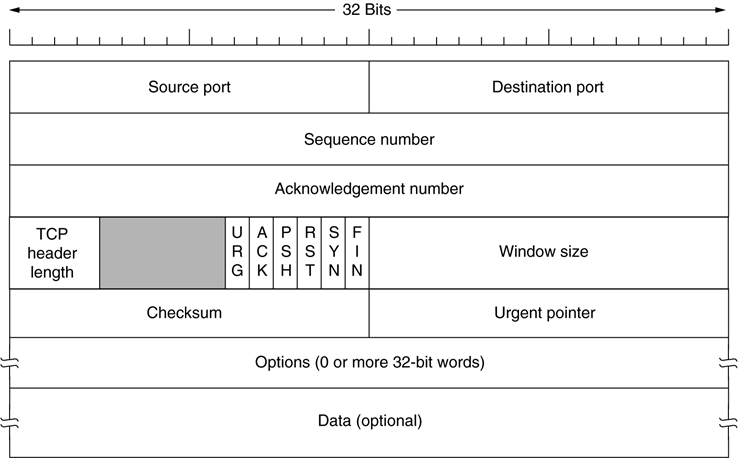

TCP headers are padded so that they’re a multiple of

32 bits.

-

Seq and Ack numbers used to track sequential packets, with

SYN bit:

-

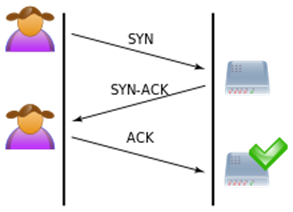

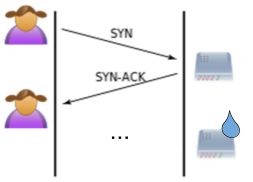

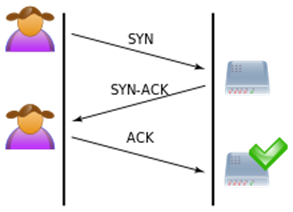

TCP connection establishment uses a three-way handshake

of:

-

SYN: “Can

I talk to you please?”

-

SYN-ACK: “You

may talk to me.”

-

ACK: “We

are now talking.”

-

Each side uses a sequence number, so both parties have a

common understanding of the position in the data

stream

-

The sender must detect lost packets either:

-

by retransmission timeout:

-

Estimate when ACK is expected

-

by cumulative acknowledgement (DupAcks):

-

A type of acknowledgement that acknowledges all past data

chunks

-

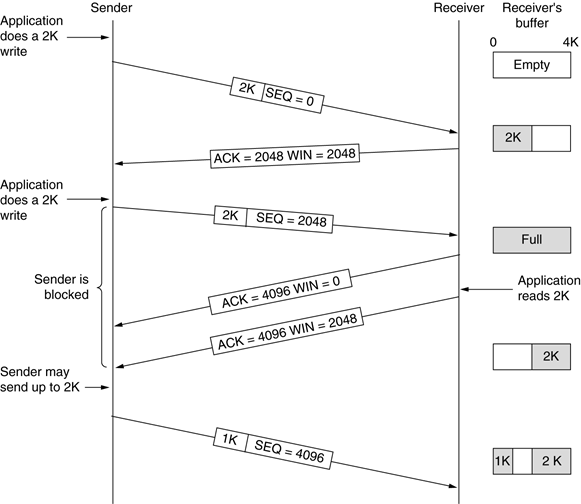

TCP uses a sliding window protocol, like go-back-N or

selective-repeat back in the link layer, to perform flow

control.

-

Basically, the window is the same size as the

recipient’s buffer space. That way, the sender

can’t send any more than the recipient can

handle.

-

Once the recipient says that it can handle more, the window

is slid and further packets will be sent.

-

The sender sends a segment with a sequence number and

starts a timer.

-

The receiver replies with an acknowledgment number showing

the next sequence number it expects and its available

buffer/window size.

-

If the receiver doesn’t send anything by the time the

timer goes off, the sender resends.

-

If the receiver says the window size is 0, the sender may

send a 1-byte probe to ask for the window size again.

-

Here is an example, with dialogue to show you what’s

going on:

-

LEFT: “This is packet 0 (#1), it’s 2K in

size”

-

RIGHT: “Nice; I want packet 2048 (#2), and I have 2048

bytes of space left”

-

LEFT: “Here’s packet 2048 (#2), it’s 2K in

size”

-

RIGHT: “Great; I would want packet 4096 (#3) now, but

I’m full (I have 0 bytes of space)”

-

RIGHT: “OK, I want packet 4096 (#3) now, and I have 2048

bytes of space”

-

LEFT: “Alright, here’s packet 4096 (#3), it’s

1K in size”

-

If the connection started to get congested, how does TCP

control this?

-

TCP actually has two windows: a “recipient

buffer” window and a congestion window.

-

The recipient buffer window is defined just above;

it’s how much the receiver can take in before it gets

full.

-

The congestion window does its best to prevent congestion

in the connection (too much data flying through means more

packet loss)

-

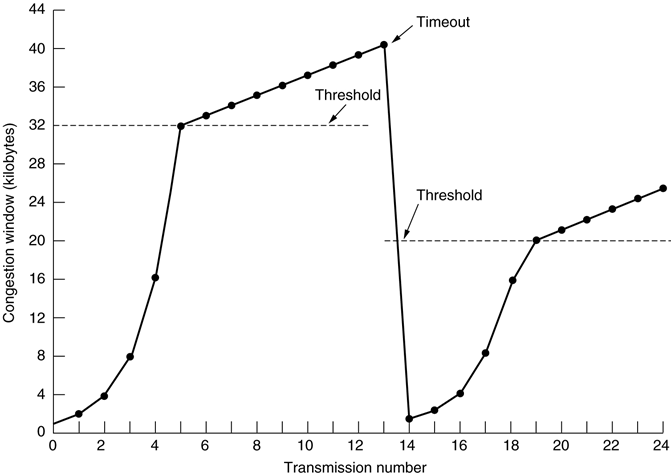

A congestion window starts off small, and grows if

everything is going alright. It resets if there is a

timeout.

-

The window size doubles each time, until a threshold is

reached, at which point it’ll increase linearly,

called Congestion Avoidance.

-

After a timeout, the threshold decreases a bit.

-

So which window do we use; the recipient buffer window or

the congestion window?

-

We use whichever one is smallest.

-

Here is a graph showing the size of the window changing

with each transmission:

TCP or UDP?

-

Streaming services and voice over IP use UDP because

it’s immediate and has limited buffering.

-

Web services like HTTP and SMTP use TCP because having a

structured connection is more important.

-

DNS uses UDP because the overhead for TCP would be too much

for processing 100 to 1000 requests per second.

-

Here are the differences between TCP and UDP:

|

|

TCP

|

UDP

|

|

Connection

|

Connection oriented

|

Connectionless: “fire and forget”

|

|

Reliability

|

Handles ACK & retransmissions

|

Application needs to handle ACK &

retransmissions if needed

|

|

Data Order

|

Guaranteed that it arrives and in the correct

order

|

No guarantee that data is received in the order

sent

|

|

Header

|

20-bytes minimum

|

8-bytes

|

|

Good for

|

Applications that need high reliability

|

Applications that need fast and efficient

transmission

|

|

Example protocols

|

HTTP(S), FTP, SMTP, Telnet, SSH

|

DHCP, TFTP, SNMP, RIP, RTP, COAP

|

-

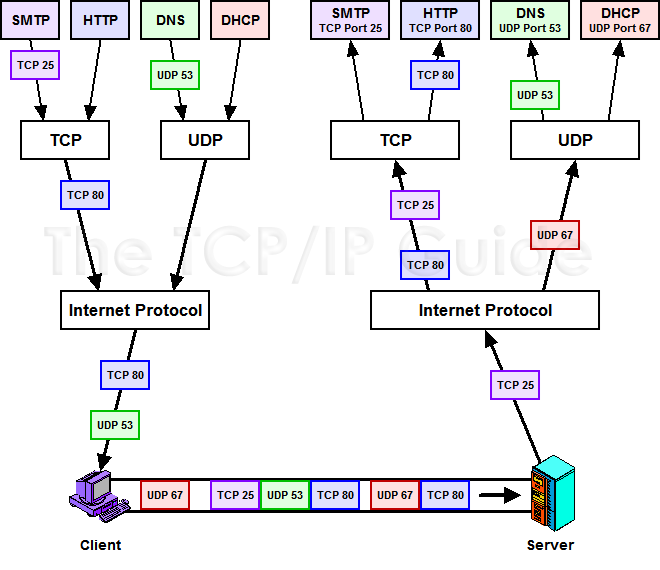

Here is a graphic showing the layers and what protocols

they use:

Sockets

-

A socket is created when a sender and a receiver act as

communication endpoints.

-

A socket has an IP address and a port number, for example

152.78.70.1 port 80

-

The socket and bind protocol uniquely identify the application’s subsequent

data transmission.

-

When multiple clients talk to one server, there is usually

one thread per client endpoint.

-

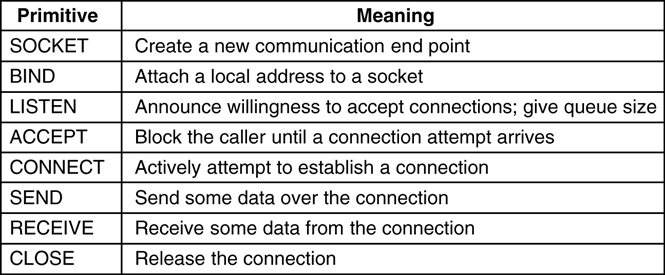

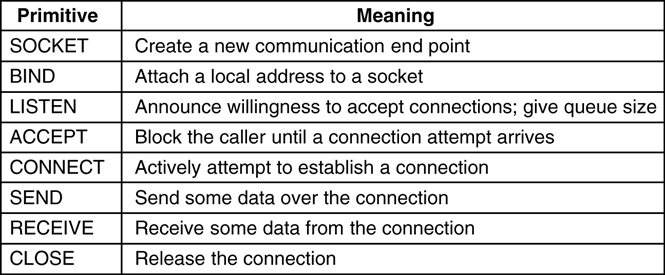

There is a sockets API, called the Berkeley sockets API.

Servers mainly use SOCKET and BIND, whereas clients tend to

use SOCKET and CONNECT:

DNS

-

First of all, what is a DNS?

-

The Domain Name System (or DNS) is a naming system for pairing up domain names to IP

addresses.

-

For example, when you search up “google.com”,

you’re actually talking to an IP, such as

“216.58.213.110”, and the DNS translates

“google.com” to that IP for you.

-

DNS names are delegated through the Top Level Domain (TLD)

registrars

-

For example, with soton.ac.uk:

-

first the “.uk” bit is delegated by

Nominet

-

then the “.ac.uk” bit is through Jisc

-

then finally, the whole thing “.soton.ac.uk”

comes down to the University of Southampton

-

It allows sites and organisations to maintain authority

over their registered domains.

-

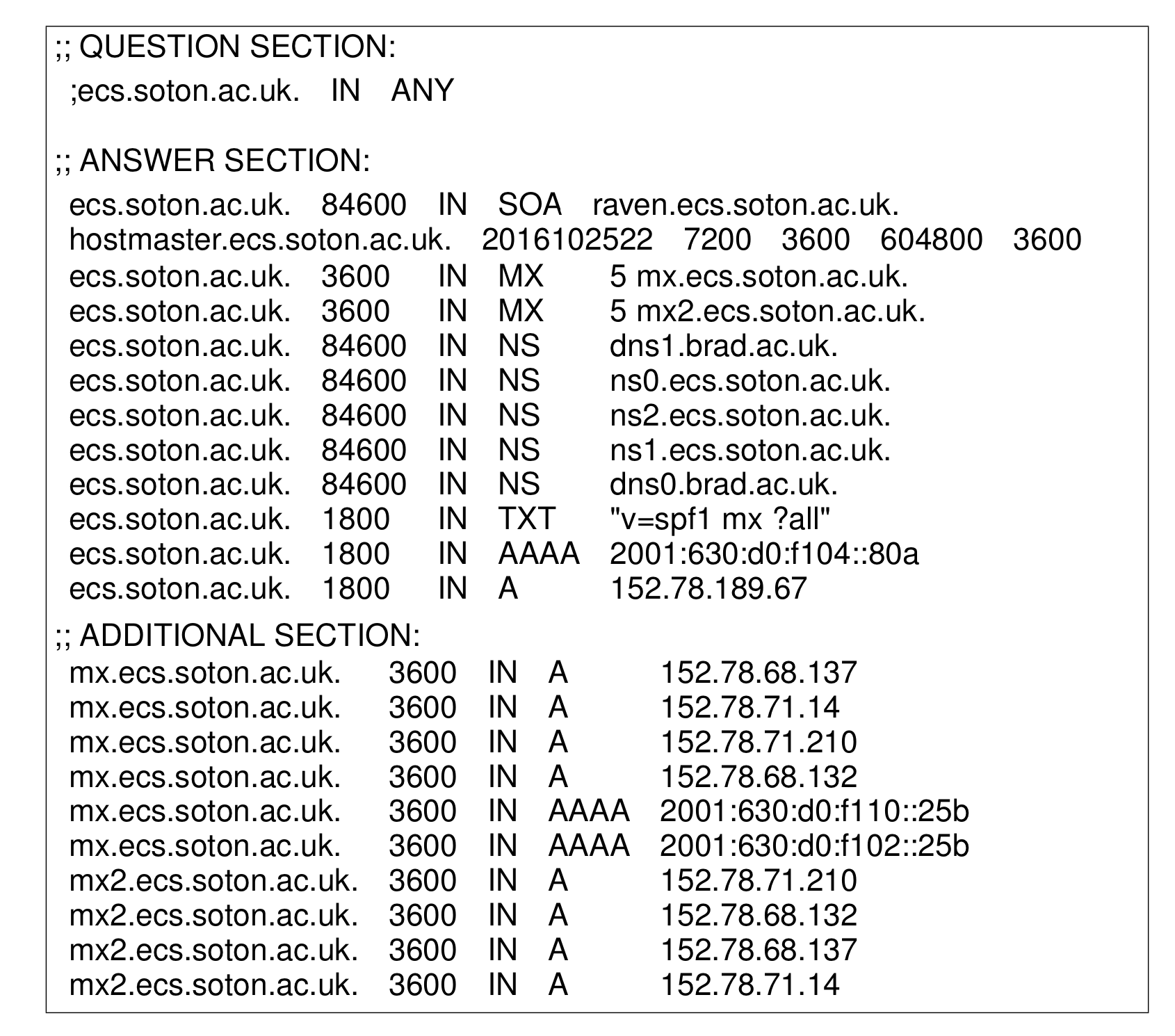

The most common DNS lookup is host name to IP.

-

The DNS returns an A record for IPv4 or AAAA record for

IPv6 when queried for a specific host/domain name.

-

This means clients need to know the IP addresses of local

DNS servers (resolvers) so they can actually send the

queries.

-

A DNS resolver is a server that acts as a DNS server that resolves

queries and sends an answer back.

-

Client hosts are configured to use one or more local DNS

resolvers. They’re usually provided via DHCP.

-

Clients can use APIs to do DNS lookups. You can even do it

via a CLI tool.

-

Packets sent to DNS resolvers are usually UDP packets, but

they can use TCP.

-

They use UDP because it’s connectionless and

quick.

-

The results of queries can be cached.

-

Here is a list of DNS record types:

-

Campuses will run their own DNS servers, so they can:

-

act as resolvers for internally sourced DNS queries

-

act as servers for external queries against internal

names

-

Some DNS infrastructures also may provide a ‘split

DNS’ view, which is where separate DNS servers are

provided for internal and external networks as a means of

security and privacy management.

-

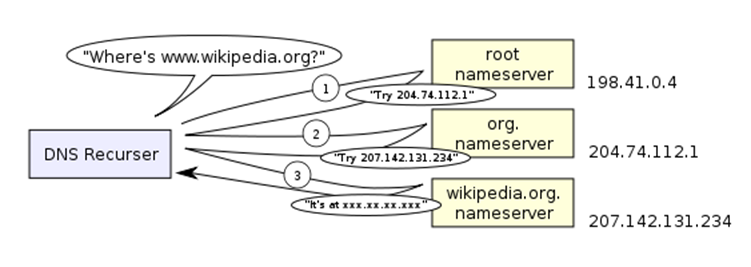

If DNS servers are local, then how does a client talk to a

server from across the world?

-

We leverage the hierarchical delegation structure! What?

That doesn’t make sense?

-

Basically, we have a set of “root” DNS servers

that can point us to other DNS servers that know about

different URL suffixes.

-

For example, if we were looking for “www.google.co.uk”, the root DNS could point us to another DNS server

and say “go to them, they know all about URLs ending

in uk”

-

Then that DNS server could point us to another DNS server

and say “go to them, they know all about URLs ending

in co.uk”

-

... and so on until we reach a DNS that knows the IP

address for www.google.co.uk.

-

Mapping one DNS to another DNS is known as DNS glue.

-

So in other words, to get your IP from your host name, your

browser goes on one big adventure, going from DNS to DNS,

looking for the IP.

-

This can also be cached, so we don’t hit DNS servers

heavily.

-

DNS resolvers are critical to Internet

infrastructure.

-

Because of this, people like to attack them.

-

Therefore, we need to make them resilient and strong.

-

Root servers are the ‘anchor’ for recursive

DNS’.

-

If someone managed to shut down all the root servers, they

could shut down the Internet.

-

There are 13 highly available clusters of root servers.

There are about 1000 servers in total used in these

clusters, and a server is picked in a cluster via

anycast.

-

This makes DNS servers more distributed and allows clients

to use the most local server, improving performance.

-

It also helps spread the load, and mitigate DoS

attacks.

-

IP anycast is where the same IP is configured in many locations,

and your network picks the closest one to connect to.

-

This is especially applied to DNS servers, as picking the

closest DNS server means you get better performance.

-

It also means you don’t have to reconfigure your

network when you’re in a different place; because of

anycast, your network just picks the closest DNS server

anyway.

-

Fast flux DNS is a technique where the association between an IP

address and a domain name is changed very frequently.

-

This is used in some peer-to-peer systems, but it can also

be used by botnets (the bad guys) to avoid detection and

make takedown harder (so they don’t get caught by the

good guys)

-

Public DNS servers are open public servers. They also answer queries for

domains, recursively. An example is the Google public DNS

server.

-

Why use them?

-

Speed improvements

- Reliability

-

Parental controls

-

Phishing protection

- Security

-

Access geoblocked content

-

Bypass web censorship

-

However, you don’t know who’s logging your

queries (and with a company like Google, can you really

trust them?)

Application layer

-

The application layer provides higher level services.

-

In the TCP/IP layer, it’s just called

“Application”, but in the OSI Model layers, the

application layer is split up into

“Application”, “Presentation” and

“Session”.

Telnet

-

Telnet: Simple unencrypted terminal emulation protocol

-

In other words, it provides a bidirectional text-based

communication service using your terminal, like a Unix / DOS

chatroom!

-

It was big in the 90’s, but nobody really uses it

anymore because

-

It’s old and computer normies non-techies don’t like using terminals

-

It doesn’t even have security, it’s

unencrypted

-

Today, it’s mainly used as a plot device in films

(like Die Hard 4) and watching the original Star Wars in

ASCII (the server is towel.blinkenlights.nl) .

Email

-

There are two main protocols for emailing: SMTP and

IMAP.

SMTP

-

SMTP (Simple Mail Transfer Protocol): a protocol used to send emails.

-

It uses TCP to connect to a mail server and uses the

commands MAIL, RCPT and DATA.

-

Authentication is an extension; you don’t need it,

but you should have it by now. Original emails didn’t

have it.

-

You can use SMTP quite easily in modern languages, like

Python and PHP.

-

How does SMTP work? Well, if you’ve been reading my

notes, you know I prefer to go in detail about stuff like

this (within reason).

-

If this illustration isn’t enough, here’s some

dialogue, where Alice is the sender and Bob is the

receiver:

-

Alice: “Hey, do you want to start a TCP

connection?”

-

Bob: “Yes. Let’s talk in TCP.” (220

message)

-

Alice: “Alright, let’s begin. Hello! Tell me what

you can do.” (EHLO extended hello message)

-

Bob: “OK. Here’s what I can do: (a list of things

Bob can do)” (250 message with list of supported SMTP

extensions)

-

Alice and Bob start performing mail transactions (you

don’t need to know these)

-

Alice: “I’m done with you. Let’s stop

talking.”

-

Bob: “OK. Goodbye.” Bob stops listening to Alice

(221 message, Bob closes transmission channel)

-

Alice receives Bob’s goodbye and also stops listening

to Bob.

IMAP

-

IMAP (Internet Message Access Protocol): a protocol used to retrieve emails.

-

It was designed in 1986 and listens on port 142 (993 for

the secure version)

-

It keeps a TCP connection open to send requests or receive

notifications.

HTTP

-

HTTP (HyperText Transfer Protocol): a text-based protocol that uses TCP and uses port

80.

-

There are two steps to using HTTP:

-

Send a request (e.g. GET /something/page.html HTTP/1.1)

-

Receive a response message (e.g. HTTP/1.1 200 OK)

-

A typical response might contain things such as:

-

Version of HTTP

-

HTTP status code

- Date

-

Content type (text, html, xml etc.)

- Server type

-

The main types of HTTP requests are:

-

GET - simply retrieve data

-

HEAD - like GET but no data is sent back

-

POST - send data to server, usually to create records

-

PUT - send data to server, usually to edit existing records

-

DELETE - send data to server, usually to delete a record

-

You can tell the variety of a status code by the left-most

digit:

-

1xx -> Information

-

2xx -> Success (e.g. 200 ok)

-

3xx -> Redirection (e.g. 301 moved)

-

4xx -> Client error (e.g. 403 forbidden, 404 not found)

-

5xx -> Server error (e.g. 500 internal error)

-

You can send HTTP requests using the wget or curl commands in Linux.

HTTP/2

-

The first major revision to HTTP, published in 2015

-

The header is now compressed as binary using HPACK, a

header compression algorithm. However, now, the whole packet is binary, not just

the header.

-

It also has Server Push, which means resources are sent to

the client before they even ask for them. No, it

doesn’t use King Crimson and erases time, instead it

goes like this:

-

Let’s say you requested index.html, and that page uses styles.css and script.js. Normally, you’d request for index.html, receive that, find out you need styles.css and script.js, and do separate requests for those assets, too.

-

With Server Push in HTTP/2, the server already knows that

you’ll need styles.css and script.js, so it prepares those responses in advance before your

browser even has the chance to parse index.html.

-

It can also multiplex multiple requests over a single TCP

connection.

QUIC

-

QUIC (Quick UDP Internet Connections): A UDP protocol to make the web faster, and possibly

replace TCP.

-

It’s pushed by Google, and it still establishes some

“state”/sessions and encryption.

-

It’s experimental, which is why you’ve probably

not heard of it.

-

HTTP/3 will be based on QUIC instead of TCP.

CoAP

-

CoAP (Constrained Application Protocol): provides HTTP-like protocol for simple devices

-

It has a minimal overhead, perfect for small devices.

-

Binary encoding for GET / PUT / etc.

-

It has a simple discovery mechanism and a simple subscribe

method

-

It’s used in things like Ikea smart lighting.

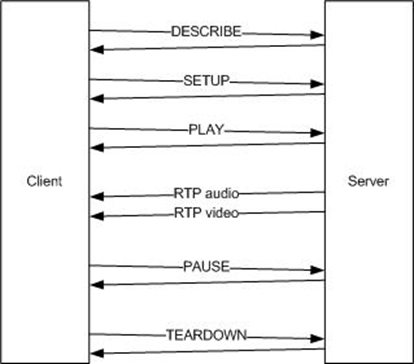

RTSP

-

RTSP (Real Time Streaming Protocol): a protocol used for streaming video / audio

-

It’s used by YouTube and Flash players (R.I.P.).

-

Video frames are packetized so that losses do not corrupt

the whole stream.

-

It has a URL scheme with arguments, similar to HTTP

GET.

-

Real-time transport protocol (RTP) delivers the media

(typically with UDP).

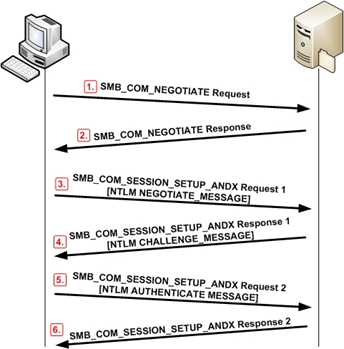

SMB

-

SMB (Server Message Block): a file-sharing protocol from Microsoft that can use

either TCP with port 445 or UDP.

-

It provides authentication, file locking etc.

-

It’s provided in Samba within Linux.

NFS

-

NFS (Network File System): a file-sharing protocol mainly used in Unix /

Linux.

-

It’s common between servers and can cope with very

large files and file numbers.

P2P

-

P2P (Peer-to-peer): instead of using a server-client model, every device has

the same priority and shares data with each other.

-

It distributes the content efficiently across everyone,

instead of having one master device rule all.

-

BitTorrent uses this for files (as you probably

know).

IPv6

-

We’re running out of IPv4 addresses!

-

The organisations handing out IP addresses (called RIRs)

have no unallocated address blocks.

-

It started off with alpha support in 1996, but drafts for

the IPv6 standard was established in 1998.

-

In the bizarre summer of 1999, IPng Tunnelbroker started,

which “tunnelled” v4 to v6.

-

They started putting them in servers at 2004, but major PC

operating systems didn’t start supporting them until

2011.

-

Now, people are starting to use it more, for instance in

2016 Sky has 80% IPv6 coverage.

-

It became an internet standard in July 2017.

IPv6 Features

-

So what can v6 do?

-

It has a 128-bit address, as opposed to the 32-bit v4

address, so there’s

IPv6 addresses.

IPv6 addresses.

-

If we gave each grain of sand on Earth a global address,

we’d only take up 0.000000000000000002%, or

of the total number of addresses we have.

of the total number of addresses we have.

-

To put it in perspective further, if we had a copy of Earth

for every grain of sand that exists on this Earth, and we

gave global addresses to all the grains of sand on every

copy of Earth, we’d still have addresses left over!

Basically, that’s a lot of addresses.

-

IPv6 addresses are usually written in colon-separated hex

format, to keep it shorter.

-

Leading 0’s are also omitted, and a single set of

repeated 0 blocks can be replaced with

“::”.

-

e.g. address 2001:0630:00d0:f102:0000:0000:0000:0022

-

can be shortened to 2001:630:d0:f102:0:0:0:22

-

which can be shortened to 2001:630:d0:f102::22

-

Remember that you can only do this once, or else there will

be confusion over where the zeros are! Source

-

Some other features include:

-

Subnets are represented in CIDR notation (like v4, e.g. 2001:630:d0:f102::/64)

-

Not as easy to remember as v4 because it’s longer,

but you shouldn’t care

-

Multicast is an inherent part of v6, and used in subnets

instead of broadcast

-

v4 and v6 can co-exist in dual-stack deployments

-

Just like how v4 had preset addresses, v6 has address

scopes dedicated to certain tasks:

-

::1/128 - loopback

-

::/128 - unspecified (equivalent to 0.0.0.0)

-

fe80::/10 - Link-local address (used only on local link)

-

fc00::/7 - Unique Local Address (ULA, used within a site)

-

2000::/3 - Global Unicast

-

ff00::/8 - Multicast

-

Hosts usually have multiple v6 addresses!

-

There are conventions, too:

-

/127 can be used for inter-router links

-

Smallest subnet is a /64 (which still allows 18 quintillion addresses)

-

Home / small business users should be given a /56

-

A “site” is usually given /48

-

Minimum v6 allocation from RIR is /32

-

Any subnet larger than /64 can be used

-

We know that v4 addresses are running out, but are there

any technical benefits to v6?

- No more NAT

-

More “plug-and-play” than v4 (require less setting up as Stateless auto configuration

(SLAAC) works)

-

Streamlined header, so more efficient routing and packet

processing

-

Fragmentation occurs at sender, so less strain on

routers

Why use v6?

-

If v4 works for now, why swap to v6 early?

-

You should get familiar with v6 before it becomes

mandatory

-

You can secure v6 and still use v4

-

Support for new applications

-

Enables innovation / teaching / research

-

To be fair, those last two are only for companies.

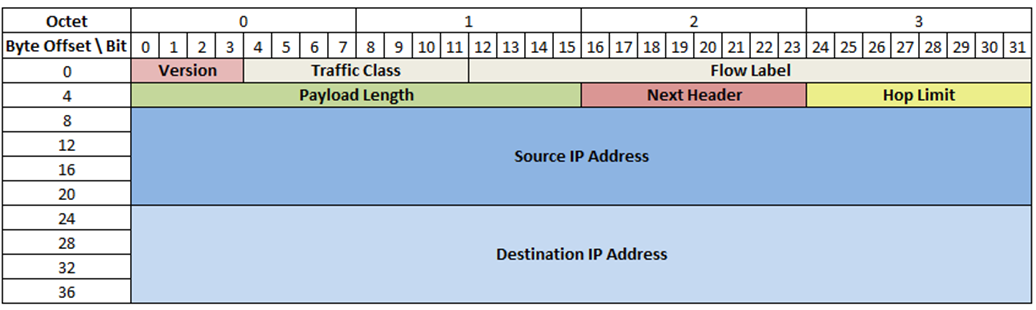

IPv6 headers

-

IPv6 headers are a fixed 40 bytes, which is bigger than

IPv4, but simpler.

-

It uses extension headers rather than options, and has 8

fields:

-

Version: represents the version of the Internet Protocol i.e.

0110

-

Source Address: the address of the originator of the packet

-

Destination Address: the address of the recipient of the packet

-

Traffic class: indicates class/priority of the packet

-

Flow label: a “hint” for routers that keeps packets on

the same path

-

Payload length: size of the payload + extension headers

-

Next header: indicates the type of the next header

-

Hop limit: equivalent to the “time to live” field from

v4

-

Extension headers carry optional Internet Layer

information, and sits after the v6 header but before the

transport layer header in the packet.

-

It’s equivalent to the options field in v4

-

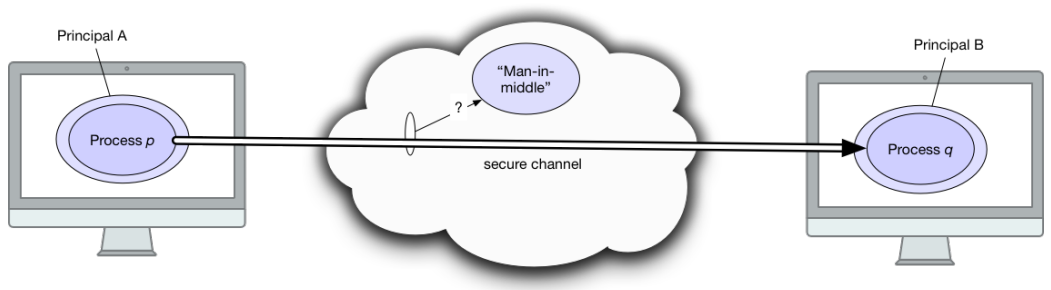

Fragmentation is an extension header

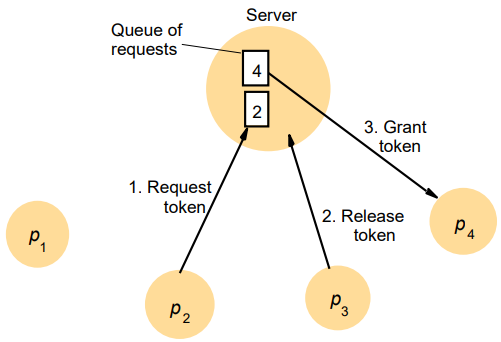

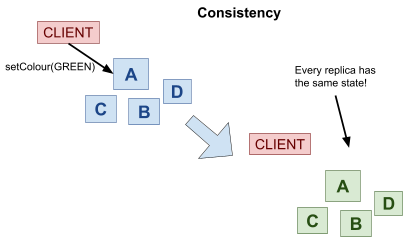

-