Computer Vision

Joshua Gregory

Notes intro 10

Introduction 10

Lecture 1: Eye and Human

Vision 12

The human eye 12

Optics 13

Spectral responses 14

Mach bands 15

Neural processing 16

Lecture 2: Image Formation 16

Decomposition 16

Resolution 17

Fourier Transform 17

What the Fourier Transform actually

does 18

A pulse and its Fourier

transform 19

Reconstructing signal from its Fourier

Transform 20

Magnitude and phase of the Fourier transform of

a pulse 21

Lecture 3: Image Sampling 21

Aliasing in Sampled Imagery 21

Aliasing 22

Sampling Signals 22

Wheels Motion 22

Sampling Theory 23

Transform Pair from Sampled

Pulse 23

2D Fourier Transform 24

Reconstruction (non examinable) 26

Shift Invariance 26

Rotation Invariance 27

Filtering 27

Other Transforms 28

Applications of 2D Fourier

Transform 29

Lecture 4: Point Operators 29

Image Histograms 29

Brightening and Image 29

Intensity Mappings 30

Exponential/Logarithmic Point Operators (non

examinable) 30

Intensity normalisation and histogram

equalisation 31

Histogram Equalisation 31

Applying intensity normalisation and histogram

equalisation 32

Thresholding and eye image 32

Thresholding: Manual vs

Automatic 33

Lecture 5: Group Operators 33

Template Convolution 34

3x3 template and weighting

coefficients 35

3x3 averaging operator 36

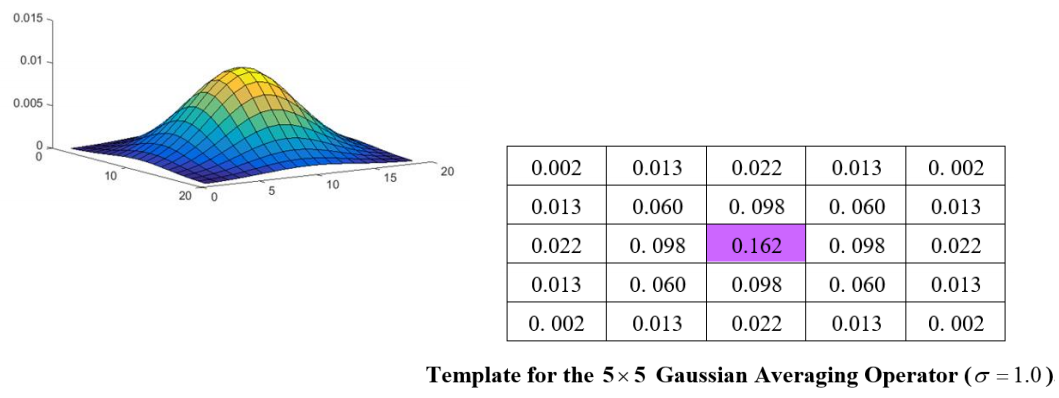

Illustrating the effect of window

size 37

Template convolution via the Fourier

transform 37

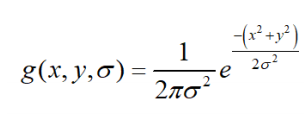

2D Gaussian function 37

2D Gaussian template 39

Applying Gaussian averaging 39

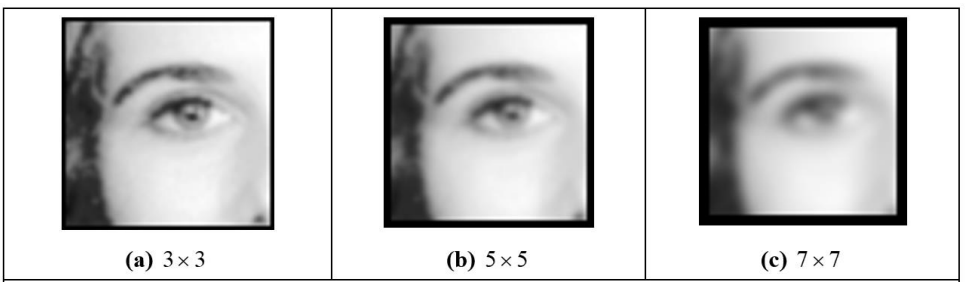

Finding the median from a 3x3

template 39

Newer stuff (non-examinable) 40

Applying non local means 41

Even newer stuff: Image Ray

Transform 41

Applying Image Ray Transform 41

Comparing operators 43

Lecture 6: Edge Detection 43

Edge detection 43

First order edge detection 43

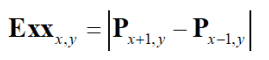

Edge detection maths 45

Templates for improved first order

difference 45

Edge Detection in Vector Format 45

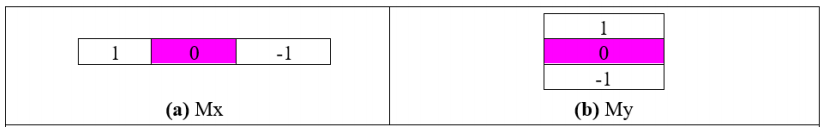

Templates for Prewitt operator 46

Applying the Prewitt Operator 46

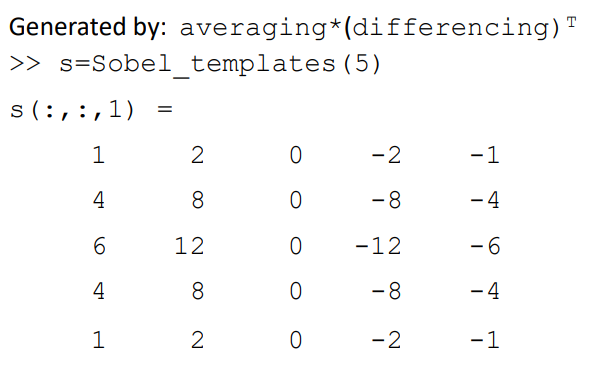

Templates for Sobel operator 47

Applying Sobel operator 47

Generalising Sobel 47

Generalised Sobel (non

examinable) 48

Lecture 7: Further Edge

Detection 48

Canny edge detection operator 48

Interpolation in non-maximum

suppression 49

Hysteresis thresholding transfer

function 50

Action of non-maximum suppression and hysteresis

thresholding 51

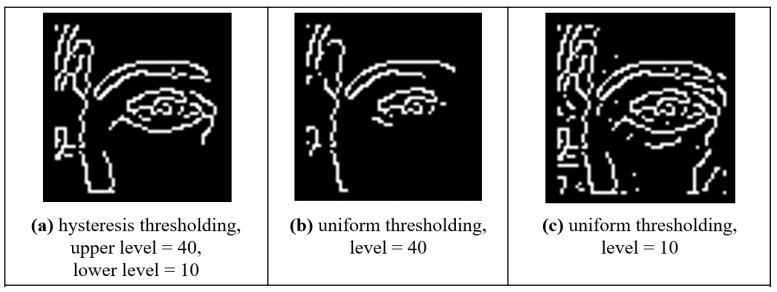

Hysteresis thresholding vs uniform

thresholding 51

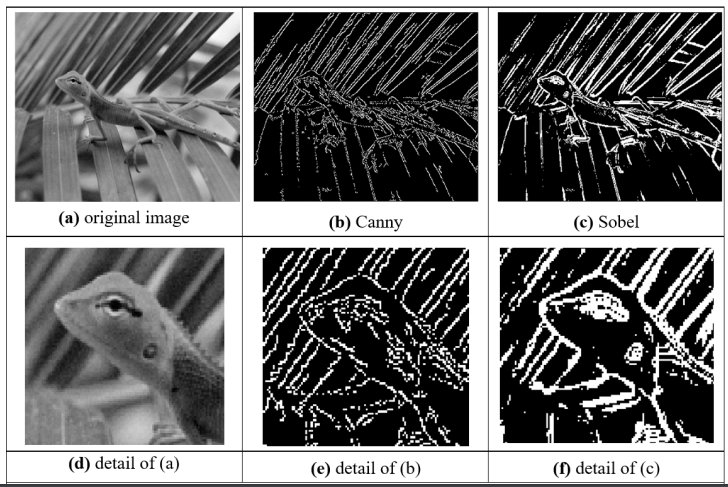

Canny vs Sobel 51

First and second order edge

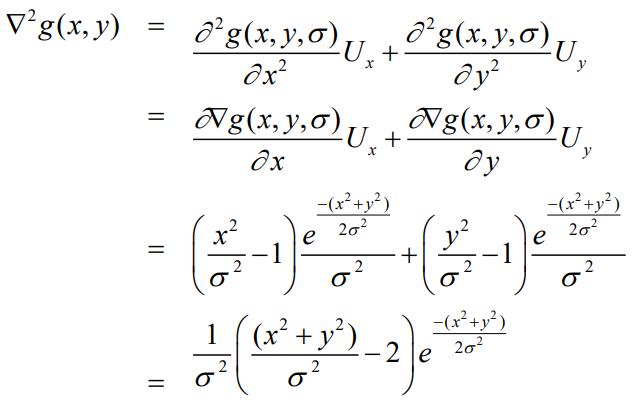

detection 53

Edge detection via the Laplacian

operator 53

Mathbelts on… 54

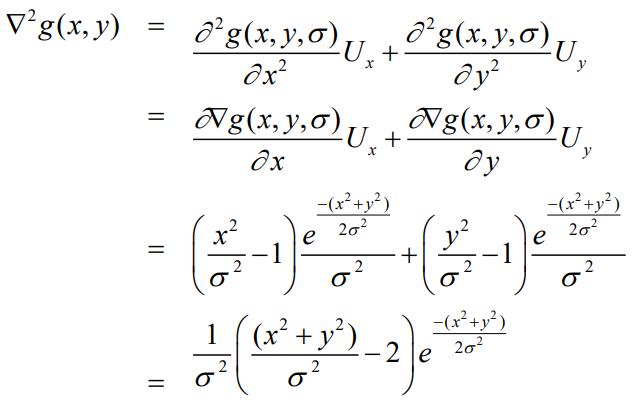

Shape of Laplacian of Gaussian

operator 54

Zero crossing detection 55

Marr-Hildreth edge detection 55

Comparison of edge detection

operators 55

Newer stuff - interest detections

(non-examinable) 56

Newer stuff - saliency 56

Lecture 8: Finding Shapes 57

Feature extraction by

thresholding 57

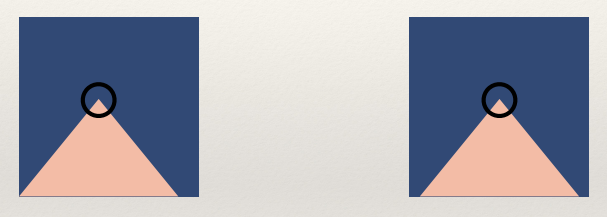

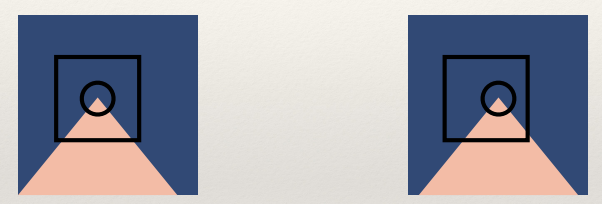

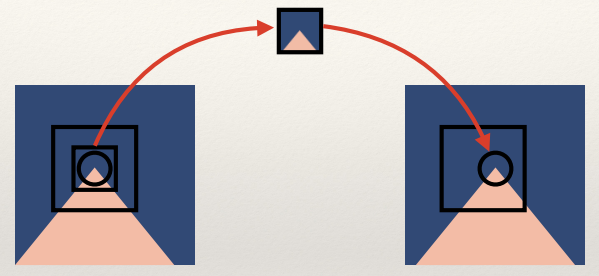

Template Matching 57

Template matching in: 58

In Noisy images 58

In Occluded Images 58

Encore, Monsieur Fourier! (???) (non

examinable) 59

Applying Template Matching (non

examinable) 59

Applying SIFT in ear biometrics (non

examinable) 60

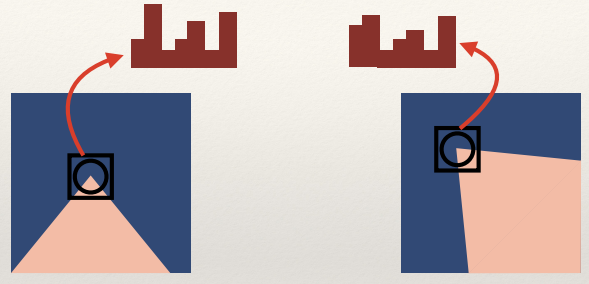

Hough Transform 60

Applying the Hough transform for

lines 61

Hough Transform for Lines …

problems 61

Images and accumulator space of polar Hough

Transform 62

Applying Hough Transform 62

Lecture 9: Finding More

Shapes 63

Hough Transform for Circles 63

Circle Voting and Accumulator

Space 64

Speeding it up 64

Applying the HT for circles 64

Integrodifferential operator? (non

examinable) 64

Arbitrary Shapes 65

R-table Construction 65

Active Contours (non

examinable) 66

Geometric active contours (non

examinable) 67

Parts-based shape modelling (non

examinable) 67

Symmetry yrtemmyS (non

examinable) 68

Lecture 10 Applications/Deep

Learning 68

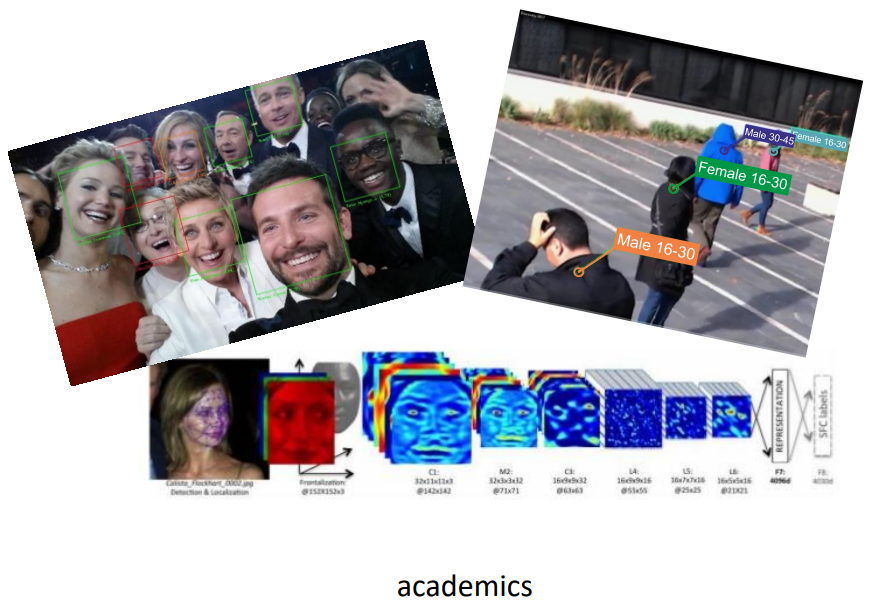

Where is computer vision used? 68

Deep Learning 68

Conclusions 68

Lecture 1: Building machines that

see 71

Key terms in designing Computer Vision

systems 71

Robustness 71

Repeatability 71

Invariance 71

Constraints 71

Constraints in Industrial

Vision 72

Software Constraints 72

Colour-Spaces (non examinable?) 72

RGB Colour-space 72

HSV Colour-space 72

Physical Constraints 73

Vision in the wild 73

Lecture 2: Machine learning for pattern

recognition 73

Feature Spaces 73

Key terminology 74

Density and Similarity 74

Distance in featurespace 74

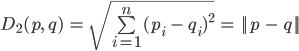

Euclidean distance (L2 distance) 75

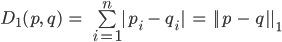

Manhattan/Taxicab distance (L1

distance) 76

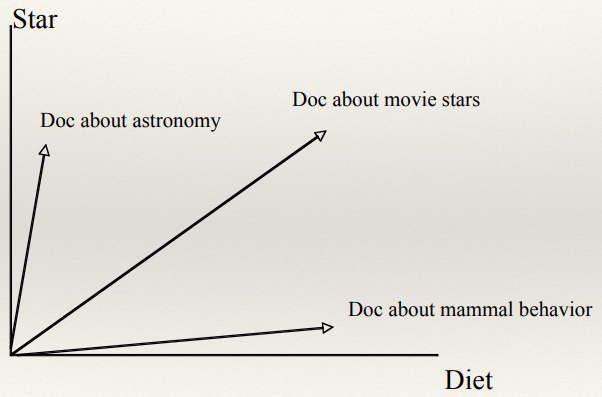

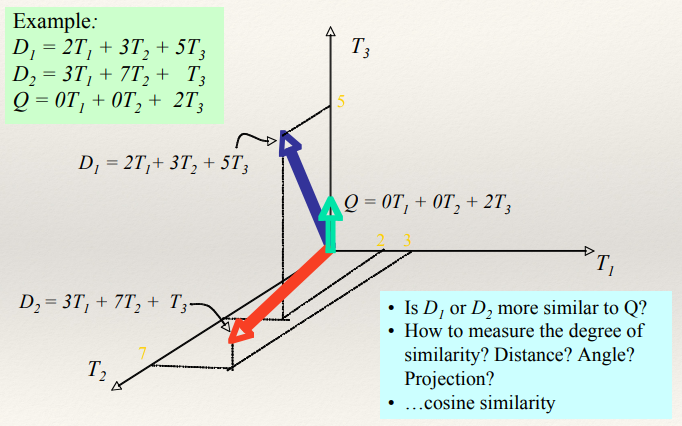

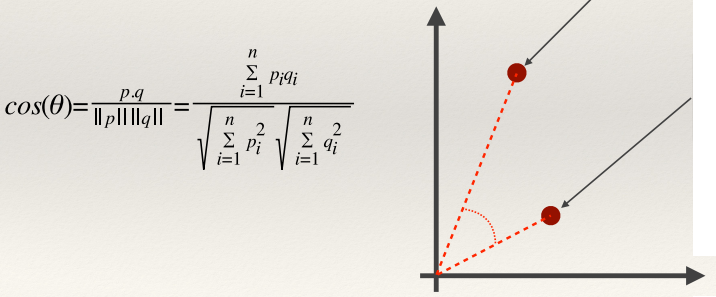

Cosine Similarity 76

Choosing good featurevector representations for machine learning 77

Supervised Machine Learning:

Classification 77

Linear Classifiers 77

Non-linear binary classifiers 78

Multiclass classifiers: KNN 80

KNN Problems (non examinable?) 80

Unsupervised Machine Learning:

Clustering 81

K-Means Clustering 81

Lecture 3: Covariance and Principal

Components 82

Random Variables and Expected

Values 82

Variance 82

Covariance 82

Covariance Matrix 83

Mean Centring 83

Covariance matrix again 84

Principal axes of variation 85

Basis 85

The first principal axis 85

The second principal axis 85

The third principal axis 85

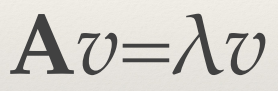

Eigenvectors and Eigenvalues 86

Important Equation 86

Properties 86

Finding Values 87

Eigendecomposition 87

Summary 87

Ordering 87

Principal Component Analysis 87

Linear Transform 87

Linear Transforms 88

PCA 88

PCA Algorithm 89

Eigenfaces 89

Making Invariant 89

Problems 90

Potential Solution… Apply

PCA 90

Lecture 4: Types of image feature and

segmentation 91

Image Feature Morphology 91

Global Features 91

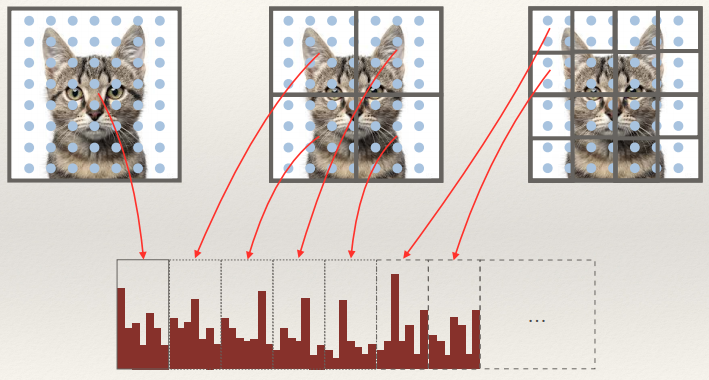

Grid or Block-based Features 91

Region-based Features 92

Local Features 92

Global Features 92

Image Histograms 92

Joint-colour histogram 93

Image Segmentation 94

What is segmentation? 94

Global Binary Thresholding 94

Otsu’s thresholding method 94

Adaptive / local thresholding 95

Mean adaptive thresholding 95

Segmentation with K-Means 95

Advanced segmentation techniques 96

Connected Components 96

Pixel Connectivity 96

Connected Component 96

Connected Component Labelling 96

The two-pass algorithm 97

Lecture 5: Shape description and

modelling 98

Extracting features from shapes represented by

connected components 98

Borders 98

Inner Border 98

Outer Border 98

Two ways to describe shape 99

Region Description: Simple Scalar Shape

Features 100

Area and Perimeter 100

Compactness 100

Centre of Mass 100

Irregularity / Dispersion 101

Moments 102

Standard Moments 102

Central Moments 102

Normalised Central Moments 103

Boundary Description 103

Chain Codes 103

Chain Code Invariance 103

Chain Code Advantages and

Limitations 104

Fourier Descriptors 104

Region Adjacency Graphs 105

Active Shape Models and Constrained Local

Models 105

Lecture 6: Local interest

points 105

What makes a good interest

point? 105

How to find interest points 106

The Harris and Stephens corner

detector 107

Basic Idea 107

Harris & Stephens:

Mathematics 107

Structure Tensor 108

Eigenvalues of the Structure

Tensor 109

Harris & Stephens Response

Function 109

Harris & Stephens Detector 110

Scale in Computer Vision 111

The problem of scale 111

Scale space theory 111

The Gaussian Scale Space 112

Nyquist-Shannon Sampling theorem 112

Gaussian Pyramid 113

Multi-scale Harris &

Stephens 113

Blob Detection Finally 113

Laplacian of Gaussian 113

Scale space LoG 114

Scale space DoG 115

DoG Pyramid 115

Lecture 7: Local features and

matching 116

Local features and matching

basics 116

Local Features 116

Why extract local features? 116

Example: Building a panorama 116

Problem 1: 116

Problem 2: 117

Two distinct types of matching

problem 117

Narrow-baseline stereo 117

Wide-baseline stereo 117

Two distinct types of matching

problem 118

Robust local description 118

Descriptor Requirements 118

Matching by correlation (template

matching) 118

(Narrow baseline) template

matching 118

Problems with wider baselines 120

Local Intensity Histograms 120

Use local histograms instead of pixel

patches 120

Local histograms 120

Overcoming localisation

sensitivity 121

Overcoming lack of illumination

invariance 121

Local Gradient Histograms 121

Gradient Magnitudes and

Directions 121

Gradient Histograms 121

Building gradient histograms 121

Rotation Invariance 121

The SIFT feature 122

Adding spatial awareness 122

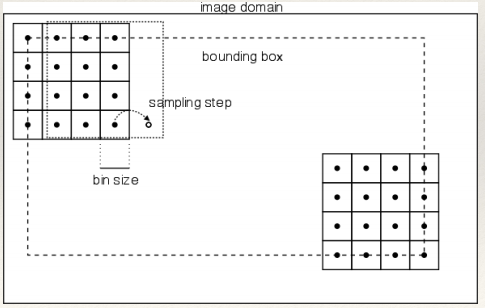

SIFT Construction: sampling 123

SIFT Construction: weighting 123

SIFT Construction: binning 123

Matching SIFT features 124

Euclidean Matching 124

Improving matching performance 124

Lecture 8: Consistent

matching 124

Feature distinctiveness 124

Constrained matching 125

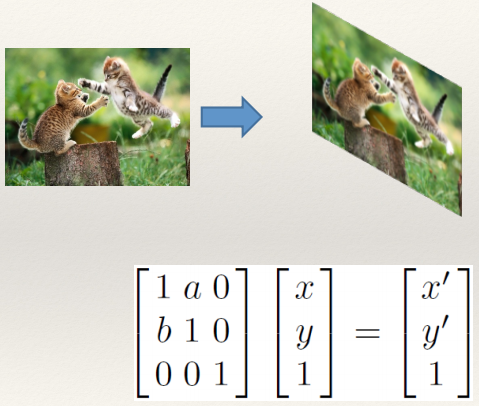

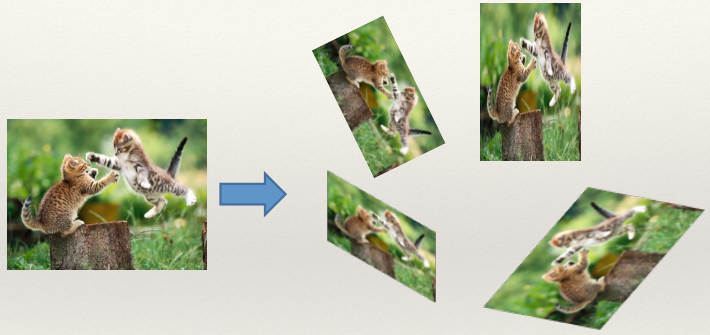

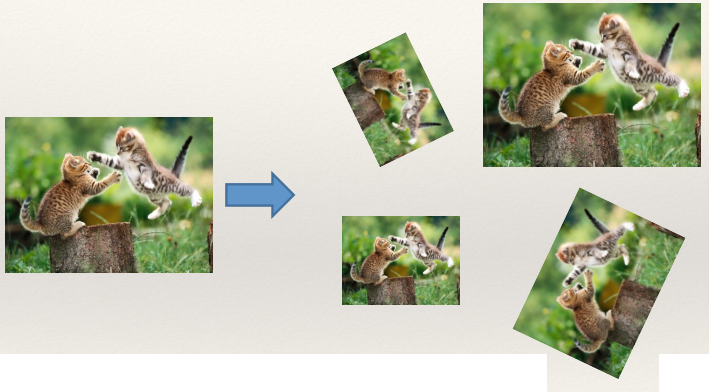

Geometric Mappings 125

What are geometric transforms? 125

Point Transforms 125

The Affine Transform 125

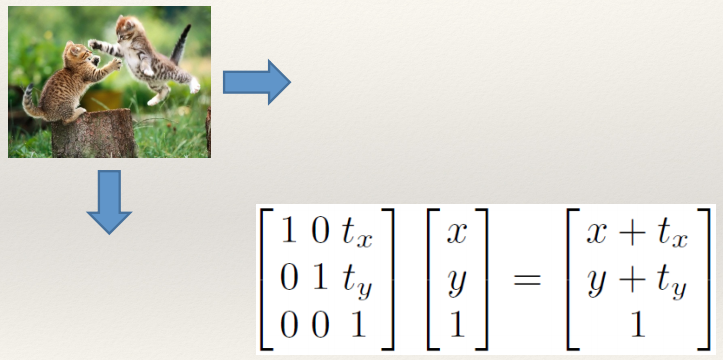

Translation 126

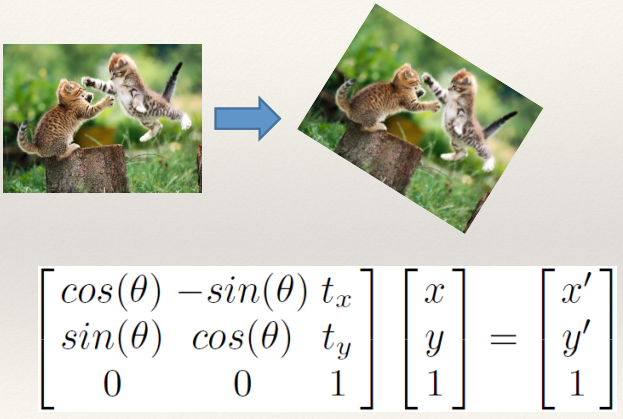

Translation and Rotation 126

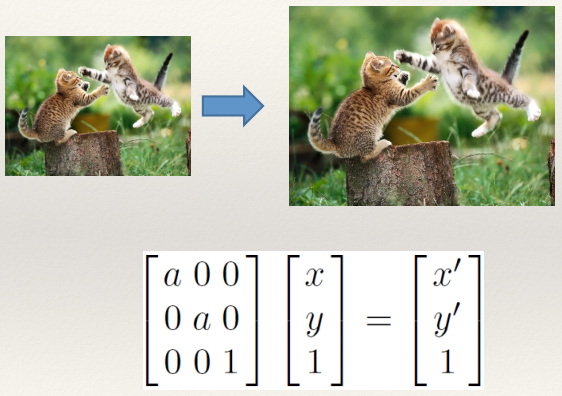

Scaling 126

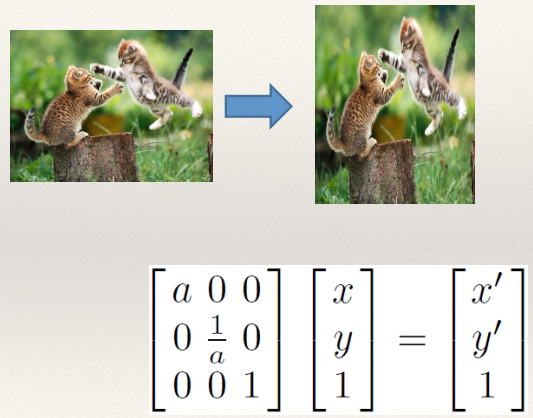

Aspect Ratio 126

Shear 126

Degrees of Freedom 126

Affine Transform 129

Similarity Transform 130

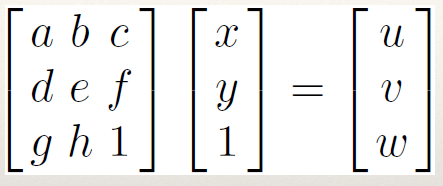

More degrees of freedom 130

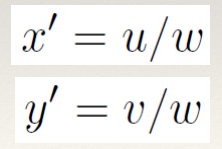

Homogeneous coordinates 131

The Planar Homography (Projective

Transformation) 131

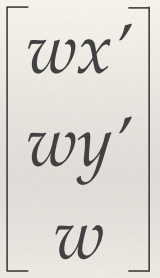

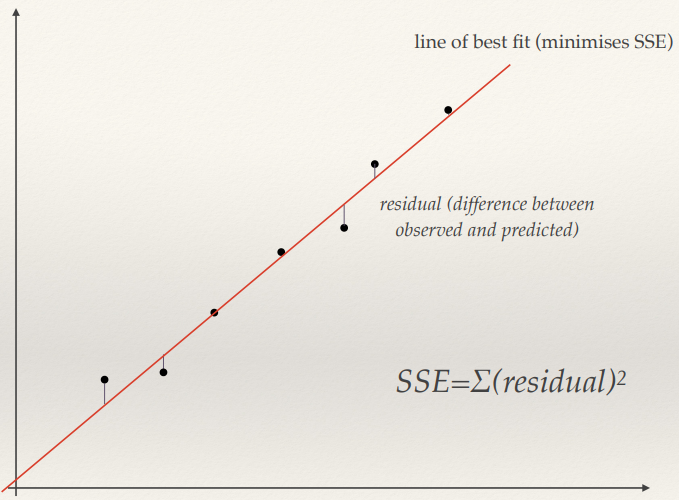

Recovering a geometric mapping 131

Simultaneous equations 131

Least-squares 132

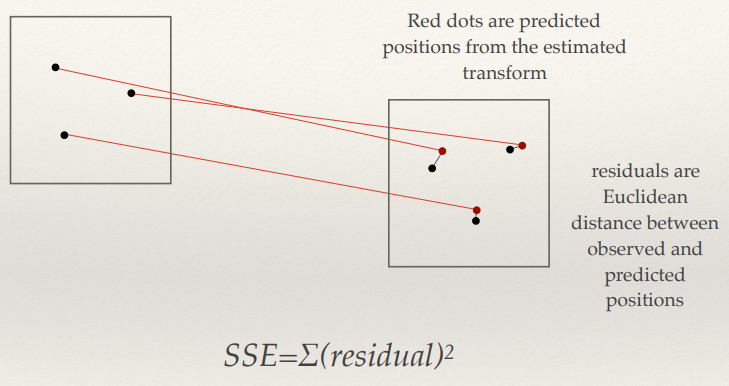

Robust Estimation 132

Problem: Noisy data 133

Robust estimation techniques 133

RANSAC: RANdom SAmple Consensus 133

Further applications of robust local

matching 134

Object recognition & AR 134

3D reconstruction 134

Problems with direct local feature

matching 134

Local feature matching is slow! 134

Efficient Nearest Neighbour

Search 134

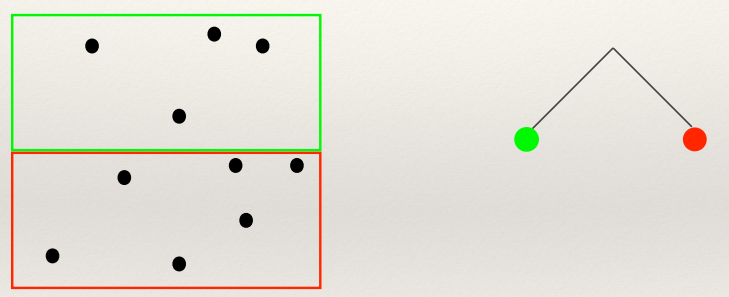

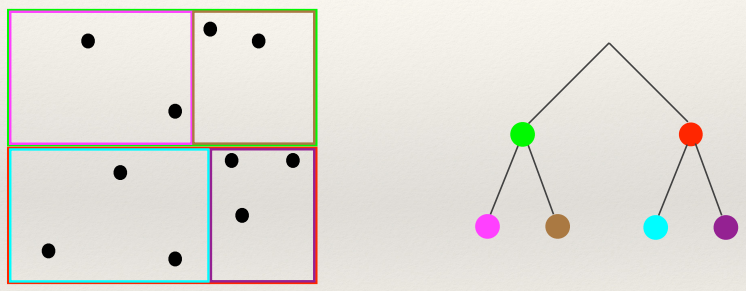

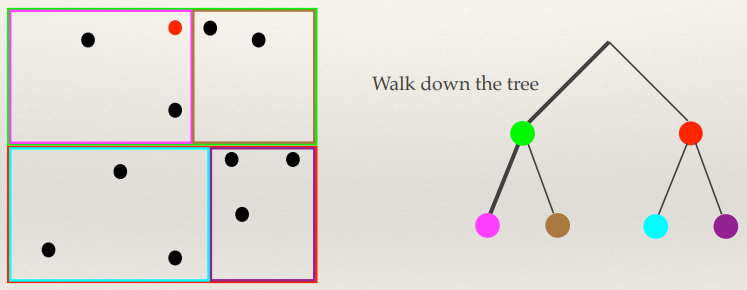

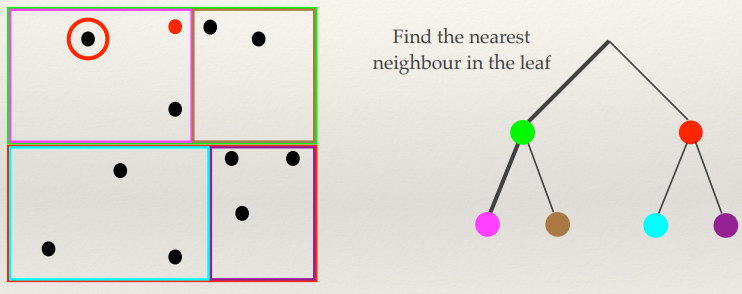

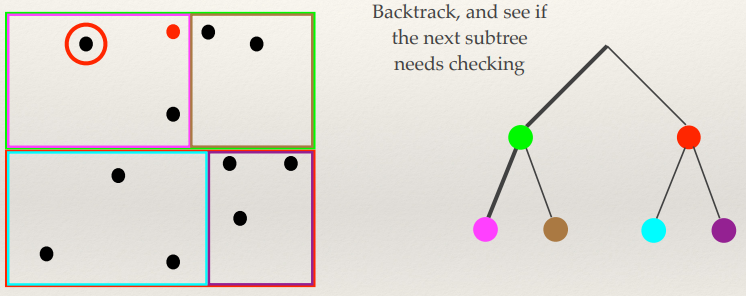

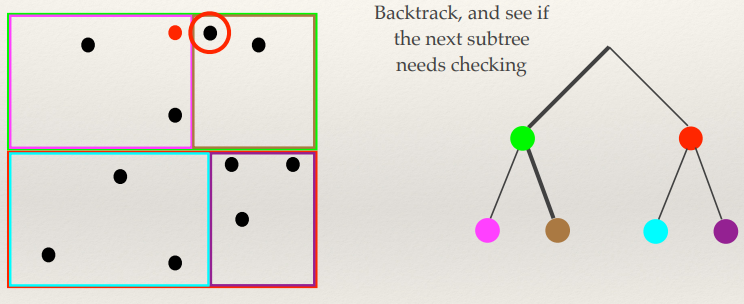

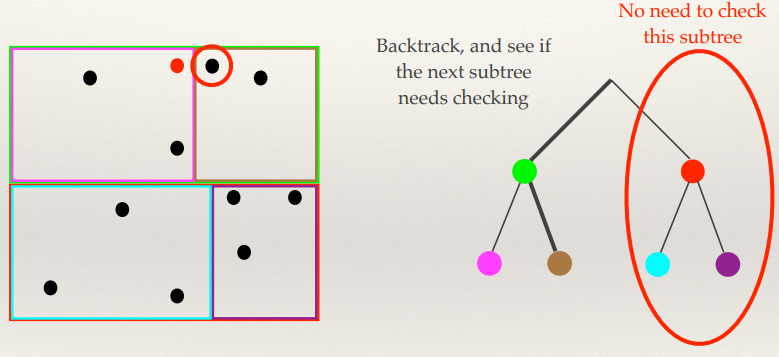

K-D Trees 134

K-D Tree problems 135

Hashing 137

Sketching 138

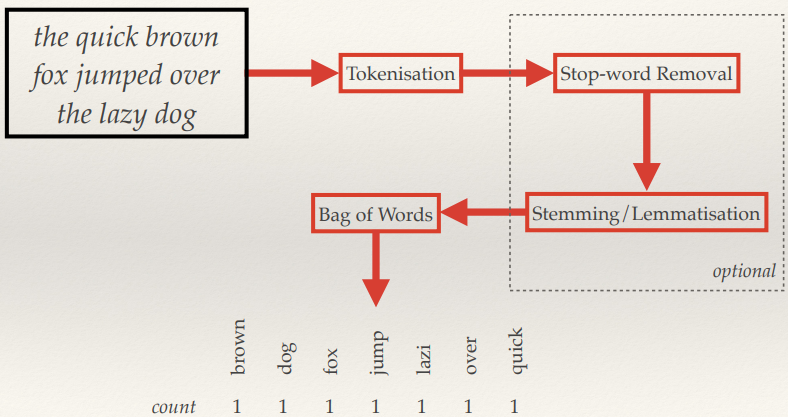

Lecture 9: Image search and Bags of Visual

Words 138

Text Information Retrieval 138

The bag data structure 138

Bag of Words 138

Text processing (feature

extraction) 139

The Vector-Space Model 139

Bag of Words Vectors 140

The Vector-space Model 140

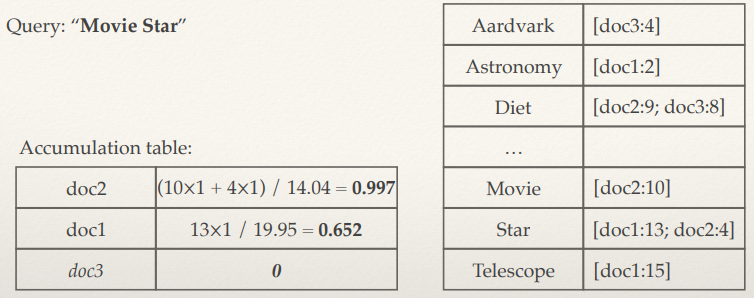

Searching the VSM 141

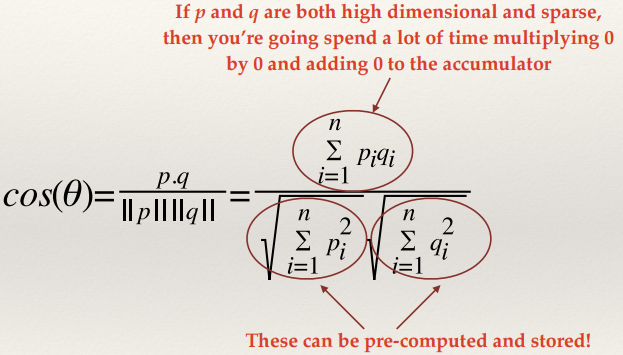

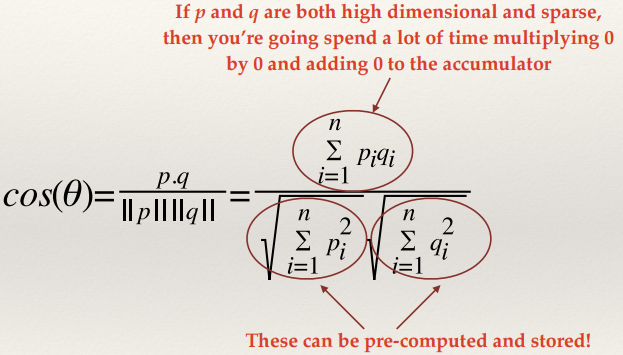

Recap: Cosine Similarity 141

Inverted Indexes 141

Computing the Cosine Similarity 142

Weighting the vectors 142

Possible weighting schemes 143

Vector Quantisation 143

Learning a Vector Quantiser 143

Vector Quantisation 143

Visual Words 144

SIFT Visual Words 144

Bags of Visual Words 144

Histograms of Bags of Visual

Words 145

Visualising Visual Words 146

The effect of codebook size 146

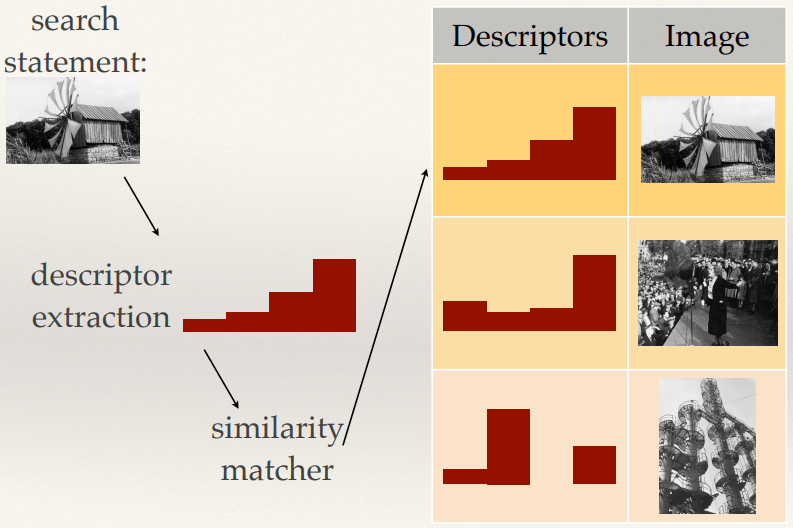

Content-based Image Retrieval 147

BoVW Retrieval 147

Optimal codebook size 147

Problems with big codebooks 147

Overall process for building a BoVW retrieval

system 148

Lecture 10: Image classification and

auto-annotation 148

Multilabel classification 148

Object Detection / Localisation 149

Slide summary: Challenges in Computer

Vision 149

Aside: Optimal codebook size 150

Another slide summary: Stuff 150

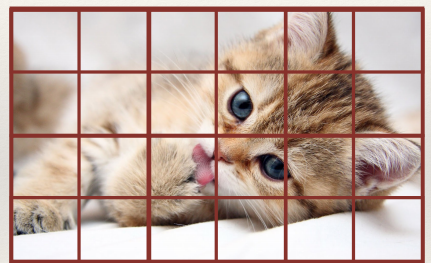

Dense Local Image Patches 150

Dense SIFT 150

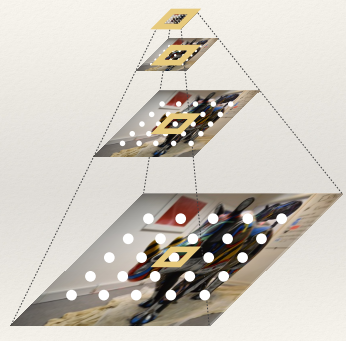

Pyramid Dense SIFT 151

Spatial Pyramids 152

Developing and benchmarking a BoVW scene

classifier 152

Evaluation Dataset 152

Building the BoVW 153

Training classifiers 153

Classifying the test set 153

Evaluating Performance 153

Lecture 11: Towards 3D

vision 153

Summary Summary 154

Programming for computer vision & other

musings related to the coursework 155

Writing code for computer

vision 155

Most vision algorithms are

continuous 155

Always work with floating point

pixels 155

Guidelines for writing vision

code 155

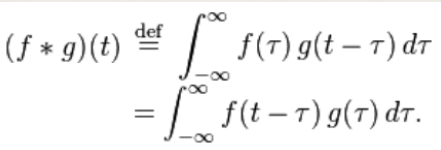

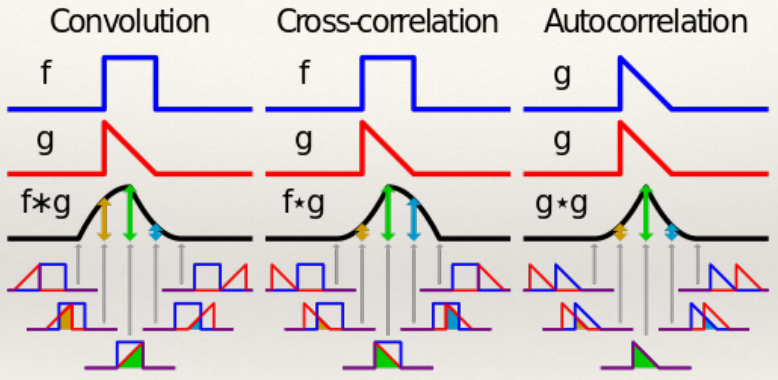

Convolution 155

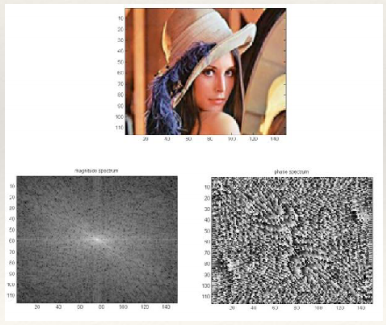

Aside: phase and magnitude 156

Aside: Displaying FFTs 156

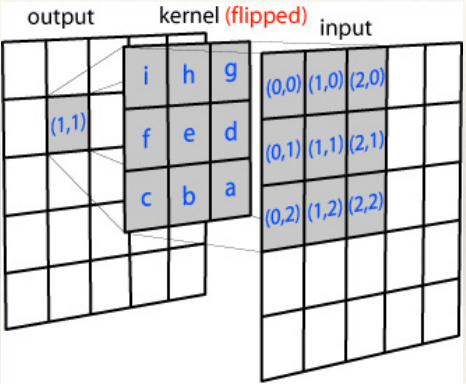

Template Convolution 157

What if you don’t flip the

kernel? 158

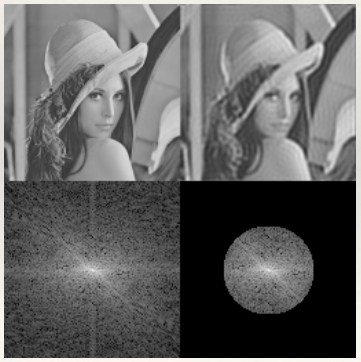

Ideal Low-Pass filter 158

Ideal Low-Pass filter -

problems 159

Gaussian filters - why 160

Building Gaussian Filters 160

High-pass filters 161

Note - Don’t do this! 161

High-pass filters have a mixture of negative

and positive coefficients 162

Building hybrid images 162

…is really simple 162

TL;DR 163

Part 1: Mark 164

Part 2: Jon 164

Lec 1 164

Lec 2 164

Lec 3 165

Lec 4 167

Lec 5 169

Lec 6 172

Lec 7 174

Lec 8 176

Lec 9 178

Lec 10 180

TL;DR By Mark Towers 182

Mark (edited by Joshua Gregory) 182

Jon 187

Notes intro

- Basically this is a summary of the slides, plus some extra

explanation.

- Text which is coloured dark yellow: “like this”, is content from the slides I have decided to

include as I think it is probably a bit important/interesting, even though there was no hand with bow

for it:

Introduction

Images consist of picture elements known as “pixels”.

- (x,y) - location

- z - depth

- colour

- colour- T - time

2D Images are matrices of numbers.

- Grey level image

- 3D view

- Corresponding Matrix

Point Operations:

- Modify Brightness

- Find Intensity

Group Operations:

- Image filtering

- Edge detection

Feature Extraction

- Roads in remotely-sensed image

- Artery in ultrasound image

Applications of Computer Vision

- Image coding (MPEG/JPEG)

- Product inspection

- Robotics

- Modern cameras/phones

- Medical imaging

- Demography

- Biometrics

Part 1: Mark

Lecture 1: Eye and Human Vision

Is human vision a good model for computer vision?

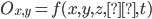

The human eye

Diagram of the human eye. Light comes in through the lens and is focused onto the

retina. These light impulses are then sent through the optic nerve.

Cones are photopic (Coloured vision) and Rods are scotopic (Black and white) (Nixon

made a mistake on his notes) - Thanks Tim :)

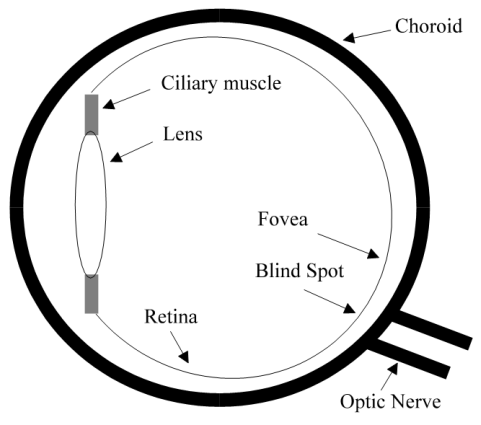

Optics

Image is flipped, just like a camera. Our brain works this out and we perceive it the

right way up.

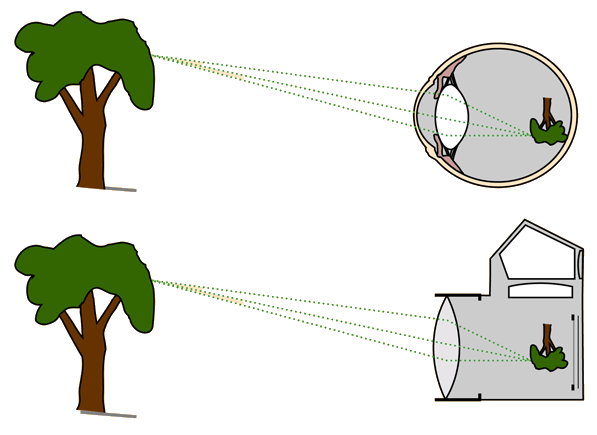

Spectral responses

The eye is most sensitive to green light, least to blue, but the point is that

colours are at different response levels.

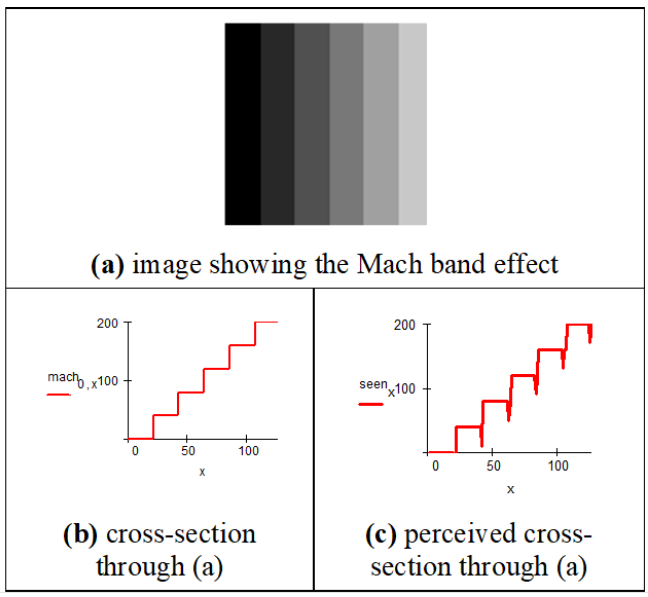

Mach bands

Mach bands are an optical illusion whereby the contrast between edges of slightly

differing shades of grey is exaggerated when they are touching.

[Source: Wikipedia]

This and many other optical illusions (edges, static, benham’s disk... you can

look at in the slides) demonstrate that our eyes can actually be not so good as a vision system.

Neural processing

Lecture 2: Image Formation

What is inside an image?

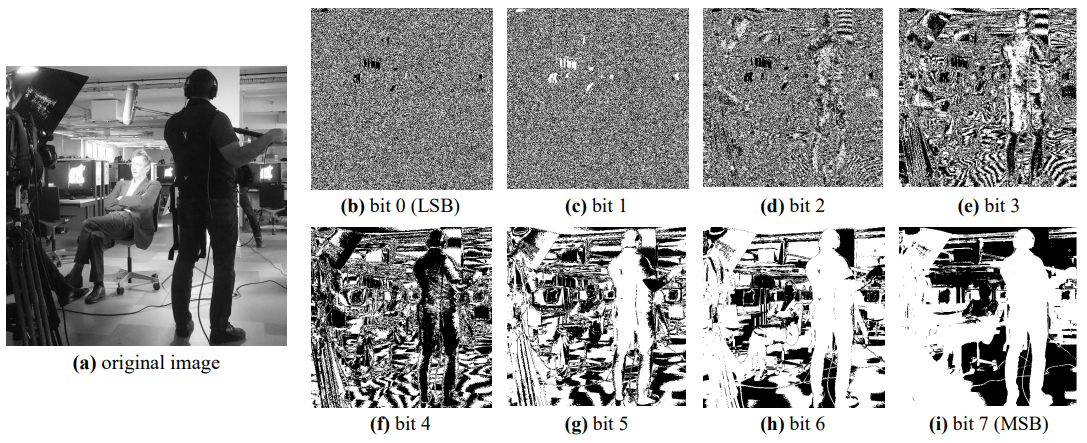

Decomposition

You can decompose an image into its bits.

- For a greyscale image, each pixel in the image is usually

represented by 8 bits; a byte.

- The number value ranges from 0-255, which represents all the

greyscale colours from white to black.

- When you decompose an image, you are only looking at the one bit in

each pixel, and this is either a 0 or 1, so either black or white.

- So for bit 0, only the first bit of each pixel is being shown in the

image. As you can see this bit represents the least amount of information and is very noisy.

- As you look at the images increasing in the bit position, you can

see more information is represented.

- The main point here is that the image gets clearer as you go up to

the most significant bit (bit 7).

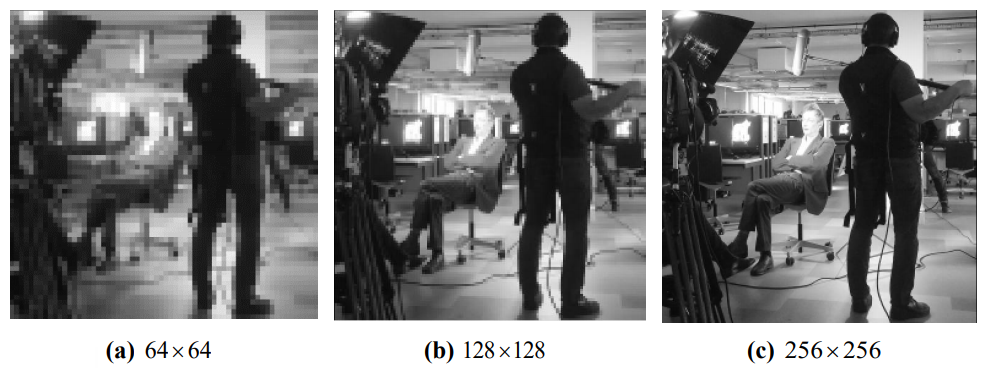

Resolution

Images are also (obviously) less detailed at lower resolutions.

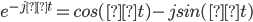

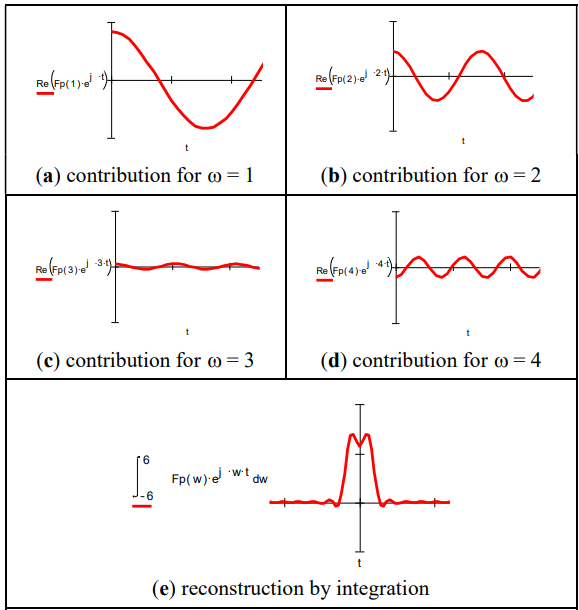

Fourier Transform

Any periodic function is the result of adding up sine and cosine waves of different

frequencies.

[This is a good visual intuitive: https://www.youtube.com/watch?v=spUNpyF58BY]

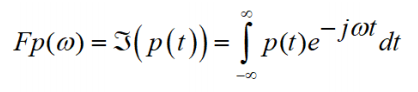

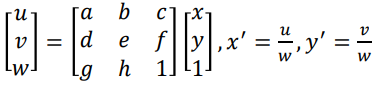

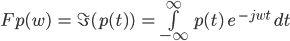

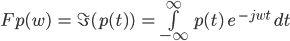

is the Fourier transform

is the Fourier transform

denotes the Fourier transform process:

denotes the Fourier transform process: is the angular frequency,

is the angular frequency,  measure in radians/s (where frequency

measure in radians/s (where frequency  is the reciprocal of time

is the reciprocal of time  ,

,  )

) is the complex variable

is the complex variable  (usually

(usually  )

) is a continuous signal (varying

continuously with time)

is a continuous signal (varying

continuously with time) gives the frequency components in

gives the frequency components in

What the Fourier Transform actually does

(To do: explain how a function that deals with (sound) waves can be used on images

with pixels. If anyone reading can explain that’d be great)

A: The fourier transform is just a technique for mapping signals that vary in one

dimension to the frequency domain of that dimension. In the case of audio this means transforming air

pressure from the time domain to the (temporal) frequency domain. If we consider the intensity of an image

to be our signal, which varies in space (let's say along the x axis), then the FT will transform the

signal into spatial frequency in the x dimension.

Now, we can generalise the fourier transform to take signals that are defined in

more than one mutually orthogonal dimensions, e.g. an image represented by pixel intensity defined in x and

y. Now the FT transforms the image into the (spatial) frequency domain, defined in two dimensions (spatial

frequency in x, and spatial frequency in the y direction). Hope that helps? - James

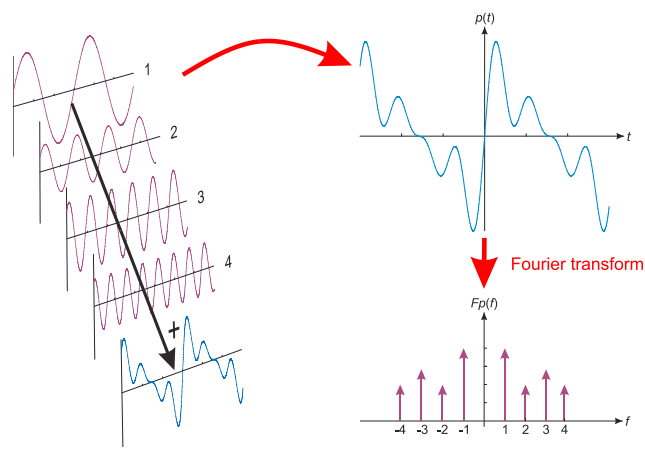

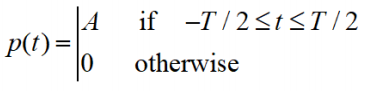

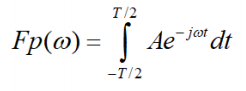

- Pulse

- Use Fourier

- Evaluate integral

- And get result

A pulse and its Fourier transform

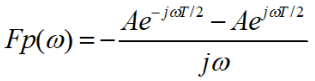

Reconstructing signal from its Fourier Transform

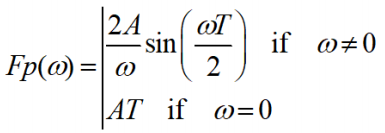

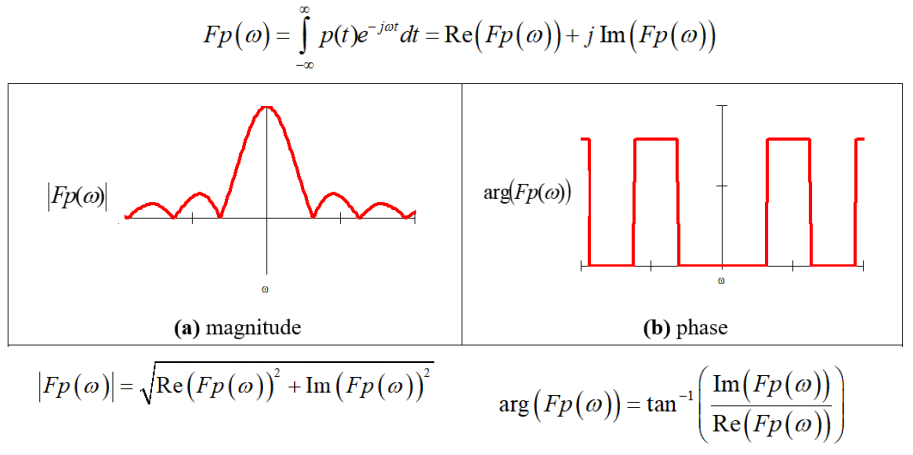

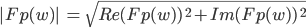

Magnitude and phase of the Fourier transform of a

pulse

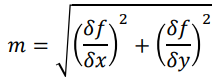

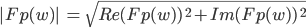

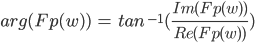

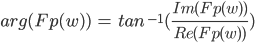

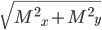

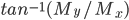

Re() is the real function and Im() is the imaginary function so for expression

then

then  and

and  . In the complex plane, you

can imagine the imaginary numbers as another number line with the real number as the other number. So to

calculate the magnitude, it is Pythagoras’ theorem of the two numbers while the angle is the angle of

the two numbers. For more information, look up complex numbers. (Thanks Mark ;) )

. In the complex plane, you

can imagine the imaginary numbers as another number line with the real number as the other number. So to

calculate the magnitude, it is Pythagoras’ theorem of the two numbers while the angle is the angle of

the two numbers. For more information, look up complex numbers. (Thanks Mark ;) )

The magnitude is the scalar amount of the frequency and the phase is its

offset (from a regular sin wave) (-Alex)

Lecture 3: Image Sampling

How is an image sampled and what does it

imply?

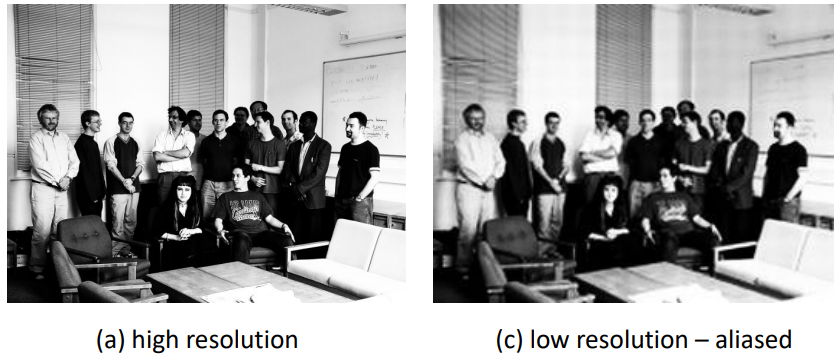

Aliasing in Sampled Imagery

- As you can see the left image looks clearer as it is at a higher

resolution, and therefore literally has more information than lower resolutions.

- The low resolution image on the right is aliased, and appears a bit

blurry to the left image.

- This is probably a result of a low sampling rate.

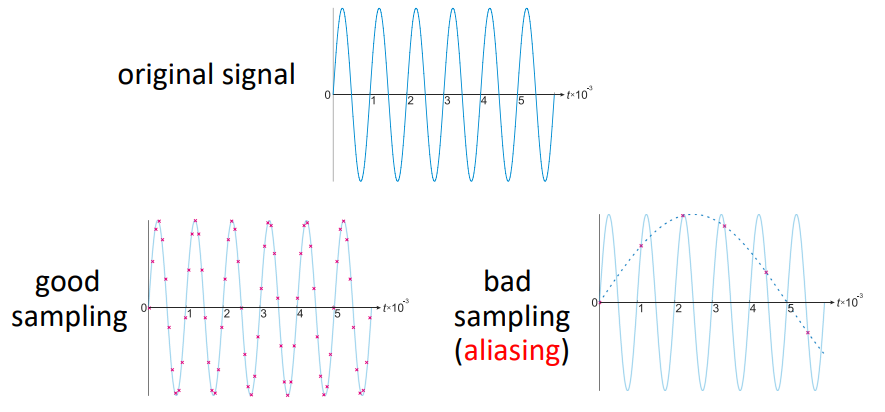

Aliasing

Aliasing is an effect that causes different signals to become indistinguishable

when sampled. It also often refers to the distortion or artifact that results when a signal reconstructed

from samples is different from the original continuous signal. [-Wiki]

Sampling Signals

- The original signal is a continuous function. We want to sample

this, and have to do it digitally.

- If we sample at a good (high) rate, then we can capture a good

representation of the signal.

- If we sample at a bad (low) rate, then this captured signal become

aliased, and is not a good representation.

- As you can see, the signal wave is completely the wrong

representation from the original image.

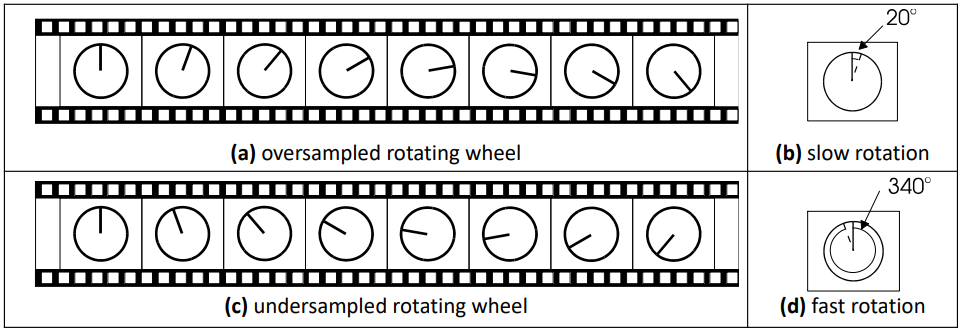

Wheels Motion

- Have you ever seen a wheel (or propeller) turn sooooooooooo fast in

a video (or even real life) that it looks like it’s not turning at all!?

- Or even turning the wrong way!?!!??!??

- This is due to the sampling rate the wheel is captured at.

- The wheel is spinning faster or slower to (a multiple) of the

sampling rate.

- This can cause the illusion of standing or reverse turning.

- Look up some videos or something to see this in action.

- The point is the sampling rate matters in vision.

Sampling Theory

- Nyquist’s sampling theorem is 1D.

- E.g. speech 6kHz, sample at 12 kHz.

- Video bandwidth (CCIR) is 5 MHz.

- Sampling at 10 MHz gave 576x576 images.

- Guideline: “two pixels for every pixel of

interest”.

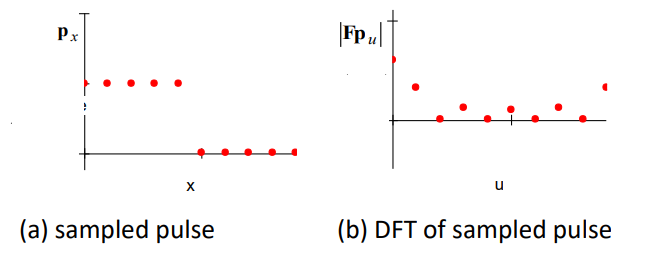

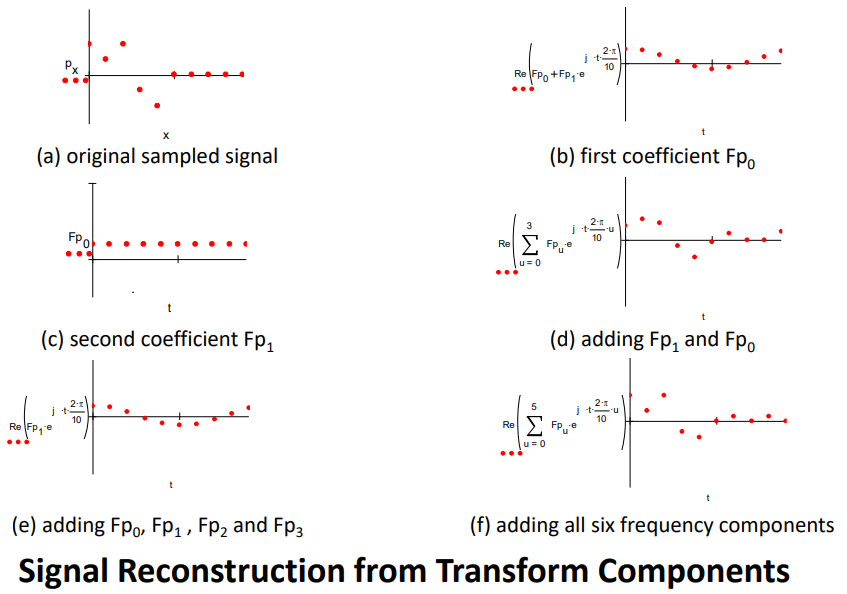

Transform Pair from Sampled Pulse

Some stuff to do with the Fourier Transform…

(If anyone could explain what these graphs are demonstrating that’d be

epic)

A: It’s showing the effect of adding reconstructing the sampled signal from

its frequency components. However, the images for (b) and (c) are the wrong way around. Essentially

it’s trying to demonstrate that as you add more frequency components from the transform of the sampled

signal, the reconstruction becomes a better approximation of the sampled signal, until eventually (f) all

the frequency components are present and the

reconstruction is perfect [(a) and (f) are identical]. - James

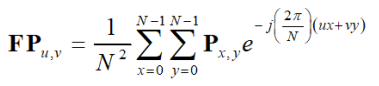

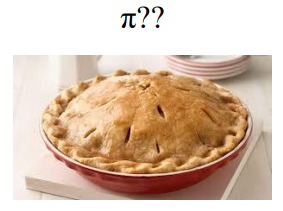

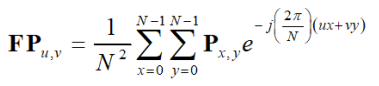

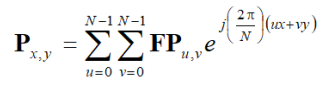

2D Fourier Transform

- The Fourier Transform has a forward and

inverse function.

- The forward transform:

- Two dimensions of space, x and

y

- Two dimensions of frequency, u and

v

- Image NxN pixels Px,y

- The inverse transform:

Reconstruction (non examinable)

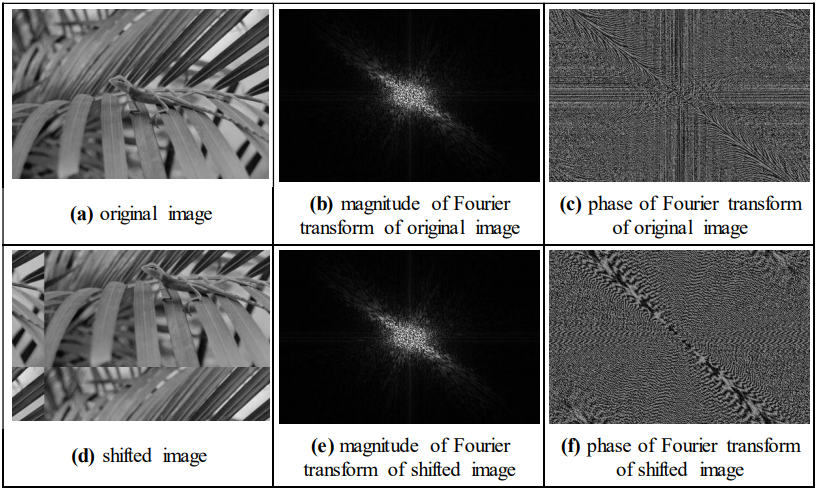

Shift Invariance

- Images can be shifted.

- What happens to the magnitude and phase of the fourier transform from shifted

images.

- This shows that the magnitude is not affected by shifting.

- But the phase is affected by shifting.

- What about for rotation?

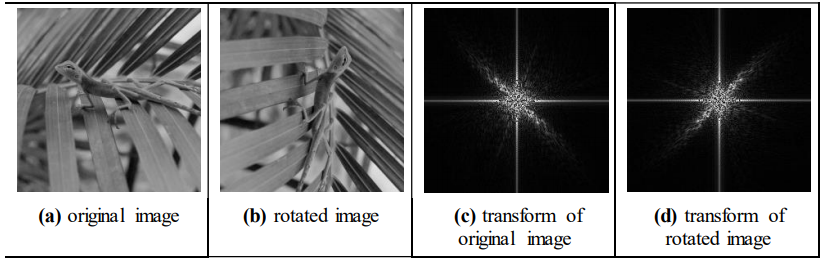

Rotation Invariance

- As you can see, the transform is just rotated too.

Filtering

- Fourier gives access to frequency components.

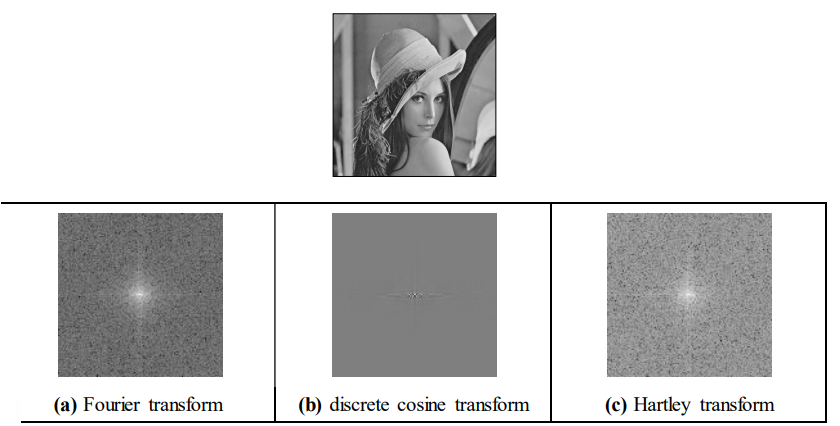

Other Transforms

Applications of 2D Fourier Transform

- Understanding and analysis

- Speeding up algorithms

- Representation (invariance)

- Coding

- Recognition / understanding (e.g. texture)

Lecture 4: Point Operators

How many different operators are there which

operate on image points?

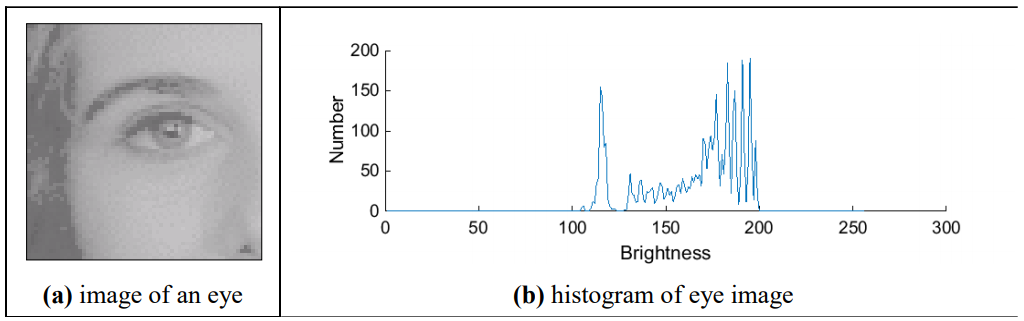

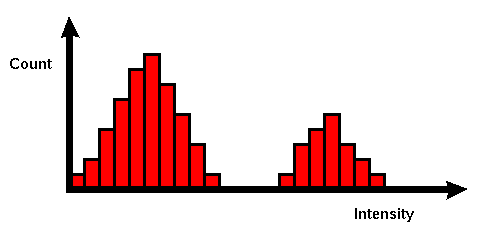

Image Histograms

- A histogram is a graph of frequency.

- In this context it is showing how many pixels have a certain

brightness level.

- This data is a global feature.

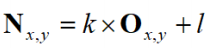

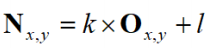

Brightening an Image

- An Image can be brightened by using this formula:

- Where:

- N -

new image

- O -

old image

- k -

gain

- l -

level

- x,y -

coordinates

Intensity Mappings

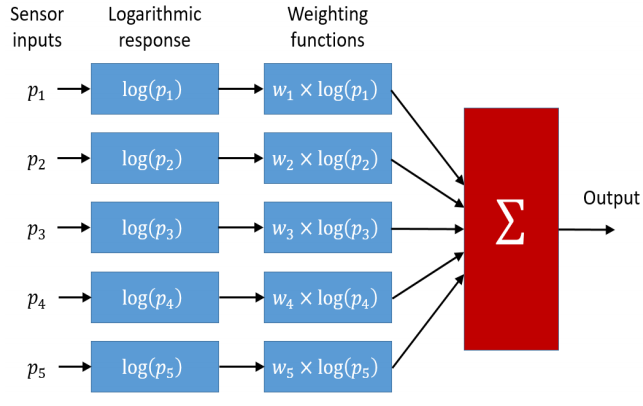

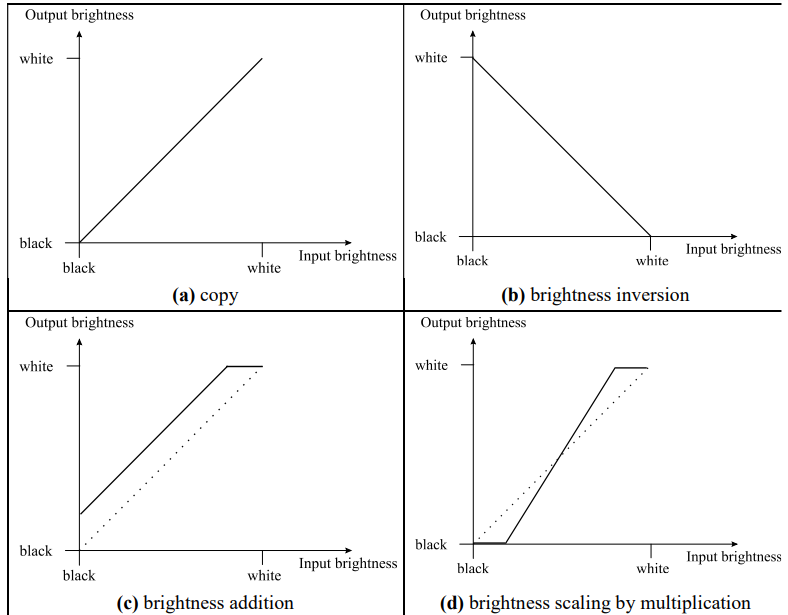

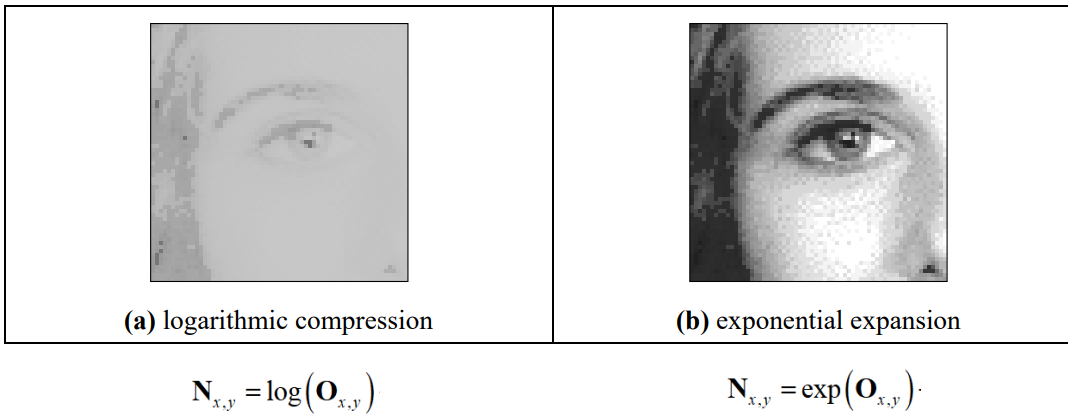

Exponential/Logarithmic Point Operators (non examinable)

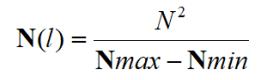

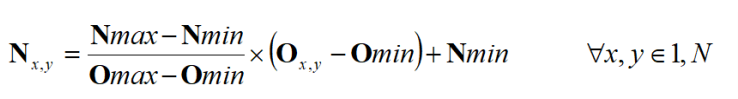

Intensity Normalisation

Intensity Normalisation

- N - new image

- O - old image

- x,y - coordinates

- Nmax - maximum input

- Nmin - minimum input

- Omax - maximum output

- Omin - minimum output

- Avoids need for parameter choice

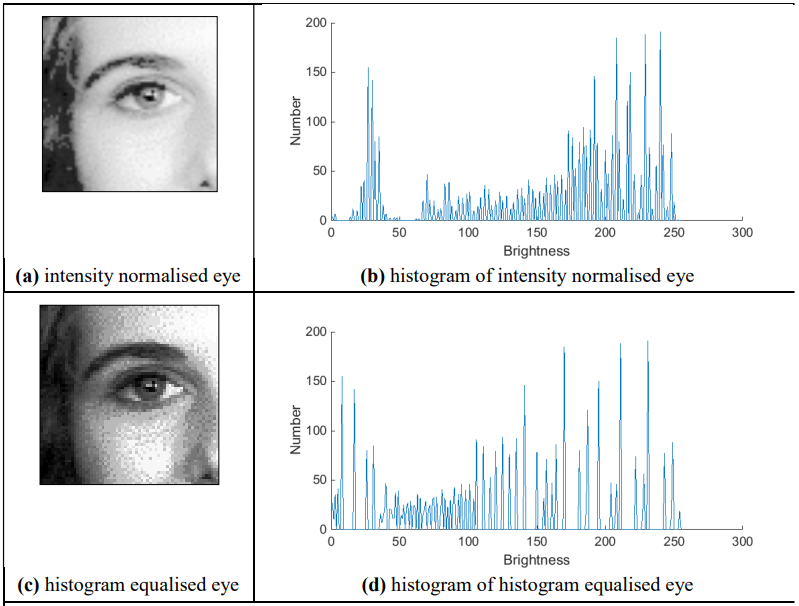

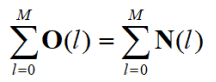

Intensity normalisation and histogram equalisation

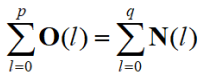

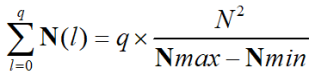

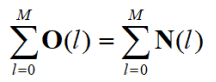

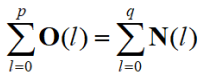

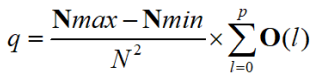

Histogram Equalisation

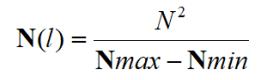

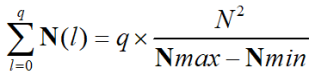

- N2 points in the

image; the sum of points per level is equal

- Cumulative histogram up to level p should be transformed to cover up to the level q

- Number of points per level in the output picture

- Cumulative histogram of the output picture

- Mapping for the output pixels at level q

Applying intensity normalisation and histogram

equalisation

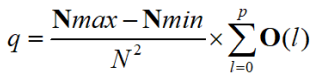

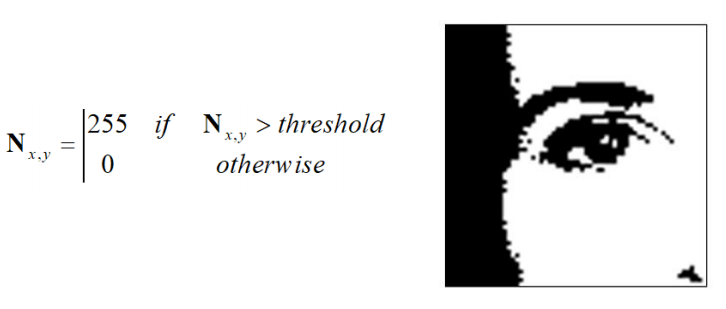

Thresholding and eye image

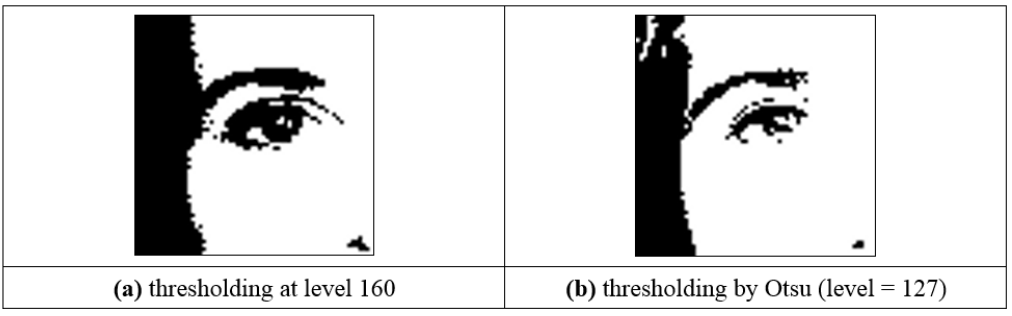

Thresholding: Manual vs Automatic

Other thresholding includes Entropic thresholding and Optimal Thresholding

Lecture 5: Group Operators

How do we combine points to make a new point in a

new image?

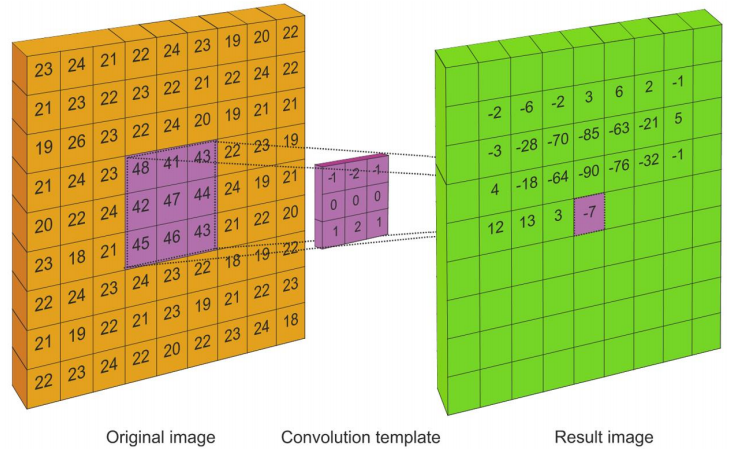

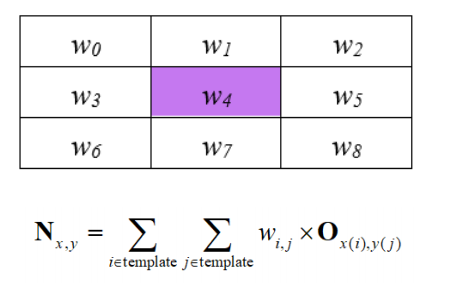

Template Convolution

I’m sure everyone knows about this by now…

This is when you take an original image and then apply a convolution template over

every pixel to create a resultant pixel value.

(to do: more explanation…)

- Convolution is a system response

- Template convolution includes coordinate inversion in x and in y

- Inversion is not needed if the template is symmetric

3x3 template and weighting coefficients

Where wi,j are the weights

and x(i), y(j) denote the position of the point that

matches the weighting coefficient position

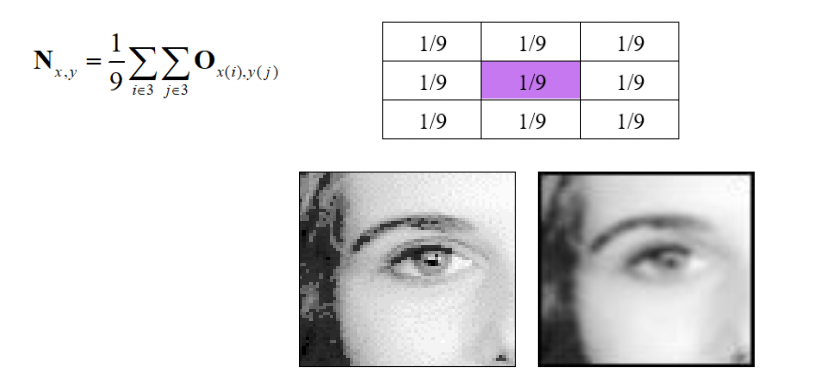

3x3 averaging operator

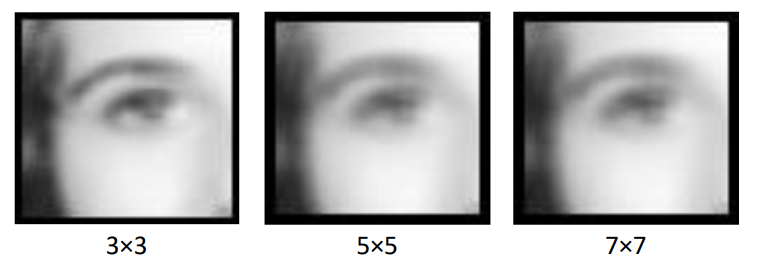

Illustrating the effect of window size

Larger windows lead to more blur.

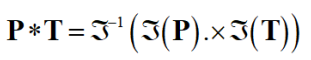

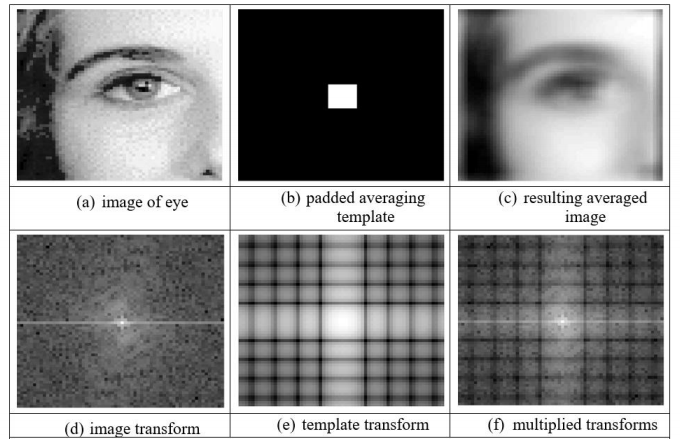

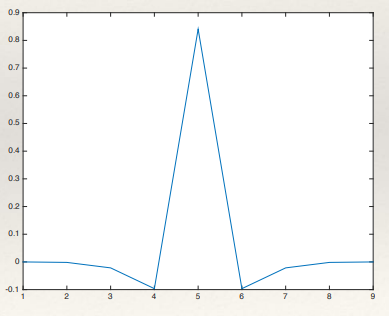

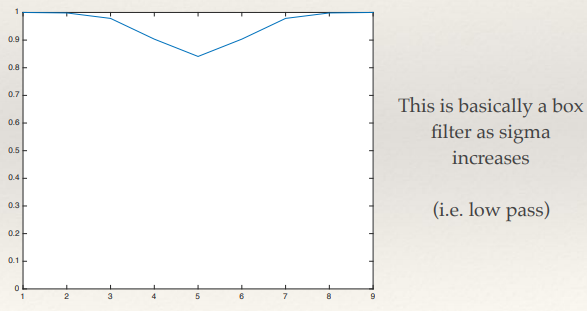

Template convolution via the Fourier transform

Allows for fast computation for template sizes >= 7x7

- Template convolution: *

- Fourier transform of the picture:

- Fourier transform of the template:

- Point by point multiplication (.×)

Note: it’s point by point! The equation is wrongly written in various

places.

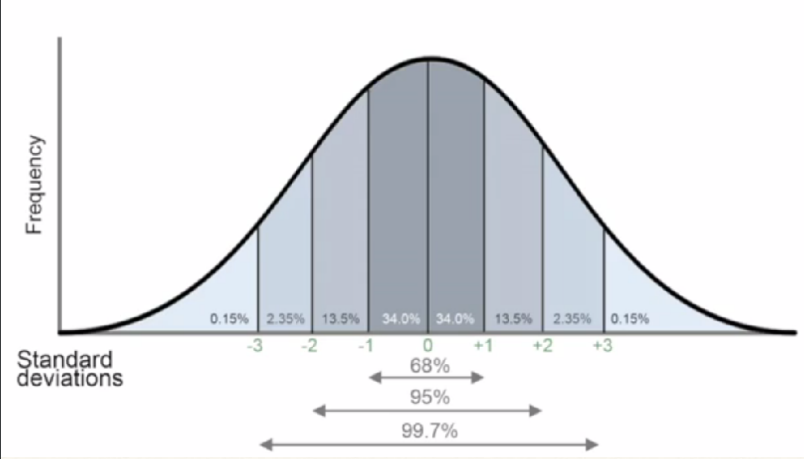

2D Gaussian function

- Used to calculate template values

- Note compromise between variance σ² and window

size

- Common choices

- 5x5, 1.0

- 7x7, 1.2

- 9x9, 1.4

2D Gaussian template

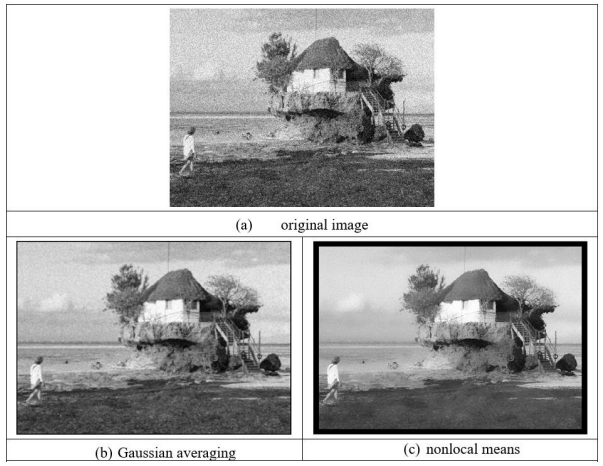

Applying Gaussian averaging

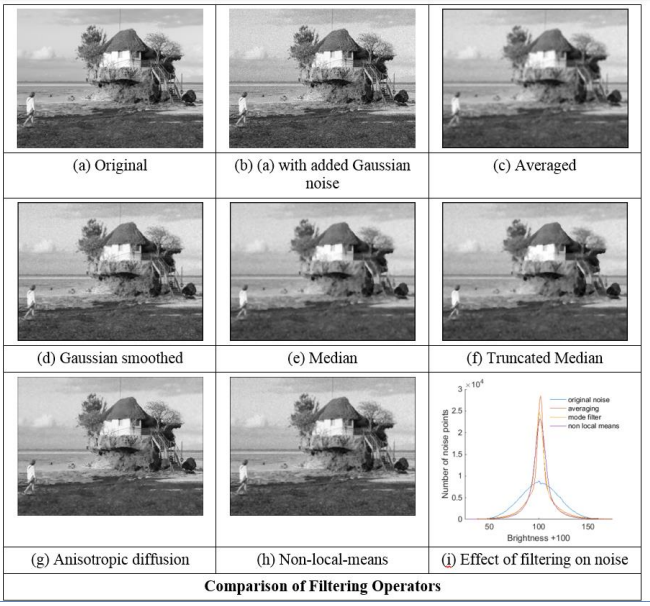

Finding the median from a 3x3 template

- Preserves edges

- Removes salt and pepper noise

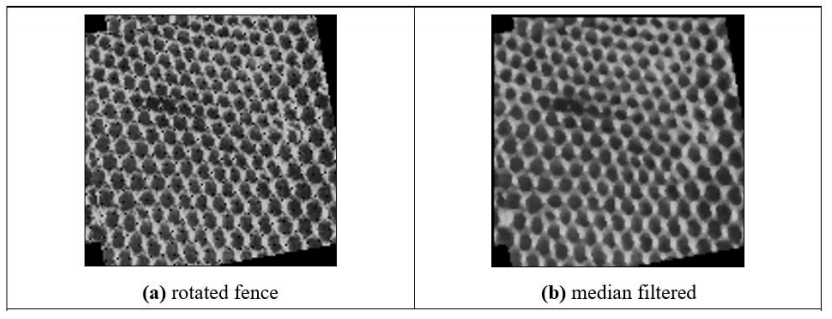

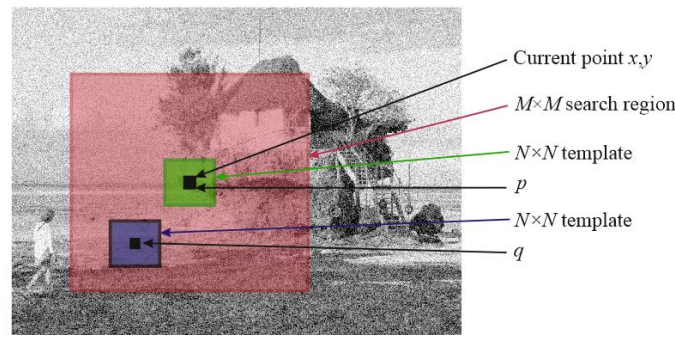

Newer stuff (non-examinable)

Averaging which preserves regions

Applying non local means

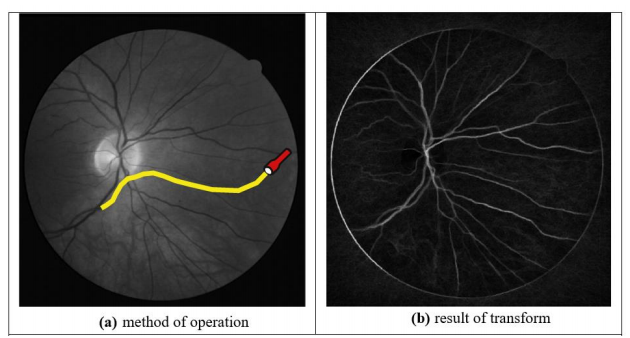

Even newer stuff: Image Ray Transform

Use analogy to light to find shapes, removing the remainder

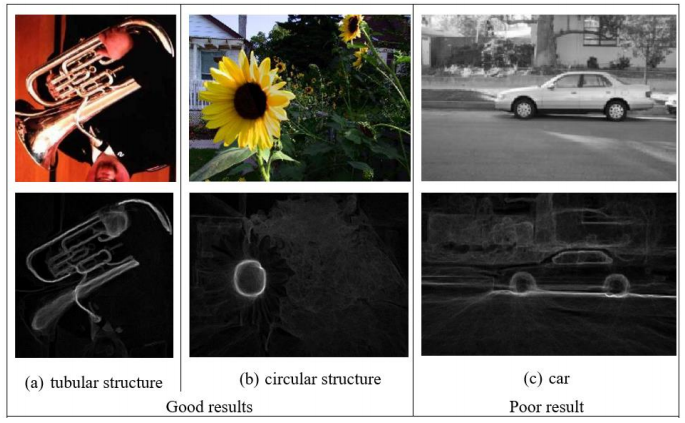

Applying Image Ray Transform

Comparing operators

Lecture 6: Edge Detection

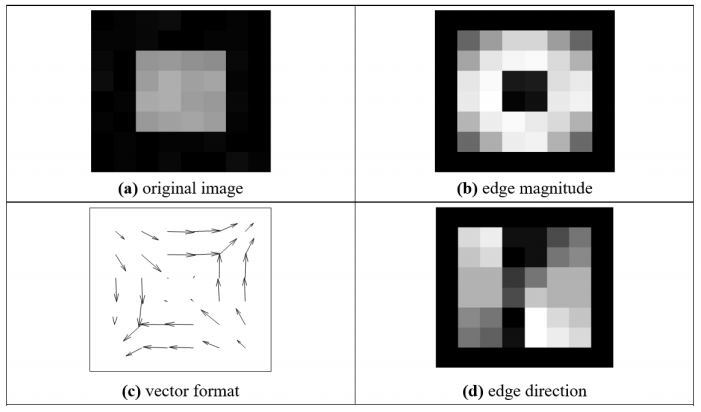

What are edges and how do we find them?

Edge detection

- Images have edges in them.

- It’s a very useful thing to find these edges in computer

vision.

- There are a few different operators for edge detection of an

image.

- It involves maths.

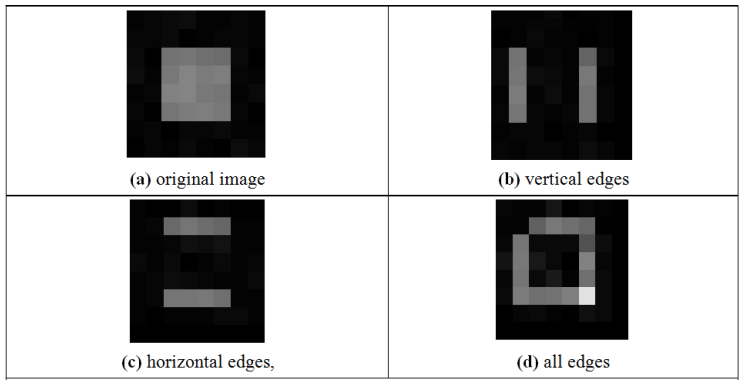

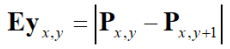

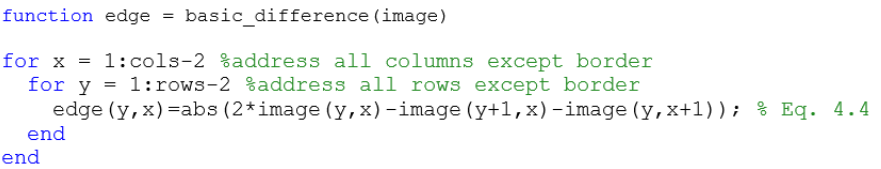

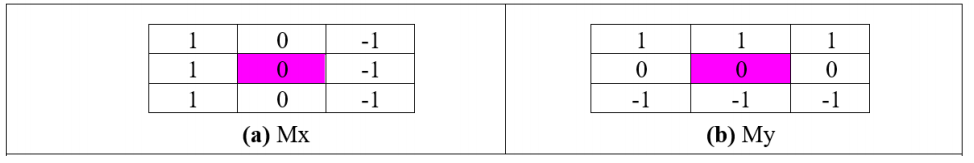

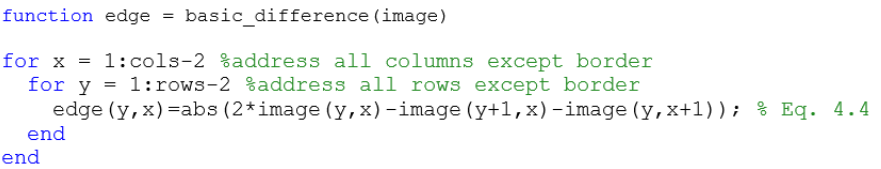

First order edge detection

- You can detect vertical and horizontal separately.

- Vertical edges, Ex

- Horizontal edges, Ey

- Vertical and horizontal edges:

- Template:

- Code:

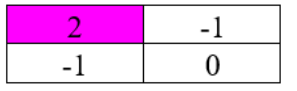

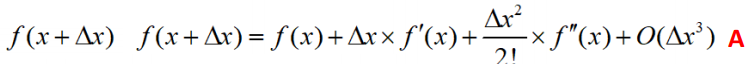

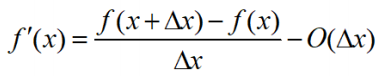

Edge detection maths

- Taylor expansion for

- By rearrangement

- Expand

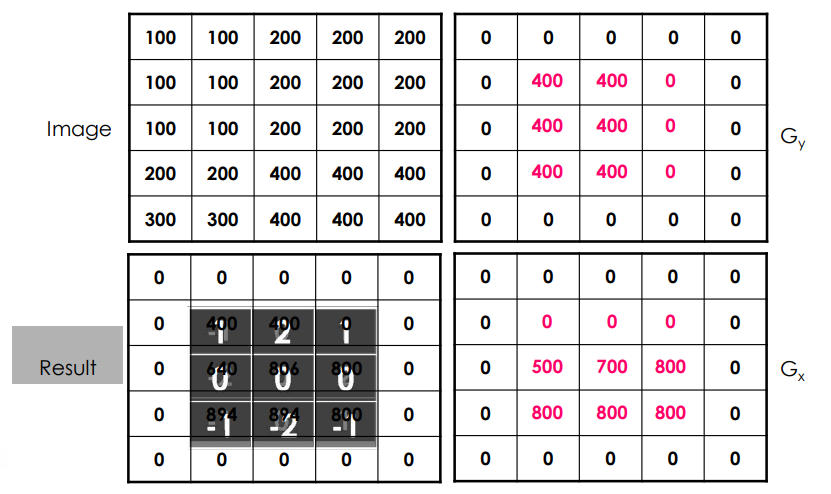

Templates for improved first order difference

Edge Detection in Vector Format

Templates for Prewitt operator

Applying the Prewitt Operator

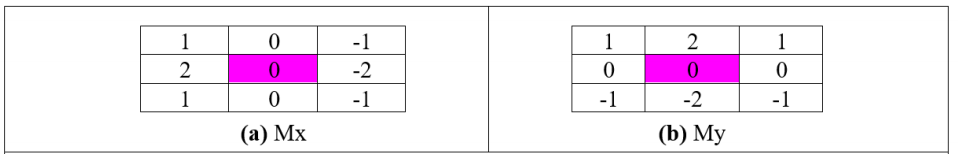

Templates for Sobel operator

- The two kernels used for the 3 x 3 sobel operator.

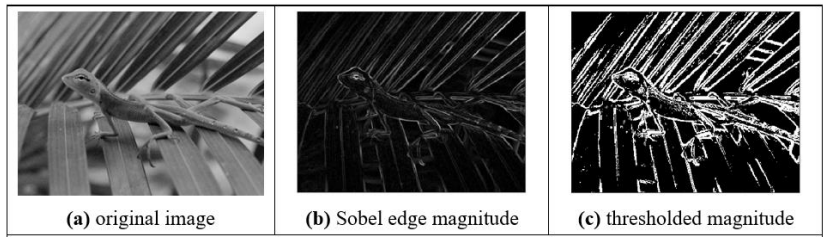

Applying Sobel operator

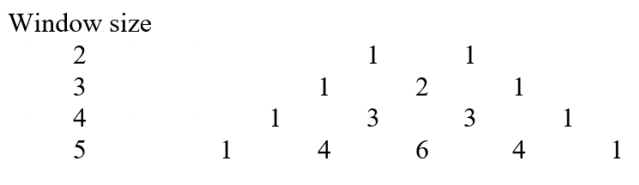

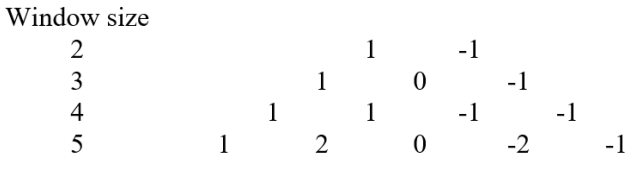

Generalising Sobel

- Averaging

- Differencing

Generalised Sobel (non examinable)

Lecture 7: Further Edge Detection

What better ways are there to detect edges?

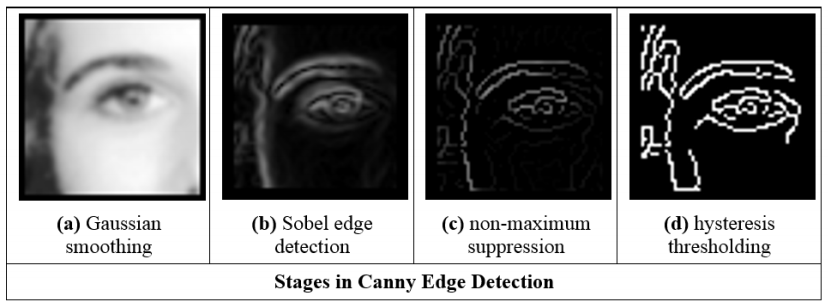

Canny edge detection operator

Formulated with three main objectives:

- Optimal detection with no spurious responses

- Good localisation with minimal distance between detected and true

edge position

- Single response to eliminate multiple responses to a single

edge.

Approximation

- Use Gaussian smoothing

- Use the Sobel operator (could combine with 1?)

- Use non-maximal suppression

- Threshold with hysteresis to connect edge points

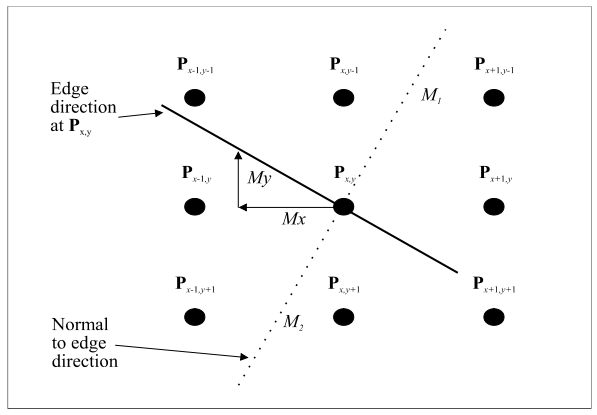

Interpolation in non-maximum suppression

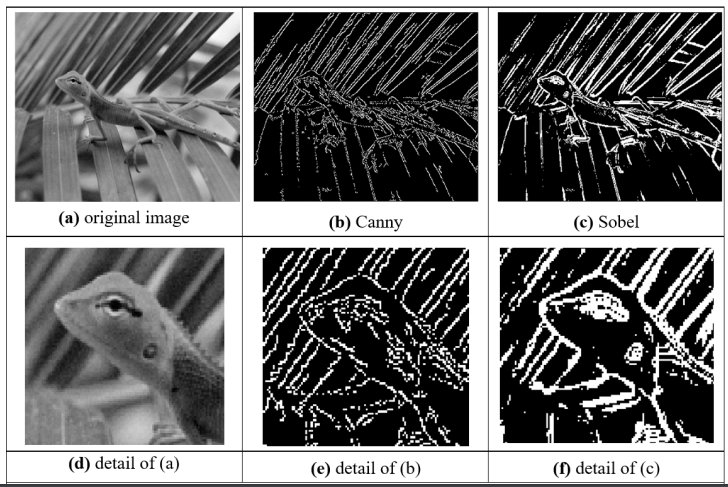

- Sobel edge detection is first order (equivalent to differentiation)

so gives us the change in x and the change in y, which we turn into a vector and use to find the

gradient direction (the dotted line in the diagram above), which will intuitively be at right angles to

the direction of the line (the non-dotted line above).

- In non-maximum suppression, we set all points that aren’t a

maximum along the line to zero (thereby thinning and sharpening the line).

- This is done by taking a line of points along the gradient

(dotted line) and setting all the points to zero, except those at a maximum.

- Interpolation is used to figure out the values of points

between pixels.

- Alternatively, we can round the direction to the nearest

horizontal/vertical/diagonal line

(Thanks Samuel Collins)

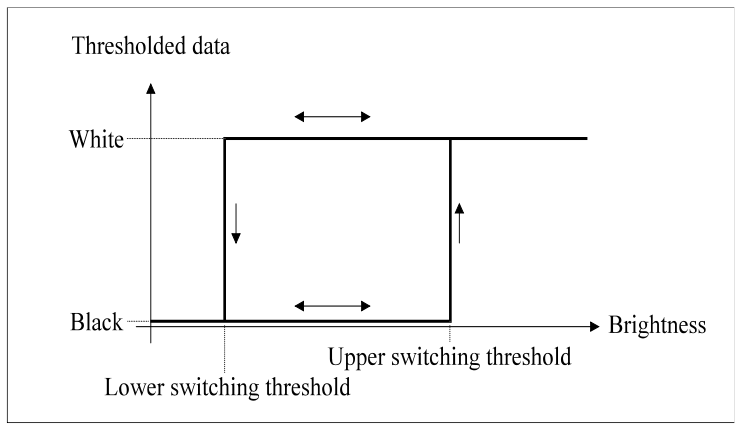

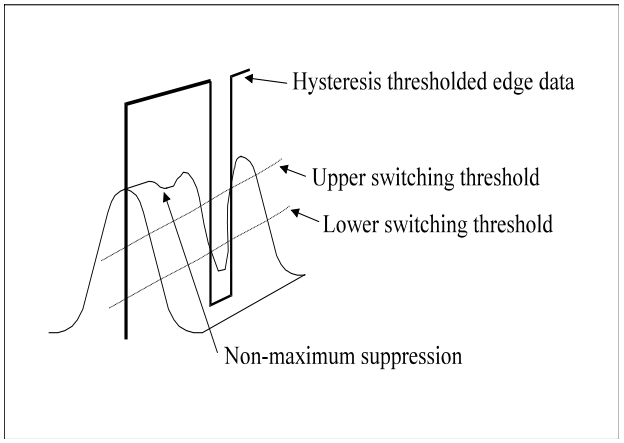

Hysteresis thresholding transfer function

To help better understand the function an example:

- The level is currently at black

- Brightness is increased until it reaches and passes the “upper

switching threshold”

- The level now changes to white

- If the brightness is decreased and goes below the “upper

switching threshold” it will not switch to black

- The brightness will have to decrease until it is below the

“lower switching threshold”, then it will switch back to black.

Action of non-maximum suppression and hysteresis

thresholding

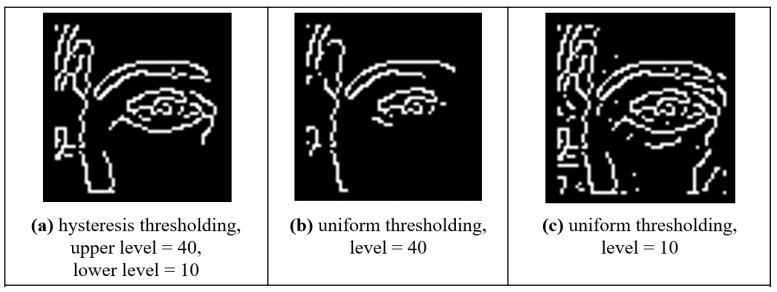

Hysteresis thresholding vs uniform thresholding

As you can see, hysteresis thresholding is arguably better.

Canny vs Sobel

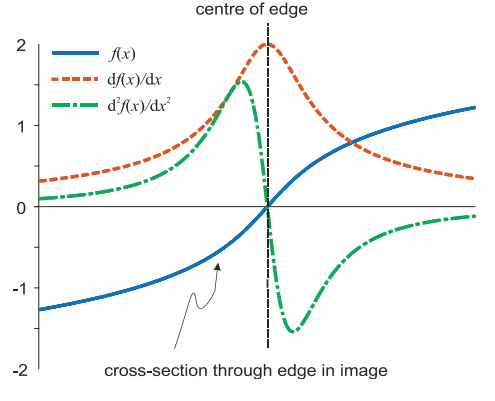

First and second order edge detection

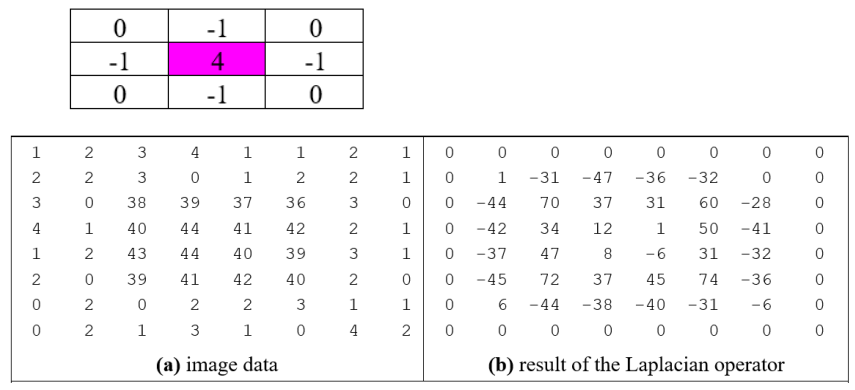

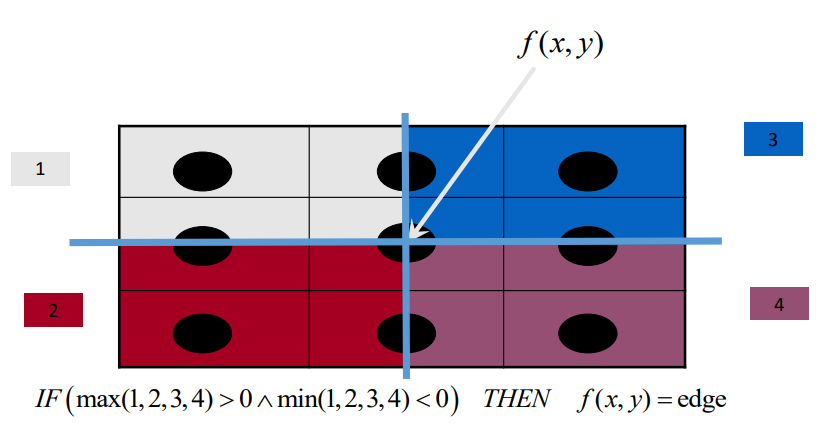

Edge detection via the Laplacian operator

Mathbelts on…

(I think this is essentially just rearranging an equation)

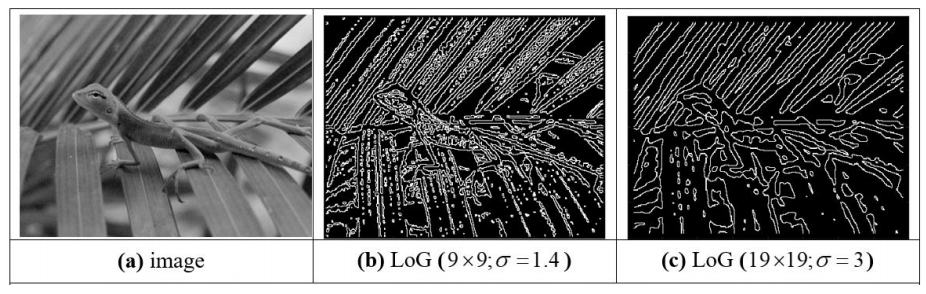

Shape of Laplacian of Gaussian operator

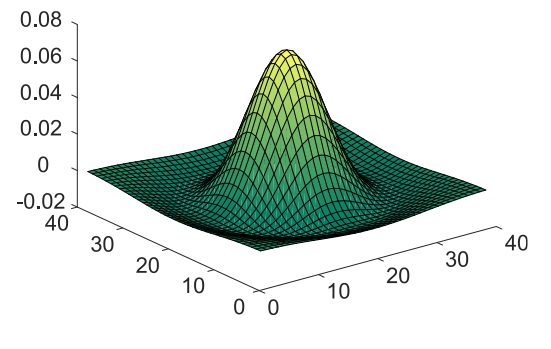

Zero crossing detection

- Basic - straight comparison

- Advanced:

- You could compare every point to try and find where a 1 switches to

0.

- So you average the 4 points in the corners

- Then you get 4 summations

- If one of those is positive and another is negative, then there

is a zero crossing.

Marr-Hildreth edge detection

- Application of LoG (or DoG) and zero-crossing detection.

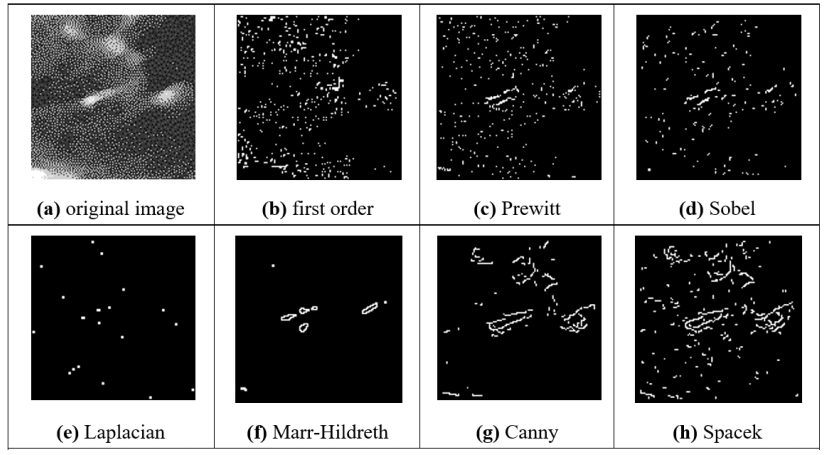

Comparison of edge detection operators

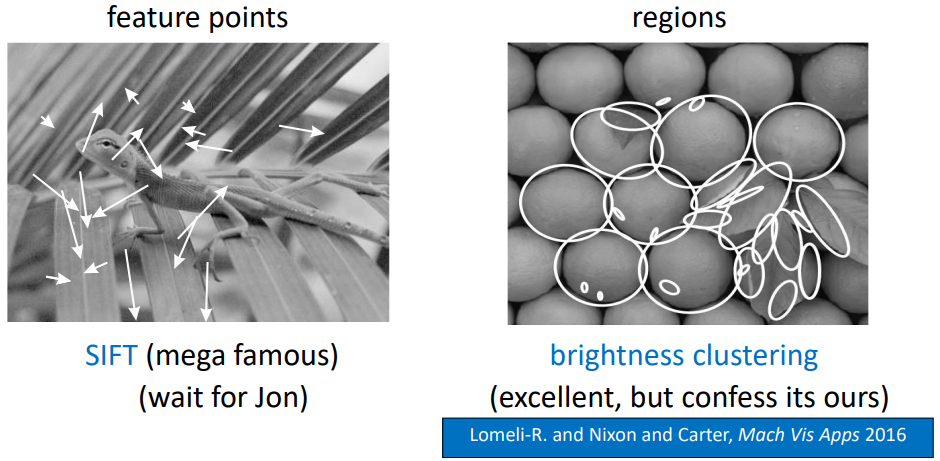

Newer stuff - interest detections (non-examinable)

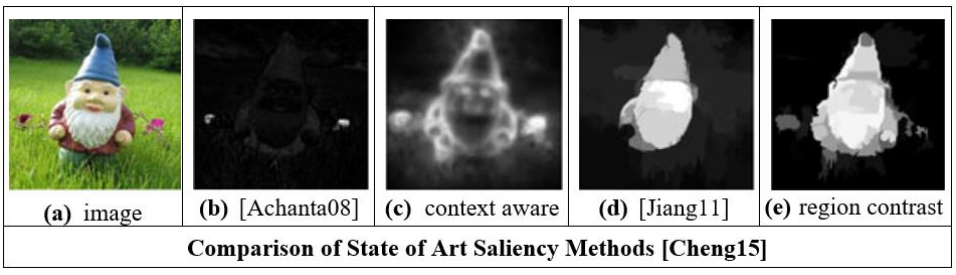

Newer stuff - saliency

Lecture 8: Finding Shapes

How can we group points to find shapes?

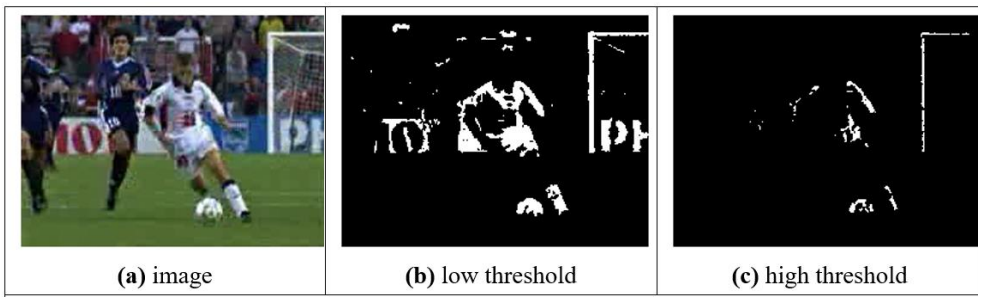

Feature extraction by thresholding

- Let’s try to extract features from this image using thresholding.

- Low threshold doesn't look that good.

- Neither does the high threshold.

- In conclusion: we need to identify shape!

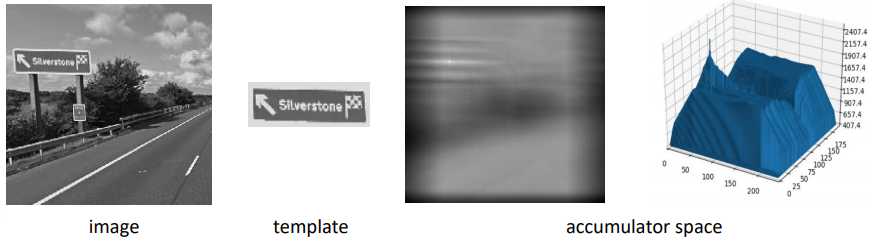

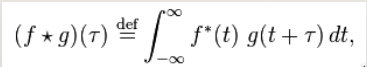

Template Matching

- This is a technique for finding small parts of an image which match

a template image.

- Intuitively simple

- Correlation and convolution

- Implementation via Fourier

- Relationship with matched filter, viz: optimality

- The template is the silverstone sign.

- This can be found in the image with template matching

- The accumulator space is a thing

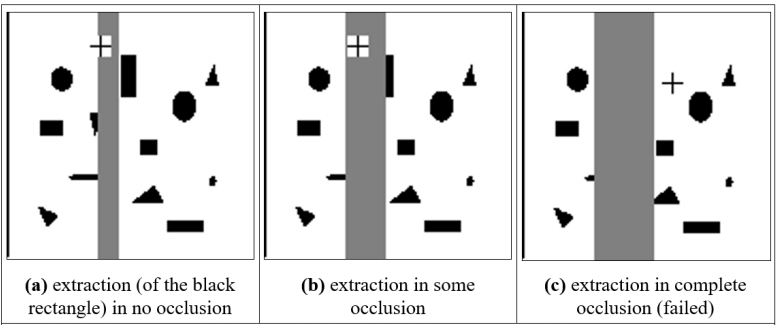

Template matching in:

In Noisy images

- Noise is an issue for template matching

- You want to create a matcher which is robust to noise

In Occluded Images

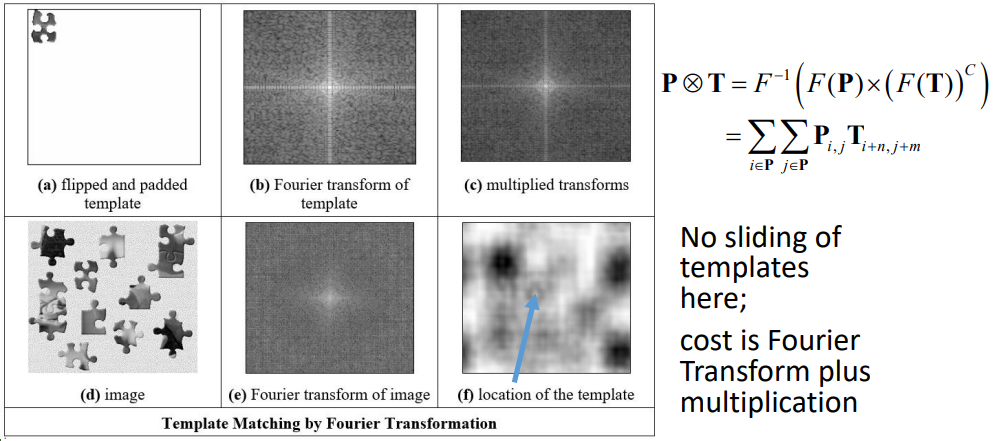

Encore, Monsieur Fourier! (???) (non examinable)

Applying Template Matching (non examinable)

Here, have this pretty low res image of a reaaaaally realistic weapon identification

system, which can detect weapons like guns and knives. (aka, this is an application of template

matching.)

Windows XP anyone?

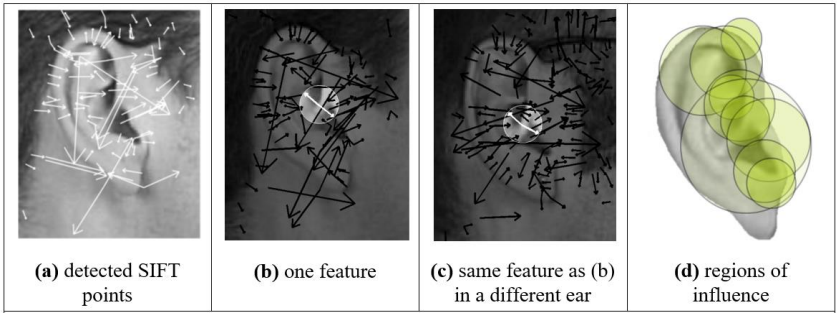

Applying SIFT in ear biometrics (non examinable)

- Have you ever wanted to identify people by their ears?

- Well now you can!

- Do this stuff with the arrows and circles and stuff

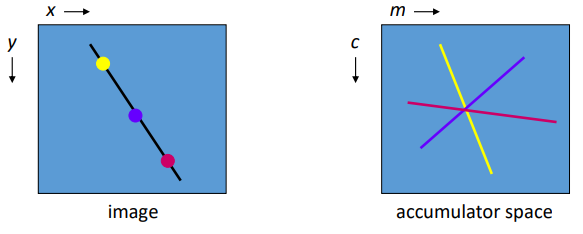

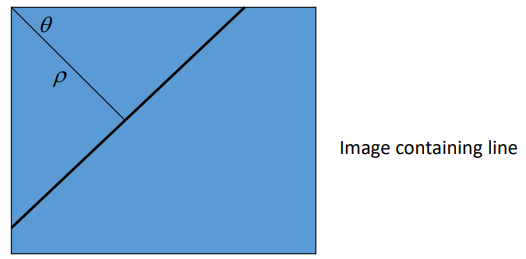

Hough Transform

- This is another feature extraction technique

- It can find imperfect instances of objects within a certain class of

shapes by a voting procedure

- The voting procedure is carried out in a parameter space, from which object

candidates are obtained as local maxima in a so-called accumulator space.

- [-Wiki]

- Performance equivalent to template matching, but

faster

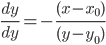

- A line is points x,y gradient is

m intercept is c.

- You can rearrange to get:

- In maths it’s the principle of duality

Go and read the following article about the Hough Transform: http://aishack.in/tutorials/hough-transform-basics/

It’s really good.

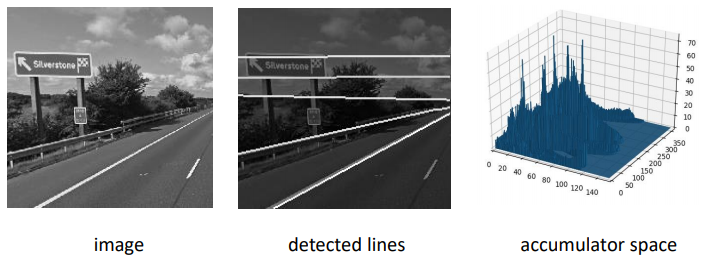

Applying the Hough transform for lines

Hough Transform for Lines … problems

- m, c tend to infinity

- Change the parameterisation

- Use foot of normal

- Gives polar HT for lines

Images and accumulator space of polar Hough

Transform

Applying Hough Transform

Lecture 9: Finding More Shapes

How can we go from conic sections to general

shapes?

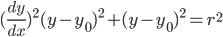

Hough Transform for Circles

- Again, it’s duality:

- Equation of a circle:

|

Points

|

Parameters

|

Radius

|

|

x,y

|

x0,y0

|

r

|

|

x0,y0

|

x,y

|

r

|

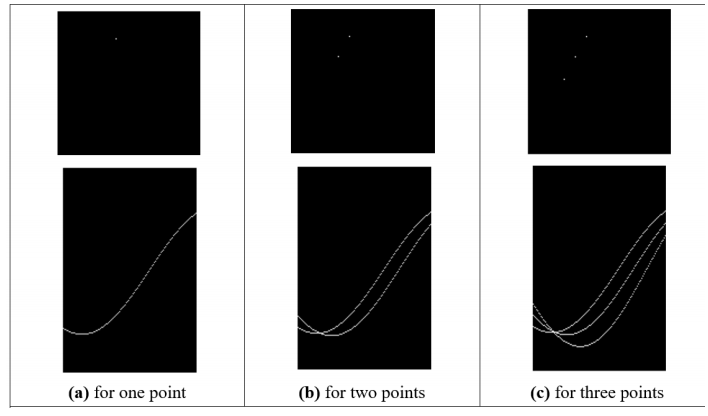

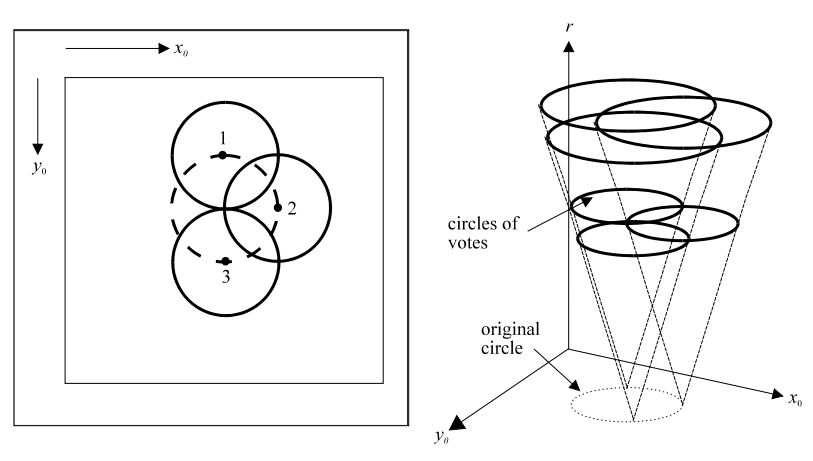

Circle Voting and Accumulator Space

Like before, but this time for circles.

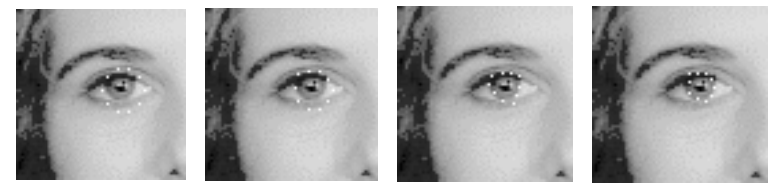

Speeding it up

- Now it’s a 3D accumulator, fast algorithms are

available

- E.g. by differentiation:

- SO edge gradient direction can be used, e.g. 2D accumulator

by:

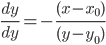

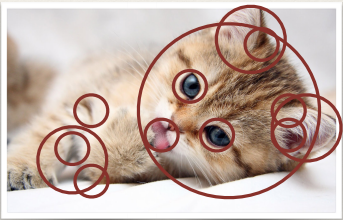

Applying the HT for circles

This can be used for detecting the shapes of an eye and iris.

Integrodifferential operator? (non examinable)

https://stackoverflow.com/questions/27058057/comparing-irises-images-with-opencv

Looks cool???

Arbitrary Shapes

- Use Generalised HT

- Form (discrete) look-up-table (R-table)

- Vote via look-up-table

- Orientation? Rotate R-table voting

- Scale? Scale R-table voting

- Inherent problems with discretisation (process of transferring

continuous functions to discrete)

R-table Construction

Pick a reference point in the image.

For each boundary point x, compute \Phi(x) - the gradient direction.

r is the distance from the reference point to the boundary point (radial distance),

and \alpha is the angle.

The table then stores each (r, \alpha) pair with the corresponding \Phi.

(Thanks Bradley Garrod)

Active Contours (non examinable)

- For unknown arbitrary shapes: extract by evolution

- Elastic band analogy

- Balloon analogy

- Discrete vs. continuous

- Volcanoes? 🌋

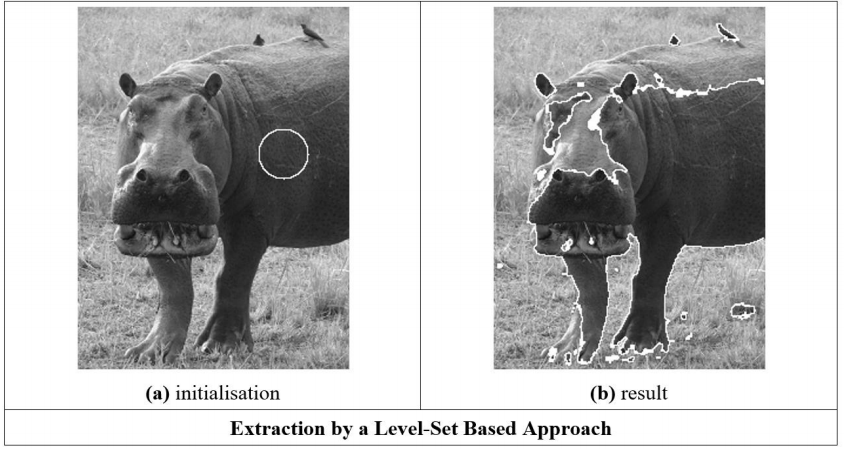

Geometric active contours (non examinable)

Couple’a hippos 🦛🦛

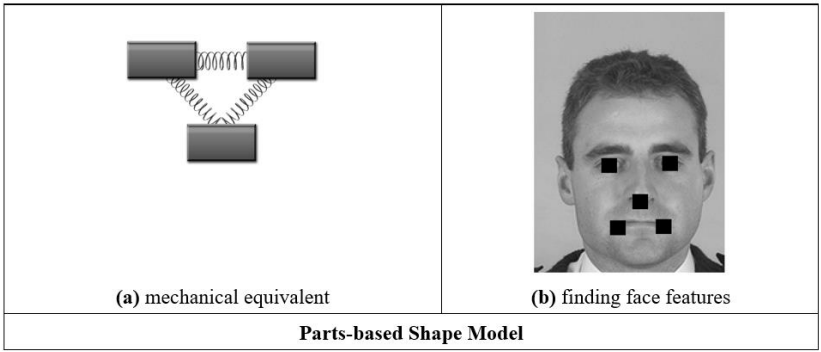

Parts-based shape modelling (non examinable)

This guy don’t look so good

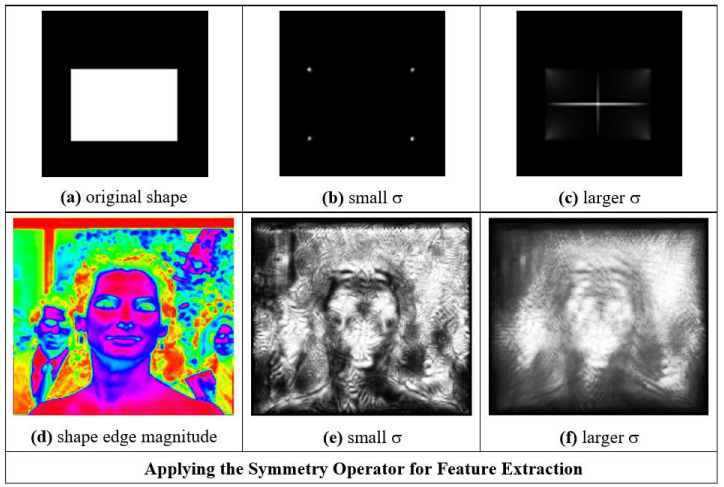

Symmetry yrtemmyS (non examinable)

SPooky

Lecture 10 Applications/Deep Learning

Where is feature extraction used these

days?

This lecture is mostly extra (non examinable) stuff, so I’m only gonna cover

the hand with bow slides. If you want a look then jump to the slides: http://comp3204.ecs.soton.ac.uk/mark/Lecture%2010.pdf

Where is computer vision used?

What you see depends on the viewpoint you take

Deep Learning

Conclusions

- Computer vision is changing the way we live

- Computer vision uses modern hardware and modern cameras to achieve

what we understand by “sight”

- No technique is a panacea (a solution for all difficulties): many

alternatives exist

- Computer vision is larger than this course

Part 2: Jon

Note: Jon has done some really good handout summaries which have basically done my

job for me, they can be found here: http://comp3204.ecs.soton.ac.uk/part2.html

Highly recommend reading these probably.

But I will still go through the slides and make notes of hand with bow

slides :)

Lecture 1: Building machines that see

Key terms in designing Computer Vision systems

- Robust

- Repeatable

- Invariant

- Constraints

- You want your system to be robust and repeatable.

- You design your system to be invariant.

- You apply constraints to

make it work.

Robustness

- The vision system must be robust to changes in its environment

- i.e. changes in lighting; angle or position of the camera;

etc

Repeatability

- Repeatability is a measure

of robustness

- Repeatability means that the system must work the same over and

over, regardless of environmental changes

Invariance

- Invariance to environmental factor helps

achieve robustness and repeatability

- Hardware and software can be designed to be invariant to certain

environmental changes

- e.g. you could design an algorithm to be invariant to

illumination changes…

Constraints

- Constraints are what you apply to the

hardware, software and wetware (human brains in the system) to make sure your computer vision system

works in a repeatable, robust fashion.

- e.g. you constrain the system by putting it in a box so there

can’t be any illumination changes

Constraints in Industrial Vision

Software Constraints

- Really simple, but incredibly

fast algorithms

- Hough Transform is popular, but note that it isn’t all

that robust without physical constraints

- Actually, the same is true of most algorithms/techniques used in

industrial vision

- Intelligent use of colour…

Colour-Spaces (non examinable?)

Even though there is no hand with bow for these slides, they seem

useful.

- There are many different ways of numerically representing colour

- A single representation of all possible colours is called a

colour-space

- It’s generally possible to convert to one colour-space to another by applying a mapping (in the form

of a set of equations or an algorithm)

RGB Colour-space

- Most Physical image sensors capture RGB

- By far the most widely known space

- RGB “couples” brightness (luminance) with each

channel, meaning that the illumination invariance is difficult

HSV Colour-space

- Hue, Saturation, Value is another colour-space

- Hue encodes the pure colour as an angle

- Saturation is how vibrant the colour is

- And the Value encodes brightness

- A simple way of achieving invariance to lightning is to use just the

H or H & S components

LAB Colour-space

Lab color space is more perceptually linear than other color spaces. Perceptually linear

means that a change of the same amount in a color value should produce a change of about the same visual

importance. It is important especially when you try to measure the perceptual difference of two colors.

(Source)

- Can also be made invariant to lighting by only using

the AB components.

Physical Constraints

- Industrial vision is usually solved by applying simple computer

vision algorithms, and lots of physical constraints:

- Environment: lighting, enclosure, mounting

- Acquisition hardware: expensive camera, optics, filters

Vision in the wild

- So, what about vision systems in the wild, like ANPR (Automatic

Number-Plate Recognition) cameras, or recognition apps for mobile phones?

- Apply as many hardware and wetware constraints as possible, and

let the software take up the slack

- Colour information often less important than luminance

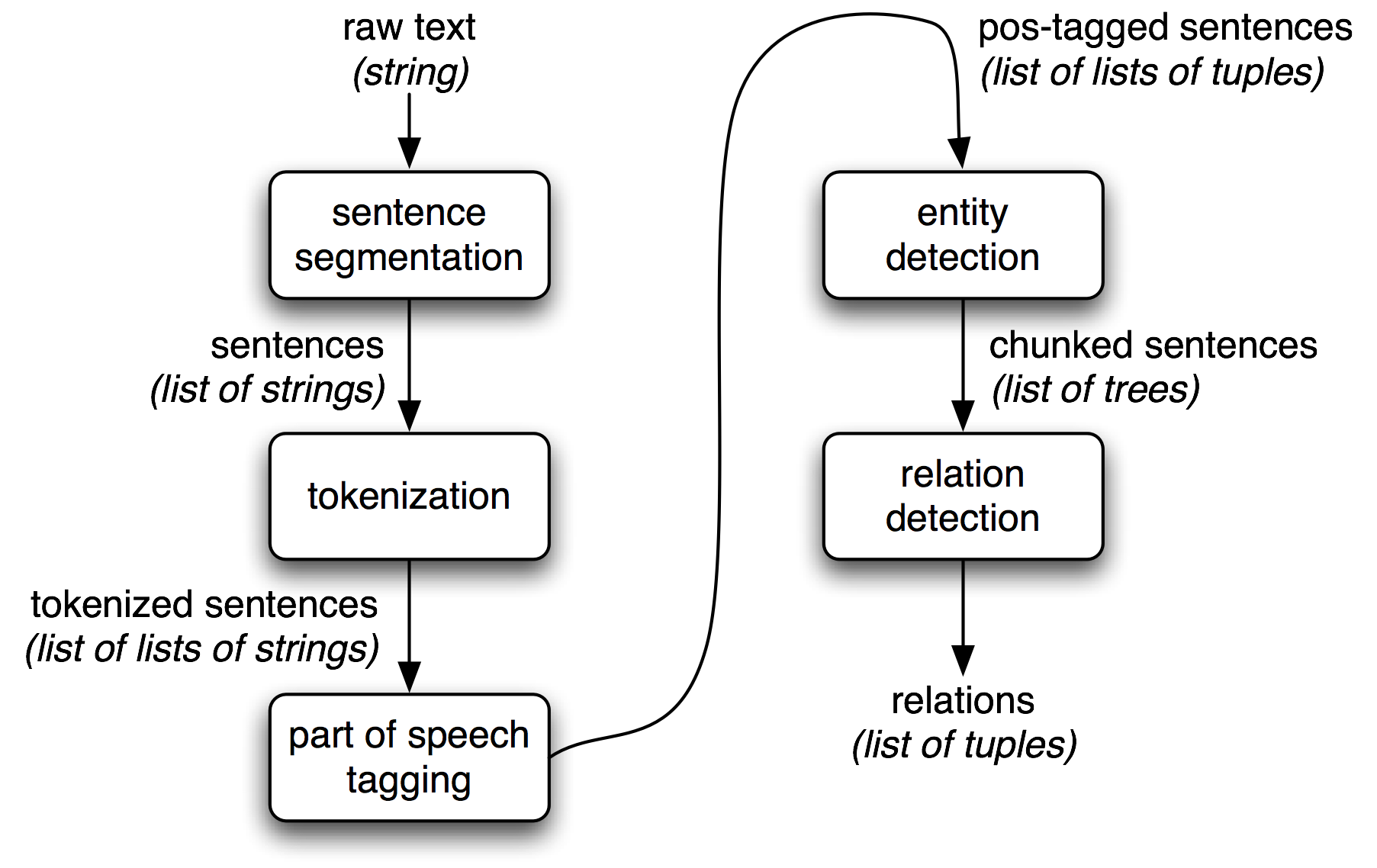

Lecture 2: Machine learning for pattern recognition

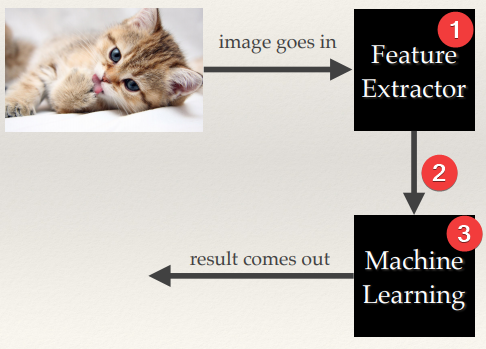

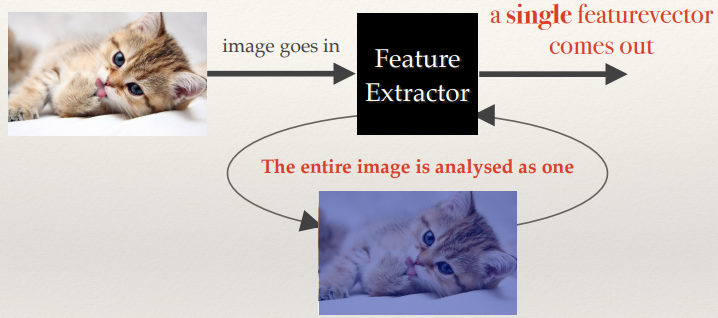

Feature Spaces

Many computer vision applications involving machine learning take the following

form

- This is where cool image processing happens

- Feature extractors make feature vectors from images

- Machine learning system uses featurevectors to make intelligent decisions

Key terminology

- Featurevector: a mathematical vector

- Just a list of (usually Real) numbers

- Has a fixed number of elements in it

- The number of elements is the dimensionality

of the vector

- Represents a point in a featurespace or equally a direction in the featurespace

- The dimensionality of a featurespace is the dimensionality of every vector within it

- Vectors of differing dimensionality can’t exist in the

same featurespace

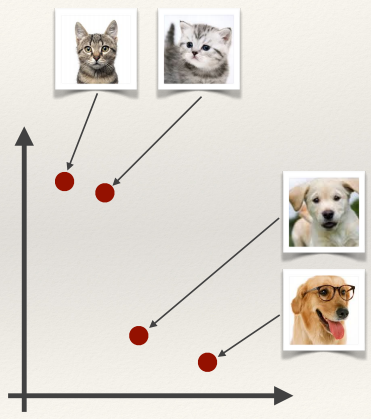

Density and Similarity

Distance in featurespace

- Feature extractors are often defined so that they produce vectors that are

close together for similar inputs

- Closeness of two vectors can be computed in the feature space by

measuring the distance between the vectors.

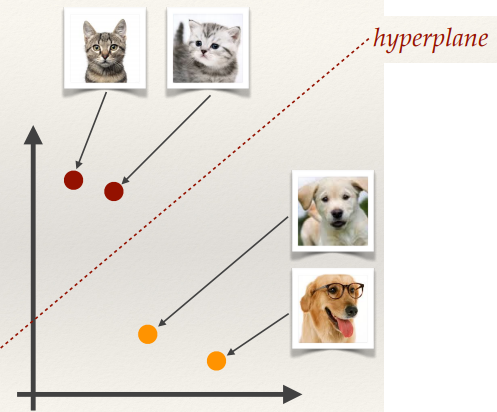

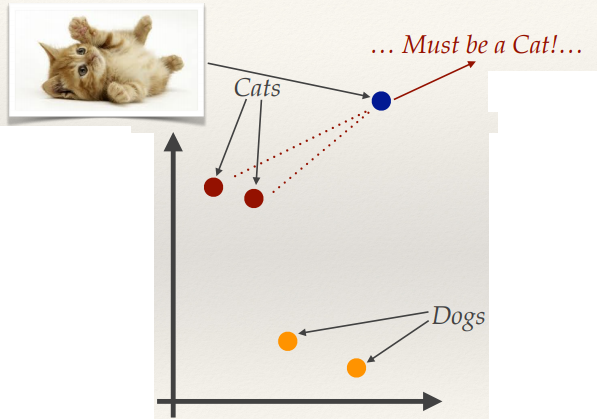

Cats are a close distance apart, and are further away from the cluster of dogs in

this feature space.

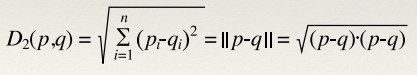

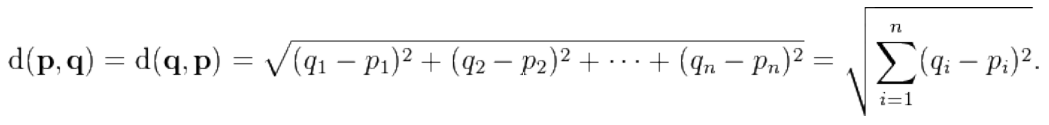

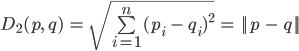

Euclidean distance (L2 distance)

- L2 distance is the most intuitive distance…

- The straight-line distance between two points

- Computed via an extension of Pythagoras theorem to n dimensions:

Equation

The straight-line; “Euclidean distance”

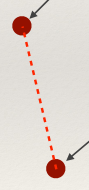

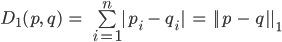

Manhattan/Taxicab distance (L1 distance)

- L1 distance is computed along paths parallel to the axes of the

space:

Equation

Essentially just like you are taking a taxi cab around the grid like streets of

manhattan

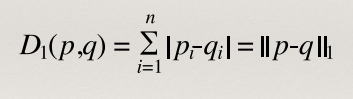

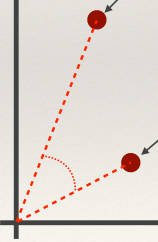

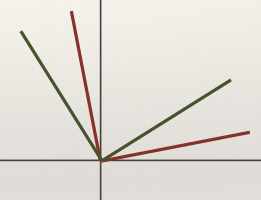

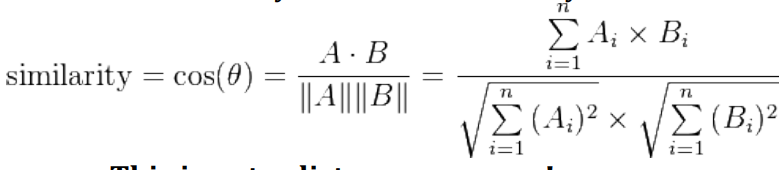

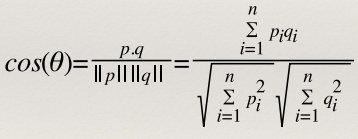

Cosine Similarity

- Cosine similarity measures the cosine of the angle between two

vectors

- Useful if you don’t care about the relative length of the

vectors

Equation

The angle between the two points from the origin

Choosing good featurevector representations for machine learning

- Choose features which allow to distinguish objects or classes of

interest

- Similar within classes

- Different between classes

- Keep number of features small

- Machine-learning can ge t more difficult as dimensionality of

featurespace gets large

Supervised Machine Learning: Classification

- Classification is the process of assigning a

class label to an object (typically represented by a

vector in a feature space).

- A supervised machine-learning algorithm

uses a set of pre-labelled training data to

learn how to assign class labels to vectors (and the corresponding objects).

- A binary classifier

only has two classes

- A multiclass classifier has

many classes

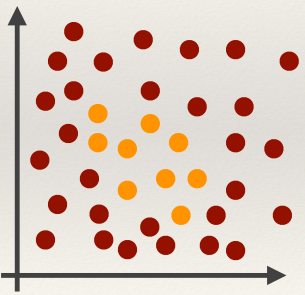

Linear Classifiers

Linear classifiers try to learn a hyperplane that

separates two classes in featurespace with minimum error

There can be lots of hyperplanes to choose from; differing classification algorithms

apply differing constraints when learning the classifier.

To classify a new image, you just need to check what side of the hyperplane it is

on.

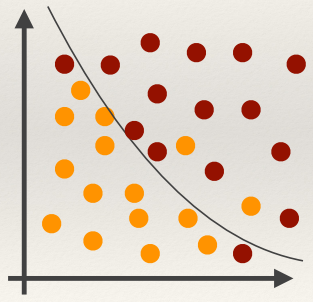

Non-linear binary classifiers

Linear classifiers work best when the data is linearly separable.

Like this:

But what if the data is like this:

There is no hope for a linear classifier! 😭

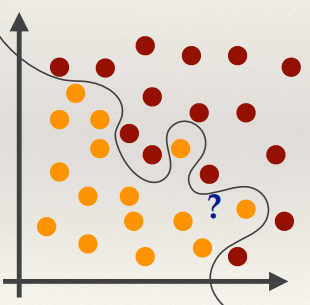

Non-linear binary classifiers, such as Kernel support Vector

Machines learn non-linear decision boundaries. (basically a curved graph

separates the data instead of a straight one)

However, you have to be careful, you might lose generality by overfitting:

What class would the blue question mark actually belong to?

Multiclass classifiers: KNN

Assign class of unknown point based on majority class of closest K

neighbours in featurespace.

KNN Problems (non examinable?)

- Computationally expensive if there are:

- Lots of training examples

- Many dimensions

Unsupervised Machine Learning: Clustering

- Clustering aims to group data without any prior knowledge of what

the groups should look like or contain.

- In terms of featurevectors, items with

similar vectors should be grouped together by a clustering operation.

- Some clustering operations create overlapping groups; for now we’re only

interested in disjoint clustering methods that assign an item to a single group.

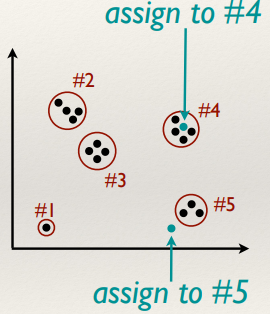

K-Means Clustering

StatQuest:

K-means clustering (good video to explain this!!!!!!!!!!!!!!!!)

- K-Means is a classic featurespace clustering algorithm for grouping data in

K groups with each group represented by a centroid:

- Pseudo code:

- The value of K is chosen

- K initial cluster centres are chosen

- The following process is performed iteratively until the

centroids don’t move between iterations:

- Each point is assigned to its closest centroid

- The centroid is recomputer as the mean of all the points

assigned to it. If the centroid has no points assigned it is randomly re-initialised to a new

point.

- The final clusters are created by assigning all points to

their nearest centroid.

Lecture 3: Covariance and Principal Components

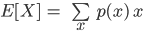

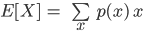

Random Variables and Expected Values

- Variable that takes on different values due to chance

- The expected value (denoted E[X]) is the most likely value a

random variable will take.

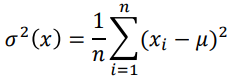

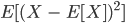

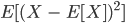

Variance

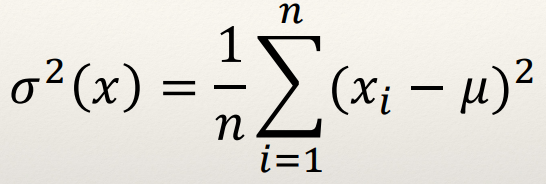

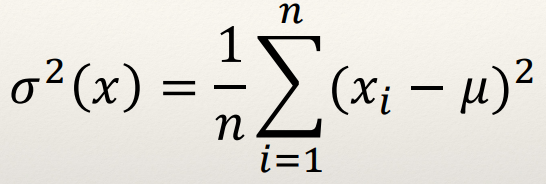

- Variance (σ2) is the

mean squared difference from the mean (μ).

- It’s a measure of how spread-out the data is.

Equation

Technically it’s E[(X - E[X])2]

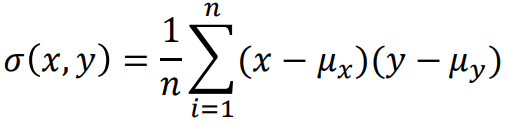

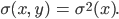

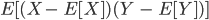

Covariance

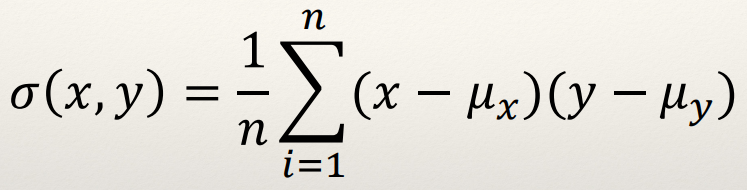

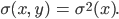

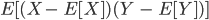

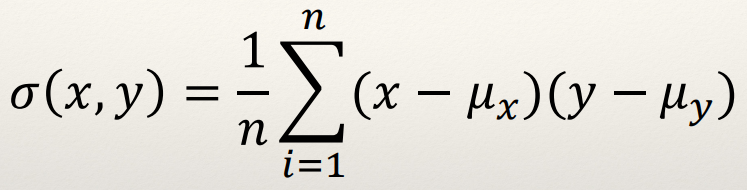

- Covariance (σ(x,y)) measures how two variables change

together

- The variance is the covariance when the two variables are the same

(σ(x,y)=σ2(x))

- A covariance of 0 means the variables are uncorrelated

(Covariance is related to Correlation though…)

Equation

Technically it’s E[ (x - E[x]) (y - E[y]) ]

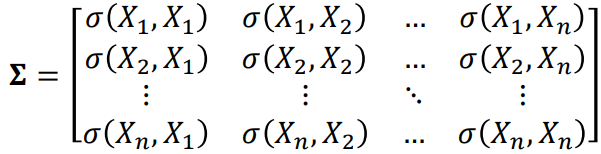

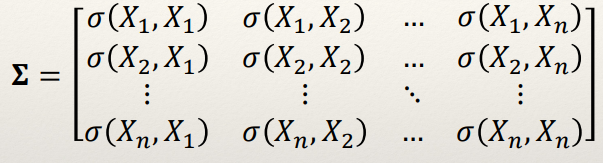

Covariance Matrix

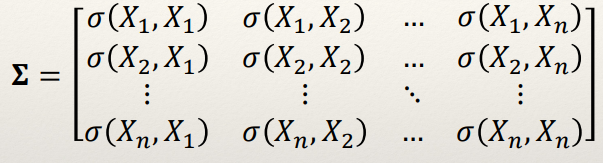

- A covariance matrix encodes how all possible pairs of dimensions in

an n-dimensional dataset vary together.

The covariance matrix is a square symmetric matrix, as you

can see by the symmetry in the example above.

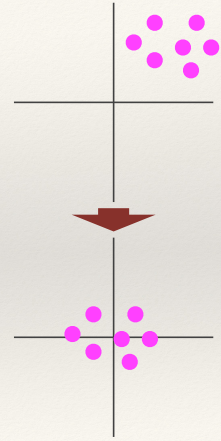

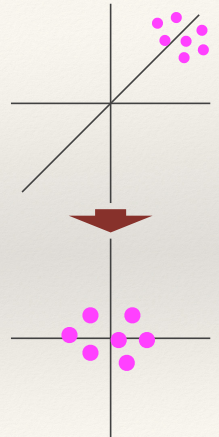

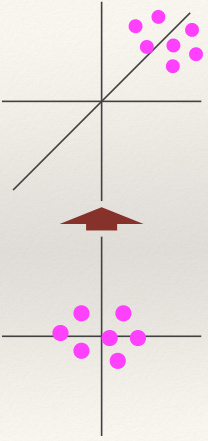

Mean Centring

- Mean Centring is the process of computing the mean (across each

dimension independently) of a set of vectors, and then subtracting the mean vector from every

vector in the set.

- All the vectors will be translated so their average positions is the

origin.

From top image to bottom image; mean centered around the origin.

Covariance matrix again

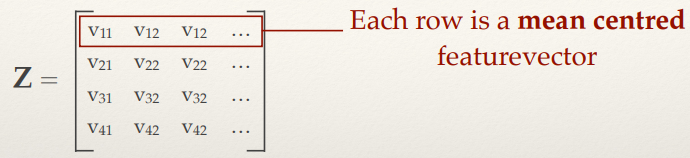

So then this means that

The covariance matrix is directly proportional to Z transposed, multiplied by Z,

where Z is the matrix formed by the mean-centred vectors (each row of matrix Z is one mean-centred vector) -

Hope this helps :) - Lorena

Principal axes of variation

Basis

- A basis is a set of n linearly independent (remember all the way back to first year

Foundations guys!) vectors in an n dimensional space

- The vectors are orthogonal (all right-angles to each other)

- They form a “coordinate system”

- There are an infinite number of possible bases

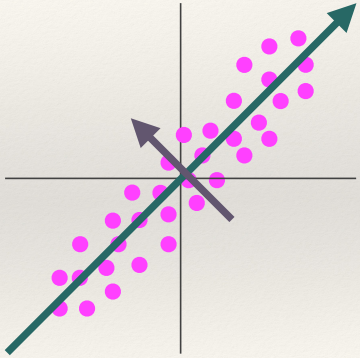

The first principal axis

- For a given set of n dimensional

data, the first principal axis (or just principal axis) is the vector that describes the direction of

greatest variance.

- (Big turquoise arrow pointing

up and to the right in the image below)

The second principal axis

- The second principal axis is a vector

in the direction of the greatest variance orthogonal (perpendicular) to the first major

axis.

- (Small lilac arrow pointing up

to the left in the image below)

The third principal axis

- In a space with 3 or more dimensions, the third principal axis is

the direction of greatest variance orthogonal to both the first and second principal axes.

- The fourth… and so on…

- The set of n principal axes of an n dimensional space are a basis.

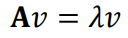

Eigenvectors and Eigenvalues

Important Equation

- A = n x n square matrix

- υ = n dimensional vector,

known as the eigenvector

- λ = scalar values, known as an eigenvalue

Properties

- There are at most n eigenvector-eigenvalue pairs.

- If A is symmetric, then the set of eigenvectors is orthogonal

- If A is a covariance matrix, then the eigenvectors are the principal axes

- The eigenvalues are proportional to the variance of the data along

each eigenvector

- The eigenvector corresponding to the largest

eigenvalue is the first principal component.

Finding Values

- For small matrices (n≤4) there are algebraic solutions to finding all the eigenvector-eigenvalues pairs

- For larger matrices, numerical solutions to the Eigendecomposition must be sought.

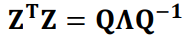

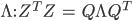

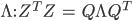

Eigendecomposition

Eigendecomposition takes a matrix and represents it in terms of its eigenvalues and

eigenvectors.

- Columns of Q are the

eigenvectors

- Diagonal eigenvalue matrix (Λii = λi)

- If A is real

symmetric (i.e. a covariance matrix), then Q-1 = QT (i.e. eigenvectors are orthogonal), so:

Summary

The Eigendecomposition of a covariance matrix A:

Gives you the principal axes and their relative magnitudes.

Ordering

- Standard Eigendecomposition implementations will order the

eigenvectors (columns of Q) such that the

eigenvalues (in the diagonal of Λ) are sorted

in order of decreasing value.

- Some solvers are optimised to only find the top k eigenvalues and corresponding eigenvectors, rather than

all of them.

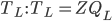

Principal Component Analysis

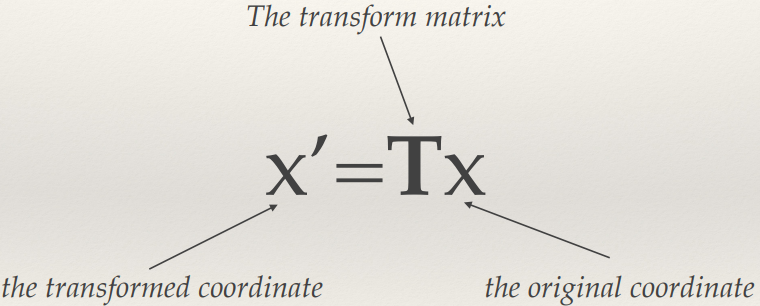

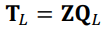

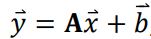

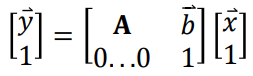

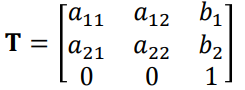

Linear Transform

- A linear transform W projects data from one space into another:

- Original data stored in the rows of Z

- T can have fewer dimensions than Z

Linear Transforms

- The effects of a linear transform can be reversed if W is invertible:

- A lossy process if the dimensionality of the spaces is

different

PCA

- PCA is an Orthogonal Linear Transform that maps data from its original space to a space defined by the principal axes of the

data.

- The transform matrix W is just the

eigenvector matrix Q from the Eigendecomposition of

the covariance matrix of the data.

- Dimensionality reduction can be achieved by removing the eigenvectors with

low eigenvalues from Q (i.e. keeping the first L columns of Q assuming the

eigenvectors are sorted by decreasing eigenvalue).

PCA Algorithm

- Mean-centre the data vectors

- Form the vectors into a matrix Z, such that each row corresponds to a vector

- Perform the Eigendecomposition of the matrix ZTZ, to recover the eigen matrix Q and diagonal eigenvalue matrix Λ: ZTZ = QΛQT

- Sort the columns of Q and corresponding diagonal values of Λ

so that the eigenvalues are decreasing

- Select the L largest eigenvectors of Q (the first L columns) to create the transform matrix

QL

- Project the original vectors into a lower dimensional space,

TL: TL = ZQL

Eigenfaces

Spooky 0_0

Making Invariant

- Require (almost) the same object pose across images (i.e. full

frontal faces)

- Align (rotate, scale and translate) the images so that a common

feature is in the same place (i.e. the eyes in a set of face images)

- Make all the aligned images the same size

- (optional) Normalise (or perhaps histogram equalise) the images so

they are invariant to global intensity changes

Problems

- If the images are 100x200 pixels, the vector has 20000

dimensions

- That’s not really practical…

- Also, the vectors are still highly susceptible to imaging noise

and variations due to slight misalignments.

Potential Solution… Apply PCA

- PCA can be used to reduce the dimensionality

- Smaller number of dimensions allows greater robustness to noise

and mis-alignment

- There are fewer degrees of freedom, so noise/misalignment has

much less effect

- And the dominant features are captured

- Fewer dimensions makes applying machine-learning much more

tractable

Lecture 4: Types of image feature and segmentation

Image Feature Morphology

- There are 4 main ways of extracting features from an image:

- Global

- Grid/Block-based

- Region based

- Local

Global Features

- A Global Feature is extracted

from the contents of an entire image.

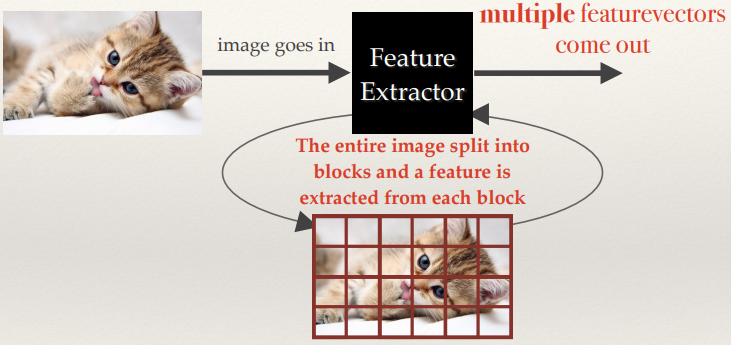

Grid or Block-based Features

- Multiple features are extracted; one per block

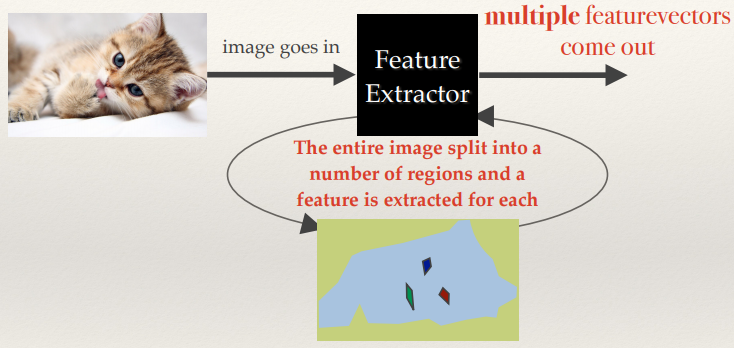

Region-based Features

- Multiple features are extracted; one per region

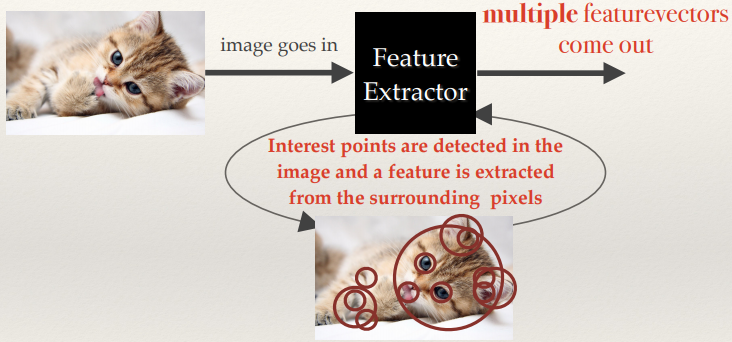

Local Features

- Multiple features are extracted; one per local interest point

Global Features

Image Histograms

- Simple global features can be computed from the average of the

colour bands of the image’s histogram.

- This wasn’t particularly robust, and couldn’t deal

well with multiple colours in the image.

- A more common approach to computing a global image description is to

compute a histogram of the pixel values.

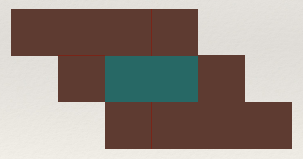

Joint-colour histogram

- A joint colour histogram measures the number of times each colour

appears in an image.

- These are different to histograms in image editing programs with

compute separate histograms for each channel.

- The colour space is quantised into bins, and we accumulate the number of pixels in each bin.

- Technically, it’s a multidimensional histogram, but we

flatten it (unwrap) to make it a feature vector.

- Normalisation (i.e. by the number of pixels) allows the histogram

to be invariant to image size.

- Choice of colour-space can make it invariant to uniform lighting

changes (e.g. H-S histogram)

- Invariant to rotation

- But vastly different images can have the same histogram! Like these

two below:

- Cool, right? Even though it’s, uh, bad; not invariant

to this kinda problem lol

Image Segmentation

What is segmentation?

- The first part in the process of creating region-based

descriptions…

- The process of partitioning the image into sets of pixels often called segments.

- Pixels within a segment typically share certain visual

characteristics.

Global Binary Thresholding

- Thresholding is the simplest form of segmentation

- Turns grey level images into binary (2 segments) by assigning

all pixels with a value less than a predetermined threshold to one segment, and all other pixels to the

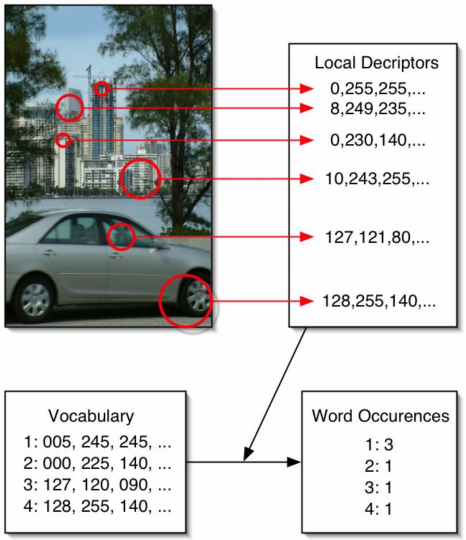

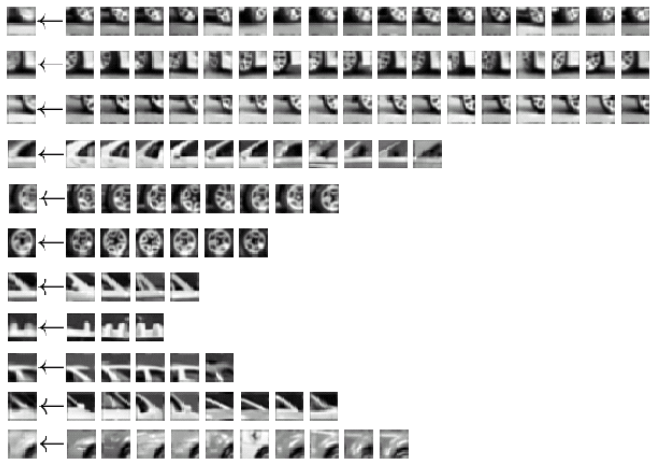

other.

- Really fast

- Required a manually set static threshold

- Not robust to lightning changes

- Can work well in applications with lots of physical constraints

(lighting control and / or high-contrast objects)

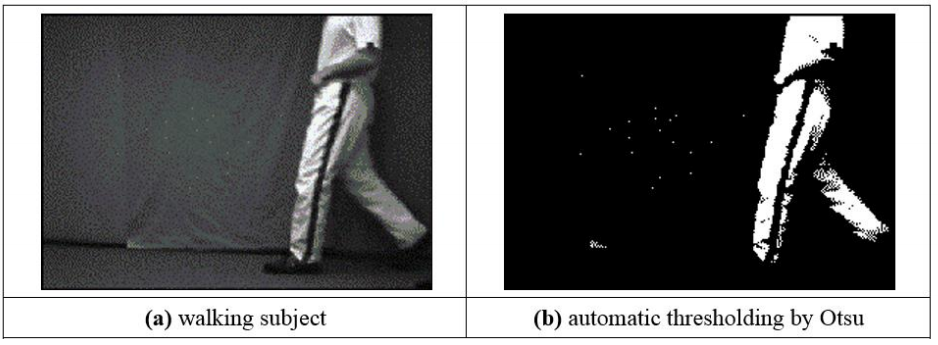

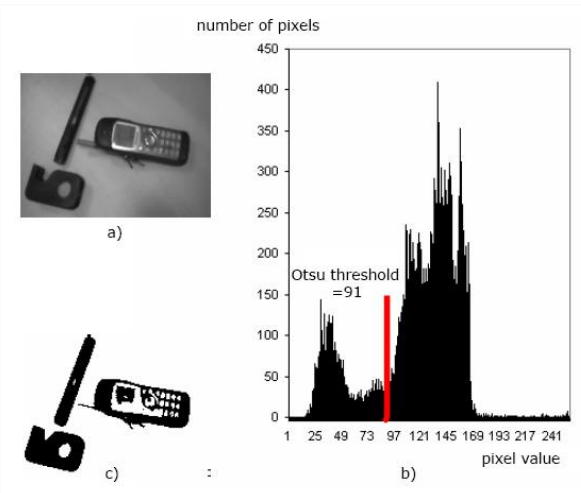

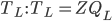

Otsu’s thresholding method

- Otsu’s method (named after Nobuyuki Otsu) provides a way to

automatically find the threshold.

- Assume there are two classes (i.e. foreground &

background)

- The histogram must have two peaks

- Exhaustively search for the threshold that maximises interclass

variance.

More

detailed look over at wikipedia!

Adaptive / local thresholding

- Local (or Adaptive) thresholding operators compute a different

threshold value for every pixel in an image based on the surrounding pixels.

- Usually a square or rectangular window around the current pixel

is used to define the neighbours

Mean adaptive thresholding

Set the current pixel to 0 if its value is less than the mean of its neighbours plus a constant value; otherwise set to 1.

- Size of window

- Constant offset value

- Good invariance to uneven lighting / contrast

- But…

- Computationally expensive (at least compared to global

methods)

- Can be difficult to choose the window size

- If the object being imaged can appear at different distance to

the camera then it could break…

Segmentation with K-Means

- K-Means clustering also provides a simple method for performing

segmentation:

- Cluster the colour vectors (i.e. [r, g, b]) of all the pixels, and then assign each pixel to a segment based

on the closest cluster centroid.

- Works best if the colour-space and distance function are

compatible

- E.g. Lab colour-space is designed so that Euclidean distances

are proportional to perceptual colour differences

- Naïve approach to segmentation using k-means doesn’t

attempt to preserve continuity of segments

- Might end up with single pixels assigned to a segment, far away

from other pixels in that segment.

- Can also encode spatial position in the vectors being clustered:

[r, g, b, x, y]

- Normalise x and y by the width and height of the image to take

away the effect of different images sizes

- Scale x and y so they have more or less effect than the colour

components

Advanced segmentation techniques

- Lots of ongoing research into better segmentation techniques:

- Techniques that can automatically determine the number of

segments

- “Semantic segmentation” techniques that try to

create segments that fit the objects in the scene based on training examples

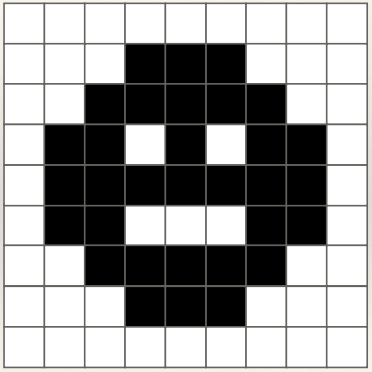

Connected Components

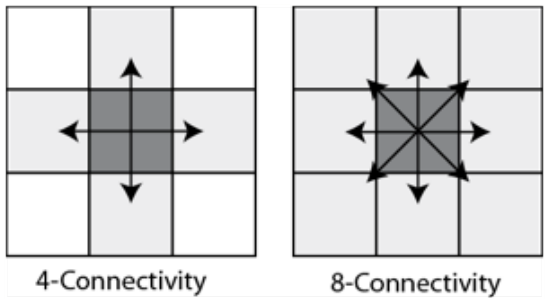

Pixel Connectivity

- A pixel is said to be connected with another if they are spatially

adjacent to each other.

- Two standard ways of defining this adjacency:

- 4-connectivity

- 8-connectivity (like minesweeper!)

Connected Component

A connected component is a set of pixels in which every pixel is connected either

directly or through any connected path of pixels from the set.

Connected Component Labelling

- Connected Component Labelling is the process of finding all the

connected components within a binary (segmented) image.

- Each connected segment is identified as a connected

component.

- Lots of different algorithms to perform connected component

labelling

- Different performance tradeoffs (memory verses time)

The two-pass algorithm

- On the first pass:

- Iterate through each element of the data by column, then by

row (Raster Scanning)

- If the element is not the background

- Get the neighbouring elements of the current element

- If there are no neighbours, uniquely label the current

element and continue

- Otherwise, find the neighbour with the smallest label and

assign it to the current element

- Store the equivalence between neighbouring labels

- On the second pass:

- Iterate through each element of the data by column, then by

row

- If the element is not the background

- Relabel the element with the lowest equivalent label

Lecture 5: Shape description and modelling

Extracting features from shapes represented by connected

components

Borders

- There are 2 types of pixel borders:

inner and outer.

Say you have this pixel shape:

Inner Border

A border made up of only pixels from the shape; the outermost pixels within the

shape.

Outer Border

A border where the outline of pixels outside the shape make up the border.

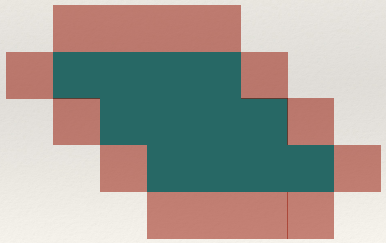

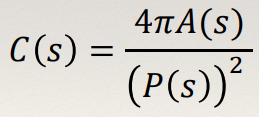

Two ways to describe shape

- Region Description

- Boundary Description

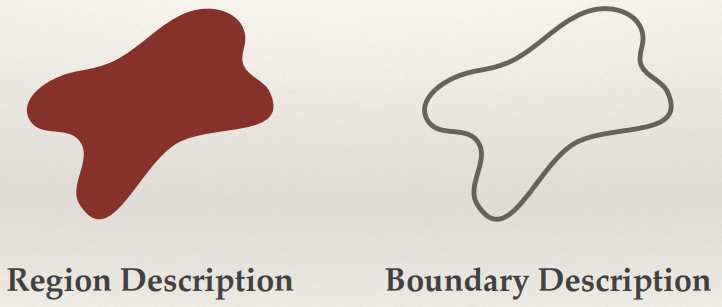

Region Description: Simple Scalar Shape Features

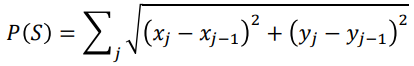

Area and Perimeter

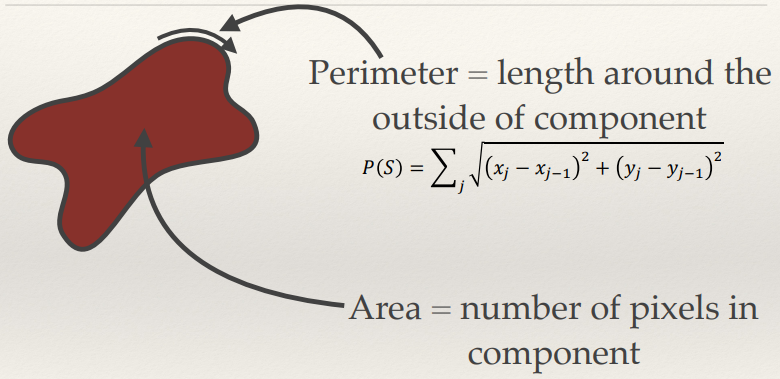

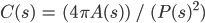

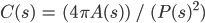

Compactness

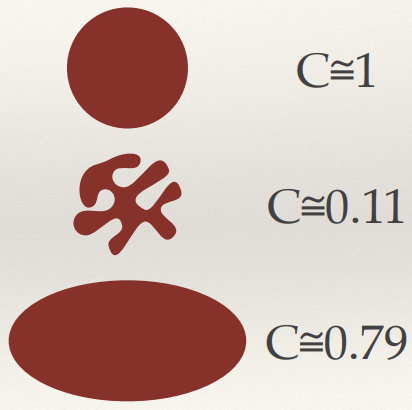

- Compactness measures how tightly packed the pixels in the component

are.

- It’s often computed as the weighted ratio of area to perimeter

squared:

Centre of Mass

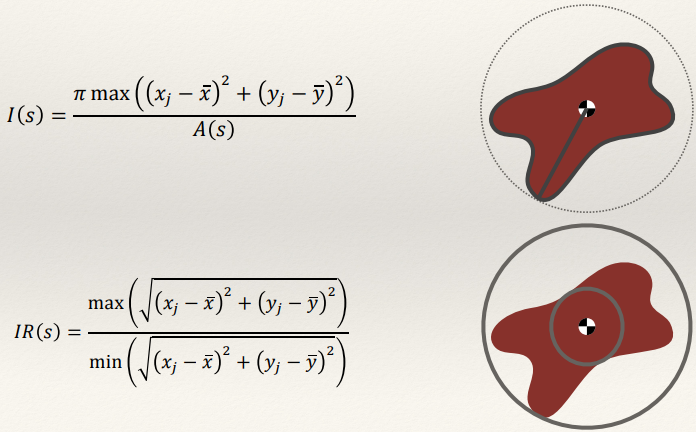

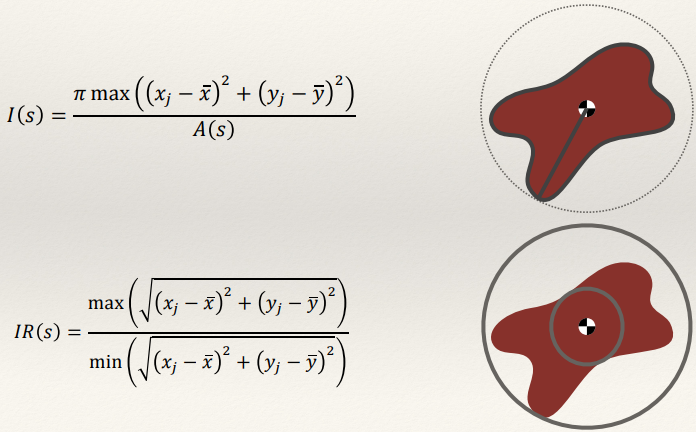

Irregularity / Dispersion

A measure of how “spread-out” the shape is

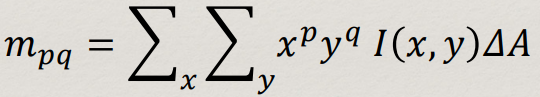

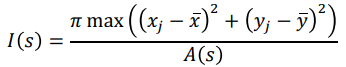

Moments

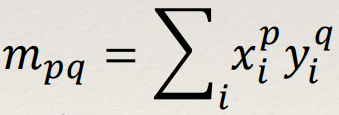

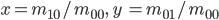

Standard Moments

- Moments describe the distribution of pixels in a shape.

- Moments can be computed for any grey-level image. For the

purposes of describing shape, we’ll just focus on moments of a connected component

- Standard two-dimensional Cartesian moment of an image, with

order p and q and I(s) as the pixel intensity, it is defined as:

- In the case of a connected component, this simplifies to:

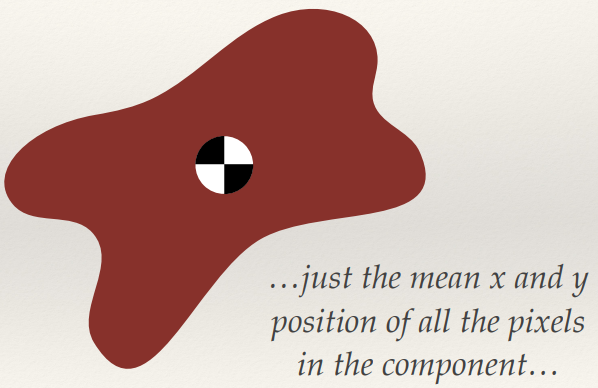

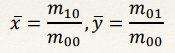

- The zero order moment of a connected component m00 is just the area of the component. The centre of mass is

(centroid):

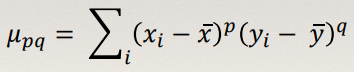

Central Moments

- Standard 2D moments can be used as shape descriptors

- But, they’re not invariant to translation, rotation and

scaling

- Central Moments are computed about the

centroid of the shape, and are thus translation invariant:

- Note: μ01 and

μ10 are always 0, so have no descriptive

power

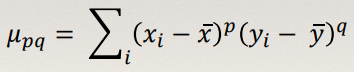

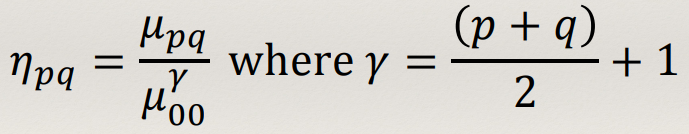

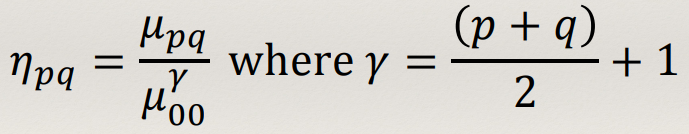

Normalised Central Moments

- Normalised Central Moments are both

scale and translation invariant

Boundary Description

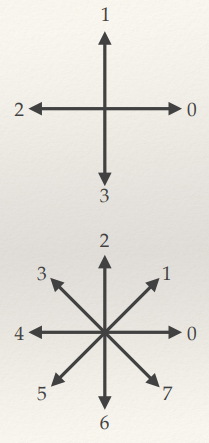

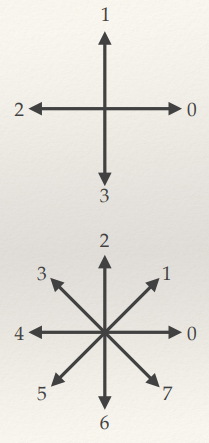

Chain Codes

- Simple way of encoding a boundary.

- Walk around the boundary and encode the direction you take on

each step as a number.

- Some direction examples are shown below left.

- Then cyclically shift the code so it forms the smallest possible

integer value (making it invariant to the starting point)

Chain Code Invariance

- Can be made rotation invariant:

- Encode the differences in direction rather than absolute

values.

- Can be made scale invariant:

- Resample the component to a fixed size

- Doesn’t work well in practice

Chain Code Advantages and Limitations

- Can be used for computing perimeter area, moments, etc.

- Perimeter for and 8-connected chain code is N(even numbers in

code) + √2N(odd numbers in code)

- Practically speaking, not so good for shape matching

- Problems with noise, resampling effects, etc

- Difficult to find good similarity/distance measures

Fourier Descriptors

- The Fourier transform can be used to encode shape information by

decomposing the boundary into a (small) set of frequency components.

- There are two main steps to consider:

- Defining a representation of a curve (the boundary)

- Expanding the representation using Fourier theory

- By choosing these steps carefully it is possible to create

rotation, translation and scale invariant boundary descriptions that can be used for recognition,

etc.

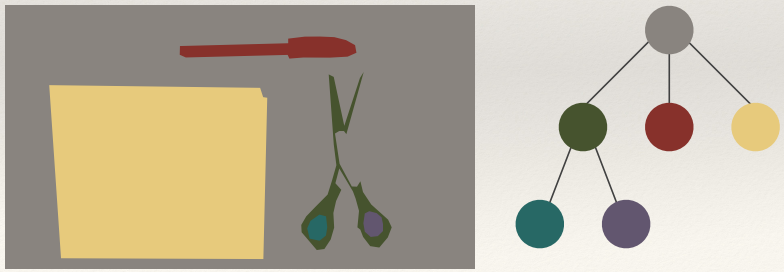

Region Adjacency Graphs

- Build a graph from a set of connected components

- Each node corresponds to a component

- Nodes connected if they share a border

- Can easily detect patterns in the graph

- E.g. “a node with one child with four

children”

- Invariant to non-linear distortions, but not to occlusion.

Theres some more stuff in the slides which isn’t hand with bow-ed, go

have a look

Slides 40-47: http://comp3204.ecs.soton.ac.uk/lectures/pdf/L5-shapedescription.pdf

Active Shape Models and Constrained Local Models

- ASMs/CLMs extend a PDM (Point Distribution

Model) by also learning local appearance around each point

- Typically just as an image template.

- Using a constrained optimisation algorithm, the shape can be

optimally fitted to an image

- Plausible shape

- Good template matching

Lecture 6: Local interest points

What makes a good interest point?

- Invariance to brightness change (local changes as well as global

ones)

- Sufficient texture variation in the local neighbourhood

- Invariance to changes between the angle / position of the scene to

the camera

How to find interest points

- There are lots of different types of interest point types to choose

from

- We’ll focus on two specific types and look in detail at

common detection algorithms:

- Corner detection - Harris and

stephens

- Blob Detection - Difference-of-Gaussian

Extrema

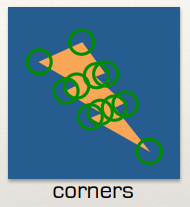

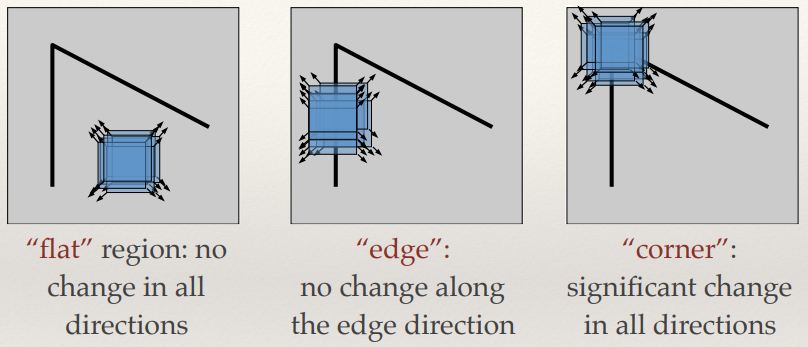

The Harris and Stephens corner detector

Basic Idea

- Search for corners by looking through a small window

- Shifting that window by a small amount in any direction

should give a large change in

intensity

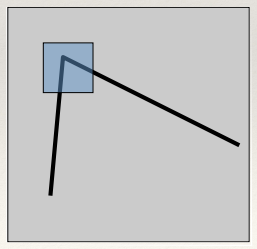

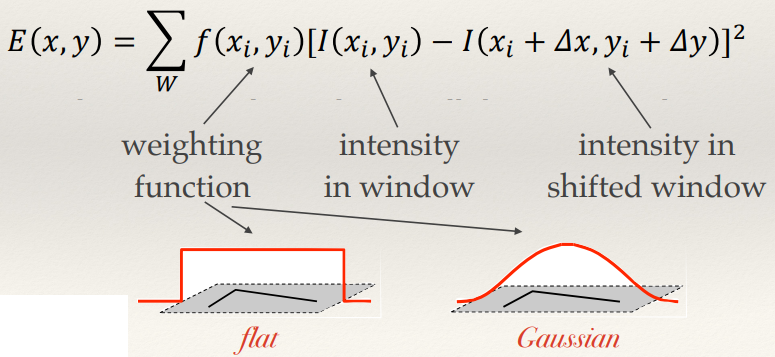

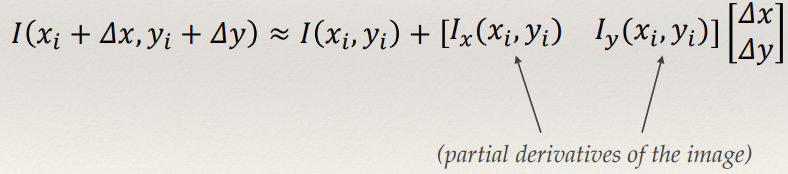

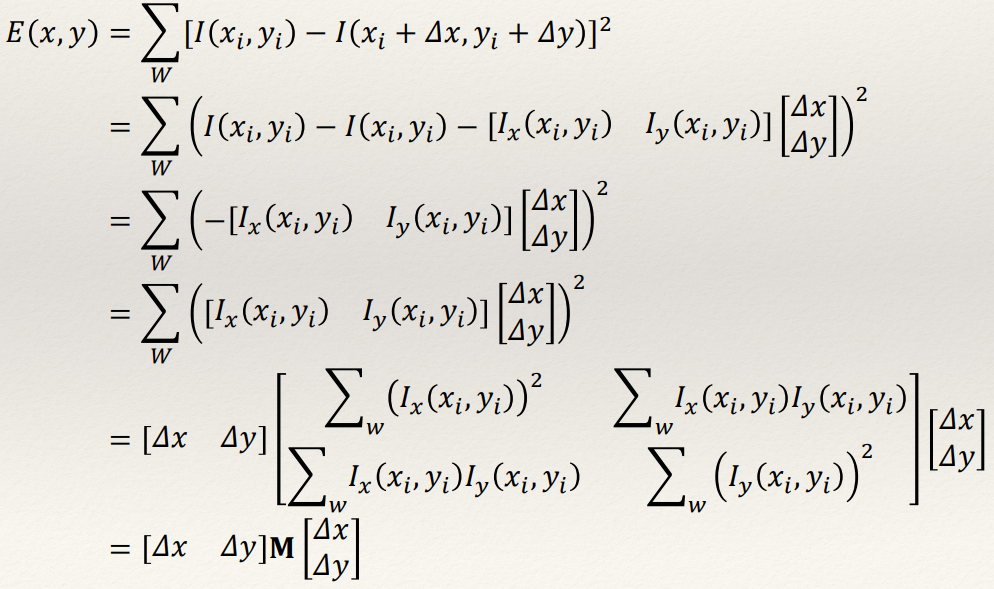

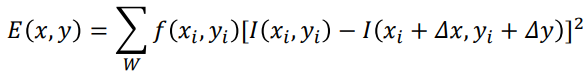

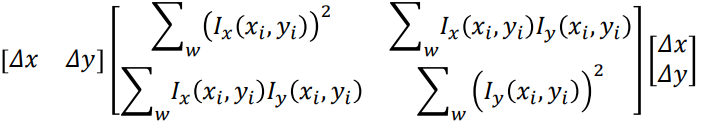

Harris & Stephens: Mathematics

Weighted average change in intensity between a window and a shifted version [by

(Δx,Δy)] of that window:

- The Taylor expansion allows us to approximate the shifted

intensity.

- Taking the first order terms we get this:

- Substituting and simplifying gives:

- Bruh.

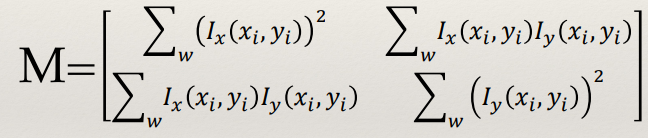

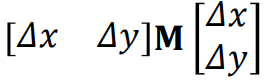

Structure Tensor

- The square symmetric matrix

M is called the Structure

Tensor or the Second Moment matrix

- It concisely encodes how the local shape intensity function of the

window changes with small shifts

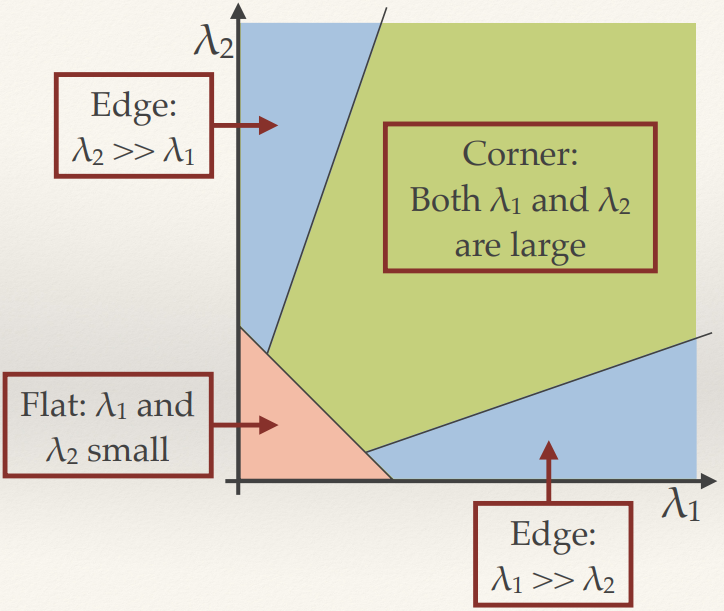

Eigenvalues of the Structure Tensor

- Think back to covariance matrices…

- As with the 2D covariance matrix, the structure tensor describes and

ellipse: xTMx=c (this is a quadratic form)

- The eigenvalues and vectors tell us the rates of change and

their respective directions

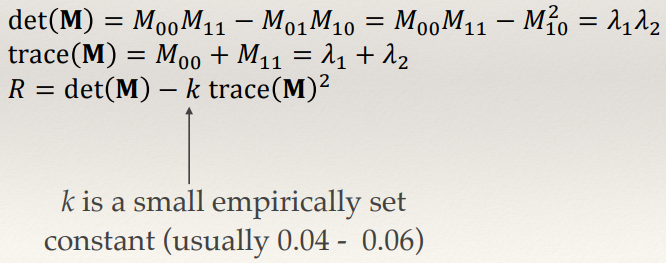

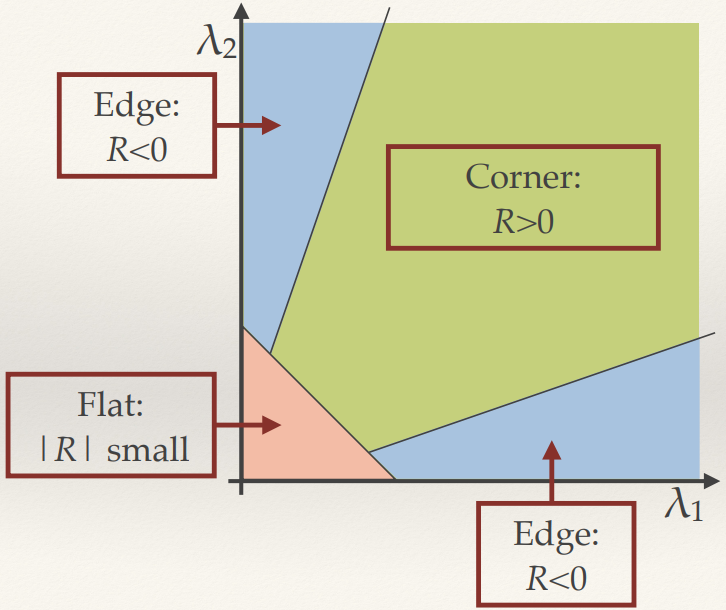

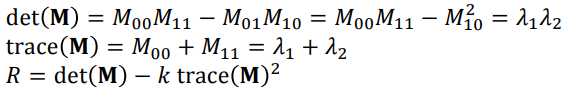

Harris & Stephens Response Function

- Rather than compute the eigenvalues directly, Harris and Stephens defined a

corner response function in terms of the determinant and trace of M:

- Smart.

Harris & Stephens Detector

- Take all points with the response value above a threshold

- Keep only the points that are local maxima (i.e. where the

current response is bigger than the 8 neighbouring pixels)

Scale in Computer Vision

The problem of scale

- As an object moves closer to the camera it gets larger with more

detail… as it moves further away it gets smaller and loses detail…

- If you’re using a technique that uses a fixed size processing

window (e.g. Harris corners, or indeed anything that involves a fixed kernel) then this is a bit of a

problem!

Scale space theory

- Scale space theory is a formal framework for handling the scale

problem.

- Represents the image by a series of increasingly smoothed / blurred images

parameterised by a scale parameter t.

- t represents the amount of

smoothing.

- Key notion: Image structures smaller than

√t have been smoothed away at scale t.

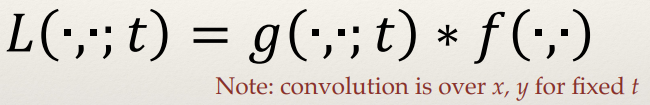

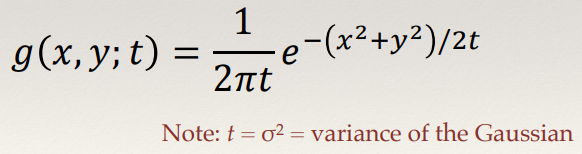

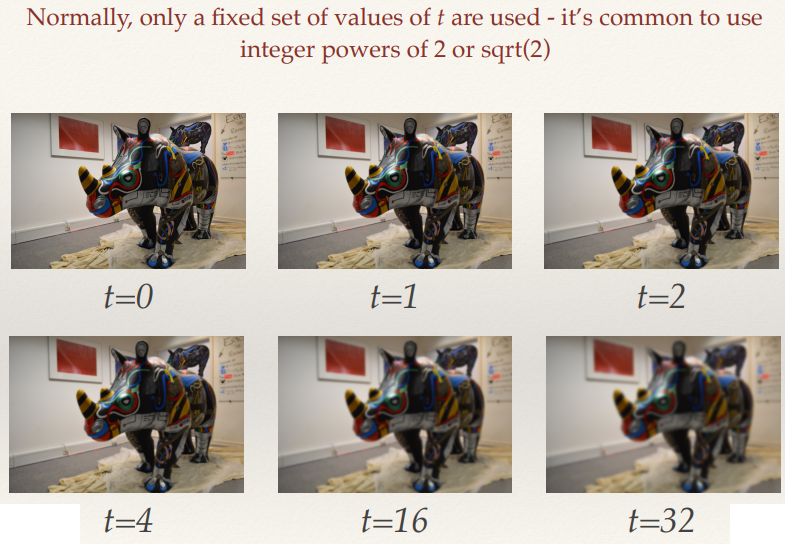

The Gaussian Scale Space

- Many possible types of scale space are possible (depending on the

smoothing function), but only the Gaussian function has the desired properties for image

representation.

- These provable properties are called the “scale space axioms”.

Formally, Gaussian scale space is defined as:

Where t ≥ 0 and,

Normally, only a fixed set of values of t are used - it’s common to use integer powers of 2 or √2

Nyquist-Shannon Sampling theorem

If a function x(t) contains no frequencies higher than B hertz, it is completely

determined by giving its ordinates at a series of points spaced 1/(2B) seconds apart.

...so, if you filter the signal with a low-pass filter that

halves the frequency content, you can also half the sampling rate without loss of information…

Gaussian Pyramid

- Every time you double t in

scale space, you can half the image size without loss off information!

- Leads to a much more efficient representation

- Faster processing

- Less memory

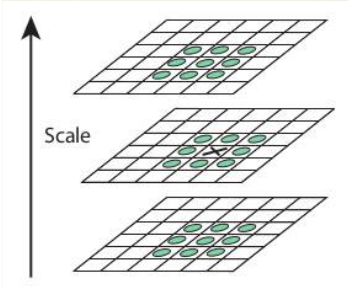

Multi-scale Harris & Stephens

- Extending the Harris and Stephens detector to work across scales is

easy…

- We define a Gaussian scale space with a fixed set of scales and

compute the corner response function at every pixel of each scale and keep only those with a response

above a certain threshold.

Blob Detection Finally

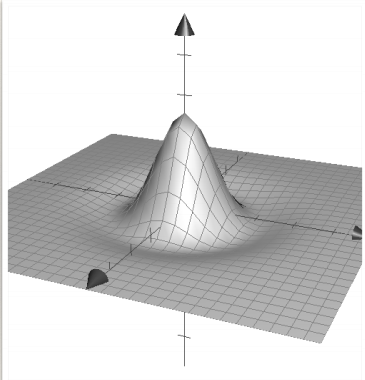

Laplacian of Gaussian

- Recall that the LoG is the second derivative of a Gaussian

- Used in the Marr-Hildreth edge detector

- Zero crossing of LoG convolution

- By finding local minima or maxima, you get a blob detector!

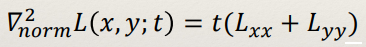

Scale space LoG

- Normalised scale space LoG defined as:

- By finding extrema of this function in scale space, you can find blobs at their representative scale (~√2t )

- Just need to look at the neighbouring pixels!

Very useful property: if a blob is detected at

(x0, y0

; t0) in an image, then

under a scaling of that image by a factor s, the same blob would be detected at (sx0, sy0 ; s2t0) in the scaled image.

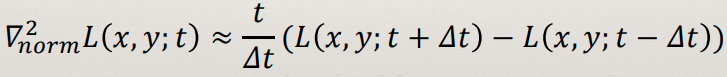

Scale space DoG

- In practice it’s computationally expensive to build a LoG

scale space.

- But, the following approximation can be made:

- This is called a Difference-of-Gaussians (DoG)

- Implies that the LoG scale space can be built from subtracting

adjacent scales of a Gaussian scale space

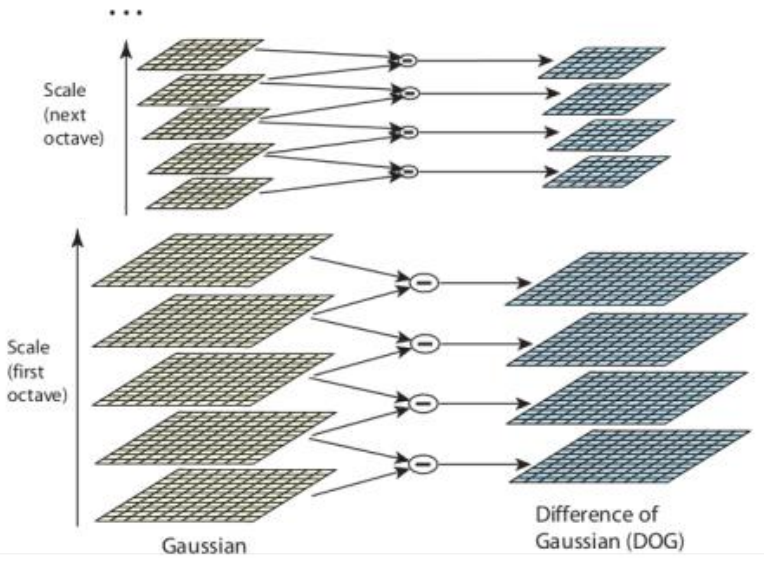

DoG Pyramid

- Of course, for efficiency you can also build a DoG pyramid

- An oversampled pyramid

as there are multiple images between a doubling of scale.

- Images between a doubling of scale are an octave.

Lecture 7: Local features and matching

Local features and matching basics

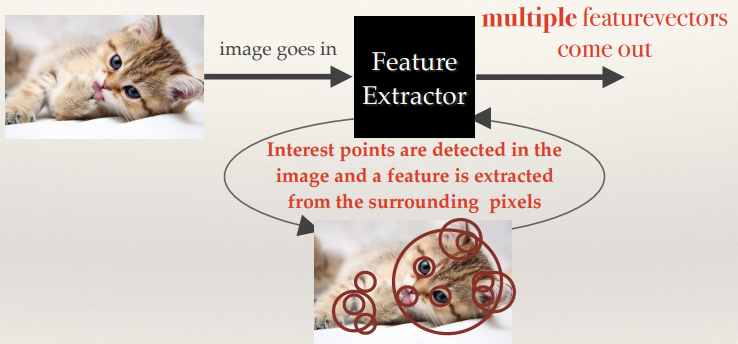

Local Features

Multiple features are extracted; one per local interest point

Why extract local features?

- Feature points are used for:

- Image alignment

- Camera pose estimate & Camera calibration

- 3D reconstruction

- Motion tracking

- Object recognition

- Indexing and database retrieval

- Robot navigation

- …

Example: Building a panorama

- You need to match and align the images

- Detect feature points in both images

- Find corresponding pairs

- Use the pairs to align the images

Problem 1:

- Detect the same points independently

in both images

- We need a repeatable detector

Problem 2:

- For each point correctly recognise the corresponding one

- We need an invariant, robust

and distinctive descriptor

Two distinct types of matching problem

- In stereo vision (for 3D reconstruction) there are two important

concepts related to matching:

- Narrow-baseline stereo

- Wide-baseline stereo

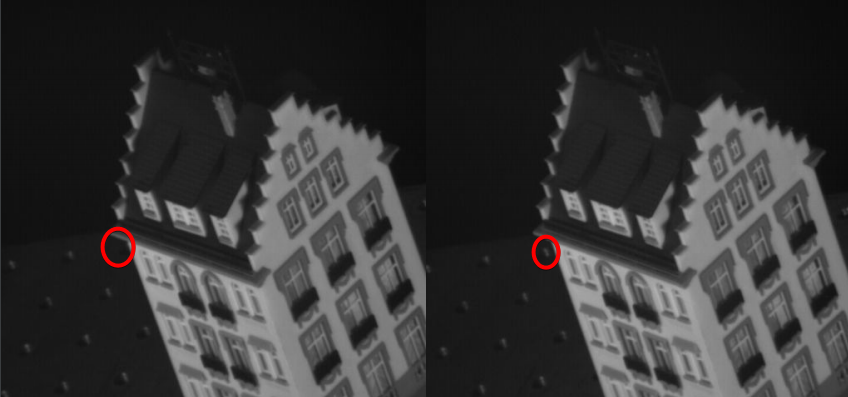

Narrow-baseline stereo

- This is where the two images are very similar - the local features

have only moved by a few pixels.

- Typically the images are from similar points in time

(Notice how the red-circled background object only appears in the second image; so

these two images have a slight difference to them)

Wide-baseline stereo

- This is where the difference in views is much bigger.

(I don’t think I need to circle the whole image lol)

Two distinct types of matching problem

- These concepts extend to general matching:

- The techniques for narrow-baseline stereo are applicable to

tracking where the object doesn’t move too much between frames

- The techniques for wide-baseline stereo are applicable to

generic matching tasks (object recognition, panoramas, etc.).

Robust local description

Descriptor Requirements

- Robustness to rotation and lighting is not so important.

- Descriptiveness can be reduced as the search is over a smaller

area.

- Need to be robust to intensity change, invariant to

rotation.

- Need to be highly descriptive to avoid mismatches (but not so

distinctive that you can’t find any matches!)

- Robust to small localisation errors of the interest point

- The descriptor should not change too much if we move it by a few

pixels, but to change more rapidly once we move further away.

Matching by correlation (template matching)

(Narrow baseline) template matching

- Interest points in two images with a slight change in

position

- Local search windows, based on the interest point in the first

image

- The template can then be matched against target interest points in

the second image

Problems with wider baselines

- Not robust to rotation

- Sensitive to localisation of interest point

- (although not such a problem with a small search window)

- With wider baselines you can’t assume a search area

- Need to consider all the interest points in the second

image

- More likely to mismatch :(

Local Intensity Histograms

Use local histograms instead of pixel patches

- Describe the region around each interest point with a pixel

histogram

- Match each interest point in the first image to the most similar

point in the second image (i.e. in terms of Euclidean distance [or other measure] between the

histograms)

Local histograms

- Not necessarily very distinctive

- Many interest points likely to have similar distribution of

grey-values

- Not rotation invariant if the sampling window is square or

rectangular

- Can be overcome using a circular window

- Not invariant to illumination changes

- Sensitive to interest point localisation

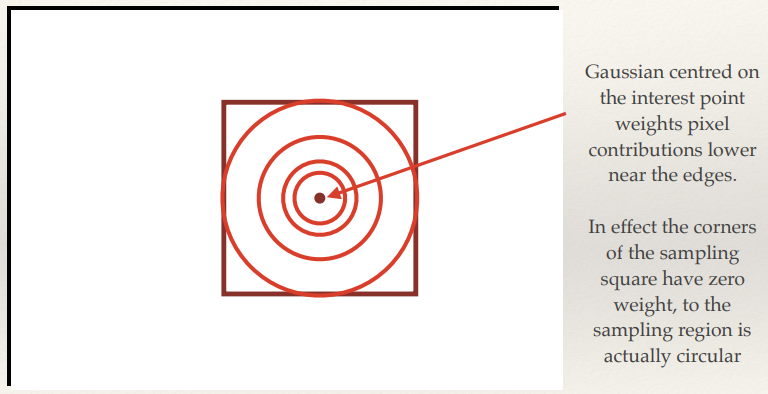

Overcoming localisation sensitivity

- Want to allow the window around the interest

point to move a few pixels in any direction without changing the

descriptor

- Apply a weighting so that pixels near the edge of the sampling

patch have less of an effect, and those near the interest point have a greater effect

- Common to use Gaussian weighting centred on the interest point for this.

Overcoming lack of illumination invariance

- Illumination invariance potentially achievable by normalising or

equalising the pixel patches before constructing the histogram

- ...but there is another alternative!................. I’m

not going to tell you……. Joking…….

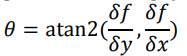

Local Gradient Histograms

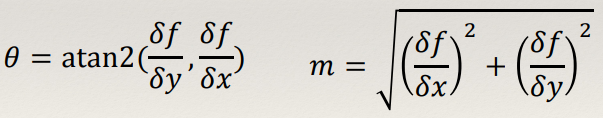

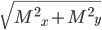

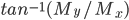

Gradient Magnitudes and Directions

- From the partial derivatives of an image (e.g. from applying

convolution with Sobel), it is easy to compute the gradient orientations / directions and

magnitudes

Gradient Histograms

- Instead of building histograms of the raw pixel values we could

instead build histograms that encode the gradient magnitude and direction for each pixel in the sampling

patch.

- Gradient magnitudes (and directions) are invariant

to brightness change!

- The gradient magnitude and direction histogram is also more distinctive.

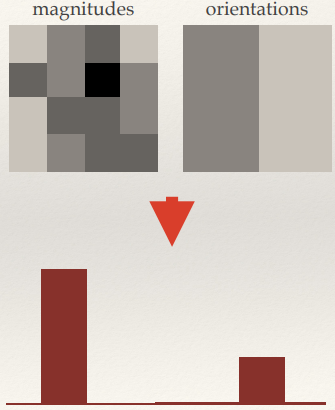

Building gradient histograms

- Quantise the directions (0°-360°) into a number of

bins

- For each pixel in the sampling patch, accumulate the gradient

magnitude of that pixel in the respective orientation bin

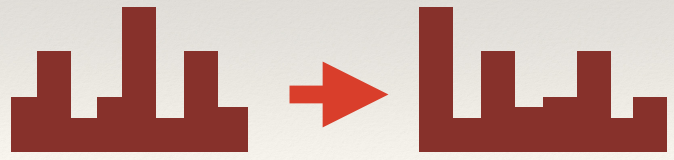

Rotation Invariance

- Gradient histograms are not naturally rotation invariant

- But, can be made invariant by finding the dominant orientation

and cyclically shifting the histogram so the dominant orientation is in the first bin.

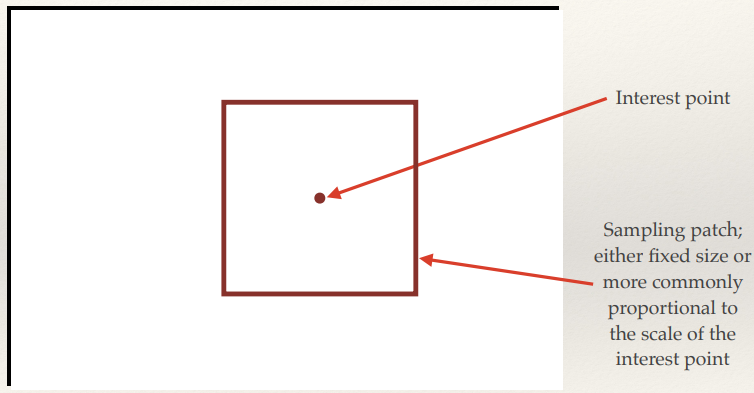

The SIFT feature

Adding spatial awareness

- The SIFT (Scale Invariant Feature Transformer) feature is widely

used

- Builds on the idea of a local gradient histogram by incorporating

spatial binning, which in essence creates multiple gradient

histograms about the interest point and appends them all together into a longer feature.

- Standard SIFT geometry appends a spatial 4x4 grid of histograms

with 8 orientations

- Leading to a 128-dimensional feature which is highly discriminative and robust!

SIFT Construction: sampling

SIFT Construction: weighting

SIFT Construction: binning

Matching SIFT features

Euclidean Matching

- SImplest way to match SIFT features is to take each feature in turn

from the first image and find the most similar in the second image

- Threshold can be used to reject poor matches

- Unfortunately, doesn't work that well and results in lots of

mismatches.

Improving matching performance

- A better solution is to take each feature from the first image, and

find the two closest features in the second image.

- Only form a match if the ratio of distances between the closest

and second closest matches is less than a threshold.

- Typically set at 0.8, meaning that the distance to the closest

feature must be at least 80% of the second closest.

- This leads to a much more robust matching strategy.

Lecture 8: Consistent matching

Feature distinctiveness

- Even though the most advanced local features can be prone to being

mismatched.

- There is always a tradeoff in feature distinctiveness.

- If it’s too distinctive it will not match subtle

variations due to noise of imaging conditions.

- If it’s not distinctive enough it will match

everything.

Constrained matching

- Assume we are given a number of correspondences between the interest

points in a pair of images

- Is it possible to estimate which of those correspondences

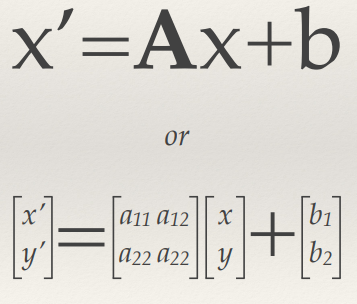

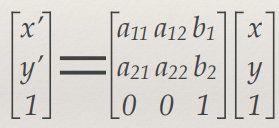

are inliers (correct) or outliers (incorrect/mismatches)?